x <- read.csv("minimum wage.csv", as.is = TRUE)Learning Microeconometrics with R

Introduction

The Intern

You have been hired as a summer intern for a right-of-center think tank in Washington DC. It is going to be a great summer! You will play softball on the Mall. Go to Nats games. Hang out with friends interning on the Hill. And melt onto the sidewalk when you commute to work.

First Day

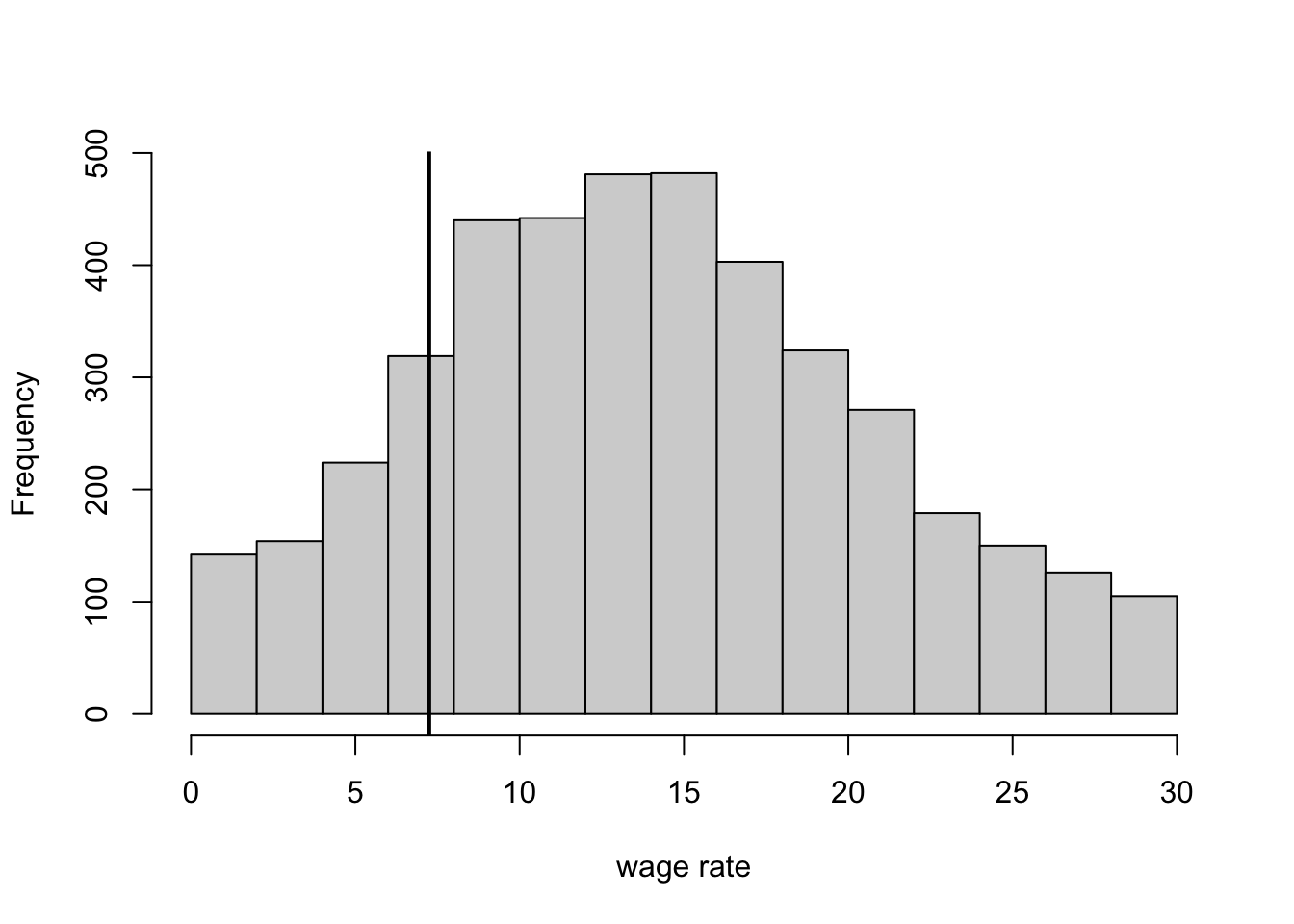

The think tank is arguing against a federal increase in the minimum wage. You have been asked to predict what will happen if the minimum wage increases from $7.25 to $15.00.

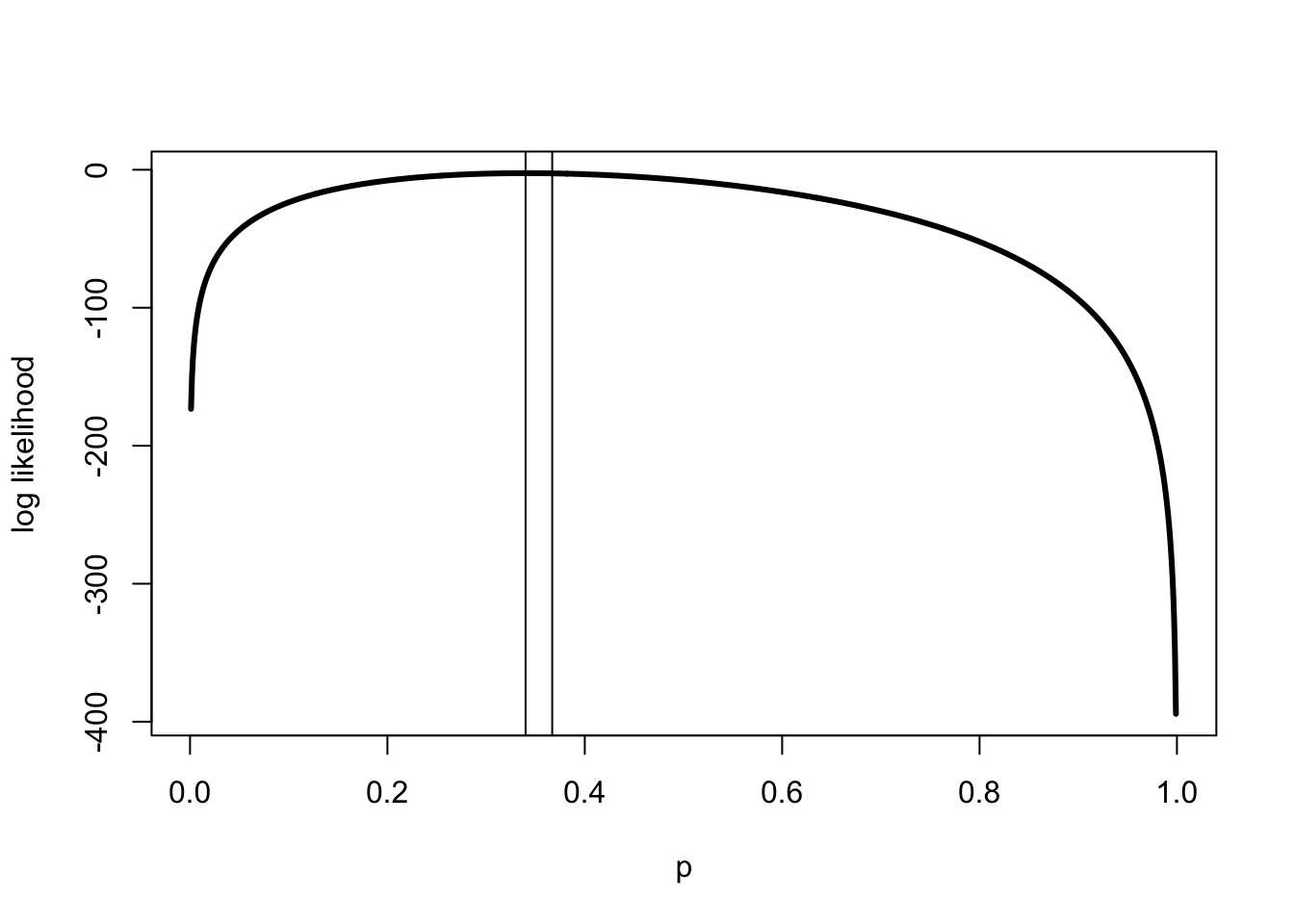

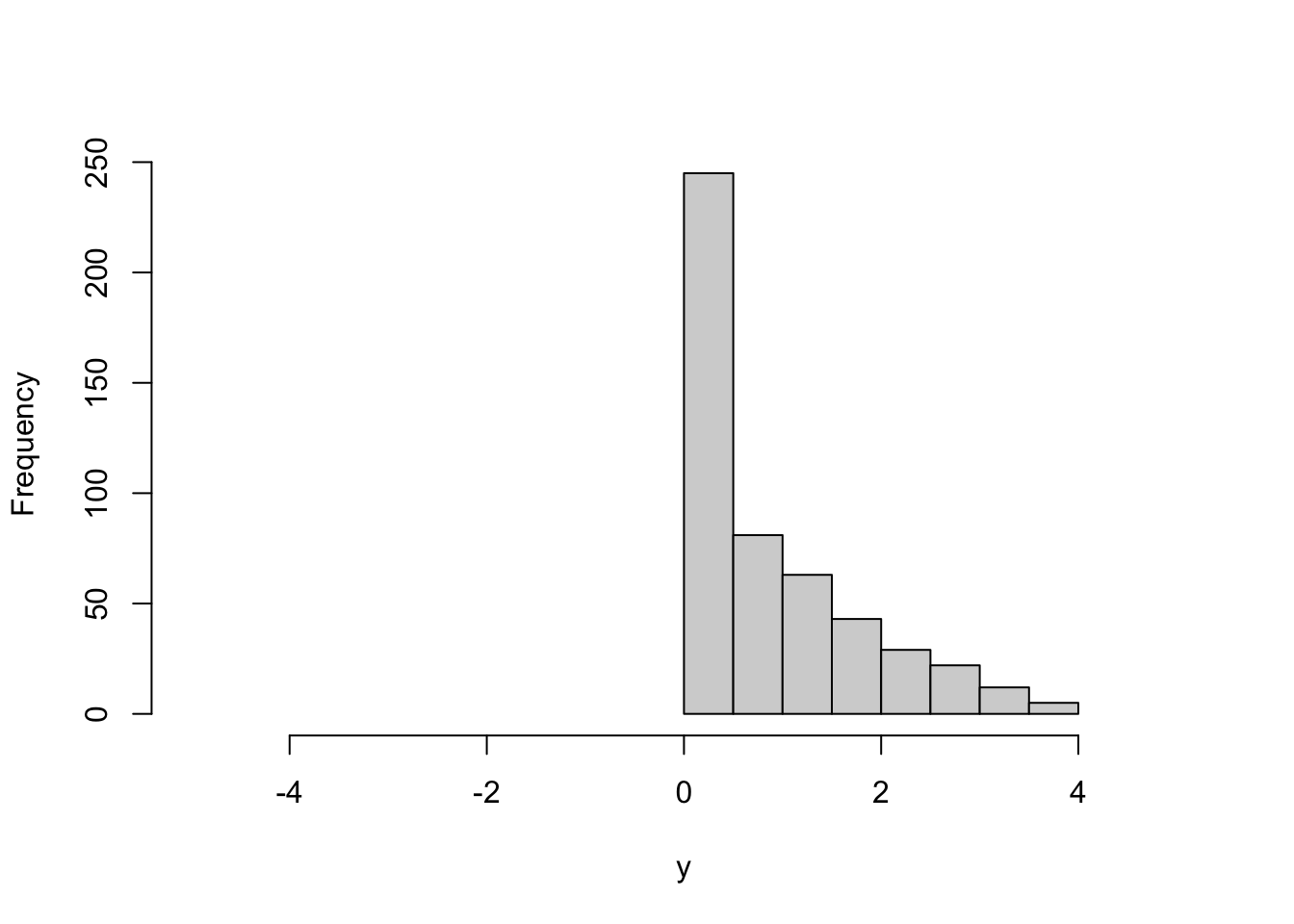

You have a vague memory of a discussion of the minimum wage in your Econ 101 class. To refresh your memory you google “minimum wage Khan Academy.” You listen to Sol explain that the equilibrium wage is $6 per hour and workers work 22 million hours per month. Sol shows that a minimum wage of $7 leads to 2 million hours of unemployment and $1 million of output per month lost in the economy. This seems straightforward.

But what actually happens in the real world? Your supervisor suggests looking up minimum wages for each state and state level unemployment levels from the Bureau of Labor Statistics (https://bls.gov). She says that different states have changed their minimum wage over time and a number of states have minimum wages that are above $7.25, although none as high as $15.

You download the data on each state’s current minimum wage and unemployment rate. You put everything in a spreadsheet. A fellow intern shows you how to save it as a csv file. He says this will allow importing the data into R, which is the statistical language of choice at your think tank.

You then download R and RStudio (the IDE you are told, whatever that is). Your colleague shows you how to get set up. He shows you how to open up RStudio and then create a new script file. You call the file minwage.R and save it to the minimum wage folder where you have the data set. He then tells you to go to Session > Set Working Directory > To Source File Location. “Trust me. It makes coding easier,” he says.

Now you are ready to write your first line of code.

Your colleague explains that read.csv will import the data set that you created. The data set is simply called x. He explains that you must use the assign <- arrow. You ask why. He shrugs, “that was what I was told when I started.” Also, he says you should probably write as.is = TRUE because R has a habit of changing numbers to characters and other, even stranger, things.

You click Run. It worked! The letter x appears in the Global Environment. You click on it. A tab with your data appears.

You want to calculate the relationship between the minimum wage and unemployment. You want to run a regression.1 You ask your cubicle neighbor how to do that. She tells you to write the following.

lm1 <- lm(x$Unemployment.Rate ~ x$Minimum.Wage)You ask about the whole thing with <-. Your neighbor says that you must do it that way but refuses to explain why.

You write out the code and hit Run. Nothing happens. Actually, lm1 appears in the box in the upper right of the screen. Apparently it is a List of 12. You were hoping to see a table with regression results and t-statistics. But nothing. You ask for help from your neighbor. She rolls her eyes. “No. You just created an object called lm1. To look at it, use summary.”

summary(lm1)[4]$coefficients

Estimate Std. Error t value Pr(>|t|)

(Intercept) 3.49960275 0.32494078 10.7699709 1.62017e-14

x$Minimum.Wage 0.01743224 0.03733321 0.4669365 6.42615e-01Cool. You got what you were looking for. The minimum wage increases unemployment! It increases it by 0.01743. You wonder what that means. Another intern comes by, looks at what you did, and then types the following code on your computer. He leaves with a “you’re welcome.” Boy, is that guy annoying.

a1 <- (15-7.25)*lm1$coefficients[2]

a1/mean(x$Unemployment.Rate)x$Minimum.Wage

0.03710335 Your neighbor explains it all. You want to know what happens to unemployment when the minimum wage increase from $7.25 to $15. The second coefficient states that amount. Then you can put it in percentage terms relative to the current unemployment rate.

You go back to your supervisor. You say you found that a minimum wage increase to $15 would increase the unemployment rate by four percent. “The unemployment rate would go to 8%!” she exclaims. No, no, no. You clarify that it would increase by 4 percent not 4 percentage points. From say 4% to 4.15%. “Oh. Still that is a big increase.” Then she says, “But are you sure? How can you tell what will happen in states that haven’t changed their minimum wage?” You respond accurately. “I don’t know.” As you ponder this, you notice everyone is getting dressed for softball.

Second Day

On your way into the building you run into your supervisor. You explain how you were able to beat CBO’s Dynamic Scorers by just one run. She congratulates you and says, “You should plot the relationship between the minimum wage and unemployment.”

After some coffee and some googling you find code to do what your supervisor suggested.

plot(x$Minimum.Wage, x$Unemployment.Rate)

The annoying guy from yesterday breezes by and says. “Oh no. Looks like it is censored. You will need to use a Tobit.” At this, someone a few cubicles away pops up like a meerkat. He says, “No. Don’t use a Tobit, use a Heckit. The data is probably selected.” Then he is gone.

What the heck is a Heckit? What the heck is a Tobit? What the heck is a meerkat?

The Book

The book is designed to help our fictitious intern hero survive the summer in DC.

What Does it Cover?

The book is based on a course I have taught at Johns Hopkins University as part of their Masters of Applied Economics. The book and the course aim to provide an introduction to applied microeconometrics. The goal is for the reader to have competence in using standard tools of microeconometrics including ordinary least squares (OLS), instrumental variables (IV), probits and logits. Not only should you be able to understand how these models work, but more importantly, you should be able to understand when they don’t.

In addition to these standard models, the book and the course introduce important models commonly used in microeconometrics. These include the Tobit and the Heckman selection model (Heckit). The book introduces approaches that have become common in my subfield of empirical industrial organization. These approaches center around the analysis of games. That is, situations where a small number of individuals or firms interact strategically.

Lastly, the book introduces some new techniques that have been developed to analyze panel data models and other situations where an object of interest is measured repeatedly. It discusses difference in difference as well as stochastic controls. It touches on how machine learning techniques can be applied in microeconometrics. The book also introduces to a broader economic audience ideas of Harvard statistician, Herbert Robbins, called empirical Bayesian analysis.

What is the Approach?

The book teaches microeconometrics through R. It is not primarily aimed at teaching R. Rather, it is primarily aimed at teaching microeconometrics. This idea of using computer programming as a tool of instruction goes back to at least Seymour Papert and MIT’s AI lab in the 1970s.2 South African-born Papert helped develop a programming language called Logo. The goal of Logo was to teach mathematics by programming how a turtle moves around the screen. You may have used one of the offspring of Logo, such as Scratch or Lego Mindstorms.

I learned math through Logo. When I was a pre-teen, Logo became available on the personal computer, the Apple II. My parents taught me to program in Logo and I learned a number of geometric concepts such as Euclidean distance and the Pythagorean theorem by programming up a model of how a moth circled a light source.

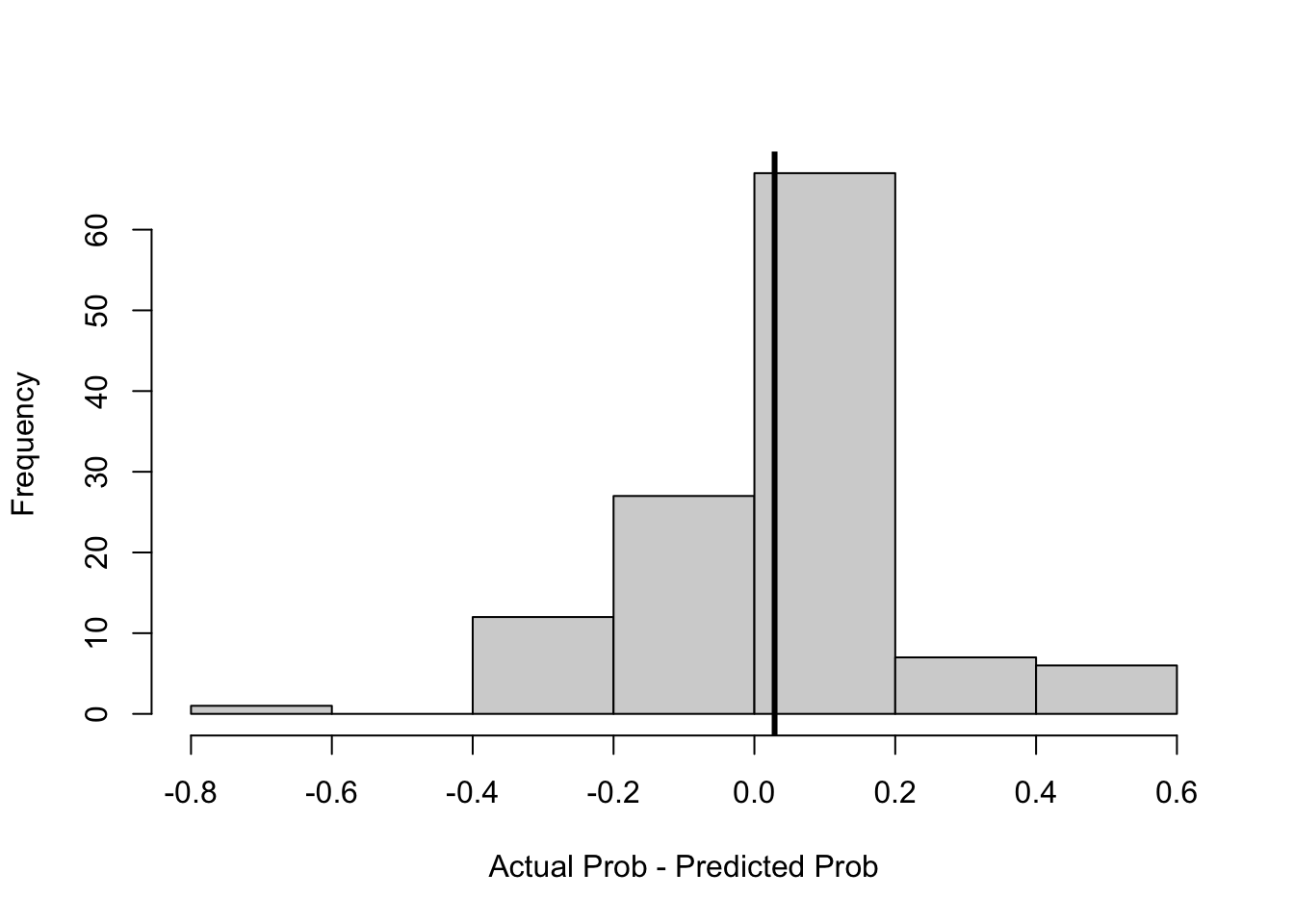

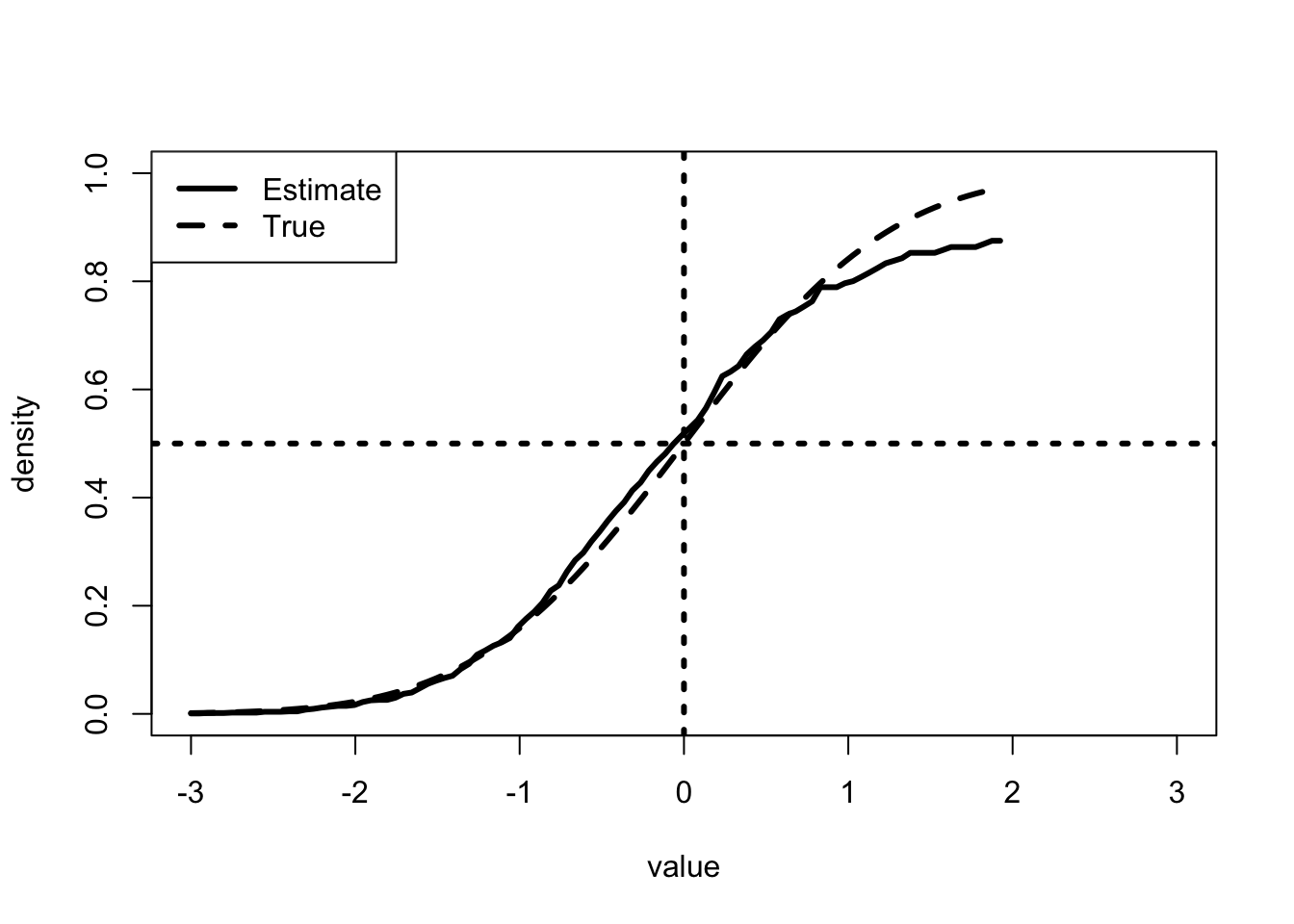

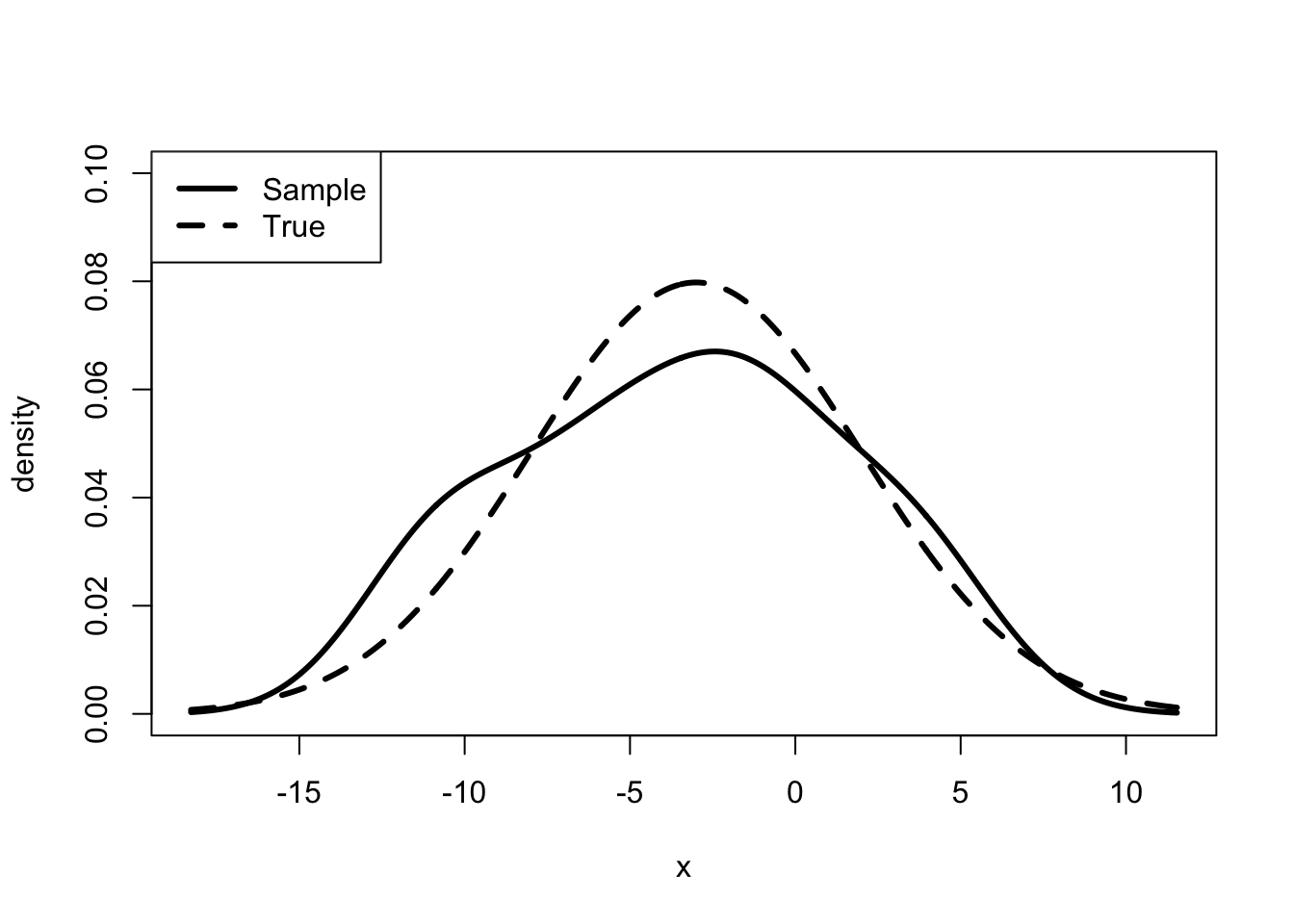

The book uses Papert’s ideas to teach microeconometrics. You will learn the math of the estimator and then how to program up that estimator.3 The book makes particular use of the computer’s ability to simulate data. This allows us to compare our estimates to what we know to be the true values. In some cases these simulations illustrate that our estimator is correct; in others, the simulations help us to understand why our estimator is incorrect. Testing models on simulated data has the additional benefit of allowing you to check your programming.

The book is written in RStudio using Sweave. Sweave allows to be integrated into R. is a free type-setting language that is designed for writing math. Almost all the code that is used in the book is actually presented in the book. On occasion it is more practical to create a data set outside the book. In those cases, the data and the code that created the data are available here https://sites.google.com/view/microeconometricswithr/table-of-contents. In a couple of other cases, the preferred code does not produce nice output for the book. I have highlighted those cases in the text. I also generally hide repetitive code. For the most part, the coding in the book is in base R . The book makes little use of packages. This shows you the underlying code and illustrates the econometrics. That said, there are a few packages that I really like, including stargazer and xtable which both make nice tables in .

What are POs, DAGs, and Do Operators?

POs, DAGs and Do Operators sound like school-yard put-downs, but they form the core of the book’s approach to econometrics. This approach is heavily influenced by Northwestern econometrician, Charles Manski. I was lucky enough to have Chuck teach me econometrics in my first year of graduate school. It was a mind-altering experience. I had taken a number of econometrics classes as an undergraduate. I thought much of it was bunk. Manski said much of what you learned in standard econometrics classes was bunk. Manski gave meat to the bones of my queasiness with econometrics.

The book focuses on the question of identification. Does the algorithm estimate the parameter we want to know? Other books spend an inordinate amount of time on the accuracy of the parameter estimate or the best procedure for calculating the estimate. This book steps back and ask whether the procedure works at all. Can the data even answer the question? This seems to be fundamental to econometrics, yet is given short shrift in many presentations.

The book focuses on identifying the causal effect. What happens to the outcome of interest when the policy changes? What happens if college becomes free? What happens if prices are increased? What happens if the federal minimum wage is increased?

To answer these causal questions, the book uses directed acyclic graphs (DAG) and do operators. The book, particularly the early chapters, relies on ideas of Israeli and UCLA computer scientist, Judea Pearl. DAGs help us to understand whether the parameter of interest can be identified from the data we have available. These diagrams can be very useful models of the data generating process. Hopefully, it will be clear that DAGs are models, and as such, they highlight some important issues while suppressing others.

Pearl’s do operator helps illustrate the old statistical chestnut, “correlation is not causality.” Observing the unemployment rate for two different minimum wage laws in two different states is quite different from changing the minimum wage law for one state. In the first instance we observe a statistical quantity, the unemployment rate conditional on the minimum wage law. In the second case we are making a prediction, what will happen to the unemployment rate if the law is changed?

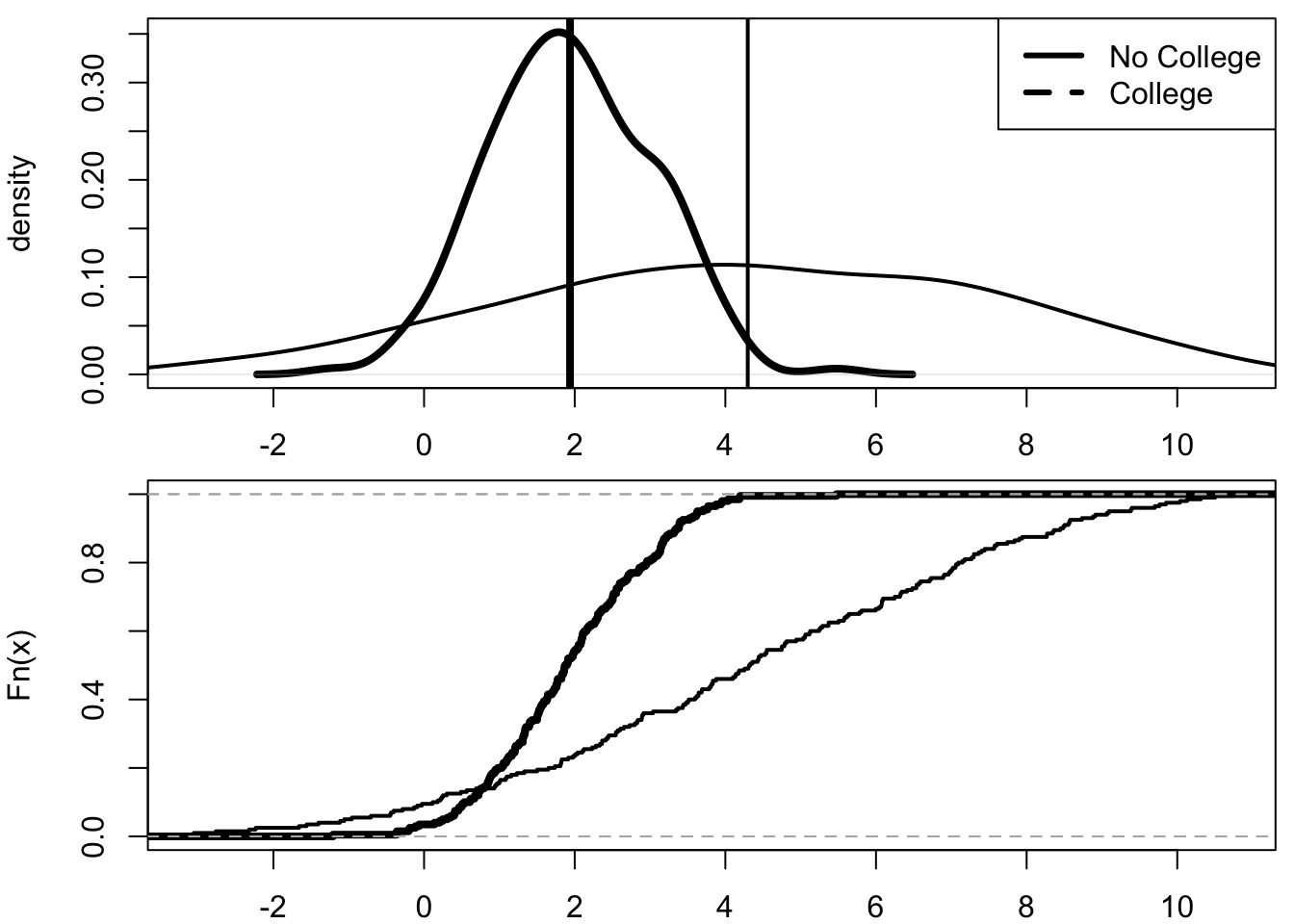

In some cases it is useful to illustrate these issues using the potential outcome (PO) model of former Harvard statistician, Donald Rubin. This model highlights the fundamental identification problem of statistics. We can never observe the difference in state unemployment rates for two different minimum wage laws. Sure, we can observe unemployment rates for two different states with different minimum wage laws. We can even observe the difference in unemployment rates for the same state before and after a change in the minimum wage law. However, we cannot observe the unemployment rate for the same state at the same time with two different minimum wage laws.

In addition, the PO model illustrates that causal effect is not single valued. A policy that encourages more people to attend college may allow many people to earn higher incomes, but it may not help all people. It is even possible that some people are made worse off by the policy. There is a distribution of causal effects.

What About the Real World?

The course I have taught at Hopkins is for a Masters of Applied Economics. I take the applied part of this seriously. The course and this book aim to show how to do microeconometrics. I have spent my career using data to answer actual policy questions. Did a realtor group’s policies lead to higher prices for housing transactions?4 Did Google’s changes to the search results page harm competitors or help consumers?

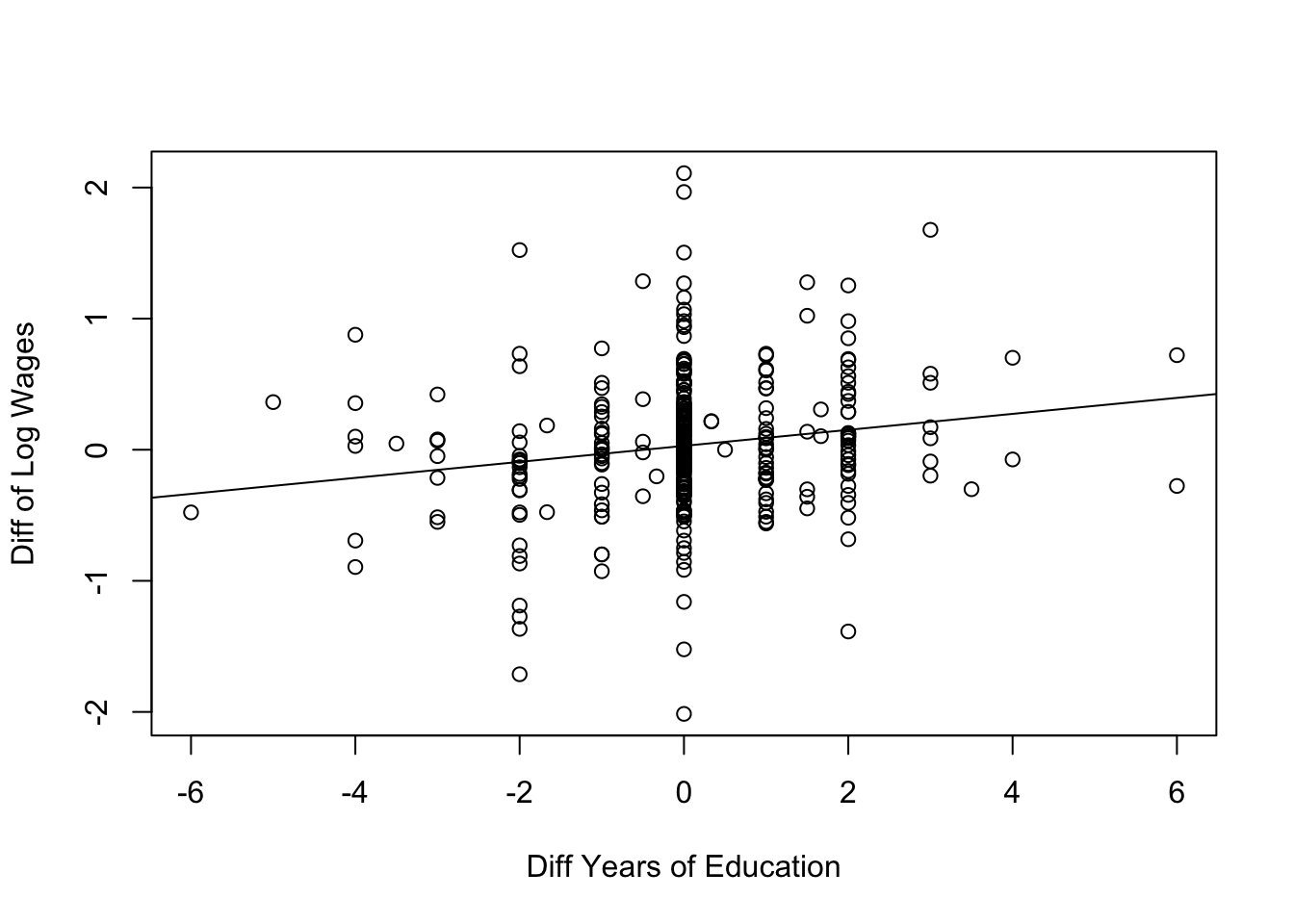

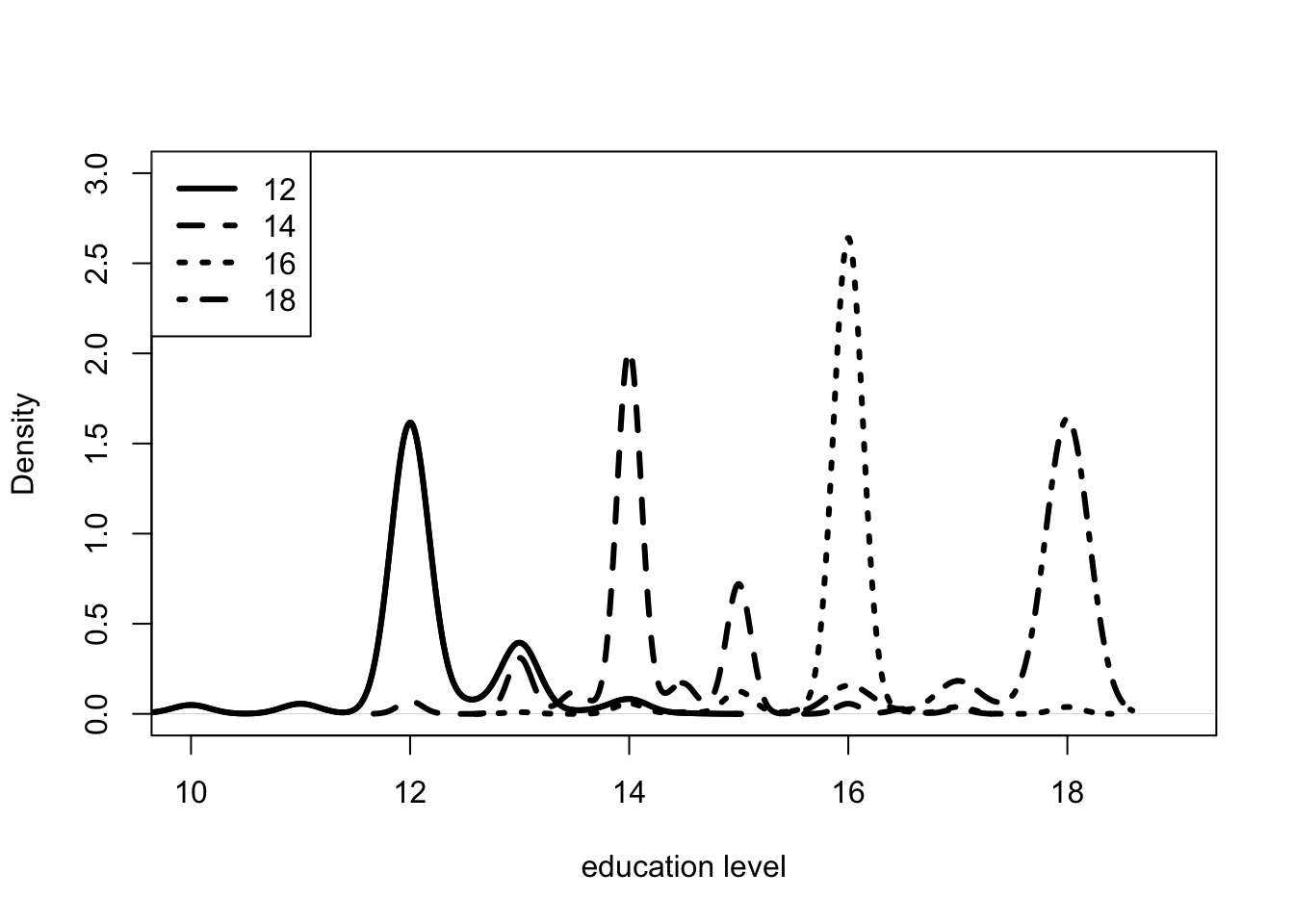

The book presents interesting and important questions. One of the most important is measuring “returns to schooling.” What is the causal effect on income of having one more year of school? It is easy to see that people with college degrees earn more than those with high school diplomas. It is much harder to determine if a policy that encourages someone to finish college actually leads that person to earn more money. I throw lots of data, economic theory and statistical techniques at this question. Hopefully, by the end you will see how analysis of survey data with OLS, IV, Heckman selection and GMM models helps us answer this question. You will also see how mixture models can be used to analyze comparisons of twins.

The book discusses important questions beyond returns to schooling. It discusses racism in mortgage lending. It discusses gender bias in labor market earnings. It discusses increasing the federal minimum wage. It discusses the effect of guns on crime. It even discusses punting on fourth down. I hope the book points you to new questions and new data to answer existing questions.

The book does not recommend policies. The government economist and founding Director of the Congressional Budget Office, Alice Rivlin, argued that it is extremely important to provide policy makers with objective analysis. In a memo to staff she said the following.5

We are not to be advocates. As private citizens, we are entitled to our own views on the issues of the day, but as members of CBO, we are not to make recommendations, or characterize, even by implication, particular policy questions as good or bad, wise or unwise.

Economists in government, the private sector and the academy work on important policy questions. I believe that economists are most effective when they do not advocate for policy positions, but present objective analysis of the economics and the data. I hope that this book presents objective analysis of interesting policy questions and you have no idea whether I think particular policy positions are good or bad, wise or unwise.6

The Outline

The book’s twelve chapters are broken into three parts based on the main approach to identification. The first part presents methods that rely on the existence of an experiment. This part includes chapters covering ordinary least squares (OLS), instrumental variables (IV), randomized controlled trials (RCTs) and Manski bounds. The second part presents methods that rely on economic theory to identify parameters of interest. This is often referred to as a structural approach. These chapters discuss demand models and discrete estimators such as logits and probits, censored and selection models, non-parametric auction models and generalized method of moments (GMM). The third part presents methods that rely on the existence of repeated measurement in the data. These methods include difference in difference, fixed effects, synthetic controls and factor models.

Experiments

The first four chapters rely on experiments, broadly construed, to identify the causal effects of policies.

Chapter 1 introduces the work-horse algorithm of economics, ordinary least squares (OLS). This model is simple and quick to estimate and often produces reasonable results. The chapter illustrates how the model is able to disentangle the effects on the outcome of interest. OLS relies on strong assumptions. In particular, the model assumes that the policy variable of interest affects the outcome independently of any unobserved term.

Chapter 2 considers how additional observed characteristics improve our estimates. It shows when adding more control variables improves the estimation and when it produces garbage. The chapter discusses the problem of multicollinearity. It discusses an alternative to the standard approach based on the work of Judea Pearl. The chapter replicates the OLS model used in Card (1995) to estimate returns to schooling. The chapter uses a DAG and Pearl’s approach to help determine whether there exists evidence of systematic racism in mortgage lending.

Chapter 3 introduces the instrumental variables model. This model allows the independence assumption to be weakened. The model allows the policy variable to be affected by unobserved characteristics that also determine the outcome. The chapter presents IV estimates of returns to schooling by replicating Card (1995). DAGs are used to illustrate and test the assumptions. The Local Average Treatment Effect (LATE) is proposed as an estimator when the researcher is unwilling to assume the treatment effects each person identically.

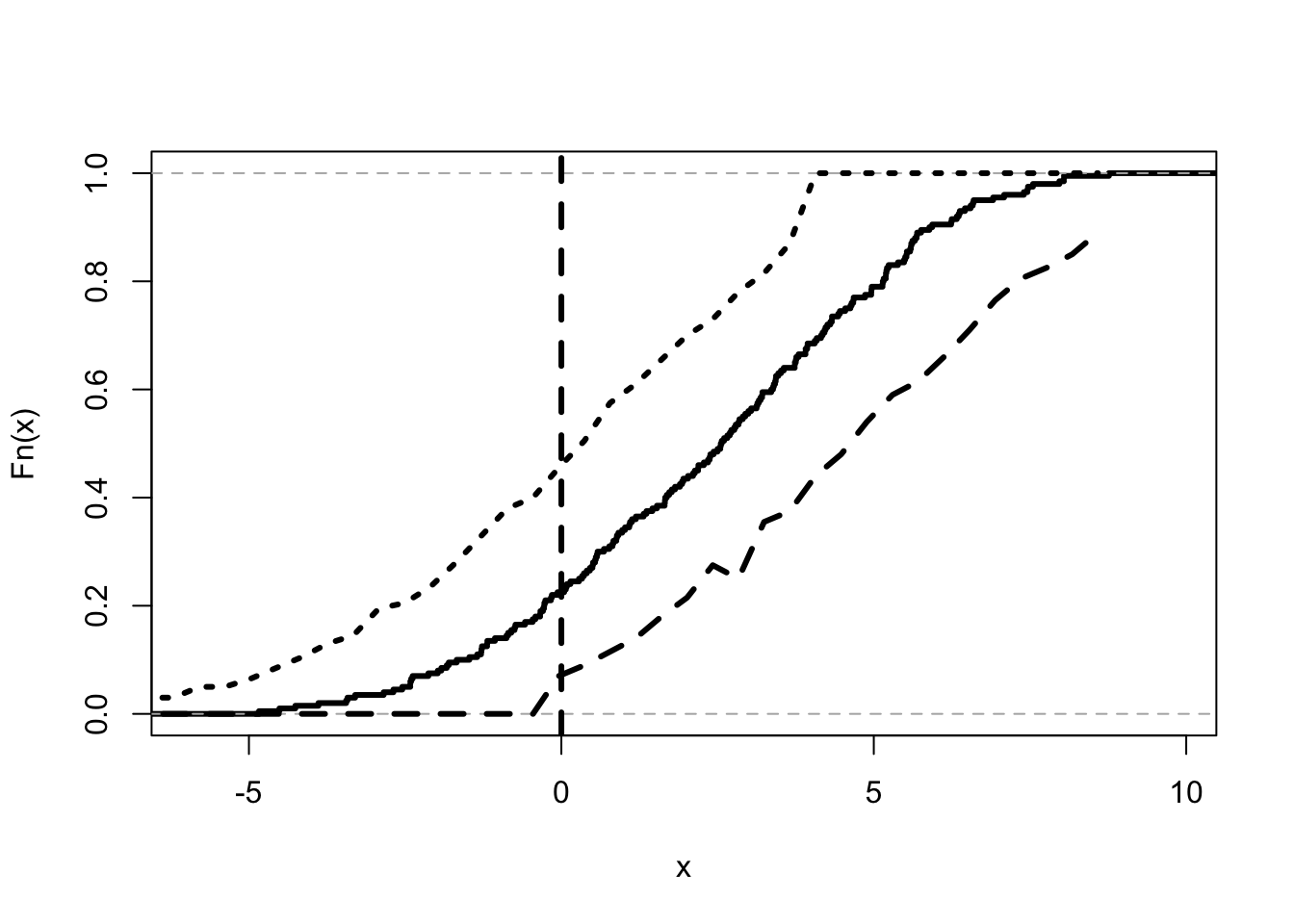

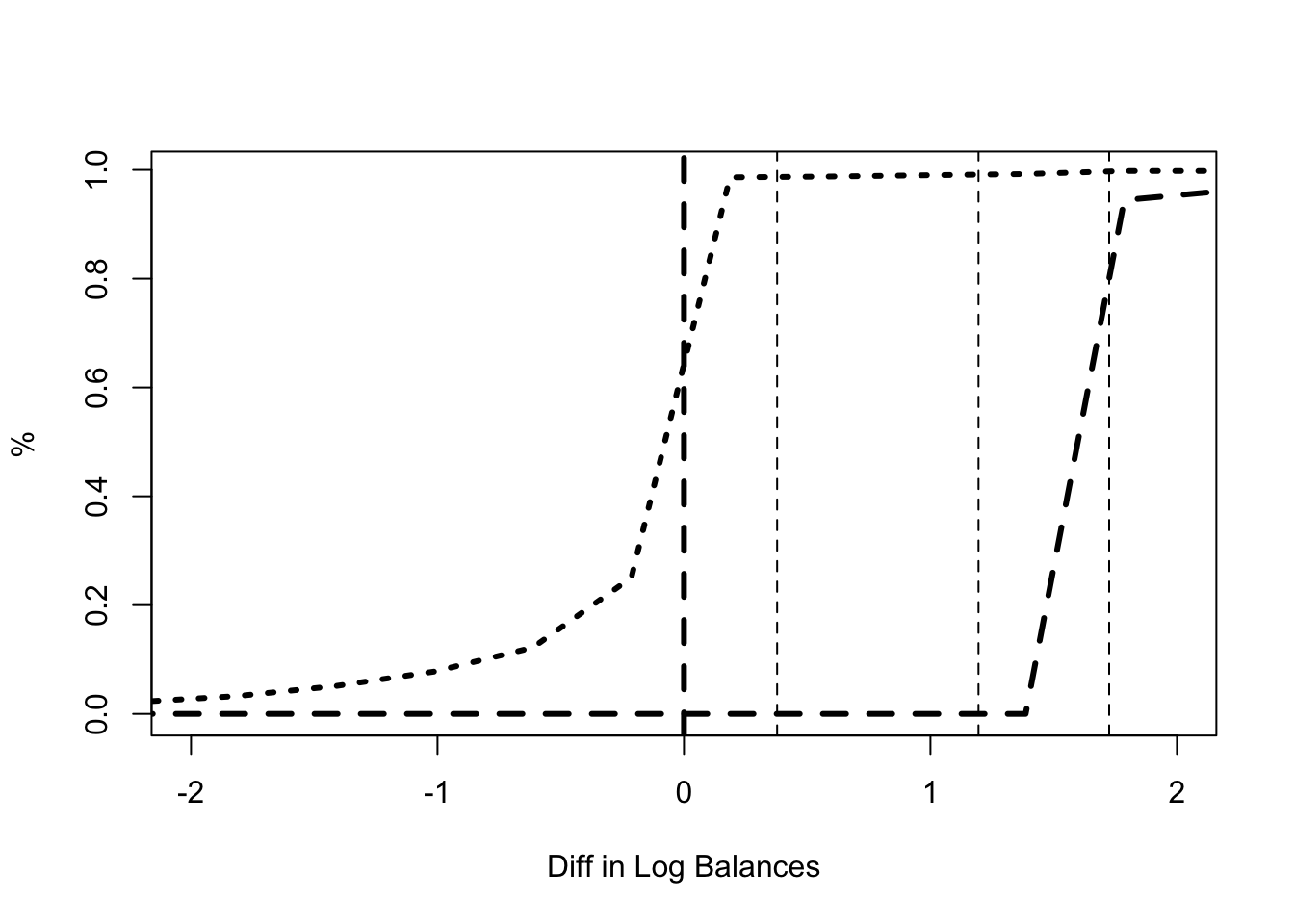

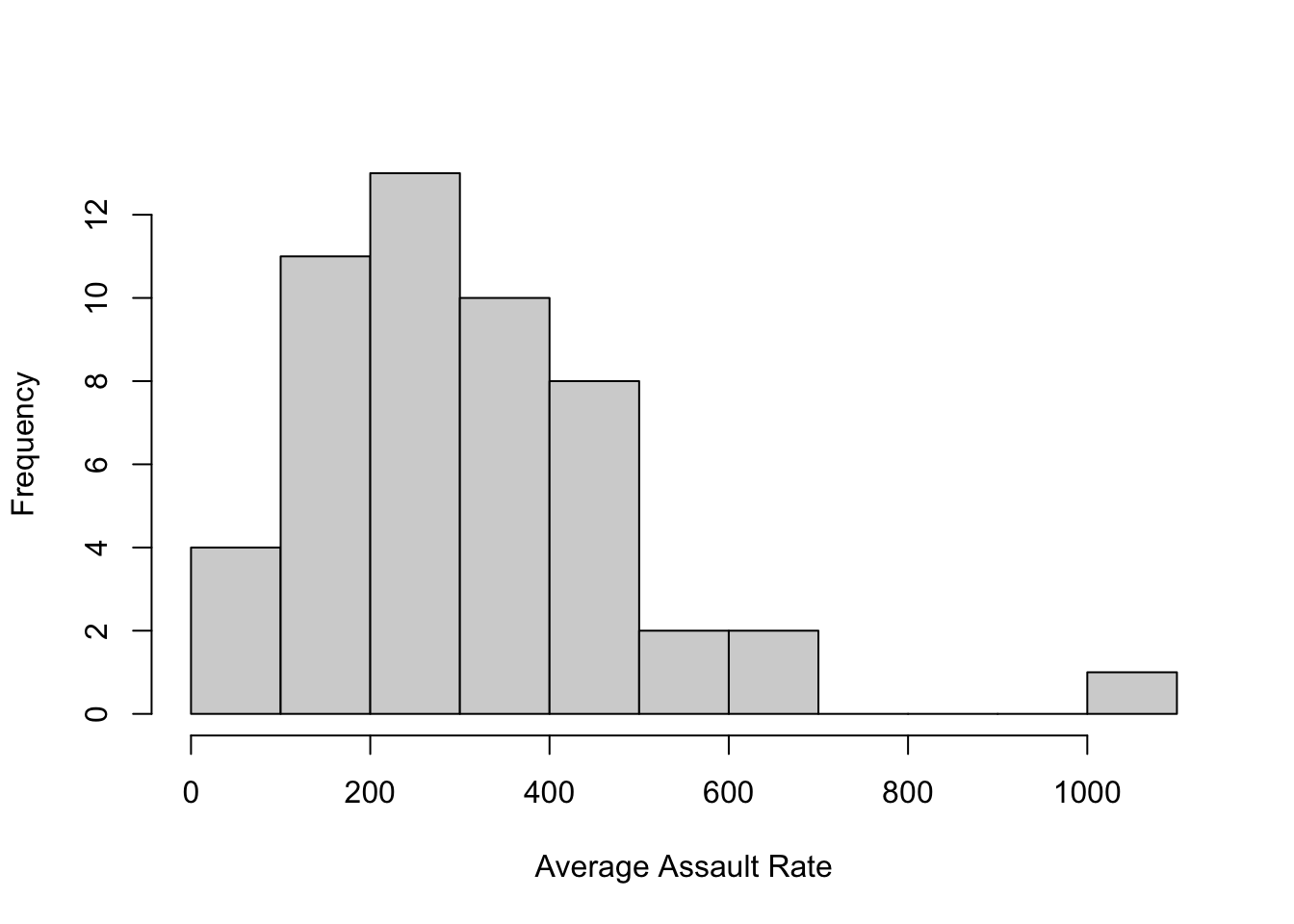

Chapter 4 considers formal experiments. The ideal randomized controlled trial allows the researcher to estimate the average effect of the policy variable. It also allows the researcher to bound the distribution of effects using Kolmogorov bounds. The method is used to bound the effect of commitment savings devices on increasing or decreasing savings. The chapter presents Manski’s natural bounds and discusses inference when the data does not come from ideal randomized controlled trials. It considers the problem of estimating the causal effect of guns on crime using variations in state gun laws.

Structural Estimation

The first four chapters consider questions and issues relevant to economics, but describe standard estimation methods. Chapters 5 to 9 use economic theory directly in the estimation methods.

Chapter 5 introduces revealed preference. The chapter shows how this idea is used to infer unobserved characteristics of individual economic actors. Berkeley econometrician, Dan McFadden, pioneered the idea of using economic theory in his analysis of how people would use the new (at the time) Bay Area Rapid Transit (BART) system. This chapter introduces standard tools of demand analysis including the logit and probit models. It takes these tools to the question of whether smaller US cities should invest in urban rail infrastructure.

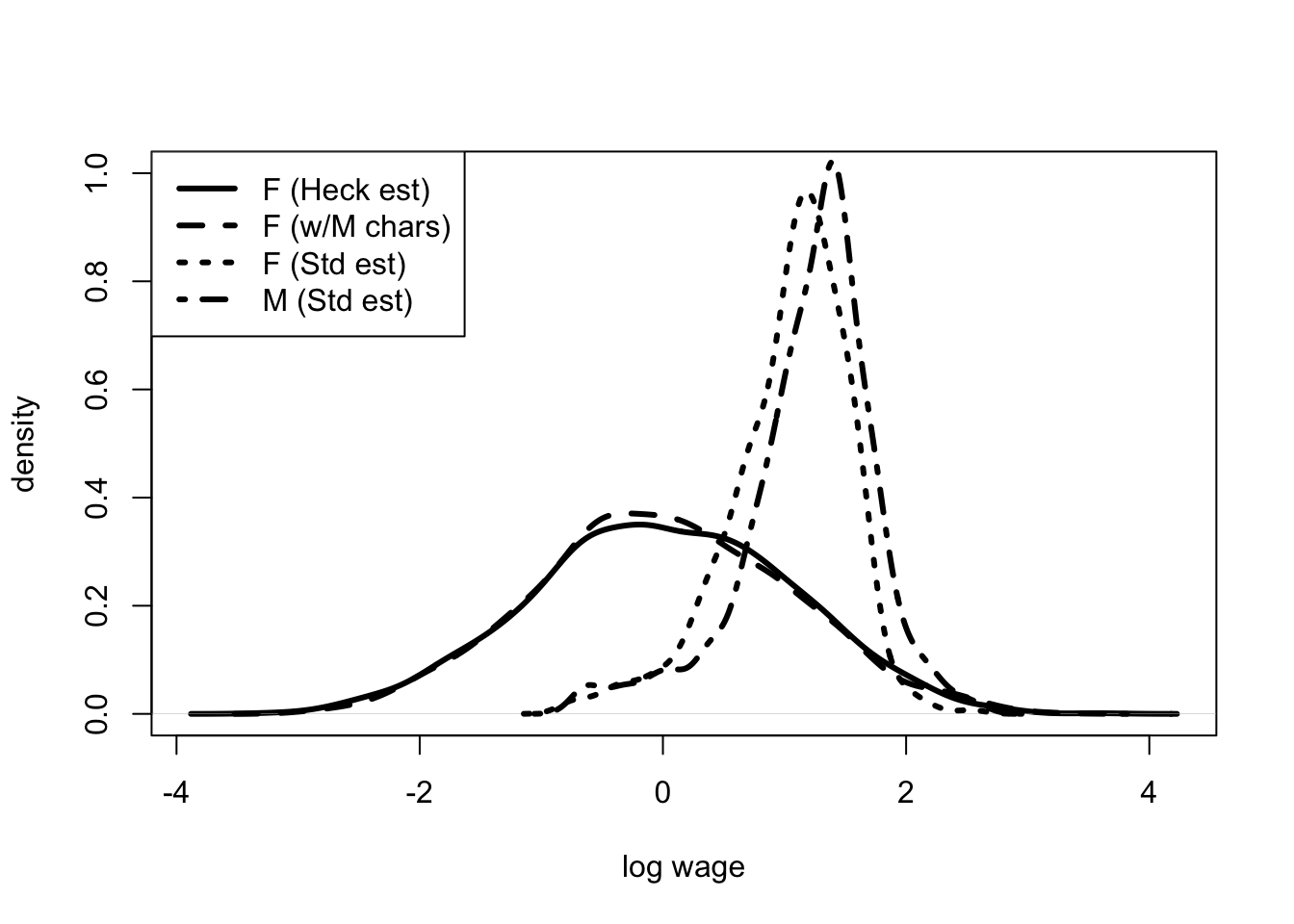

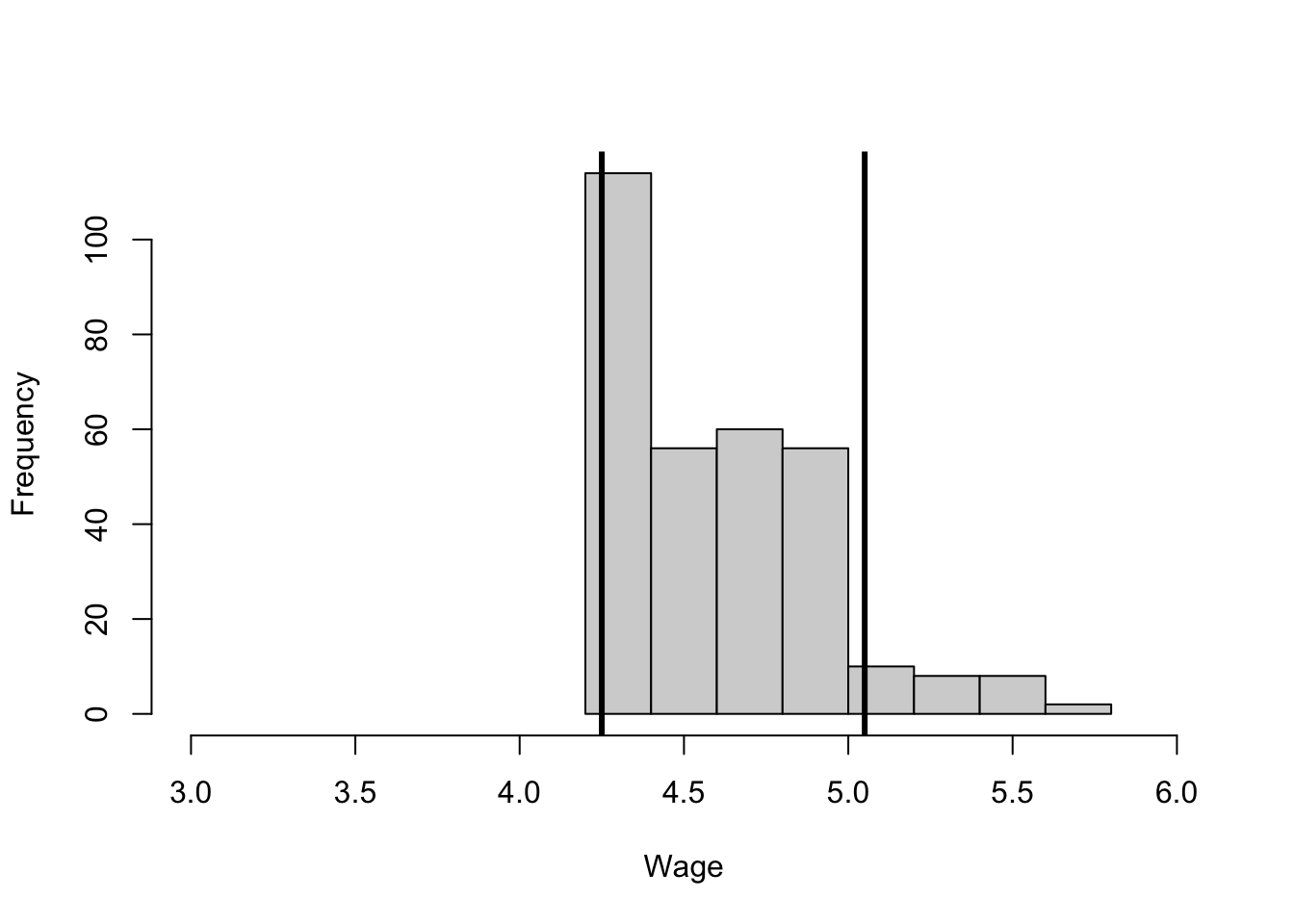

Chapter 6 also uses revealed preference, but this time to analyze labor markets. Chicago’s Jim Heckman shared the Nobel prize with Dan McFadden for their work on revealed preference. In McFadden’s model you, the econometrician, do not observe the outcome from any choice, just the choice that was made. In Heckman’s model you observe the outcome from the choice that was made, but not the outcome from the alternative. The chapter describes the related concepts of censoring and selection, as well as their model counterparts the Tobit and Heckit. The section uses these tools to analyze gender differences in wages and returns to schooling.

Chapter 7 returns to the question of estimating demand. This time it allows the price to be determined as the outcome of market interactions by a small number of firms. This chapter considers the modern approach to demand analysis developed by Yale economist, Steve Berry. This approach combines game theory with IV estimation. The estimator is used to determine the value of Apple Cinnamon Cheerios.

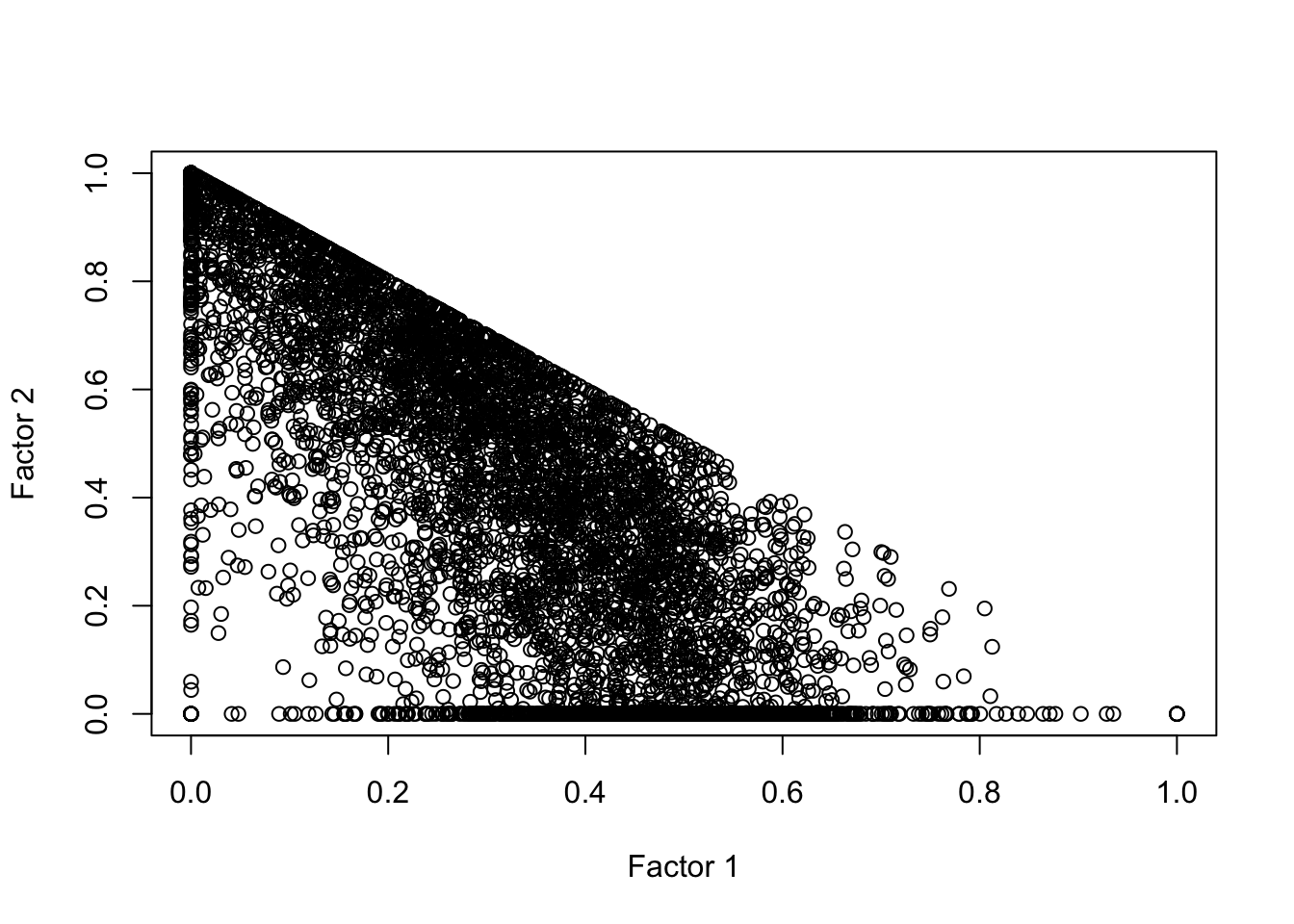

Chapter 8 uses game theory and the concept of a mixed strategy Nash equilibrium to reanalyze the work of Berkeley macroeconomist, David Romer. Romer used data on decision making in American football to argue that American football coaches are not rational. In particular, coaches may choose to punt too often on fourth down. Reanalysis finds the choice to punt to be generally in line with the predictions of economic theory. The chapter introduces the generalized method of moments (GMM) estimator developed by the University of Chicago’s Lars Peter Hansen.

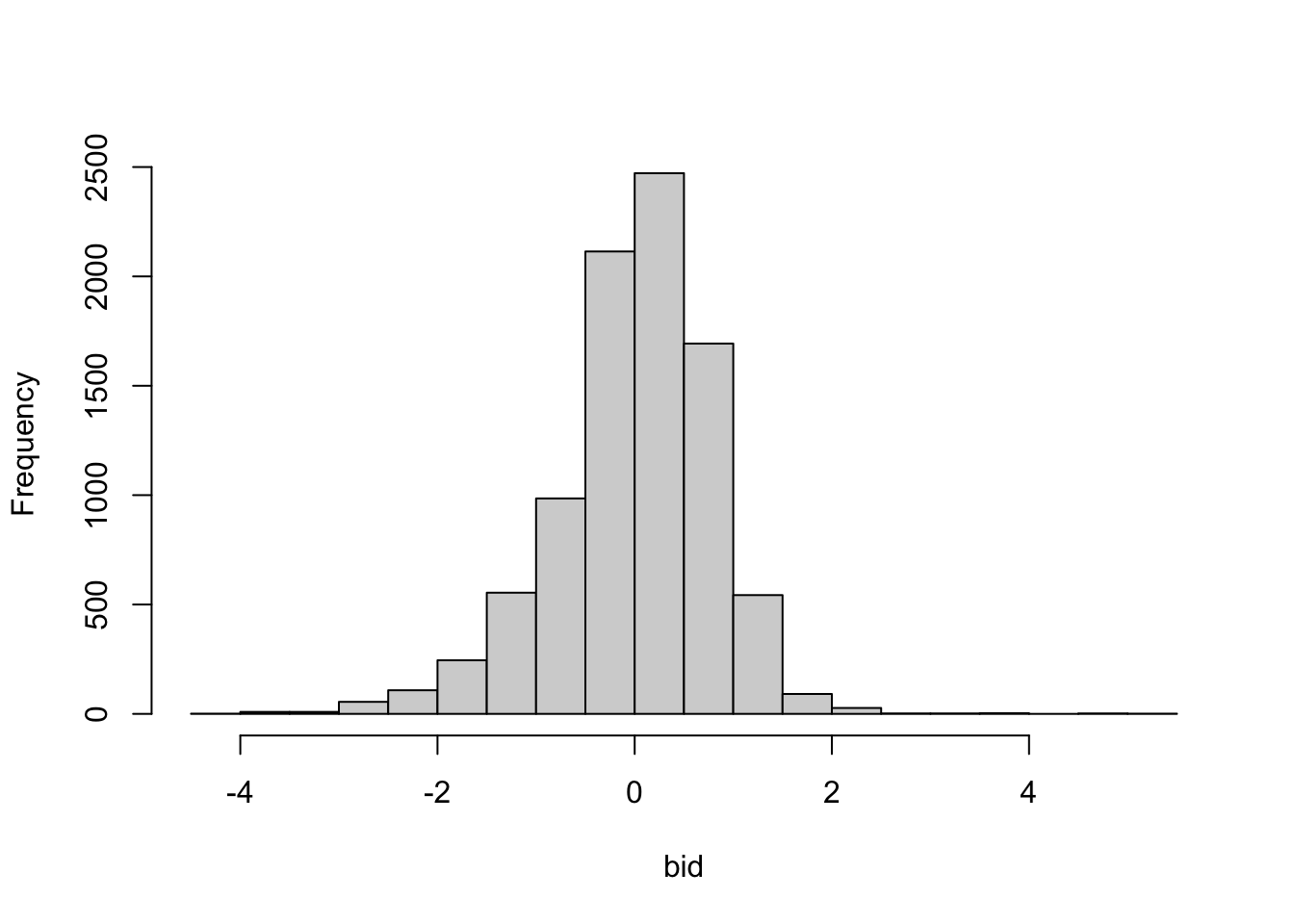

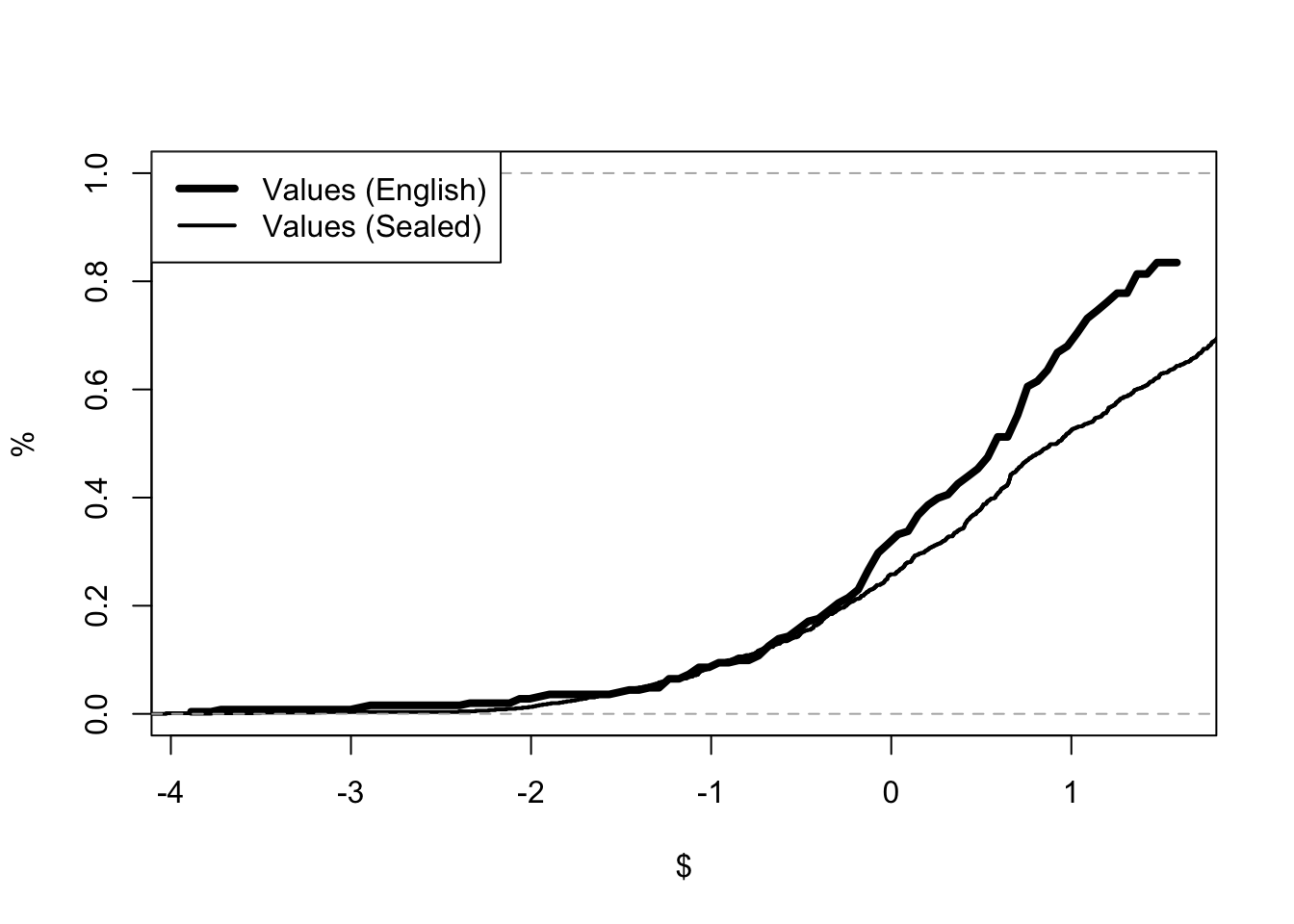

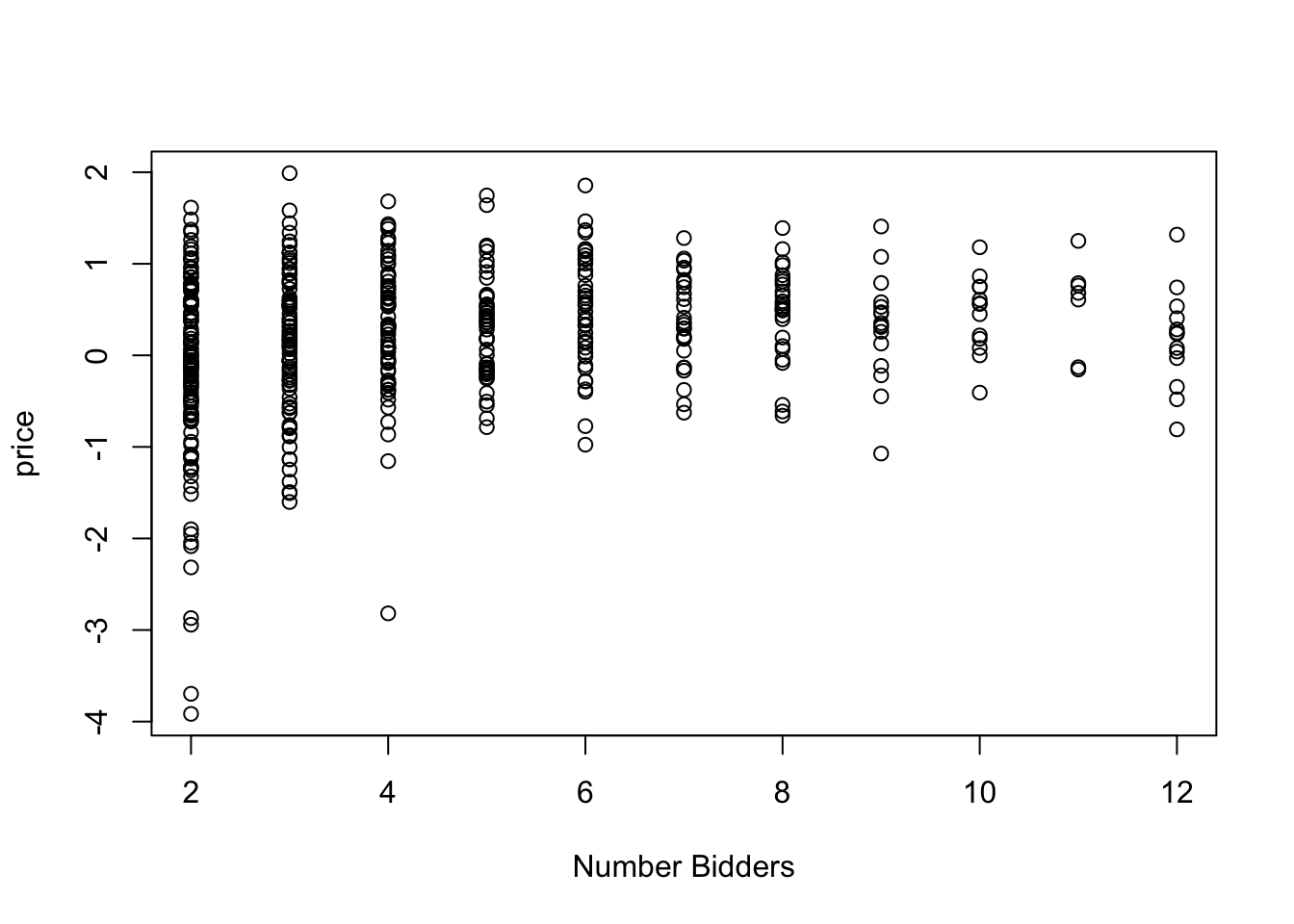

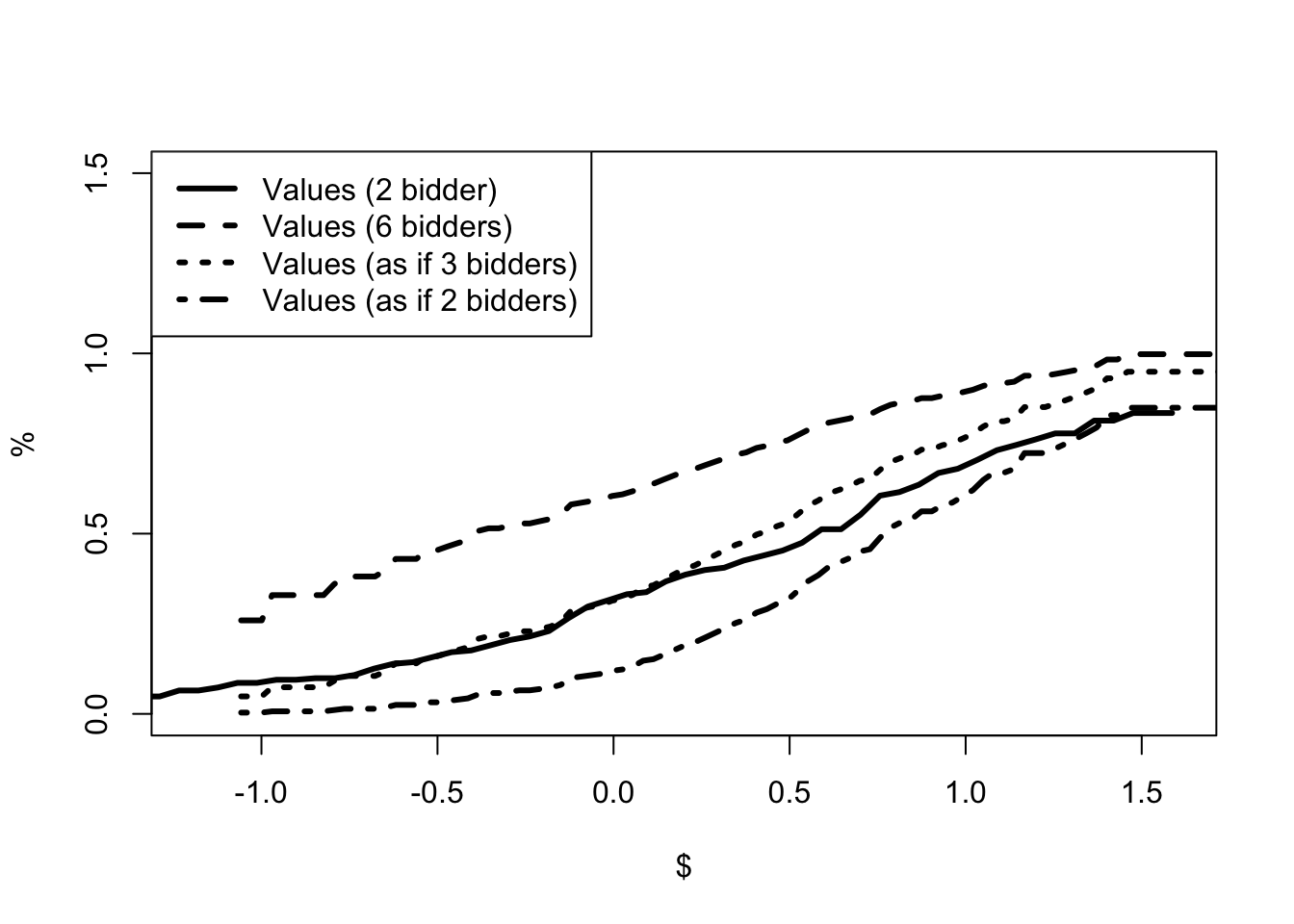

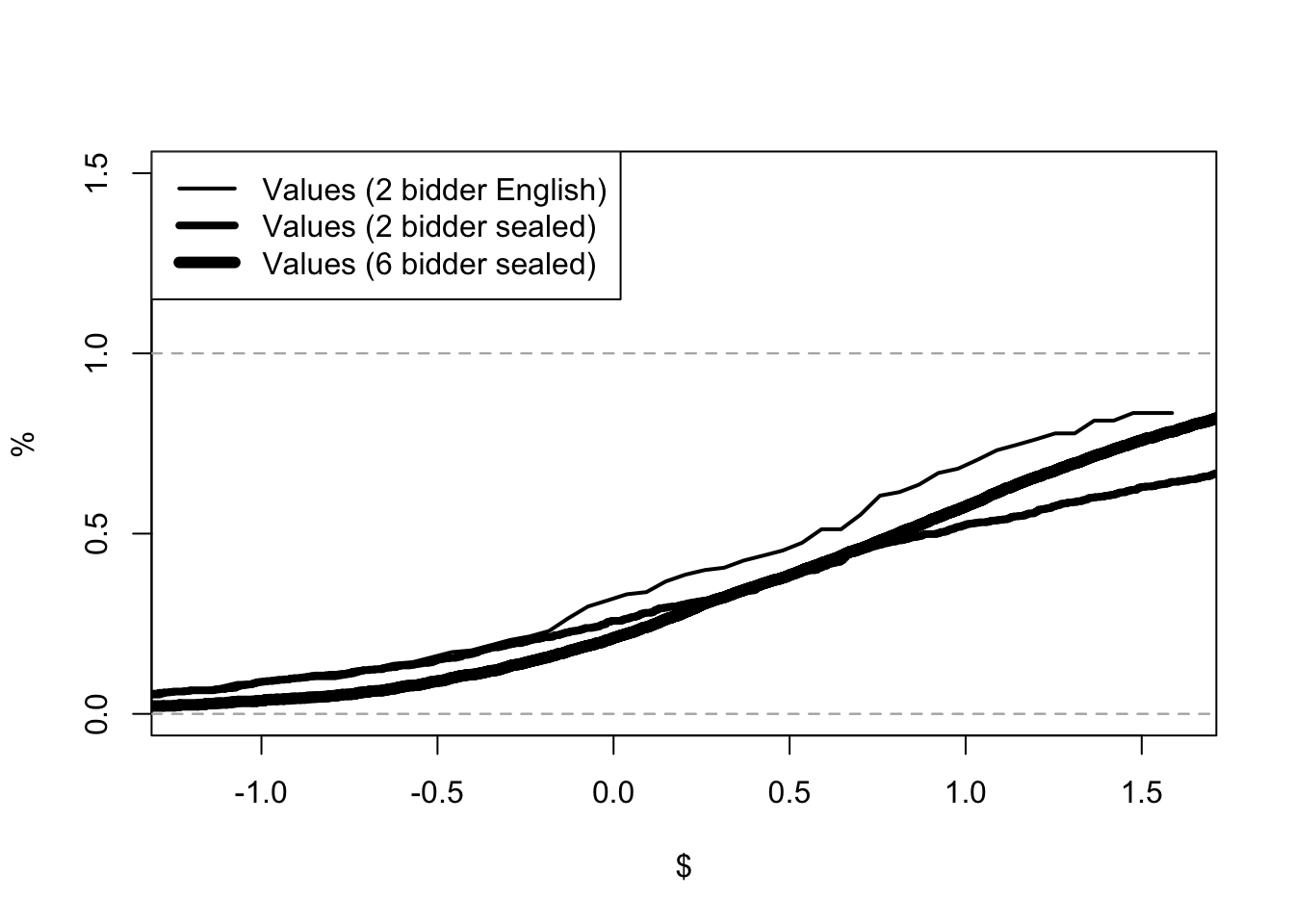

Chapter 9 considers the application of game theory to auction models. The book considers the GPV model of first price (sealed-bid) auctions and the Athey-Haile model of second price (English) auctions. GPV refers to the paper by Emmanuel Guerre, Isabelle Perrigne and Quang Vuong, Optimal Nonparametric Estimation of First-Price Auctions published in 2000. The paper promoted the idea that auctions, and structural models more generally, can be estimated in two steps. In the first step, standard statistical methods are used to estimate statistical parameters of the auction. In the second step, economic theory is used to back out the underlying policy parameters. For second-price auctions, the chapter presents Athey and Haile (2002). Stanford’s Susan Athey and Yale’s Phil Haile provide a method for analyzing auctions when only some of the information is available. In particular, they assume that the econometrician only knows the price and the number of bidders. These methods are used to analyze timber auctions and determine whether the US Forestry Service had legitimate concerns about collusion in the 1970s logging industry.

Repeated Measurement

Chapters 10 to 12 consider data with repeated measurement. Repeated measurement has two advantages. First, it allows the same individual to be observed facing two different policies. This suggests that we can measure the effect of the policy as the difference in observed outcomes. Second, repeated measurement allows the econometrician to infer unobserved differences between individuals. We can measure the value of a policy that affects different individuals differently.

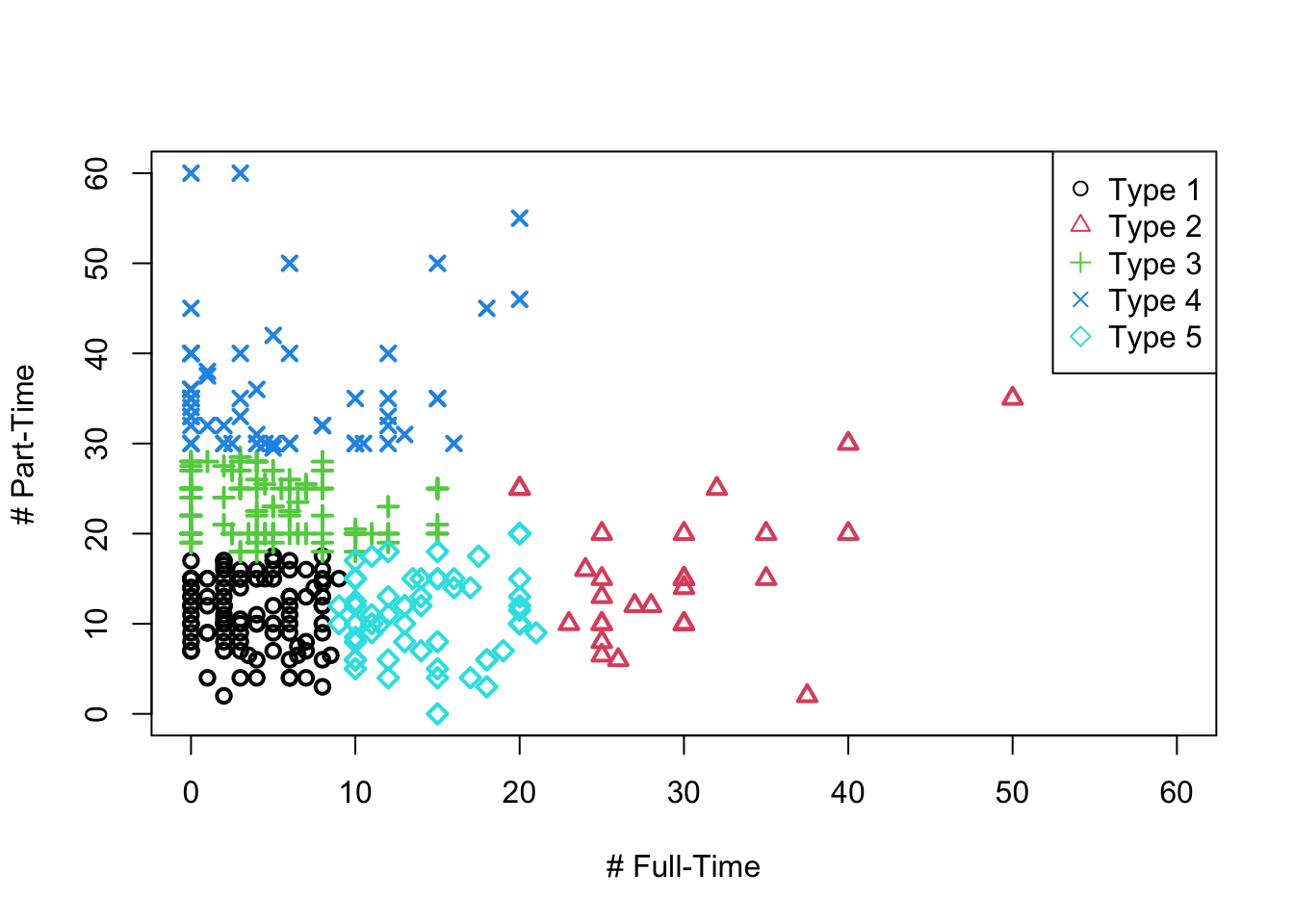

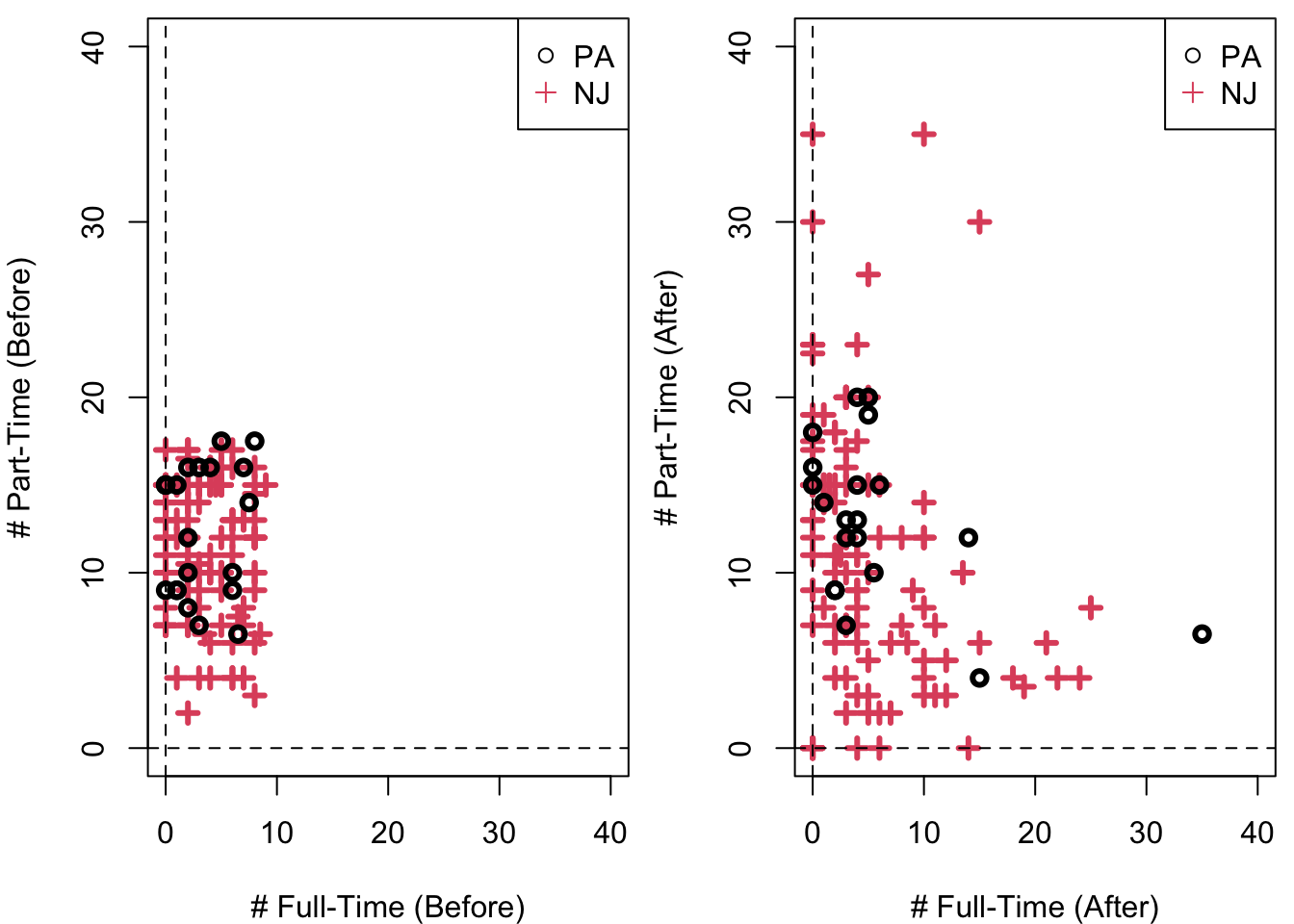

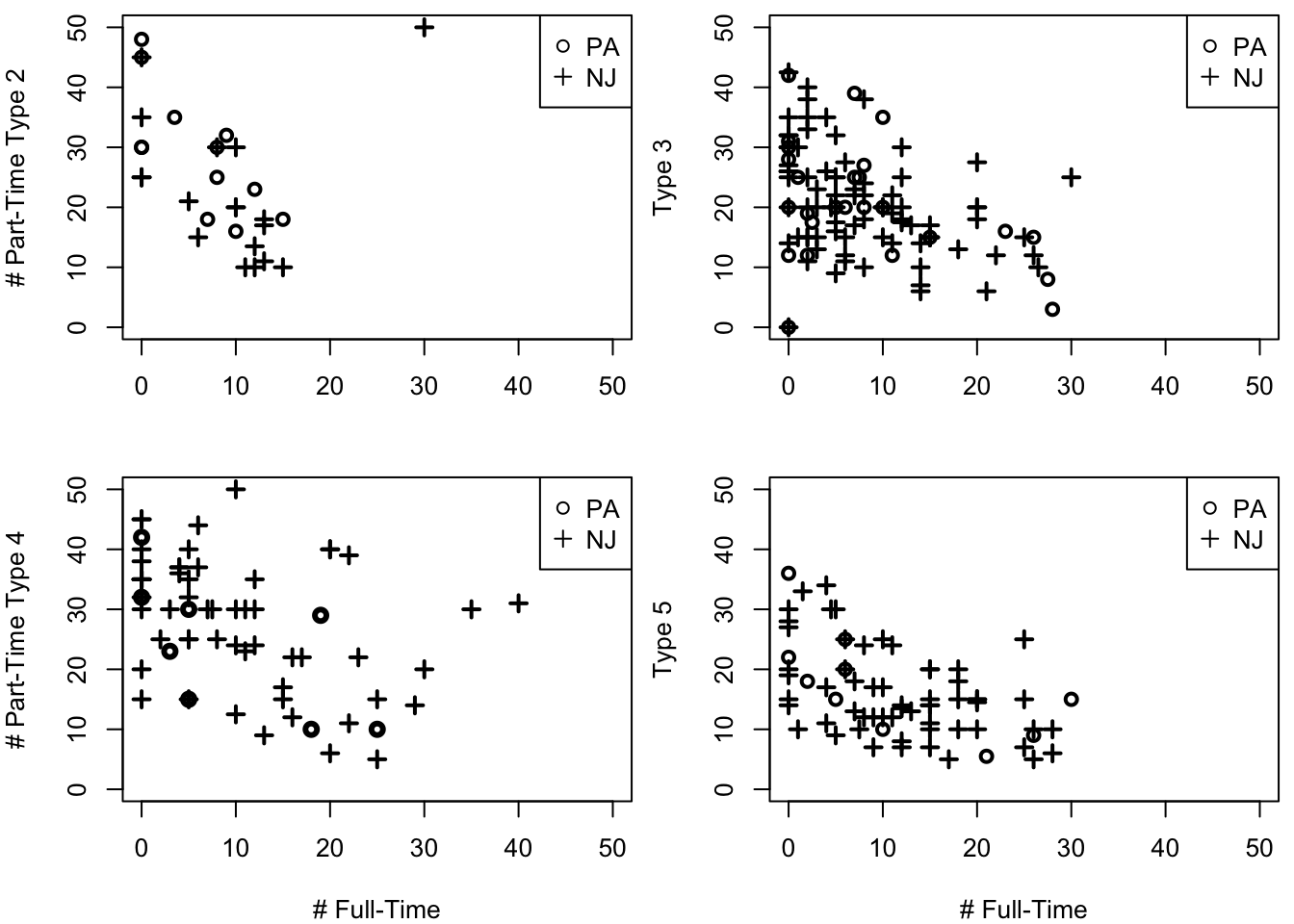

Chapter 10 considers panel data models. Over the last 25 years, the difference in difference estimator has become one of the most used techniques in microeconometrics. The chapter covers difference in difference and the standard fixed effects model. These methods are used to analyze the impact of increasing the minimum wage. The chapter replicates David Card and Alan Krueger’s famous work on the impact of increasing the minimum wage in New Jersey on restaurant employment. The chapter also measures the impact of the federal increase in the minimum wage that occurred in the late 2000s. The chapter follows Currie and Fallick (1996) and uses fixed effects and panel data from the National Longitudinal Survey of Youth 1997.

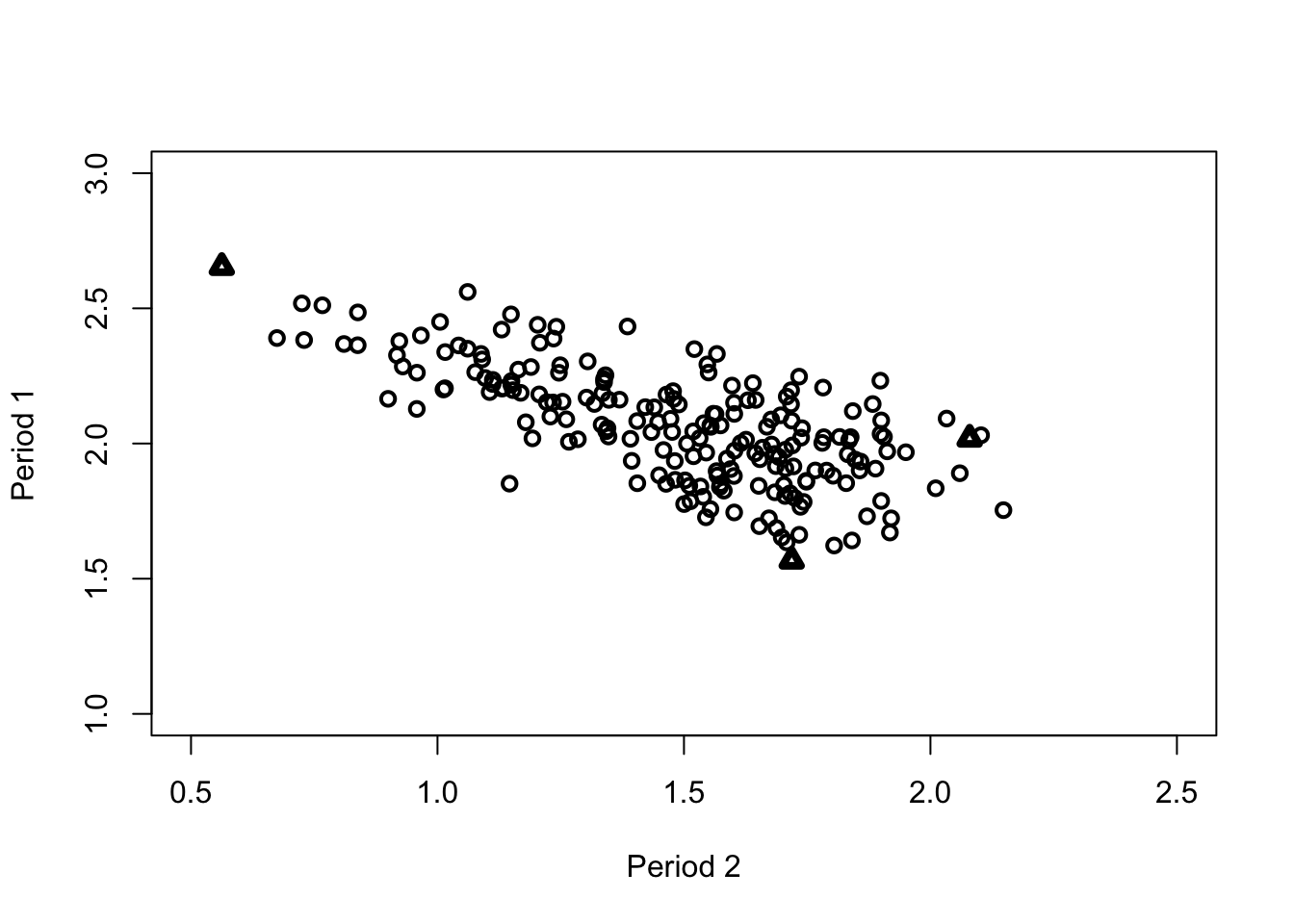

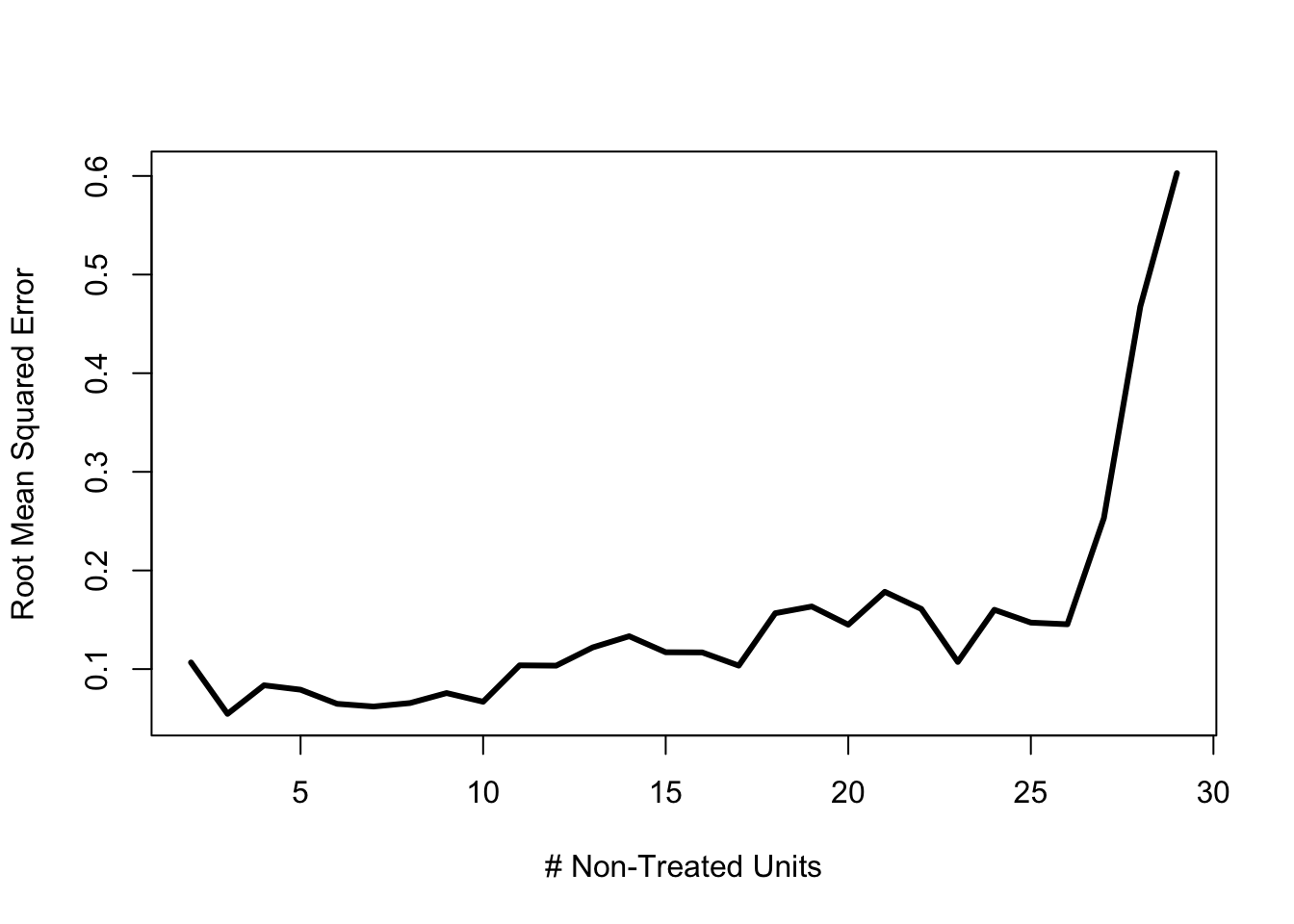

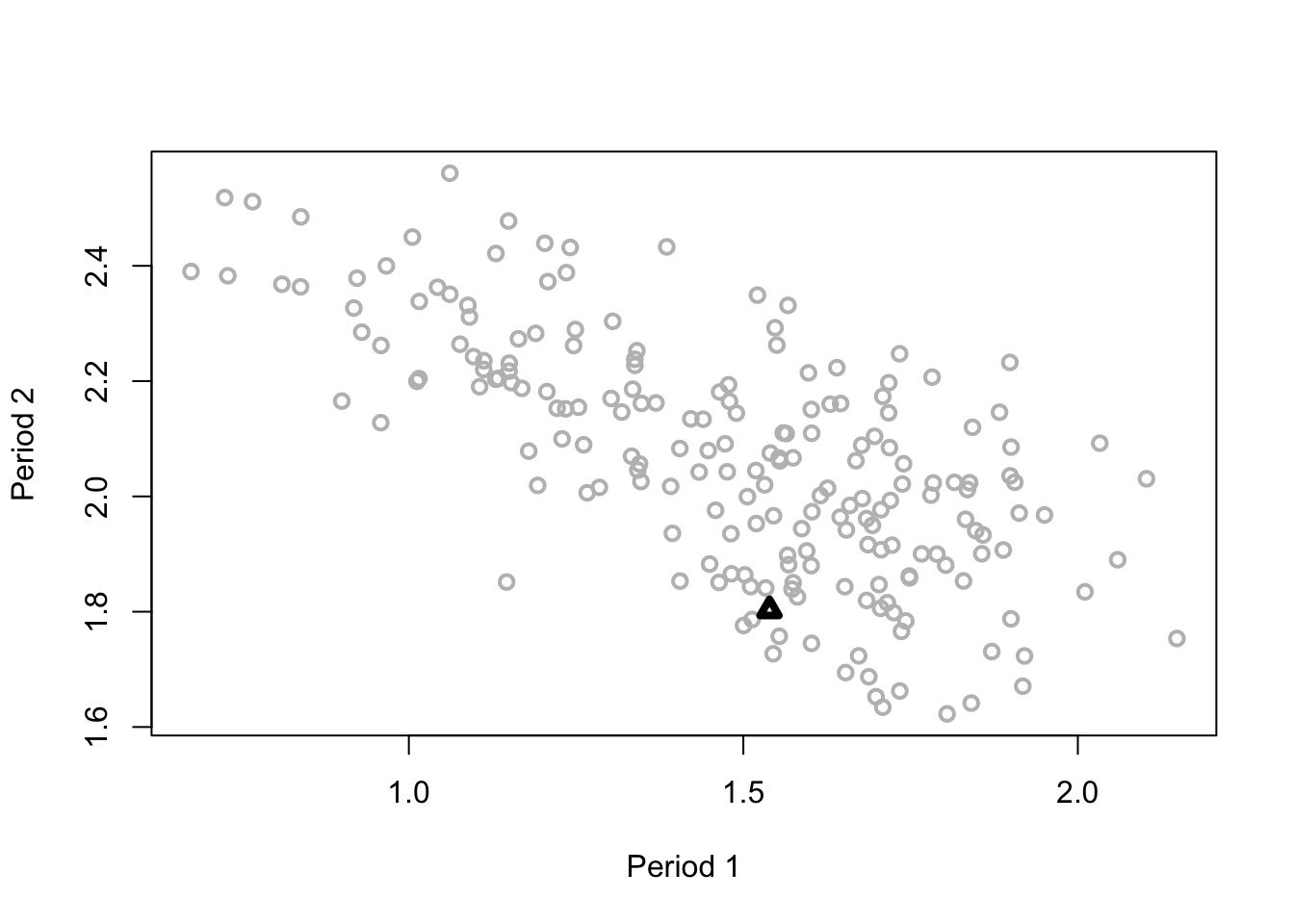

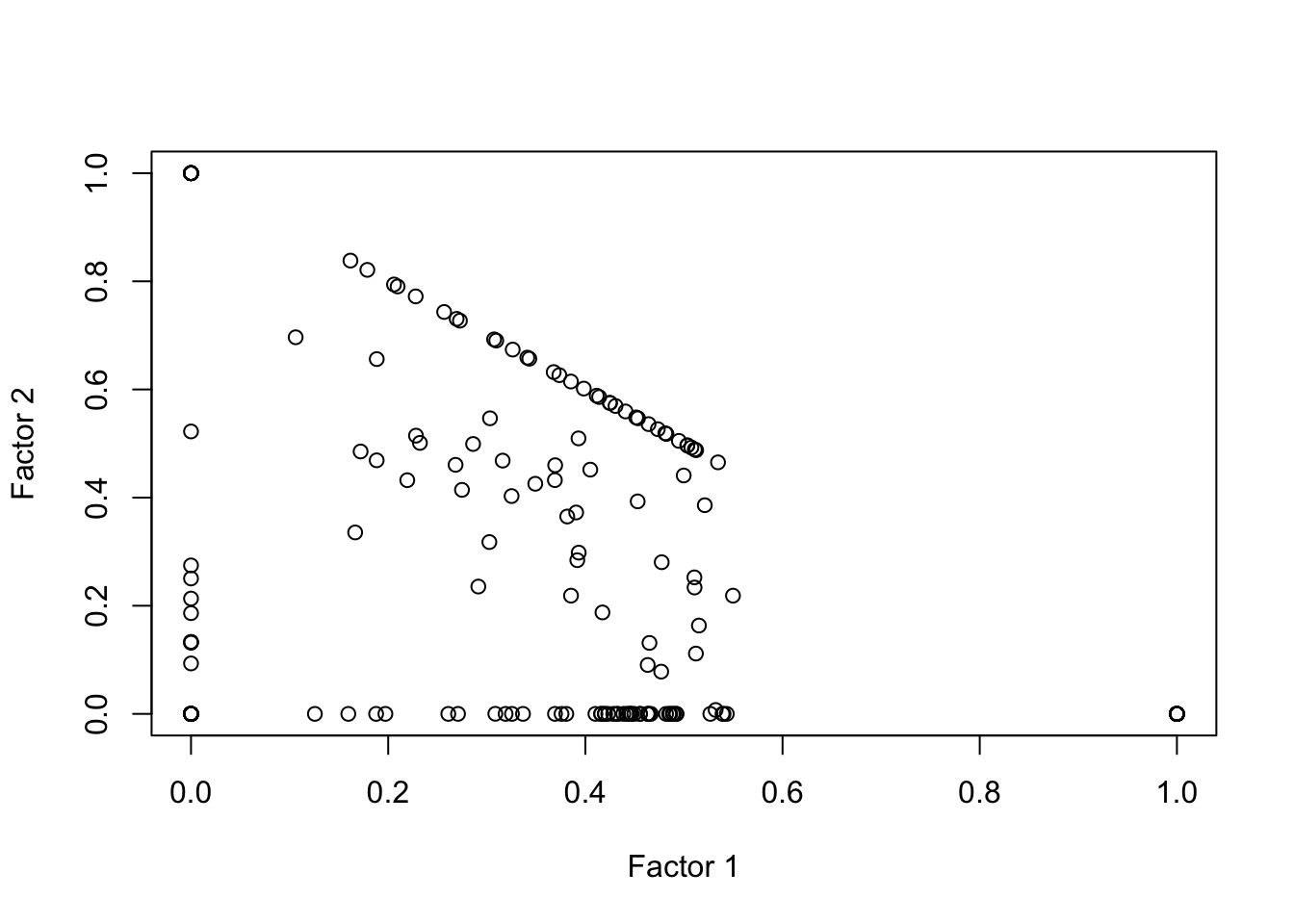

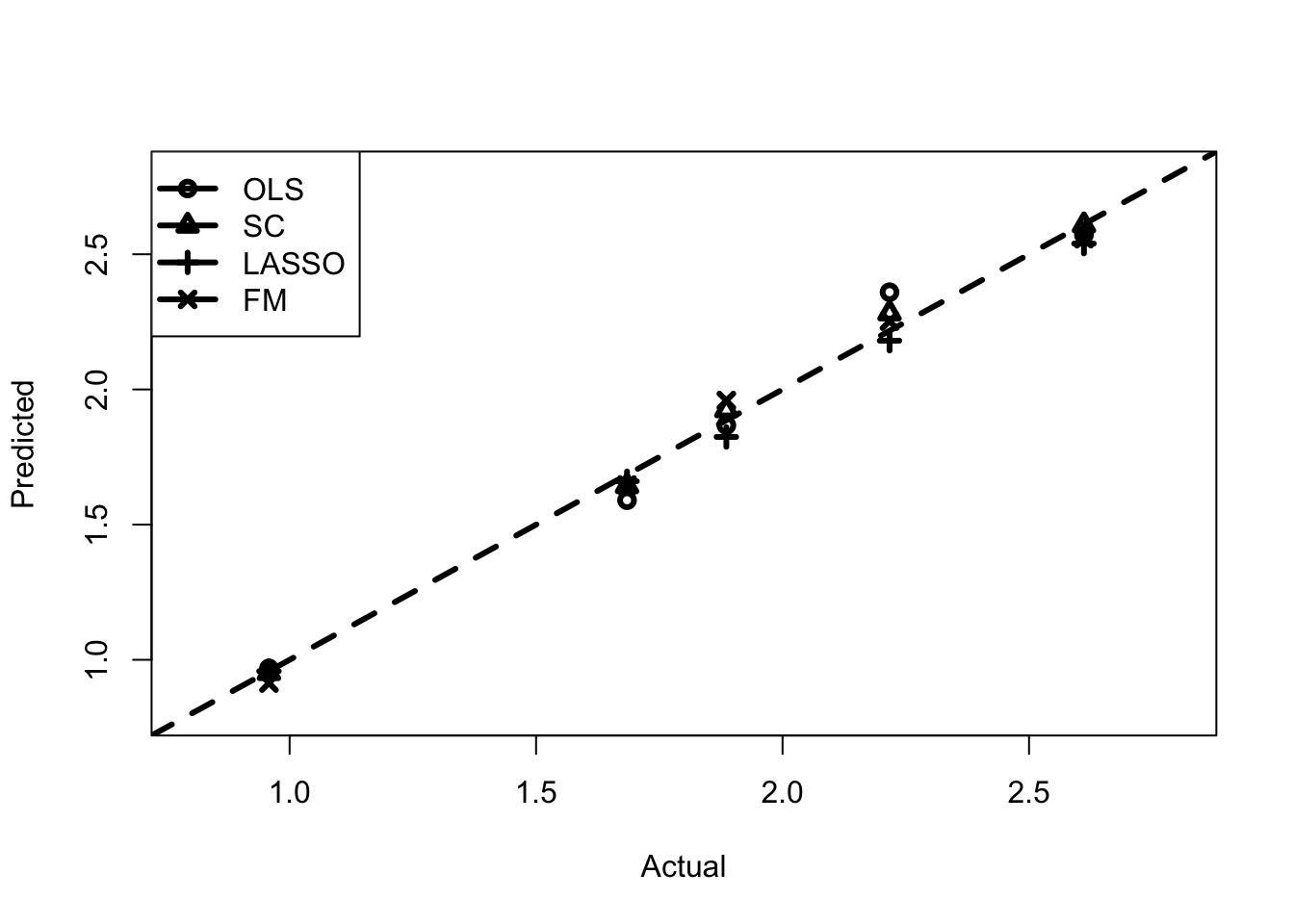

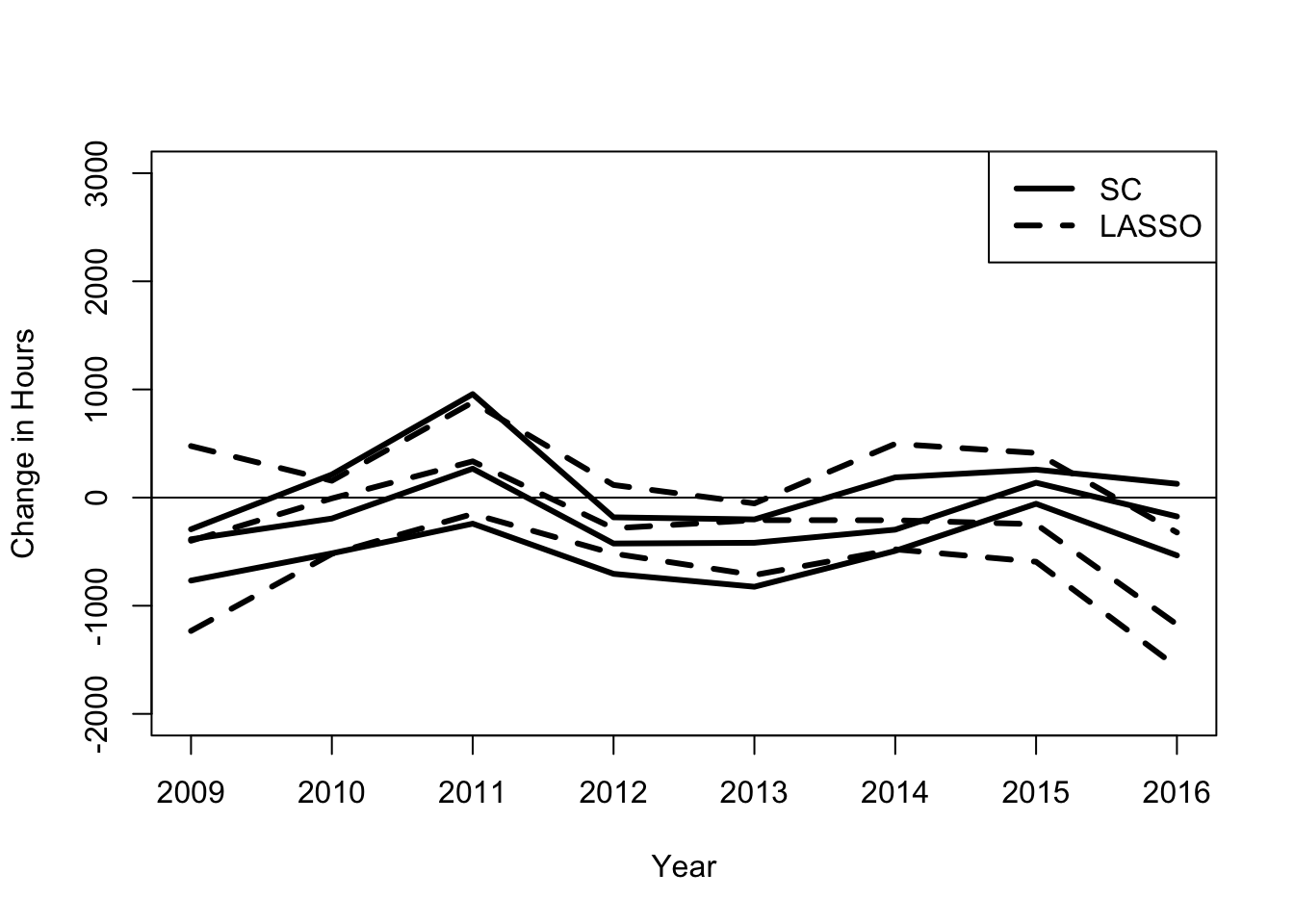

Chapter 11 considers a more modern approach to panel data analysis. Instead of assuming that time has the same effect on everyone, the chapter considers various methods for creating synthetic controls. It introduces the approach of Abadie, Diamond, and Hainmueller (2010) as well as alternative approaches based on regression regularization and convex factor models. It discusses the benefits and costs of these approaches and compares them using NLSY97 to measure the impact of the federal increase in the minimum wage in the late 2000s.

Chapter 12 introduces mixture models. These models are used throughout microeconometrics, but they are particularly popular as a way to solve measurement error issues. The chapter explains how these models work. It shows that they can be identified when the econometrician observes at least two signals of the underlying data process of interest. The idea is illustrated estimating returns to schooling for twins. The chapter returns to the question of the effect of New Jersey’s minimum wage increase on restaurant employment. The mixture model is used to suggest that the minimum wage increase reduced employment for small restaurants, consistent with economic theory.

Technical Appendices

The book has two technical appendices designed to help the reader to go into more depth on some issues that are not the focus of the book.

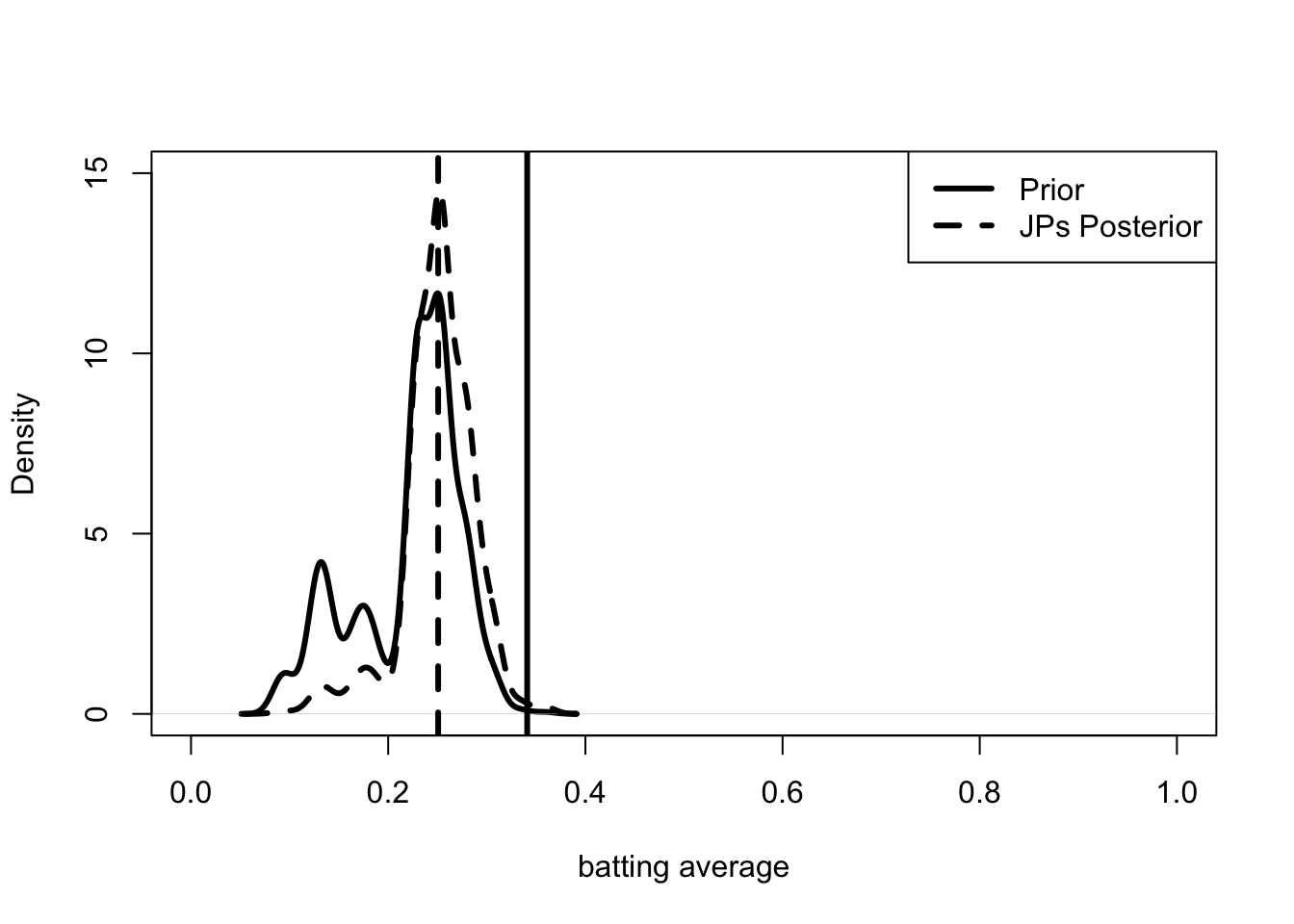

Appendix A presents statistical issues, including assessing the value of estimators using measures of bias, consistency and accuracy. It presents a discussion of the two main approaches to finding estimators, the classical method and the Bayesian method. It discusses standard classical ideas based on the Central Limit Theorem and a more recent innovation known as bootstrapping. The Bayesian discussion includes both standard ideas and Herbert Robbins’ empirical Bayesian approach. Like the rest of the book, this appendix shows how you can use these ideas but also gives the reader some insight on why you would want to use them. The appendix uses the various approaches to ask whether John Paciorek was better than Babe Ruth.

Appendix B provides more discussion of R and various programming techniques. The appendix discusses how R is optimized for analysis of vectors, and the implications for using loops and optimization. The chapter discusses various objects that are used in R, basic syntax and commands as well as basic programming ideas including if () else, for () and while () loops. The appendix discusses how matrices are handled in R . It also provides a brief introduction to optimization in R .

Notation

As you have seen above, the book uses particular fonts and symbols for various important things. It uses the symbol R to refer to the scripting language. It uses typewriter font to represent code in R . Initial mentions of an important term are in bold face font.

In discussing the data analysis it uses \(X\) to refer to some observed characteristic. In general, it uses \(X\) to refer to the policy variable of interest, \(Y\) to refer to the outcome, and \(U\) to refer to the unobserved characteristic. When discussing actual data, it uses \(x_i\) to refer to the observed characteristic for some individual \(i\). It uses \(\mathbf{x}\) to denote a vector of the \(x_i\)’s. For matrices it uses \(\mathbf{X}\) for a matrix and \(\mathbf{X}'\) for the matrix transpose. A row of that matrix is \(\mathbf{X}_i\) or \(\mathbf{X}_i'\) to highlight that it is a row vector. Lastly for parameters of interest it uses Greek letters. For example, \(\beta\) generally refers to a vector of parameters, although in some cases it is a single parameter of interest, while \(\hat{\beta}\) refers to the estimate of the parameter. An individual parameter is \(b\).

Hello R World

To use this book you need to download R and RStudio on your computer. Both are free.

Download R and RStudio

First, download the appropriate version of RStudio here: https://www.rstudio.com/products/rstudio/download/#download. Then you can download the appropriate version of R here: https://cran.rstudio.com/.

Once you have the two programs downloaded and installed, open up RStudio. To open up a script go to File > New File > R Script. You should have four windows: a script window, a console window, a global environment window, and a window with help, plots and other things.

Using the Console

Go to the console window and click on the >. Then type print("Hello R World") and hit enter. Remember to use the quotes. In general, R functions have the same basic syntax, functionname with parentheses, and some input inside the parentheses. Inputs in quotes are treated as text while inputs without quotes are treated as variables.

print("Hello R World")[1] "Hello R World"Try something a little more complicated.

a <- "Chris" # or write your own name

print(paste("Welcome",a,"to R World",sep=" "))[1] "Welcome Chris to R World"Here we are creating a variable called a. To define this variable we use the<- symbol which means “assign.” It is possible to use = but that is generally frowned upon. I really don’t know why it is done this way. However, when writing this out it is important to include the appropriate spaces. It should be a <- "Chris" rather than a<-"Chris". Not having the correct spacing can lead to errors in your code. Note that # is used in R to “comment out” lines in codes. R does not read the line following the hash.

In R we can place one function inside another function. The function paste is used to join text and variables together. The input sep = " " is used to place a space between the elements that are being joined together. When placing one function inside another make sure to keep track of all of the parentheses. A common error is to have more or fewer closing parentheses than opening parentheses.

A Basic Script

In the script window name your script. I usually name the file something obvious like Chris.R. You can use your own name unless it is also Chris.

# Chris.R Note that this line is commented out, so it is does nothing. To actually name your file you need to go to File > Save As and save it to a folder. When I work with data, I save the file to the same folder as the data. I then go to Session > Set Working Directory > To Source File Location. This sets the working directory to be the same as your data. It means that you can read and write to the folder without complex path names.

Now you have the script set up. You can write into it.

# Chris.R

# Import data

x <- read.csv("minimum wage.csv", as.is = TRUE)

# the data can be imported from here:

# https://sites.google.com/view/microeconometricswithr/

# table-of-contents

# Summarize the data

summary(x) State Minimum.Wage Unemployment.Rate

Length:51 Min. : 0.000 Min. :2.100

Class :character 1st Qu.: 7.250 1st Qu.:3.100

Mode :character Median : 8.500 Median :3.500

Mean : 8.121 Mean :3.641

3rd Qu.:10.100 3rd Qu.:4.100

Max. :14.000 Max. :6.400 # Run OLS

lm1 <- lm(Unemployment.Rate ~ Minimum.Wage, data = x)

summary(lm1)[4]$coefficients

Estimate Std. Error t value Pr(>|t|)

(Intercept) 3.49960275 0.32494078 10.7699709 1.62017e-14

Minimum.Wage 0.01743224 0.03733321 0.4669365 6.42615e-01# the 4th element provides a nice table.To run this script, you can go to the Run > Run All. The first line imports the data. You can find the data at the associated website for the book. The data has three variables: the State, their June 2019 minimum wage, and their June 2019 unemployment rate. To see these variables, you can run summary(x).

To run a standard regression use the lm() function. I call the object lm1. On the left-hand side of the tilde (\(\sim\)) is the variable we are trying to explain, Unemployment.Rate, and on the right-hand side is theMinimum.Wage variable. If we use the option data = x, we can just use the variable names in the formula for thelm() function.

The object, lm1, contains all the information about the regression. You can run summary(lm1) to get a standard regression table.7

Discussion and Further Reading

The book is a practical guide to microeconometrics. It is not a replacement for a good textbook such as Cameron and Trivedi (2005) or classics like Goldberger (1991) or Greene (2000). The book uses R to teach microeconometrics. It is a complement to other books on teaching R , particularly in the context of econometrics, such as Kleiber and Zeileis (2008). For more discussion of DAGs and do operators, I highly recommend Judea Pearl’s Book of Why \citep{Pearl:2018]. To learn about programming in R , I highly recommend Kabacoff (2011). The book is written by a statistician working in a computer science department. Paarsh and Golyaev (2016) is an excellent companion to anyone starting out doing computer intensive economic research.

Ordinary Least Squares

Introduction

Ordinary least squares (OLS) is the work-horse model of microeconometrics. It is quite simple to estimate. It is straightforward to understand. It presents reasonable results in a wide variety of circumstances.

The chapter uses OLS to disentangle the effect of the policy variable from other unobserved characteristics that may be affecting the outcome of interest. The chapter describes how the model works and presents the two standard algorithms, one based on matrix algebra and the other based on least squares. It uses OLS on actual data to estimate the value of an additional year of high school or college.

Estimating the Causal Effect

You have been asked to evaluate a policy which would make public colleges free in the state of Vermont. The Green Mountain state is considering offering free college education to residents of the state. Your task is to determine whether more Vermonters will attend college and whether those additional attendees will be better off. Your boss narrows things down to the following question. Does an additional year of schooling cause people to earn more money?

The section uses simulated data to illustrate how averaging is used to disentangle the effect of the policy variable from the effect of the unobserved characteristics.

Graphing the Causal Effect

Your problem can be represented by the causal graph in Figure 1. The graph shows an arrow from \(X\) to \(Y\) and an arrow from \(U\) to \(Y\). In the graph, \(X\) represents the policy variable. This is the variable that we are interested in changing. In the example, it represents years of schooling. The outcome of interest is represented by \(Y\). Here, the outcome of interest is income. The arrow from \(X\) to \(Y\) represents the causal effect of \(X\) on \(Y\). This means that a change in \(X\) will lead to a change in \(Y\). The size of the causal effect is represented by \(b\). The model states that if a policy encourages a person to gain an additional year of schooling (\(X\)), then the person’s income (\(Y\)) increases by the amount \(b\). Your task is to estimate \(b\).

If the available data is represented by the causal graph in Figure 1, can it be used to estimate \(b\)? The graph shows there is an unobserved term \(U\) that is also affecting \(Y\). \(U\) may represent unobserved characteristics of the individual or the place where they live. In the example, some people live in Burlington while others live in Northeast Kingdom. Can we disentangle the effect of \(X\) on \(Y\) and the effect of \(U\) on \(Y\)?

A Linear Causal Model

Consider a simple model of our problem. Individual \(i\) earns income \(y_i\) determined by their education level \(x_i\) and unobserved characteristics \(\upsilon_i\). We assume that the relationship is a linear function.

\[ y_i = a + b x_i + \upsilon_i \tag{1}\]

where \(a\) and \(b\) are the parameters that determine how much income individual \(i\) earns and how much of that is determined by their level of education.

Our goal is to estimate these parameters from the data we have.

Simulation of the Causal Effect

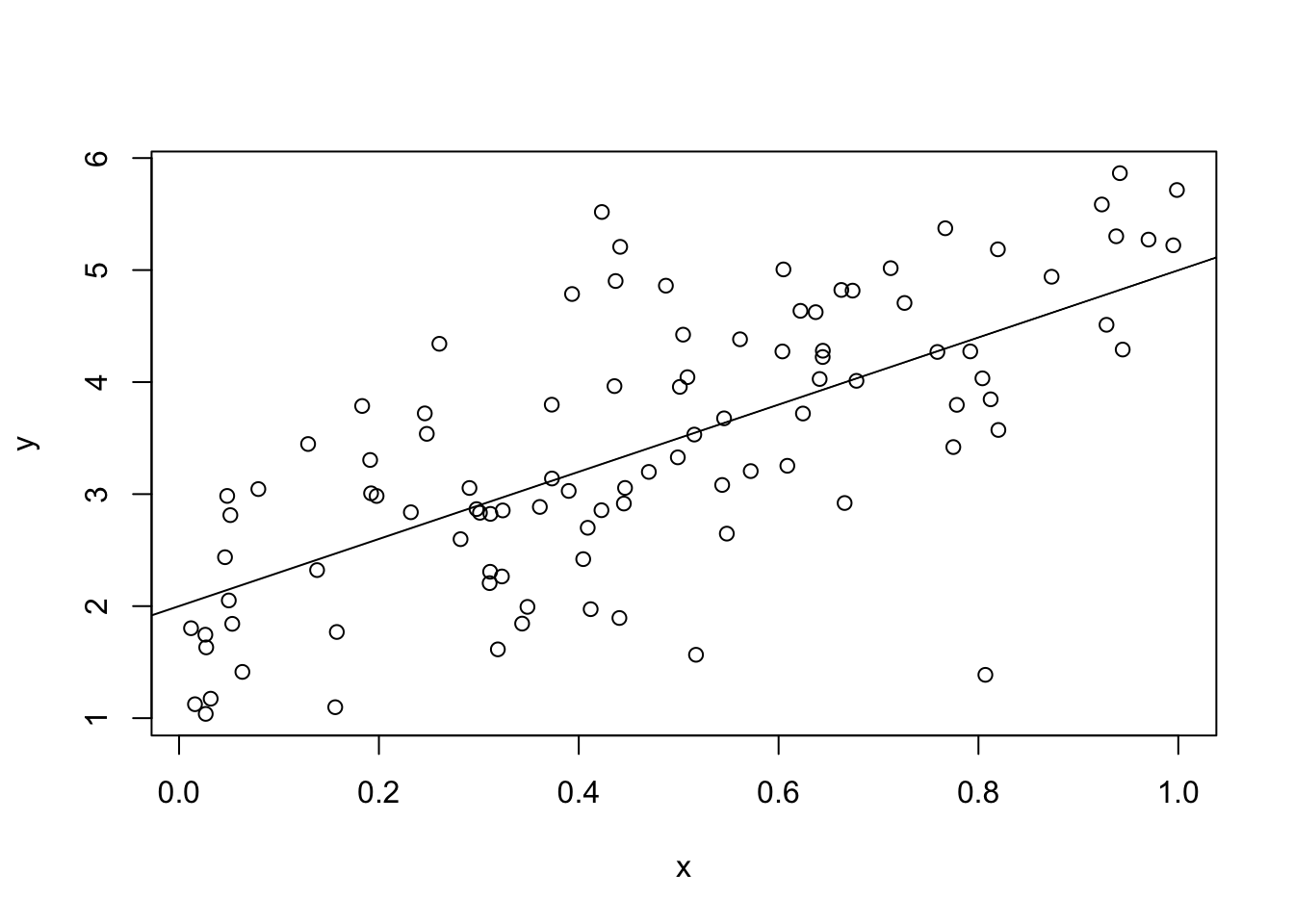

In the simulated data, we have a true linear relationship between \(x\) and \(y\) with an intercept of 2 and a slope of 3. The unobserved characteristic is distributed **standard normal} (\(\upsilon_i \sim \mathcal{N}(0, 1)\)). This means that the unobserved characteristic is drawn from a normal distribution with mean 0 and standard deviation of 1. We want to estimate the value of \(b\), which has a true value of \(3\).

# Create a simulated data set set.seed(123456789)

# use to get the exact same answer each time the code is run.

N <- 100

# Set N to 100, to represent the number of observations.\

a <- 2

b <- 3

# model parameters of interest \# Note the use of \<- to mean "assign".

x <- runif(N)

# create a vector where the observed characteristic, x,\

# is drawn from a uniform distribution.

u <- rnorm(N)

# create a vector where the unobserved characteristic, \# u is drawn from a standard normal distribution.

y <- a + b*x + u

# create a vector y \#* allows a single number to be multiplied through \# the whole vector \# + allows a single number to be added to the whole vector \# or for two vectors of the same length to be added together.A computer does not actually generate random numbers. It generates pseudo-random numbers. These numbers are derived from a distinct function. If you know the function and the current number, then you know exactly what the next number will be. The book takes advantage of this process by using the R function, set.seed() to generate exactly the same numbers every time.

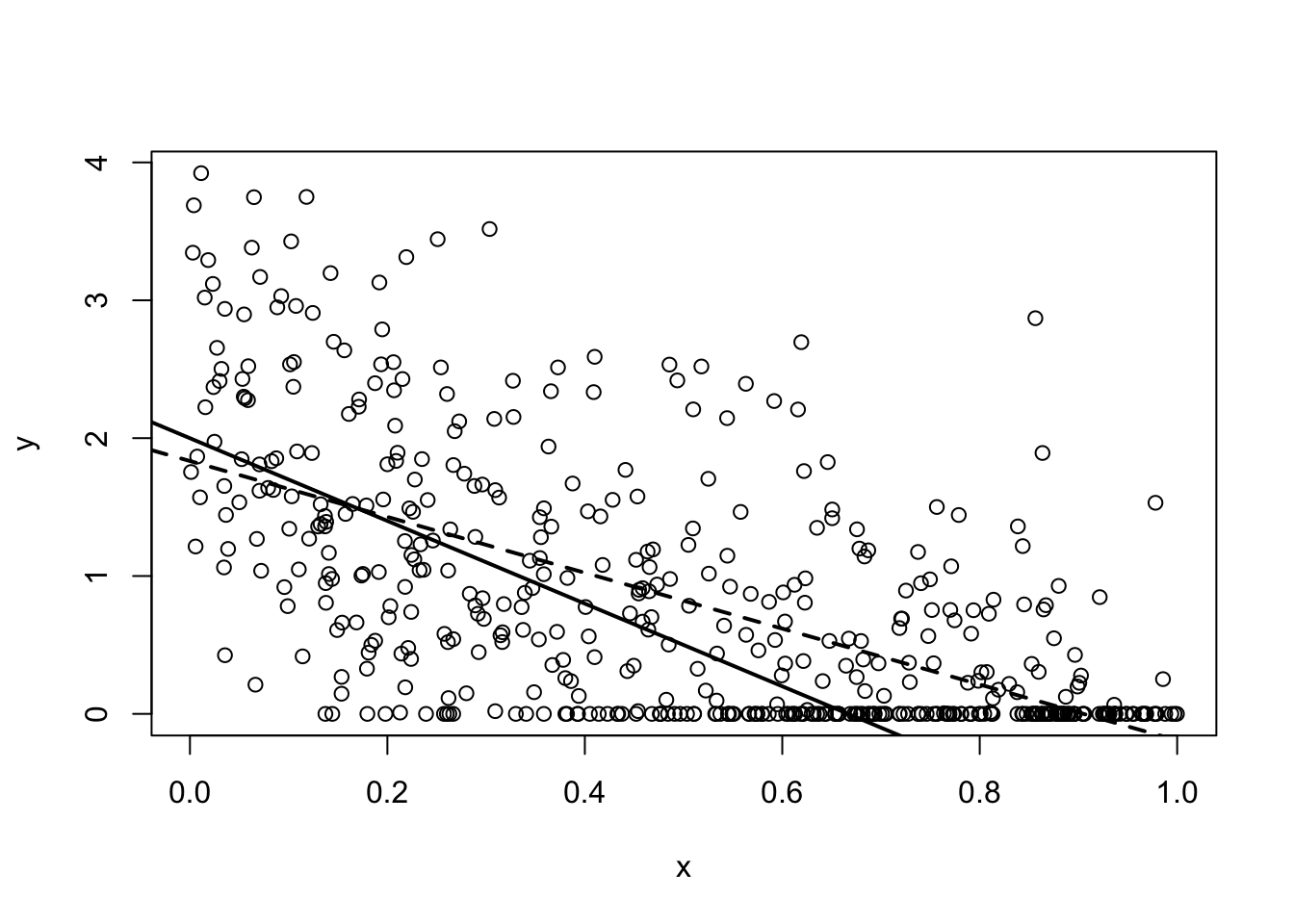

plot(x,y) # creates a simple plot

abline(a = 2,b = 3) # adds a linear function to the plot.

# a - intercept, b - slope.

Figure 2 presents the true relationship between \(X\) and \(Y\) as the solid line sloping up. The observed relationship is represented as the circles randomly spread out over the box. The plot suggests that we can take averages in order to determine the true relationship between \(X\) and \(Y\).

Averaging to Estimate the Causal Effect}

Figure 2 suggests that we can use averaging to disentangle the effect of \(X\) on \(Y\) from the effect of \(U\) on \(Y\). Although the circles are spread out in a cloud, they follow a distinct upward slope. Moreover, the line that sits in the center of the cloud represents the true relationship. If we take values of \(X\) close to 0, then the average of \(Y\) will be near 2. If we look at values of \(X\) close to 1, the average of \(Y\) is near 5. That is, if we change the value of \(X\) by 1, the average value of \(Y\) increases by 3.

mean(y[x > 0.95]) - mean(y[x < 0.05]) [1] 3.626229# mean takes an average \# the logical expression inside the square brackets # creates an index for the elements of y where the logical \# expression in x holds.We can do this with the simulated data. In both cases, we are “close” but not actually equal to 0 or 1. The result is that we find a slope that is equal to 2.72, not 3.

We disentangle the effect of \(X\) and \(U\) by averaging out the unobserved characteristic. By taking the difference in the average of \(Y\) calculated at two different values of \(X\), we can determine how \(X\) affects the average value of \(Y\). In essence, this is what OLS does.

Assumptions of the OLS Model

The major assumptions of the OLS model are that unobserved characteristics enter independently and additively. The first assumption states that conditional on observed characteristics (the \(X\)’s), the unobserved characteristic (the \(U\)) has independent effects on the outcome of interest (\(Y\)). In Figure 1}, this assumption is represented by the fact there is no arrow from \(U\) to \(X\). In the econometrics literature, the assumption is given the awkward appellation, unconfoundedness (Imbens 2010).

To understand the independence assumption, it is helpful to go back to the original problem. We are interested in determining the economic value of attending college. Our estimated model of the effect of schooling on income allows the unobserved characteristics to determine income. Importantly, the model does not allow unobserved characteristics to affect both schooling and income. The model does not allow students from wealthy families to be more likely to go to college and get a good job due to their family background.

The second assumption states that unobserved characteristics enter the model additively.8 The implication is that the effect of the policy cannot vary with unobserved characteristics. Attending college increases everyone’s income by the same amount. This assumption allows the effect of \(U\) and \(X\) to be disentangled using averaging. This assumption also allows the use of the quick and robust least squares algorithm.

The simulated data set satisfies these two assumptions. The unobserved characteristic, \(u\), is drawn independently of \(x\) and it affects \(y\) additively.

Matrix Algebra of the OLS Model

The chapter presents two algorithms for solving the model and revealing the parameter estimates; the algebraic algorithm and the least squares algorithm.9 This section uses matrix algebra to derive and program up the OLS estimator in R.10

Standard Algebra of the OLS Model

Let’s simplify the problem. Consider (Equation 23) and let \(a = 2\), so we only need to determine the parameter \(b\).

\[ b = \frac{y_i - 2 - \upsilon_i}{x_i} \tag{2}\]

The parameter of interest (\(b\)) is a function of both observed terms (\(\{y_i,x_i\}\)) and the unobserved term (\(\upsilon_i\)).

Rearranging (Equation 36}) highlights two problems. The first problem is that the observed terms (\(\{y_i,x_i\}\)) are different for each person \(i\), but (Equation 36) states that \(b\) is exactly the same for each person. The second problem is that the unobserved term (\(\upsilon_i\)) is unobserved. We do not know what it is.

Luckily, we can “kill two birds with one stone.” As (Equation 36) must hold for each individual \(i\) in the data, we can determine \(b\) by averaging. Going back to the original equation ( (Equation 23)), we can take the average of both sides of the equation.

\[ \frac{1}{N}\sum_{i=1}^N y_i = \frac{1}{N}\sum_{i=1}^N (2 + b x_i + \upsilon_i) \tag{3}\]

We use the notation \(\sum_{i=1}^N\) to represent summing over \(N\) individuals in data. The Greek letter \(\sum\) is capital sigma.11

Summing through on the right-hand side, we have the slightly simplified equation below.

\[ \frac{1}{N}\sum_{i=1}^N y_i = 2 + b \frac{1}{N}\sum_{i=1}^N x_i + \frac{1}{N}\sum_{i=1}^N \upsilon_i\\ \\ or\\ \bar{y} = 2 + b \bar{x} + \bar{\upsilon} \tag{4}\]

where \(\bar{y}\) denotes the sample average.

Dividing by \(\bar{x}\) and rearranging to make the parameter of interest (\(b\)) as a function of the observed and unobserved terms:

\[ b = \frac{\bar{y} - 2 - \bar{\upsilon}}{\bar{x}} \tag{5}\]

Unfortunately, we cannot actually solve this equation and determine \(b\). The problem is that we still cannot observe the unobserved terms, the \(\upsilon_i\)’s.

However, we can estimate \(b\) using (Equation 5). The standard notation is \(\hat{b}\).

\[ \hat{b} = \frac{\bar{y} - 2}{\bar{x}} \tag{6}\]

The estimate of \(b\) is a function of things we observe in the data. The good news is that we can calculate \(\hat{b}\). The bad news is that we don’t know if it is equal to \(b\).

How close is our estimate to the true value of interest? How close is \(\hat{b}\) to \(b\)? If we have a lot of data and it is reasonable to assume the mean of the unobserved terms is zero, then our estimate will be very close to the true value. If we assume that \(E(u_i) = 0\) then by the Law of Large Numbers if \(N\) is large, \(\frac{1}{N}\sum_{i=1}^N u_i = 0\) and our estimate is equal to the true value (\(\hat{b} = b\)).12

Algebraic OLS Estimator in R

We can use the algebra presented above as pseudo-code for our first estimator in R.

b_hat <- (mean(y) - 2)/mean(x)

b_hat [1] 3.065963Why does this method not give a value closer to the true value? The method gives an estimate of 3.46, but the true value is 3. One reason may be that the sample size is not very large. You can test this by running the simulation and increasing \(N\) to 1,000 or 10,000. Another reason may be that we have simplified things to force the intercept to be 2. Any variation due to the unobserved term will be taken up by the slope parameter giving an inaccurate or biased estimate.13

Using Matrices

In general, of course, we do not know \(a\) and so need to jointly solve for both \(a\) and \(b\). We use matrix algebra to solve this more complicated problem.

- shows the equations representing the data. It represents a system of 100 linear equations.

\[ \left [\begin{array}{c} y_1\\ y_2\\ y_3\\ y_4\\ y_5\\ \vdots \end{array} \right] = \left [\begin{array}{c} 2 + 3x_1 + u_1\\ 2 + 3x_2 + u_2\\ 2 + 3x_3 + u_3\\ 2 + 3x_4 + u_4\\ 2 + 3x_5 + u_5\\ \vdots \end{array} \right] \tag{7}\]

Using matrix algebra, we can rewrite the system of equations.

\[ \left [\begin{array}{c} y_1\\ y_2\\ y_3\\ y_4\\ y_5\\ \vdots \end{array} \right] = \left [\begin{array}{cc} 1 & x_1\\ 1 & x_2\\ 1 & x_3\\ 1 & x_4\\ 1 & x_5\\ \vdots \end{array} \right] \left [\begin{array}{c} 2\\ 3 \end{array} \right] + \left [\begin{array}{c} u_1\\ u_2\\ u_3\\ u_4\\ u_5\\ \vdots \end{array} \right] \tag{8}\]

Notice how matrix multiplication is done. Standard matrix multiplication follows the rule below.

\[ \left[\begin{array}{cc} a & b\\ c & d \end{array}\right] \left[\begin{array}{cc} e & f\\ g & h \end{array}\right] = \left[\begin{array}{cc} ae + bg & af + bh\\ ce + dg & cf + dh \end{array}\right] \tag{9}\]

Check what happens if you rearrange the order of the matrices. Do you get the same answer?14

Multiplying Matrices in R

We now illustrate matrix multiplication with R.

x1 = x[1:5]

# only include the first 5 elements

X1 = cbind(1,x1)

# create a matrix with a columns of 1s \# cbind means column bind -

# it joins columns of the same length together.

# It returns a "matrix-like" object.

# Predict value of y using the model

X1%*%c(2,3) [,1]

[1,] 3.513248

[2,] 2.845124

[3,] 4.375409

[4,] 3.268176

[5,] 3.811467# See how we can add and multiply vectors and numbers in R. \# In R %*% represents standard matrix multiplication. \# Note that R automatically assumes c(2,3) is a column vector \# Compare to the true values

y[1:5] [1] 4.423434 2.597724 4.274064 2.856203 4.273605In the simulated data we see the relationship between \(y\) and \(x\). Why aren’t the predicted values equal to the true values?15

Matrix Estimator of OLS

It is a lot more compact to represent the system in (1) with matrix notation.

\[ \mathbf{y} = \mathbf{X} \beta + \mathbf{u} \tag{10}\]

where \(\mathbf{y}\) is a \(100 \times 1\) column vector of the outcome of interest \(y_i\), \(\mathbf{X}\) is a \(100 \times 2\) rectangular matrix of the observed explanatory variables \(\{1, x_i\}\), \(\beta\) is a \(2 \times 1\) column vector of the model parameters \(\{a, b\}\) and \(\mathbf{u}\) is a \(100 \times 1\) column vector of the unobserved term \(u_i\).

To solve the system we can use the same “division” idea that we used for standard algebra. In matrix algebra, we do division by multiplying the inverse of the matrix (\(\mathbf{X}\)) by both sides of the equation. Actually, this is how we do division in normal algebra, it is just that we gloss over it and say we “moved” it from one side of the equation to the other.

The problem is that our matrix is not invertible. Only full-rank square matrices are invertible and our matrix is not even square.16 The solution is to create a generalized matrix inverse.

We can make our matrix (\(\mathbf{X}\)) square by pre-multiplying it by its transpose.17 The notation for this is \(\mathbf{X}'\). Also remember that in matrix multiplication order matters! Pre-multiplying means placing the transposed matrix to the left of the original matrix.

\[ \mathbf{X}' \mathbf{y} = \mathbf{X}' \mathbf{X} \beta + \mathbf{X}' \mathbf{u} \tag{11}\]

The matrix \(\mathbf{X}' \mathbf{X}\) is a \(2 \times 2\) matrix as it is a \(2 \times 100\) matrix multiplied by a \(100 \times 2\) matrix.18

To solve for the parameters of the model (\(\beta\)), we pre-multiply both side of (Equation 128) by the matrix we created. Thus we have the following equation.

\[ (\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}' \mathbf{y} = (\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}' \mathbf{X} \beta + (\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}' \mathbf{u} \tag{12}\]

Simplifying and rearranging we have the following linear algebra derivation of the model.

\[ \beta = (\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}' \mathbf{y} - (\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}' \mathbf{u} \tag{13}\]

From this we have the matrix algebra based estimate of our model.

\[ \hat{\beta} = (\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}' \mathbf{y} \tag{14}\]

Remember that we never observe \(u\) and so we do not know the second part of (Equation 13).19

We didn’t just drop the unobserved term, we averaged over it. If the assumptions of OLS hold, then summation \((\mathbf{X}' \mathbf{X})^{-1} \mathbf{X}' \mathbf{u}\) will generally be close to zero. This issue is discussed more in Chapter 3 and Appendix A.

Matrix Estimator of OLS in R

We can follow a similar procedure for inverting a matrix using R. The matrix of explanatory variables includes a first column of 1’s which accounts for the intercept term.

X <- cbind(1,x)

# remember the column of 1's Next we need to transpose the matrix. To illustrate a matrix transpose consider a matrix \(\mathbf{A}\) which is \(3 \times 2\) (3 rows and 2 columns) and has values 1 to 6.

A <- matrix(c(1:6),nrow=3)

# creates a 3 x 2 matrix.

A [,1] [,2]

[1,] 1 4

[2,] 2 5

[3,] 3 6# See how R numbers elements of the matrix.

t(A) [,1] [,2] [,3]

[1,] 1 2 3

[2,] 4 5 6# transpose of matrix A

t(A)%*%A [,1] [,2]

[1,] 14 32

[2,] 32 77# matrix multiplication of the transpose by itself In our problem the transpose multiplied by itself gives the following \(2 \times 2\) matrix.

t(X)%*%X x

100.00000 46.20309

x 46.20309 28.68459In R, the matrix inverse can be found using the solve() function.

solve(t(X)%*%X) x

0.03909400 -0.06296981

x -0.06296981 0.13628919# solves for the matrix inverse. The matrix algebra presented (Equation 14}) is pseudo-code for the operation in R.

beta_hat <- solve(t(X)%*%X)%*%t(X)%*%y

beta_hat [,1]

1.939289

x 3.197364Our estimates are \(\hat{a} = 2.18\) and \(\hat{b} = 3.09\). These values are not equal to the true values of \(a = 2\) and \(b = 3\), but they are fairly close. Try running the simulation again, but changing \(N\) to 1,000. Are the new estimates closer to the their true values? Why?20

We can check that we averaged over the unobserved term to get something close to 0.

solve(t(X)%*%X)%*%t(X)%*%u [,1]

-0.06071137

x 0.19736442Least Squares Method for OLS

An alternative algorithm is least squares. This section presents the algebra of least squares and compares the linear model (lm()) estimator to the matrix algebra version derived above.

Moment Estimation

Least squares requires that we assume the unobserved characteristic has a mean of 0. We say that the first moment of the unobserved characteristic is 0. A moment refers to the expectation of a random variable taken to some power. The first moment is the expectation of the variable taken to the power of 1. The second moment is the expectation of the variable taken to the power of 2, etc.

\[ E(u_i) = 0 \tag{15}\]

From (Equation 23) we can rearrange and substitute into (Equation 15).

\[ E(y_i - a - b x_i) = 0 \tag{16}\]

- states that the expected difference, or mean difference, between \(y_i\) and the predicted value of \(y_i\) (\(a + bx_i\)) is 0.

The sample analog of the left-hand side of (2}) is the following average.

\[ \frac{1}{N} \sum_{i=1}^{N} (y_i - a - b x_i) \tag{17}\]

The sample equivalent of the mean is the average. This approach to estimation is called analog estimation (Charlies F. Manski 1988). We can make this number as close to zero as possible by minimizing the sum of squares, that is, finding the “least squares.”

Algebra of Least Squares

Again it helps to illustrate the algorithm by solving the simpler problem. Consider a version of the problem in which we are just trying to estimate the \(b\) parameter.

We want to find the \(b\) such that (2) holds. Our sample analog is to find the \(\hat{b}\) that makes (Equation 17) as close to zero as possible.

\[ \min_{\hat{b}} \frac{1}{N}\sum_{i=1}^N (y_i - 2 - \hat{b} x_i)^2 \tag{18}\]

We can solve for \(\hat{b}\) by finding the first order condition of the problem.21

\[ \frac{1}{N} \sum_{i=1}^N -2x_i(y_i - 2 - \hat{b}x_i) = 0 \tag{19}\]

Note that we can divide both sides by -2, giving the following rearrangement.

\[ \hat{b} = \frac{\frac{1}{N} \sum_{i=1}^N x_i y_i - 2 \frac{1}{N} \sum_{i=1}^N x_i}{\frac{1}{N} \sum_{i=1}^N x_i x_i} \tag{20}\]

Our estimate of the relationship \(b\) is equal to a measure of the covariance between \(X\) and \(Y\) divided by the variance of \(X\). This is the sense that we can think of our OLS estimate as a measure of the correlation between the independent variable (the \(X\)’s) and the outcome variable (the \(Y\)).

Estimating Least Squares in R

We can program the least squares estimator in two ways. First, we can solve the problem presented in (Equation 18). Second, we can use the solution to the first order condition presented in (Equation 20).

optimize(function(b) sum((y - 2 - b*x)^2), c(-10,10))$minimum[1] 3.099575# optimize() is used when there is one variable.

# note that the function can be defined on the fly

# $minimum presents one of the outcomes from optimize() We can use optimize() to find a single optimal value of a function. We can use function() to create the sum of squared difference function, which is the first argument of optimize(). The procedure searches over the interval which is the second argument. Here it searches over the real line from -10 to 10 (c(-10,10)).22 It looks for the value that minimizes the sum of the squared differences. The result is 3.37. Why do you think this is so far from the true value of 3?23

Alternatively, we can use the first order condition.

(mean(x*y) - 2*mean(x))/mean(x*x) [1] 3.099575Solving out (Equation 20) in R gives an estimate of 3.37. Why do these two approaches give identical answers?

The lm() Function

The standard method for estimating OLS in R is to use the lm() function. This function creates an object in R. This object keeps track of various useful things such as the vector of parameter estimates.

data1 <- as.data.frame(cbind(y,x))

# creates a data.frame() object which will be used in the

# next section.

lm1 <- lm(y ~ x)

# lm creates a linear model object

length(lm1) # reports the number of elements of the list object [1] 12names(lm1) # reports the names of the elements [1] "coefficients" "residuals" "effects" "rank"

[5] "fitted.values" "assign" "qr" "df.residual"

[9] "xlevels" "call" "terms" "model" We can compare the answers from the two algorithms, the lm() procedure and the matrix algebra approach.

lm1$coefficients (Intercept) x

1.939289 3.197364 # reports the coefficient estimates

# $ can be used to call a particular element from the list.

# lm1[1] reports the same thing.

t(beta_hat) # results from the matrix algebra. x

[1,] 1.939289 3.197364Comparing the two different approaches in R, we can see they give identical estimates. This is not coincidental. The solve() function is based on a least squares procedure. It turns out there is no real computational difference between an “algebra” approach and a “least squares” approach in R.

Measuring Uncertainty

How do we give readers a sense of how accurate our estimate is? The previous section points out that the estimated parameter is not equal to the true value and may vary quite a bit from the true value. Of course, in the simulations we know exactly what the true value is. In real world econometric problems, we do not.

This section provides a brief introduction to some of the issues that arise when thinking about reporting the uncertainty around our estimate. Appendix A discusses these issues in more detail.

Data Simulations

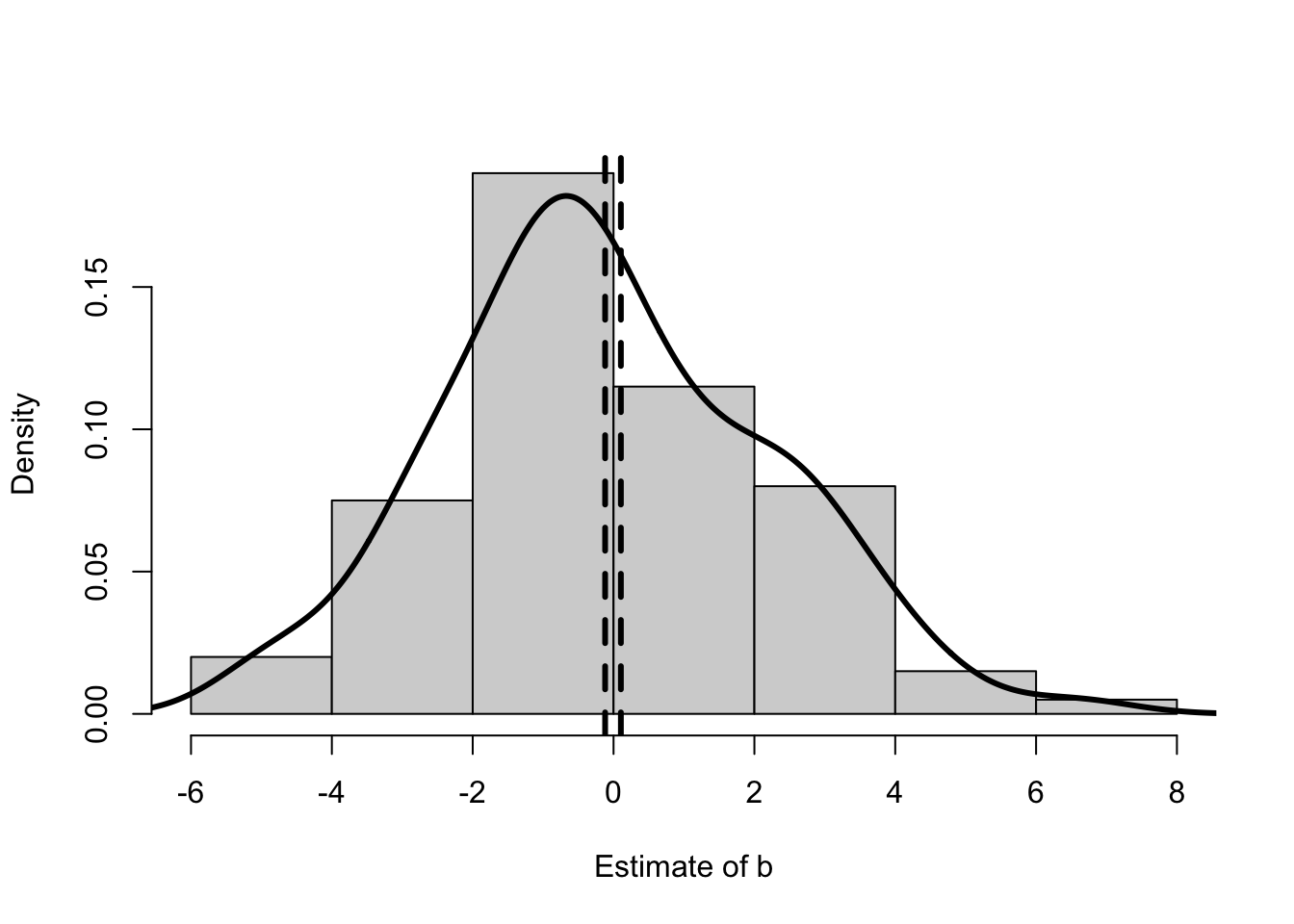

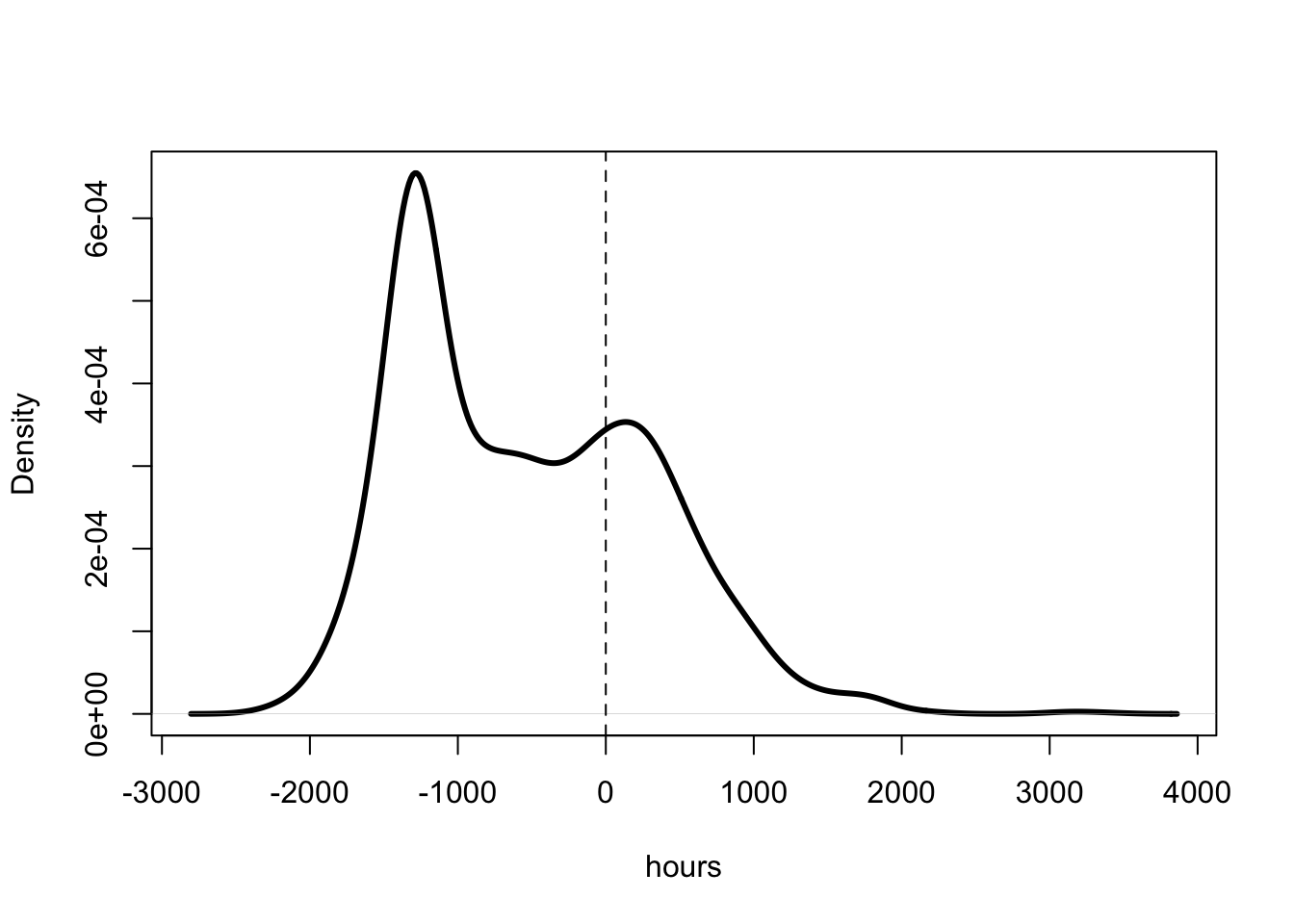

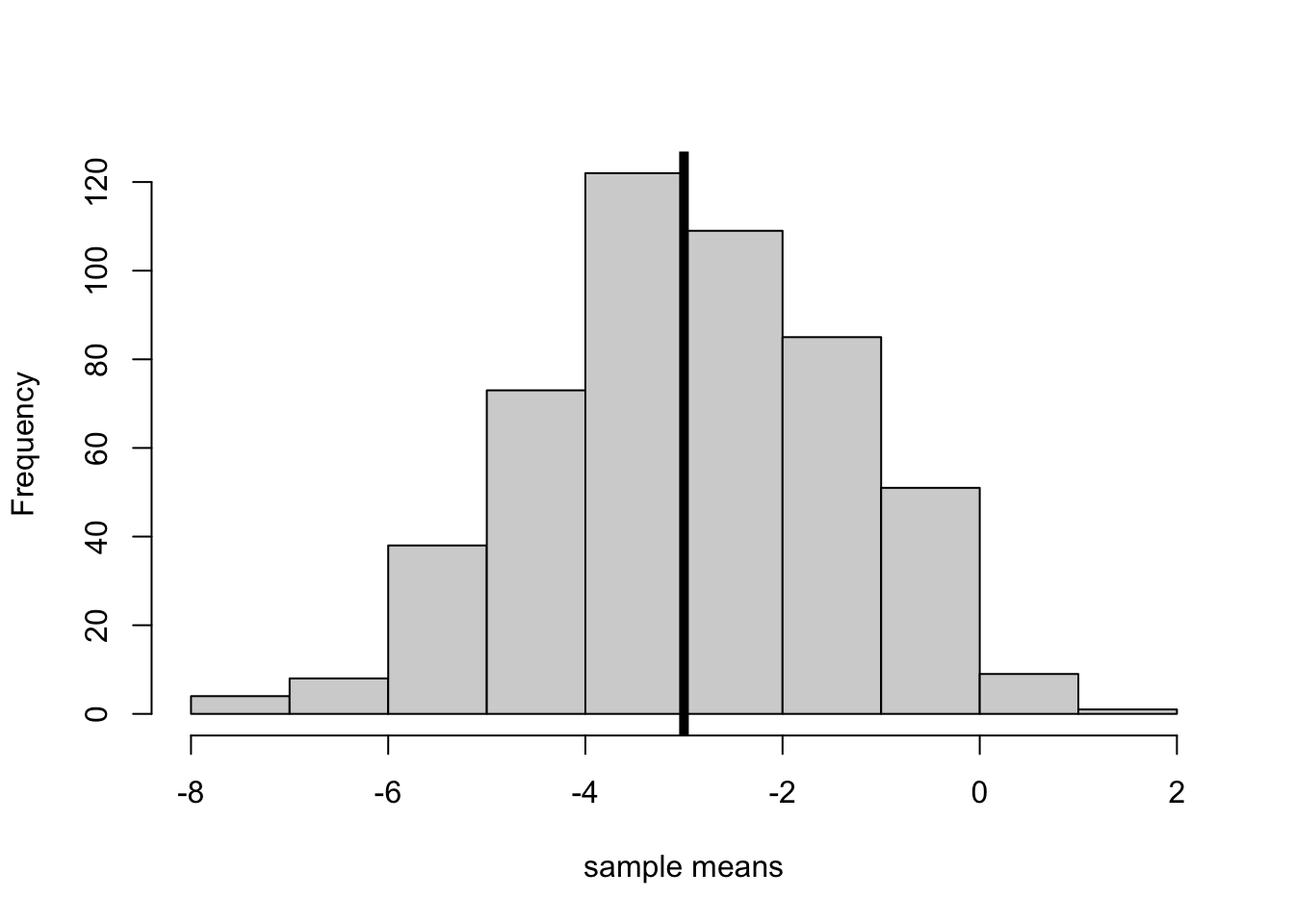

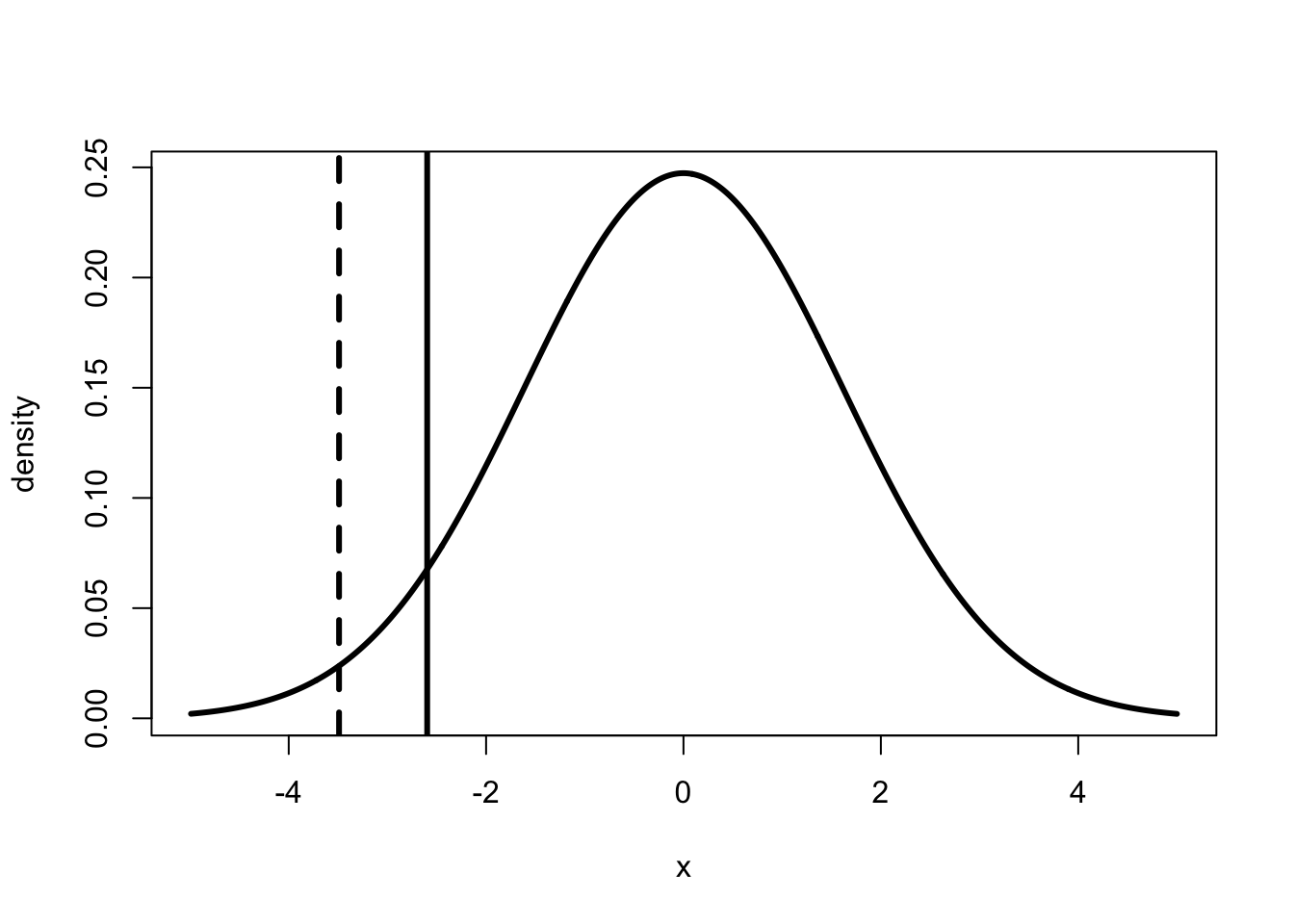

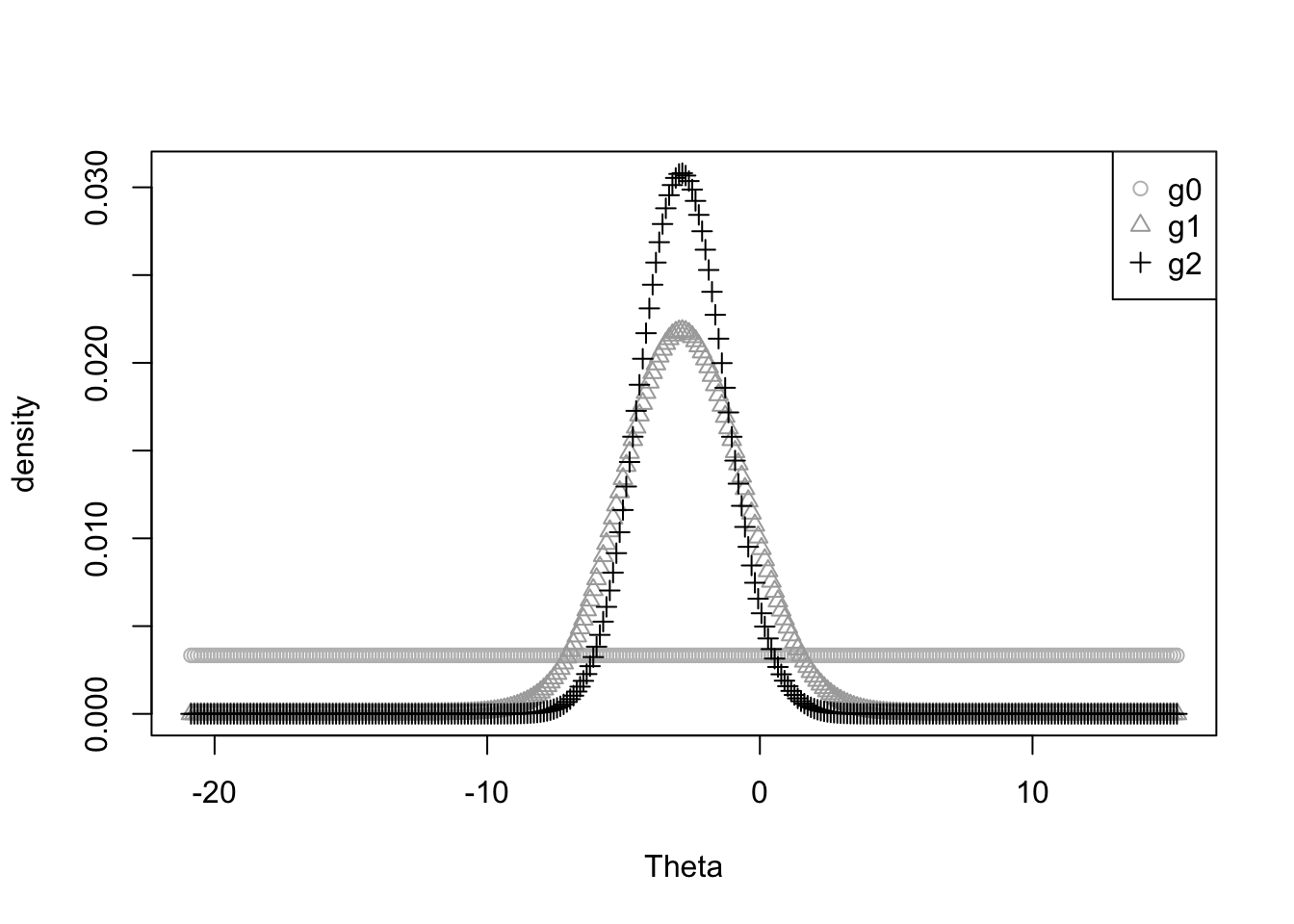

Standard statistical theory assumes that the data we observe is one of many possible samples. Luckily, with a computer we can actually see what happens if we observe many possible samples. The simulation is run 1,000 times. In each case a sample of 100 is drawn using the same parameters as above. In each case, OLS is used to estimate the two parameters \(\hat{a}\) and \(\hat{b}\).

set.seed(123456789)

K <- 1000

a <- 2

b <- 3

sim_res <- matrix(NA,K,2)

# creates a 1000 x 2 matrix filled with NAs.

# NA denotes a missing number in R.

# I prefer using NA rather than an actual number like 0.

# It is better to create the object to be filled

# prior to running the loop.

# This means that R has the object in memory.

# This simple step makes loops in R run a lot faster.

for (k in 1:K) { # the "for loop" starts at 1 and moves to K

x <- runif(N)

u <- rnorm(N, mean = 0, sd = 2)

y <- a + b*x + u

sim_res[k,] <- lm(y ~ x)$coefficients

# inputs the coefficients from simulated data into the

# kth row of the matrix.

# print(k)

# remove the hash to keep track of the loop

}

colnames(sim_res) <- c("Est of a", "Est of b")

# labels the columns of the matrix. require(knitr)Loading required package: knitr# summary produces a standard summary of the matrix.

sum_tab <- summary(sim_res)

rownames(sum_tab) <- NULL # no row names.

# NULL creates an empty object in R.

kable(sum_tab)| Est of a | Est of b |

|---|---|

| Min. :0.7771 | Min. :0.5079 |

| 1st Qu.:1.7496 | 1st Qu.:2.5155 |

| Median :2.0295 | Median :2.9584 |

| Mean :2.0260 | Mean :2.9666 |

| 3rd Qu.:2.3113 | 3rd Qu.:3.4122 |

| Max. :3.5442 | Max. :5.9322 |

Table 1 summarizes the results of the simulation. On average the estimated parameters are quite close to the true values, differing by 0.026 and 0.033 respectively. These results suggest the estimator is unbiased. An estimator is unbiased if it is equal to the true value on average. The OLS estimator is unbiased in this simulation because the unobserved term is drawn from a distribution with a mean of 0.

The table also shows that our estimates could differ substantially from the true value. At worst, the estimated value of the \(b\) parameter could be almost twice its true value. As an exercise, what happens when the sample size is decreased or increased? Try \(N = 10\) or \(N = 5,000\).

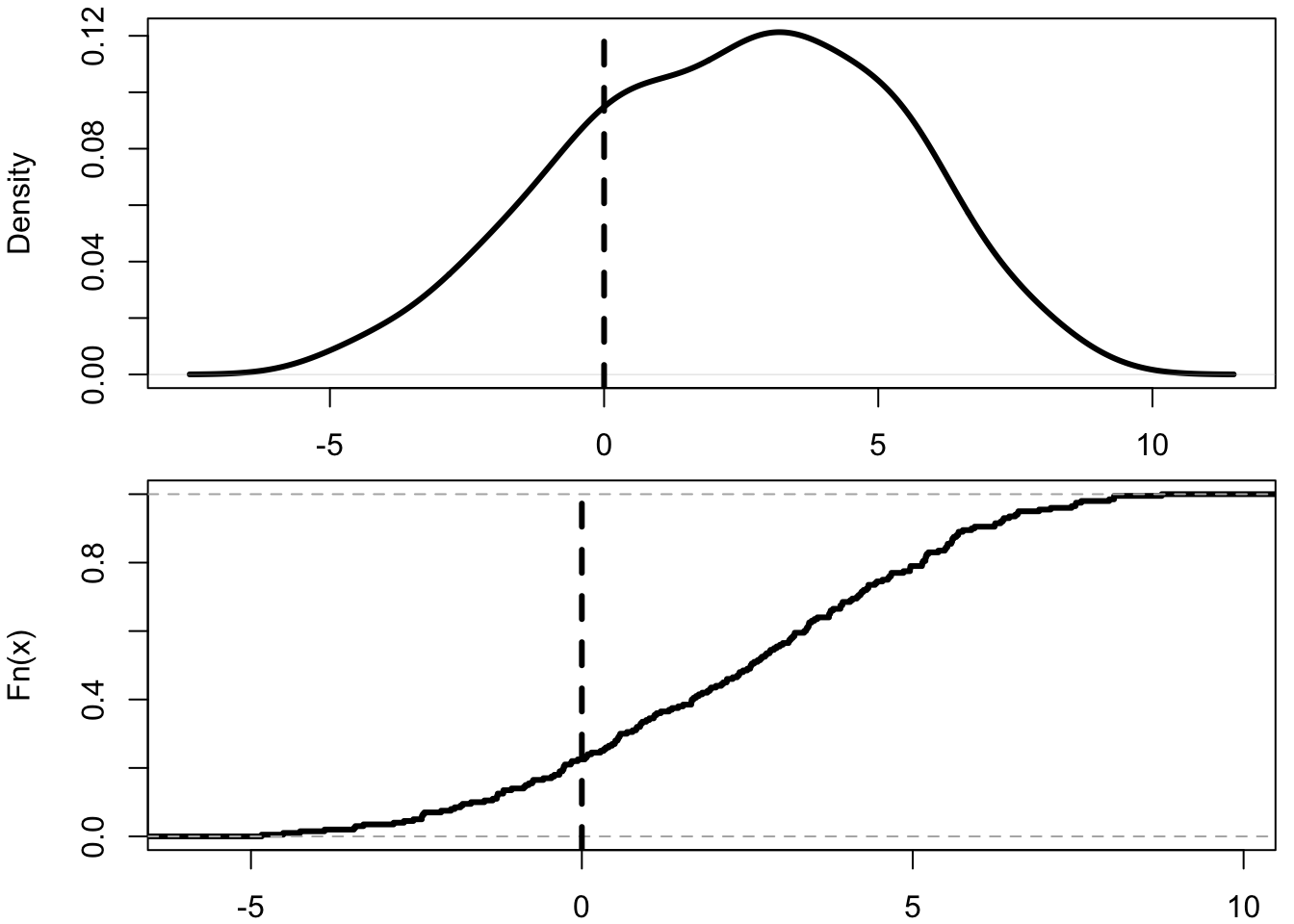

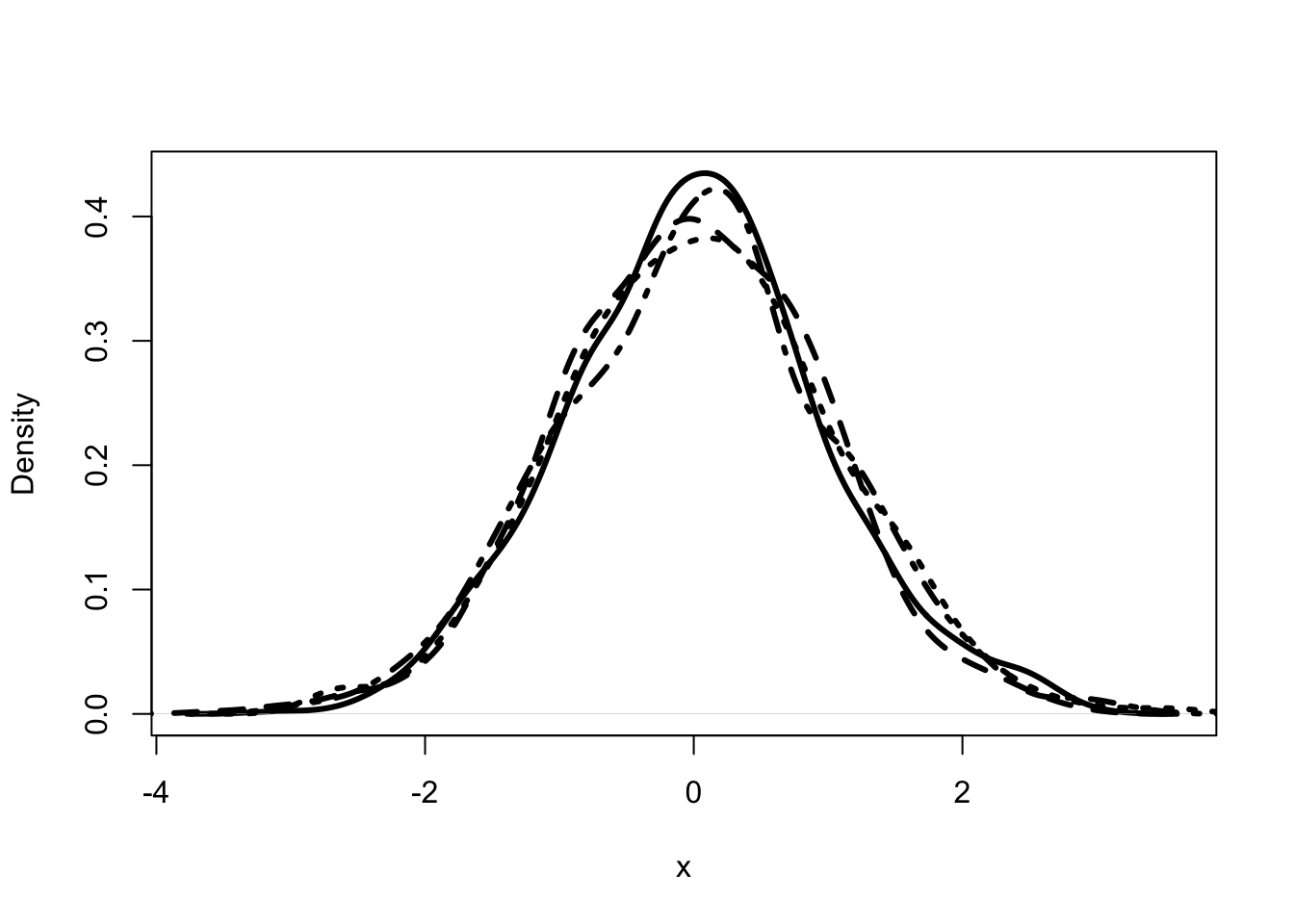

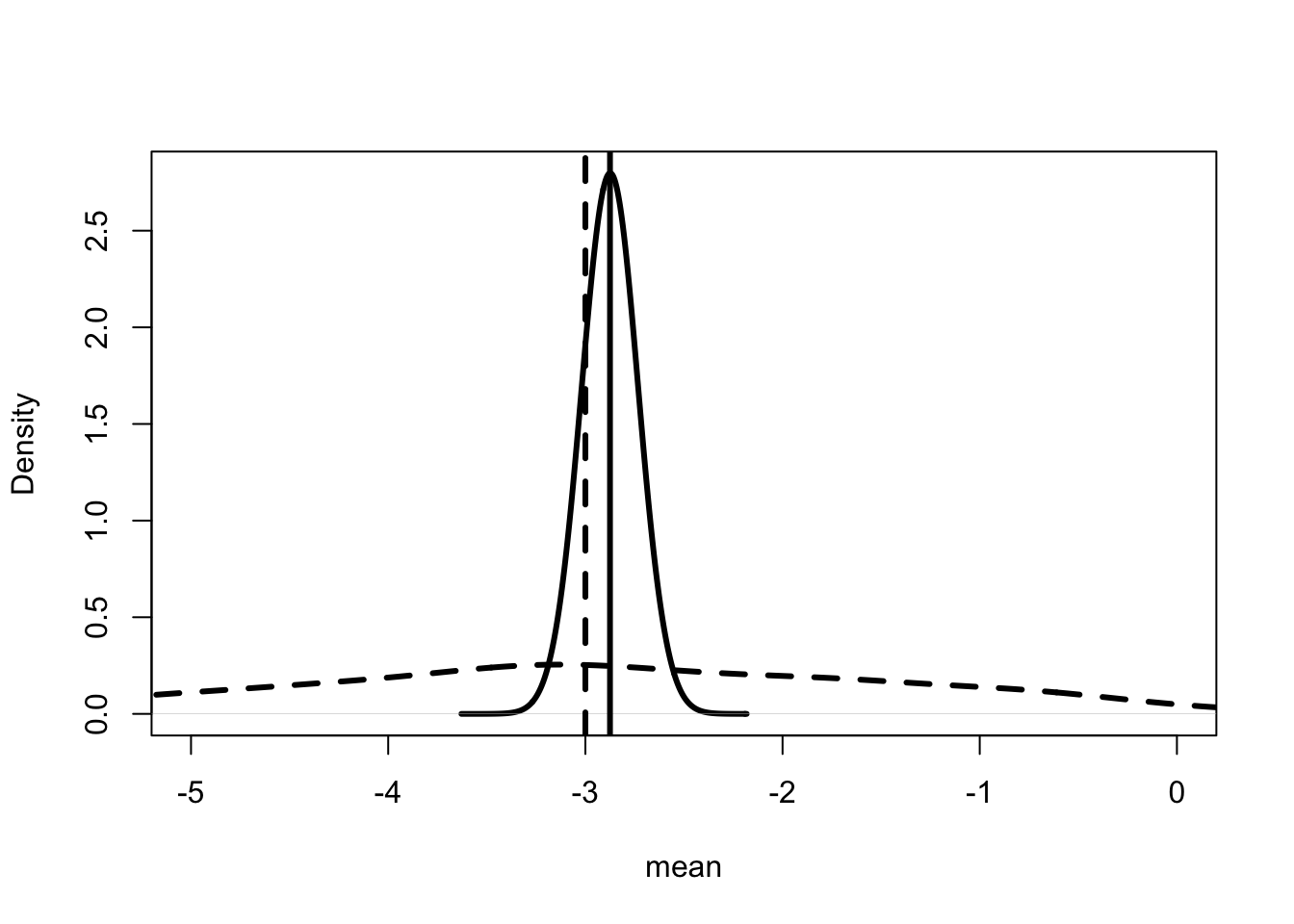

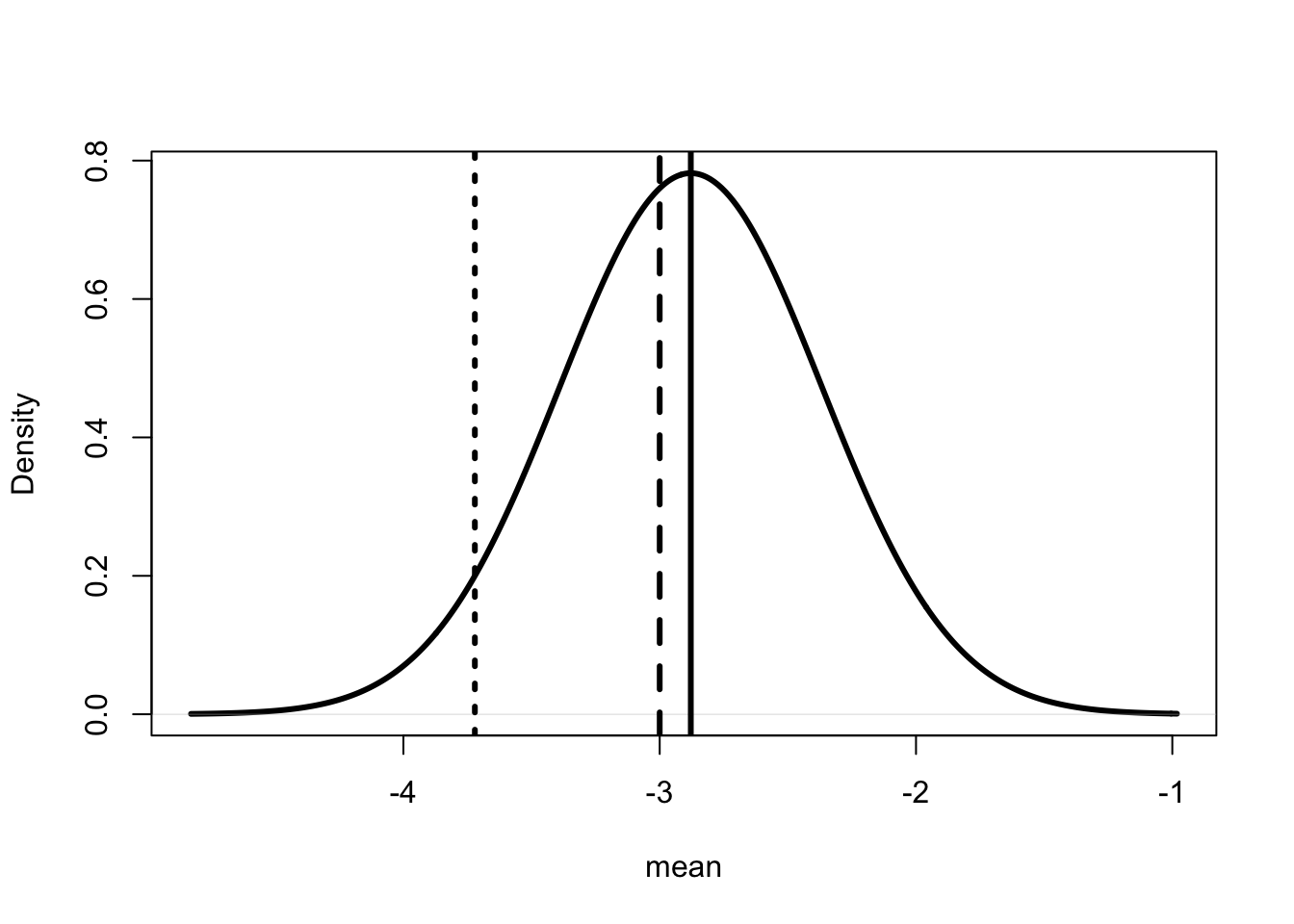

Introduction to the Bootstrap

It would be great to present the analysis above for any estimate. Of course we cannot do that because we do not know the true values of the parameters. The Stanford statistician, Brad Efron, argues we can use the analogy principle. We don’t know the true distribution but we do know its sample analog, which is the sample itself.

Efron’s idea is called bootstrapping. And no, I have no idea what a bootstrap is. It refers to the English saying, “to pull one’s self up by his bootstraps.” In the context of statistics, it means to use the statistical sample itself to create an estimate of how accurate our estimate is. Efron’s idea is to repeatedly draw pseudo-samples from the actual sample, randomly and with replacement, and then for each pseudo-sample re-estimate the model.

If our sample is pretty large then it is a pretty good representation of the true distribution. We can create a sample analog version of the thought exercise above. We can recreate an imaginary sample and re-estimate the model on that imaginary sample. If we do this a lot of times we get a distribution of pseudo-estimates. This distribution of pseudo-estimates provides us with information on how uncertain our original estimate is.

Bootstrap in R

We can create a bootstrap estimator in R. First, we create a simulated sample data set. The bootstrap code draws repeatedly from the simulated sample to create 1000 pseudo-samples. The code then creates summary statistics of the estimated results from each of the pseudo-samples.

set.seed(123456789)

K <- 1000

bs_mat <- matrix(NA,K,2)

for (k in 1:K) {

index_k <- round(runif(N,min=1,max=N))

# creates a pseudo-random sample.

# draws N elements uniformly between 1 and N.

# rounds all the elements to the nearest integer.

data_k <- data1[index_k,]

bs_mat[k,] <- lm(y ~ x, data=data_k)$coefficients

# print(k)

}

tab_res <- matrix(NA,2,4)

tab_res[,1] <- colMeans(bs_mat)

# calculates the mean for each column of the matrix.

# inputs into the first column of the results matrix.

tab_res[,2] <- apply(bs_mat, 2, sd)

# a method for having the function sd to act on each

# column of the matrix. Dimension 2 is the columns.

# sd calculates the standard deviation.

tab_res[,3] <- quantile(bs_mat[,1],c(0.025,0.975))

# calculates quantiles of the column at 2.5% and 97.5%.

tab_res[,4] <- quantile(bs_mat[,2],c(0.025,0.975))

colnames(tab_res) <- c("Mean", "SD", "2.5%", "97.5%")

rownames(tab_res) <- c("Est of a","Est of b")

# labels the rows of the matrix. kable(tab_res)| Mean | SD | 2.5% | 97.5% | |

|---|---|---|---|---|

| Est of a | 1.950349 | 0.1497943 | 1.663262 | 2.608809 |

| Est of b | 3.165321 | 0.2788436 | 2.241956 | 3.683724 |

Table 2 presents the bootstrapped estimates of the OLS model on the simulated data. The table presents the mean of the estimates from the pseudo-samples, the standard deviation of the estimates and the range which includes 95% of the cases. It is good to see that the true values do lie in the 95% range.

Standard Errors

kable(summary(lm1)[4])

|

Table 3 presents the standard results that come out of the lm() procedure in R. Note that these are not the same as the bootstrap estimates.24 The second column provides information on how uncertain the estimates are. It gives the standard deviation of the imaginary estimates assuming that the imaginary estimates are normally distributed. The last two columns present information which may be useful for hypothesis testing. This is discussed in detail in Appendix A.

The lm() procedure assumes the difference between the true value and the estimated value is distributed normally. That turns out to be the case in this simulation. In the real world there may be cases where it may be less reasonable to make this assumption.

Returns to Schooling

Now we have the basics of OLS, we can start on a real problem. One of the most studied areas of microeconometrics is returns to schooling (Card 2001). Do policies that encourage people to get more education, improve the economic outcomes? One way to answer this question is to use survey data to determine how much education a person received and how much income they earned.

Berkeley labor economist David Card, analyzed this question using survey data on men aged between 14 and 24 in 1966 (Card 1995). The data set provides information on each man’s years of schooling and income. These are measured ten years later, in 1976.

A Linear Model of Returns to Schooling

Card posits that income in 1976 is determined by the individual’s years of schooling.

\[ \mathrm{Income}_i = \alpha + \beta \mathrm{Education}_i + \mathrm{Unobserved}_i \tag{21}\]

In (Equation 28), income in 1976 for individual \(i\) is determined by their education level and other unobserved characteristics such as the unemployment rate in the place they live. We want to estimate \(\beta\).

NLSM Data

The National Longitudinal Survey of Older and Younger Men (NLSM) is a survey data set used by David Card. Card uses the young cohort who were in the late teens and early twenties in 1966. Card’s version can be downloaded from his website, http://davidcard.berkeley.edu/data_sets.html.25 The original data is a .dat file. The easiest way to wrangle the data is to import it into excel as a “fixed width” file and then copy the variable names from the codebook file. You can then add the variable names to the first line of the excel file and save it as a .csv file. In the code below I named the file nls.csv.26

x <- read.csv("nls.csv", as.is=TRUE)

# I follow the convention of defining any data set as x

# (or possibly y or z).

# I set the working directory to the one where my main

# script is saved, which is the same place as the data.

# Sessions -> Set Working Directory -> Source File Location

# It is important to add "as.is = TRUE",

# otherwise R may change your variables into "factors"

# which is confusing and leads to errors.

# factors can be useful when you want to instantly create

# a large number of dummy variables from a single variable.

# However, it can make things confusing if you don't realize

# what R is doing.

x$wage76 <- as.numeric(x$wage76) Warning: NAs introduced by coercionx$lwage76 <- as.numeric(x$lwage76)Warning: NAs introduced by coercion# changes format from string to number.

# "el wage 76" where "el" is for "log"

# Logging helps make OLS work better. This is because wages

# have a skewed distribution, and log of wages do not.

x1 <- x[is.na(x$lwage76)==0,]

# creates a new data set \# with missing values removed

# is.na() determines missing value elements ("NA"s) After reading in the data, the code changes the format of the data. Because some observations have missing values, the variables will import as strings. Changing the string to a number creates a missing value code NA. The code then drops the missing variables.

Plotting Returns to Schooling

lm1 <- lm(lwage76 ~ ed76, data=x1)

plot(x1$ed76,x1$lwage76, xlab="Years of Education",

ylab="Log Wages (1976)")

# plot allows us to label the charts

abline(a=lm1$coefficients[1],b=lm1$coefficients[2],lwd=3)

Figure 3 presents a simple plot of the relationship between log wages in 1976 and the years of schooling. Again we see a distinct positive relationship even though the observations are spread out in a cloud. We can see that people who do not graduate from high school (finish with less than 12 years of education) earn less on average than those who attend college (have more than 12 years of education). There is a lot of overlap between the distributions.

Estimating Returns to Schooling

kable(summary(lm1)[4])

|

We can use OLS to estimate the average effect of schooling on income. Table 4 gives the OLS estimate. The coefficient estimate of the relationship between years of schooling and log wages is 0.052. The coefficient is traditionally interpreted as the percentage increase in wages associated with a 1 year increase in schooling (Card 1995). This isn’t quite right, but it is pretty close. Below shows the predicted percentage change in wages, measured at the mean of wages, being 5.4%. Compare this to reading the coefficient as 5.2%.

exp(log(mean(x1$wage76)) + lm1$coefficients[2])/mean(x1$wage76) ed76

1.053475 This estimate suggests high returns to schooling. However, the model makes a number of important assumptions about how the data is generated. The following chapters discuss the implications of those assumptions in detail.

Discussion and Further Reading

OLS is the “go to” method for estimation in microeconometrics. The method makes two strong assumptions. First, the unobserved characteristics must be independent of the policy variable. Second, the unobserved characteristics must affect the outcome variable additively. These two assumptions allow averaging to disentangle the causal effect from the effect of unobserved characteristics. We can implement the averaging using one of two algorithms, a matrix algebra-based algorithm or the least squares algorithm. In R, the two algorithms are computationally equivalent. The next two chapters consider weakening the assumptions. Chapter 2 takes the first step by allowing the OLS model to include more variables. Chapter 3 considers cases where neither assumption holds.

Measuring and reporting uncertainty has been the traditional focus of statistics and econometrics. This book takes a more “modern” approach to focus attention on issues around measuring and determining causal effects. This chapter takes a detour into the traditional areas. It introduces the bootstrap method and discusses traditional standard errors. These topics are discussed in more detail in the Appendix.

The chapter introduces causal graphs. Pearl and Mackenzie (2018) provide an excellent introduction to the power of this modeling approach.

To understand more about the returns to schooling literature, I recommend Card (2001). Chapters 2 and 3 replicate much of the analysis presented in Card (1995)}. The book returns to the question of measuring returns to schooling in Chapters 2, 3, 6, 8 and 11.

Multiple Regression

Introduction

In Chapter 1 there is only one explanatory variable. However, in many questions we expect multiple explanations. In determining a person’s income, the education level is very important. There is clear evidence that other factors are also important, including experience, gender and race. Do we need to account for these factors in determining the effect of education on income?

Yes. In general, we do. Goldberger (1991) characterizes the problem as one of running a short regression when the data is properly explained by a long regression. This chapter discusses when we should and should not run a long regression. It also discusses an alternative approach. Imagine a policy that can affect the outcome either directly or indirectly through another variable. Can we estimate both the direct effect and the indirect effect? The chapter combines OLS with directed acyclic graphs (DAGs) to determine how a policy variable affects the outcome. It then illustrates the approach using actual data on mortgage lending to determine whether bankers are racist or greedy.

Long and Short Regression

Goldberger (1991) characterized the problem of omitting explanatory variables as the choice between long and short regression. The section considers the relative accuracy of long regression in two cases: when the explanatory variables are independent of each other, and when the explanatory variables are correlated.

Using Short Regression

Consider an example where true effect is given by the following long regression. The dependent variable \(y\) is determined by both \(x\) and \(w\) (and \(\upsilon\)).

\[ y_i = a + b x_i + c w_i + \upsilon_i \tag{22}\]

In our running example, think of \(y_i\) as representing the income of individual \(i\). Their income is determined by their years of schooling (\(x_i\)) and by their experience (\(w_i\)). It is also determined by unobserved characteristics of the individual (\(\upsilon_i\)). The last may include characteristics of where they live such as the current unemployment rate.

We are interested in estimating \(b\). We are interested in estimating returns to schooling. In Chapter 1, we did this by estimating the following short regression.

\[ y_i = a + b x_i + \upsilon_{wi} \tag{23}\]

By doing this we have a different “unobserved characteristic.” The unobserved characteristic, \(\upsilon_{wi}\) combines both the effects of the unobserved characteristics from the long regression (\(\upsilon_i\)) and the potentially observed characteristic (\(w_i\)). We know that experience affects income, but in Chapter 1 we ignore the possibility.

Does it matter? Does it matter if we just leave out important explanatory variables? Yes. And No. Maybe. It depends. What was the question?

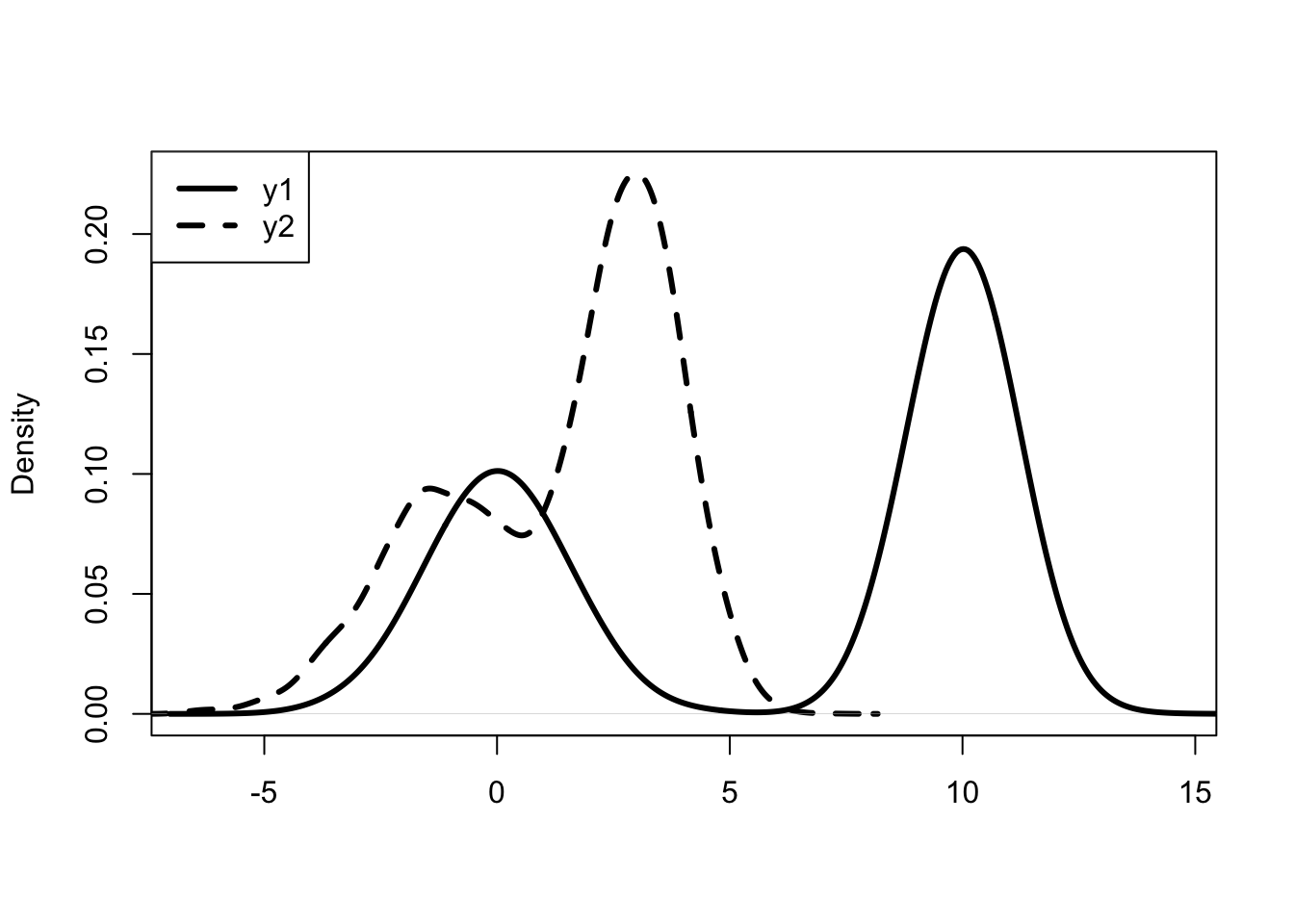

Independent Explanatory Variables

flowchart TD X(X) -- b --> Y(Y) W(W) -- c --> Y

The Figure 4 presents the independence case. There are two variables that determine the value of \(Y\), these are \(X\) and \(W\). If \(Y\) is income, then \(X\) may be schooling while \(W\) is experience. However, the two effects are independent of each other. In the figure, independence is represented by the fact that there is no line joining \(X\) to \(W\), except through \(Y\).

Consider the simulation below and the results of the various regressions presented in Table 5. Models (1) and (2) show that it makes little difference if we run the short or long regression. Neither of the estimates is that close, but that is mostly due to the small sample size. It does impact the estimate of the constant; can you guess why?

Dependent Explanatory Variables

flowchart TD A(X) -- b --> B(Y) C(W) -- c --> B D --> A D --> C

Short regressions are much less trustworthy when there is some sort of dependence between the two variables. Figure 5 shows the causal relationship when \(X\) and \(W\) are related to each other. In this case, a short regression will give a biased estimate of \(b\) because it will incorporate \(c\). It will incorporate the effect of \(W\). Pearl and Mackenzie (2018) calls this a backdoor relationship because you can trace a relationship from \(X\) to \(Y\) through the backdoor of \(U\) and \(W\).

Models (3) and (4) of Table 5 present the short and long estimators for the case where there is dependence. In this case we see a big difference between the two estimators. The long regression gives estimates of \(b\) and \(c\) that are quite close to the true values. The short regression estimate of \(b\) is considerably larger than the true value. In fact, you notice the backdoor relationship. The estimate of \(b\) is close to 7 which is the combined value of \(b\) and \(c\).

Simulation with Multiple Explanatory Variables

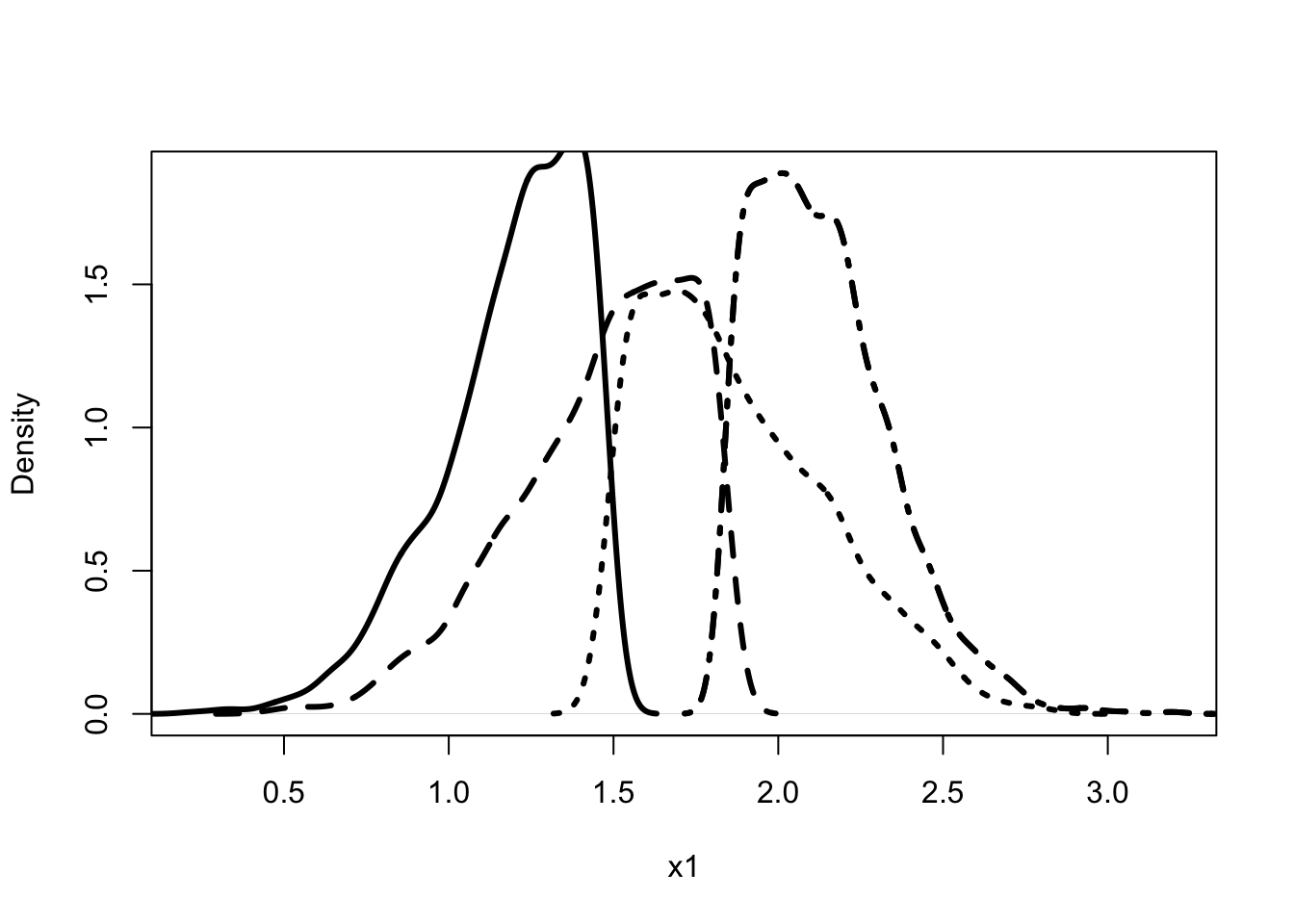

The first simulation assumes that \(x\) and \(w\) affect \(y\) independently. That is, while \(x\) and \(w\) both affect \(y\), they do not affect each other nor is there a common factor affecting them. In the simulations, the common factor is determined by the weight \(\alpha\). In this case, there is zero weight placed on the common factor potentially affecting both observed characteristics.

set.seed(123456789)

N <- 1000

a <- 2

b <- 3

c <- 4

u_x <- rnorm(N)

alpha <- 0

x <- x1 <- (1 - alpha)*runif(N) + alpha*u_x

w <- w1 <- (1 - alpha)*runif(N) + alpha*u_x

u <- rnorm(N)

y <- a + b*x + c*w + u

lm1 <- lm(y ~ x)

lm2 <- lm(y ~ x + w)The second simulation allows for dependence between \(x\) and \(w\). In the simulation this is captured by a positive value for the weight that each characteristic places on the common factor \(u_x\). Table 5 shows that in this case it makes a big difference if a short or long regression is run. The short regression gives a biased estimate because it is accounting for the effect of \(w\) on \(y\). Once this is accounted for in the long regression, the estimates are pretty close to the true value.

alpha <- 0.5

x <- x2 <- (1 - alpha)*runif(N) + alpha*u_x

w <- w2 <- (1 - alpha)*runif(N) + alpha*u_x

y <- a + b*x + c*w + u

lm3 <- lm(y ~ x)

lm4 <- lm(y ~ x + w)The last simulation suggests that we need to take care not to overly rely on long regressions. If \(x\) and \(w\) are highly correlated, then the short regression gives the dual effect, while the long regression presents garbage.27

alpha <- 0.95

x <- x3 <- (1 - alpha)*runif(N) + alpha*u_x

w <- w3 <- (1 - alpha)*runif(N) + alpha*u_x

y <- a + b*x + c*w + u

lm5 <- lm(y ~ x)

lm6 <- lm(y ~ x + w)Warning: package 'modelsummary' was built under R version 4.4.1Warning: package 'gt' was built under R version 4.4.1| (1) | (2) | (3) | (4) | (5) | (6) | |

|---|---|---|---|---|---|---|

| (Intercept) | 3.983 | 2.149 | 2.142 | 2.071 | 2.075 | 2.077 |

| (0.099) | (0.082) | (0.046) | (0.036) | (0.033) | (0.032) | |

| x | 3.138 | 2.806 | 6.842 | 2.857 | 7.014 | 0.668 |

| (0.168) | (0.111) | (0.081) | (0.166) | (0.034) | (1.588) | |

| w | 4.054 | 4.159 | 6.346 | |||

| (0.111) | (0.160) | (1.588) | ||||

| Num.Obs. | 1000 | 1000 | 1000 | 1000 | 1000 | 1000 |

| R2 | 0.258 | 0.682 | 0.877 | 0.927 | 0.977 | 0.978 |

| R2 Adj. | 0.257 | 0.681 | 0.877 | 0.927 | 0.977 | 0.978 |

| AIC | 3728.3 | 2882.6 | 3399.4 | 2884.8 | 2897.3 | 2883.4 |

| BIC | 3743.0 | 2902.2 | 3414.2 | 2904.4 | 2912.0 | 2903.1 |

| Log.Lik. | -1861.141 | -1437.292 | -1696.717 | -1438.393 | -1445.661 | -1437.712 |

| RMSE | 1.56 | 1.02 | 1.32 | 1.02 | 1.03 | 1.02 |

Table 5 shows what happens when you run a short regression with dependence between the variables.28 When there is no dependence the short regression does fine, actually a little better in this example. However, when there is dependence the short regression is capturing both the effect of \(x\) and the effect of \(w\) on \(y\). Running long regressions is not magic. If the two variables are strongly correlated then the long regression cannot distinguish between the two different effects. There is a multicollinearity problem. This issue is discussed in more detail below.

Matrix Algebra of Short Regression

To illustrate the potential problem with running a short regression consider the matrix algebra.

\[ y = \mathbf{X} \beta + \mathbf{W} \gamma + \upsilon \tag{24}\]

The (Equation 24) gives the true relationship between the outcome vector \(y\) and the observed explanatory variables \(\mathbf{X}\) and \(\mathbf{W}\). “Dividing” by \(\mathbf{X}\) we have the following difference between the true short regression and the estimated short regression.

\[ \hat{\beta} - \beta = (\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}' \mathbf{W} \gamma + (\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}' \upsilon \tag{25}\]

The (Equation 25) shows that the short regression gives the same answer if \((\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}' \upsilon = 0\) and either \(\gamma = 0\) or \(\mathbf{X}'\mathbf{X})^{-1} \mathbf{X}' \mathbf{W} = 0\). The first will tend to be close to zero if the \(X\)s are independent of the unobserved characteristic. This is the standard assumption for ordinary least squares. The second will tend to be close to zero if either of the \(W\)’s have no effect on the outcome (\(Y\)) or there is no correlation between the \(X\)s and the \(W\)s. The linear algebra illustrates that the extent that the short regression is biased depends on both the size of the parameter \(\gamma\) and the correlation between \(X\) and \(W\). The correlation is captured by \(\mathbf{X}'\mathbf{W}\).

cov(x1,w1) # calculates the covariance between x1 and w1[1] 0.007019082cov(x2,w2)[1] 0.2557656t(x1)%*%w1/N [,1]

[1,] 0.2581774# this corresponds to the linear algebra above

t(x2)%*%w2/N [,1]

[1,] 0.3188261# it measures the correlation between the Xs and Ws.In our simulations we see that in the first case the covariance between \(x\) and \(w\) is small, while for the second case it is much larger. It is this covariance that causes the short regression to be biased.

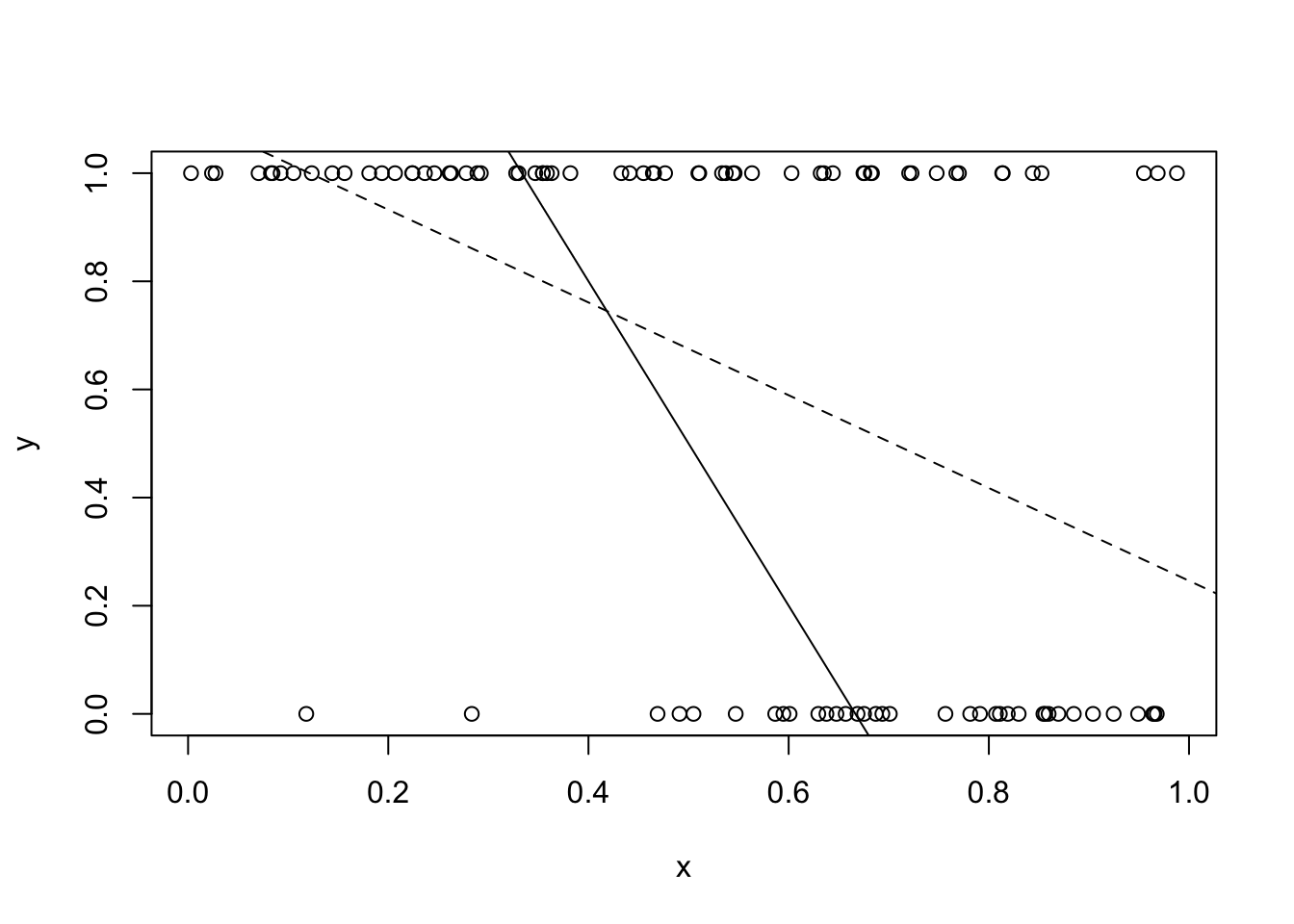

Collinearity and Multicollinearity