Introduction to Pure Mathematics

Florian Bouyer and John Mackay

16 September 2024

- 1 Introduction

- 2 The building blocks of pure mathematics - sets and logic

- 3 The rationals are not enough

- 4 Proof by induction

- 5 Studying the integers

- 6 Moving from one set to another - Functions

- 7 Cardinality

- 8 Sets with structure - Groups

- 9 Linking groups together

- 10 Modular arithmetic and Lagrange

- 11 Taming infinity

1 Introduction

A mathematical theory is not to be considered complete until you have made it so clear that you can explain it to the first man whom you meet on the street. – David Hilbert –

Proofs are at the heart of mathematics, distinguishing what we know is mathematically true (or untrue) from what we still don’t know. The first aim of this course is to see different types of proofs and mathematical reasoning, and learning how to make your own mathematical arguments. Reading proofs will develop our critical thinking, e.g., how does this sentence follow from the previous one; how have we used all our assumptions; what happen if we tweak our assumptions; etc. Writing proofs will develop our creative and communication skills, e.g., how do we put together various ideas to come up with a new one; how do we explain our argument to someone else; etc.

As we can not see mathematical arguments without mathematical content, the second aim of this course is to give you a strong foundation to pure mathematics - a very broad branch of mathematics.

This notes are based on the notes from a previous Introduction to Proofs and Group Theory course (Steffi Zegowitz; Lynne Walling; Jos Gunns; Jeremy Rickard; John Mackay), and the early chapters from a previous Analysis course (Thomas Jordan; Ivor McGillivray; Oleksiy Klurman); .

1.1 How to use these notes

These notes are colour coded to help you identify the important bits.

Results from the course, which will either be theorems, lemmas, propositions or corollaries, will have this background colour. It is important to know them so to be able to apply them to different situations.

After most results, there will be a proof with this background colour. It is important to understand each proof as similar techniques can be applied in different mathematical situations. Note that many proofs are left as exercises so that one can practice coming up and writing proofs.

□

Interest, History and Etymological (origin of words) notes will not have a background colour, however there is a line to show the start and the end of the note. These notes are there for general interest and is not examinable content.

Most of the Etymology notes comes from the book “The words of Mathematics” by Steven Schwartzman (The Mathematical Association of America, 1994)

Most of the history of the development of Group Theory comes from the article “The Evolution of Group Theory: A Brief Survey” by Israel Kleiner (Mathematics Magazine, Vol 59, No 4, 192-215, 1986).2 The building blocks of pure mathematics - sets and logic

To be able to prove mathematical results, we need two ingredients - the setting in which we are doing maths; and the logical reasoning we use to do maths.

2.1 Sets

Before we can start to do maths, we need to know the setting in which we are doing maths. For this reason, sets can be seen as the building block of all maths.

We use curly brackets { and } to denotes sets.

We use the symbol \(\in\) to say an element is in a set. We drawn a line through it to negate it, i.e. we use \(\notin\) to say an element is not in a set.

We use the symbol \(<\) to mean “(strictly) less than” and \(\leq\) to mean “less than or equal to”. Similarly we use \(>\) to mean “greater than” and \(\geq\) to mean “greater than or equal to”The set of trigonometric functions: \(\{\sin(x),\cos(x),\tan(x)\}=\{\cos(x),\tan(x),\cos(x),\sin(x)\}\).

The set of integers between \(2\) and \(6\): \(\{2,3,4,5,6\}=\{6,4,2,2,3,4,6,5\}\).

Since \(2\leq 6\) and \(6\leq 6\), we have \(6 \in \{2,3,4,5,6\}\), however \(15 \notin \{2,3,4,5,6\}\) as \(15>6\).

If a set does not have any elements, we call it the empty set and use \(\emptyset\) (or \(\{\ \}\)).

\(\mathbb{Z}\) is the set of integers, that is \(\mathbb{Z}=\{\ldots, -3, -2, -1, 0, 1, 2, 3, \ldots\}\).

The symbol \(\mathbb{Z}\) comes from the German “Zahlen” - which means numbers. It was first used by David Hilbert (German mathematician, 1862 - 1943), and popularised in Europe by Nicolas Bourbaki (a collective of mainly French mathematicians, 1935 - ) in their 1947 book “Algèbre”. Integers come from the Latin in, which means “not”, and tag which means “to touch”. An integer is a number that has been “untouched”, i.e. “intact” or “whole”.

The set of integers is equipped with the operations of additions \(+\) and multiplication \(\cdot\) that satisfy the following 10 arithmetic properties (5 relating to addition; a Distributive Law; and 4 relating to multiplication):

(A1) - Closure under addition For all \(x,y \in \mathbb{Z}\) we have \(x+y \in \mathbb{Z}\).

(A2) - Associativity under addition For all \(x,y,z \in \mathbb{Z}\) we have \(x+(y+z)=(x+y)+z\).

(A3) - Commutativity of addition For all \(x,y\in\mathbb{Z}\) we have \(x+y=y+x\).

(A4) - Additive identity For all \(x \in \mathbb{Z}\) we have \(x+0=x\).

(A5) - Additive inverse For all \(x \in \mathbb{Z}\) we have \(-x\in\mathbb{Z}\) and \(x+(-x)=0\).

(A6) - Distributive Law For all \(x,y,z\in\mathbb{Z}\) we have \(x(y+z)=xy+xz\).

(A7) - Closure under multiplication For all \(x,y \in \mathbb{Z}\) we have \(xy \in \mathbb{Z}\).

(A8) - Associativity under multiplication For all \(x,y,z \in \mathbb{Z}\) we have \(x(yz)=(xy)z\).

(A9) - Commutativity of multiplication For all \(x,y\in\mathbb{Z}\) we have \(xy=yx\).

(A10) - Multiplicative identity For all \(x \in \mathbb{Z}\) we have \(1\cdot x=x\).

Formally speaking, this is saying that \(\mathbb{Z}\) with \(+\) and \(\cdot\) is a ring. The notion of a ring is explored in more details in later units such as the second year unit Algebra 2. Properties (A1) to (A5) tells us that \(\mathbb{Z}\) with \(+\) is an abelian group. We will explore the notion of groups and abelian groups later in this unit.

On top of these 10 arithmetic properties, \(\mathbb{Z}\) is well ordered, i.e., it comes with 4 order properties:

(O1) - trichotomy For all \(x,y\in\mathbb{Z}\) either \(x<y\), \(x=y\) or \(x>y\).

(O2) - transitivity For all \(x,y,z\in\mathbb{Z}\), if \(x<y\) and \(y<z\) then \(x<z\).

(O3) - compatibility with addition For all \(x,y,z in \mathbb{Z}\), if \(x<y\) then \(x+z<y+z\).

(O4) - compatibility with multiplication For all \(x,y,z \in \mathbb{Z}\), if \(x<y\) and \(z>0\) then \(zx<zy\).

There are many words above that seems to have come from nowhere, but can be related back to words used in everyday English.

Associative comes from the Latin ad meaning “to” and socius meaning “partner, companion”. An associate is someone who is a companion to you. The property \(x+(y+z)=(x+y)+z\) shows that it doesn’t matter who \(y\) “keeps company with”, the result is still the same.

Commutative comes from the Latin co meaning “with” and mutare meaning “to move”. To commute is “to change, to exchange, to move”. In everyday situation, a commute is the journey (i.e., moving) from home to work. The property \(x+y=y+x\) shows that we can move/exchange \(x\) and \(y\) and the result is the same.

Identity comes from the Latin idem meaning “same”. The additive identity is the element which keeps other elements the same when added to it. The multiplicative identity is the element which keeps other elements the same when multiplied by it.

Inverse comes from in and vertere which is the verb “to turn”. The additive inverse of \(x\) is the quantity that turns back “adding \(x\)”.

Trichotomy comes from the Greek trikha meaning “in three parts” and temnein meaning “to cut”. If you pick \(x\in \mathbb{Z}\), then you can cut \(\mathbb{Z}\) into three parts, the integers less than \(x\), the integers equal to \(x\) and the integers greater than \(x\).

Transitive comes from the Latin trans meaning “across, beyond” and the verb itus/ire meaning “to go”. Knowing \(x<y\) and \(y<z\) allows us to go across/beyond \(y\) to conclude \(x<z\).

As seen from the properties above, we often need to quantify objects in mathematics, that is we need to distinguish between a criteria always being met (“for all”), or the existence of a case where a criteria is met (“there exists”). Sometimes we also need to distinguish whether there is a unique case where a criteria is met.

We have the following symbolic notation:

The symbol \(\forall\) denotes for all, or equivalently, for every.

The symbol \(\exists\) denotes there exists.

We use \(\exists !\) to denote there exists a unique, or equivalently there exists one and only one.

□

We can use \(\mathbb{Z}\) as a starting point to construct different sets.

Within the curly brackets of a set, we use colon, \(\colon\), to mean “such that”.

Returning to the previous example, the set of integers between \(2\) and \(6\) can be written as \(\{x \in \mathbb{Z}\colon 2\leq x \leq 6\}\);

We use \(+\) to denote the positive numbers in a set. I.e., \(\mathbb{Z}_+= \{x\in \mathbb{Z}: x> 0\} = \{1,2,\dots,\}\) denotes the set of positive integers.

Similarly, \(\mathbb{Z}_- = \{x\in \mathbb{Z}: x<0 \} = \{-1,-2,-3,\dots\}\) denotes the set of negative integers.

We denote the set of non-negative integers by \(\mathbb{Z}_{\geq 0}=\{x\in \mathbb{Z}: x\geq 0\} = \{0,1,2,\dots,\}\).

If \(n \in \mathbb{Z}\), we write \(n\mathbb{Z} = \{nx:x\in \mathbb{Z}\} = \{y \in \mathbb{Z}: \exists x \in \mathbb{Z} \text{ with } y=xn\} = \{\dots,-3n,-2n,-n,0,n,2n,3n,\dots\}\).

You may have also heard of the natural numbers denoted \(\mathbb{N}\). However, some sources consider \(0\) as a natural number (so \(\mathbb{N} = \mathbb{Z}_{\geq 0}\)) and other consider \(0\) not to be a natural number (so \(\mathbb{N} = \mathbb{Z}_+\)). To avoid any confusion (and because we will need \(\mathbb{Z}_{\geq 0}\) sometimes and \(\mathbb{Z}_+\) at other times), we will not be using \(\mathbb{N}\) in this course.

\(\mathbb{Q}\) is the set of rational numbers, that is \(\mathbb{Q}=\left\{\frac{a}{b}:\ a\in\mathbb{Z},\ b\in\mathbb{Z}_+ \right\}\).

We will later see how \(\mathbb{Q}\) can be constructed from \(\mathbb{Z}\) and how this lead to a “natural” way to write each rational numbers

The symbol \(\mathbb{Q}\) stands for the word “quotient”, which is Latin for “how often/how many”, i.e., the quotient \(\frac{a}{b}\) is “how many times does \(b\) fit in \(a\)”. Surprisingly, the word “rational” to describe some numbers came after the use of “irrational” number. Ratio is Latin for “thinking/reasoning”. When the Phythagorean school in Ancient Greece realised some numbers could not be expressed as the quotient of two whole numbers (such as \(\sqrt{2}\), which we will prove later), they called those “irrational”, i.e. numbers that should not be thought about. “Rational” numbers were numbers that were not “irrational”, i.e., one could think about them.

We extend the operations of addition and multiplication as well as the order relation for the integers to the rational numbers. Let \(\frac{a}{b}, \frac{c}{d} \in \mathbb{Q}\), then:

\[\frac{a}{b}+\frac{c}{d} = \frac{ad+bc}{bd} \text{ and } \frac{a}{b}\cdot\frac{c}{d} = \frac{ac}{bd}.\] Similarly to \(\mathbb{Z}\), we have that \(\mathbb{Q}\) with \(+\) and \(\cdot\) satisfies the properties (A1) to (A10) as well as (O1) to (O4). It also satisfy the extra arithmetic property:

(A11) - multiplicative inverse For all \(x\in\mathbb{Q}\) with \(x\neq 0\), we have \(x^{-1} = \frac{1}{x} \in \mathbb{Q}\) and \(x^{-1}x = 1\).

Notice that (A11) is similar to (A5) but for multiplication. As we will see later in the course, another way of saying (A7) to (A11) is that \(\mathbb{Q}\) without \(0\) under \(\cdot\) is an abelian group. As you will see in Linear Algebra, the arithmetic properties of \(\mathbb{Q}\) ((A1) to (A11)) comes from the fact that \(\mathbb{Q}\) is a field.

Similarly with \(\mathbb{Z}\), using \(\mathbb{Q}\) we can construct the sets \(\mathbb{Q}_+\), \(\mathbb{Q}_-\), \(\mathbb{Q}_{\geq 0}\) etc.

2.2 Truth table

Now that we have some objects to work with, we want to know what we can do with them. In a mathematical system, statements are either true or false, but not both at once. We sometimes say a statement \(P\) holds to mean it is true. The label ‘true’ or ‘false’ associated to a given statement is its truth value.

Definitions (i.e., \(\mathbb{Z}\)) and axioms (i.e., (A1)-(A11) ) are statements we take to be true, while propositions, theorems and lemmas are statements that we want to prove are true and often consist of smaller statements linked together. While we often don’t write statements symbolically, looking at truth table and statements help us understand the fundamentals of how a proof works. We first introduce the four building blocks of statements.

We use the symbol \(\neg\) to mean not. The truth table below shows the value \(\neg P\) takes depending on the truth value of \(P\).

| \(P\) | \(\neg P\) |

|---|---|

| T | F |

| F | T |

We will see concrete examples of how to negate statements later in this chapter.

We use the symbol \(\wedge\) to mean and. Let \(P\) and \(Q\) be two statements, we have that \(P\wedge Q\) is true exactly when \(P\) and \(Q\) are true. The corresponding truth table is as follows:

| \(P\) | \(Q\) | \(P\wedge Q\) |

|---|---|---|

| T | T | T |

| T | F | F |

| F | T | F |

| F | F | F |

We use the symbol \(\vee\) to mean or. Let \(P\) and \(Q\) be two statements, we have that \(P\vee Q\) is true exactly when at least one of \(P\) or \(Q\) is true. The corresponding truth table is as follows.

| \(P\) | \(Q\) | \(P\vee Q\) |

|---|---|---|

| T | T | T |

| T | F | T |

| F | T | T |

| F | F | F |

We use the symbol \(\implies\) to mean implies. Let \(P\) and \(Q\) be two statements “\(P \implies Q\)” is the same as “If \(P\), then \(Q\)”, or “for \(P\) we have \(Q\)”. The corresponding truth table is as follows.

| \(P\) | \(Q\) | \(P\implies Q\) |

|---|---|---|

| T | T | T |

| T | F | F |

| F | T | T |

| F | F | T |

The above truth table can seem confusing, but consider the following example.

Recall (A1) - “For all \(x,y \in \mathbb{Z}\) we have \(x+y \in \mathbb{Z}\)”. Let \(P\) be the statement \(x,y \in \mathbb{Z}\) and \(Q\) be the statement \(x+y \in \mathbb{Z}\), then (A1) can be written symbolically as \(\forall x,y, P \implies Q\). This statement is true, regardless of what the value of \(x\) and \(y\) are. But let us look at the truth value of \(P\) and \(Q\) with different \(x\) and \(y\)

| \(x\) | \(y\) | \(P\) | \(Q\) |

|---|---|---|---|

| \(0\) | \(1\) | T | T |

| \(0\) | \(\frac{3}{2}\) | F | F |

| \(\frac{1}{2}\) | \(1\) | F | F |

| \(\frac{1}{2}\) | \(\frac{3}{2}\) | F | T |

Many theorems are of the type “If \(P\) then \(Q\)”. A common method to prove such statement is to start with the assumption \(P\) (i.e., assume \(P\) is true) and use logical steps to arrive at \(Q\).These are often referred to as “direct proofs”.

The above example also shows that you can not start a proof with what you want to prove, as you could start with something false and end up with something true.□

Turning back to sets, we look at examples of direct proofs.

A set \(A\) is a subset of a set \(B\), denoted by \(A\subseteq B\), if every element of \(A\) is also an element of \(B\) (Symbolically: \(\forall x \in A\), we have \(x \in B\)).

We write \(A\not\subseteq B\) when \(A\) is not a subset of \(B\), so there is at least one element of \(A\) which is not an element of \(B\) (Symbolically: \(\exists x \in A\) such that \(x\notin B\)).

A set \(A\) is a proper subset of a set \(B\), denoted by \(A\subsetneq B\), if \(A\) is a subset of \(B\) but \(A\neq B\).Let \(A = 4\mathbb{Z}= \{4n: n\in\mathbb{Z}\}\) and \(B = 2\mathbb{Z}=\{ 2n: n\in \mathbb{Z}\}\). We will prove \(A \subseteq B\) using a direct proof. [If we let \(P\) be the statement \(x \in A\) and \(Q\) be the statement \(x \in B\), note that \(\forall x \in A\) we have \(x\in B\) translate symbolically to \(\forall x, P \implies Q\).]

Let \(x\in A\) [i.e., suppose \(P\) is true]. Then there exists \(n\in\mathbb{Z}\) such that \(x=4n\). Hence, \(x=4n=2(2n)=2m\), for some \(m\in\mathbb{Z}\), i.e. there exists \(m\in \mathbb{Z}\) such that \(x = 2m\). Hence, \(x\in B\) [i.e., \(Q\) is true]. Since this argument is true for any \(x \in A\), we have that for all \(x \in A, x \in B\), hence \(A\subseteq B\).

We will now prove that \(B \not\subseteq A\) by showing there is an element of \(B\) which is not an element of \(A\). Take \(x=10\). Then \(x\in B\) [as \(x=5\cdot 2\)] but \(x\not\in A\) [as \(x = \frac{5}{2} \cdot 4\) but \(4\notin \mathbb{Z}\)].

Combining the two statements above, it follows that \(A\subsetneq B\).To prove something is true for all \(x\in X\), we “let \(x\in X\)” or “suppose \(x\in X\)” with no further conditions. Whatever we conclude about \(x\) is true for all \(x\in X\). This is the technique we used in Part 1. above.

Suppose \(A\) and \(B\) are sets. Showing that \(A=B\) is the same as showing that \(A\subseteq B\) and \(B\subseteq A\).□

We show that \(2\mathbb{Q} = \mathbb{Q}\), by showing \(2\mathbb{Q} \subseteq \mathbb{Q}\) and \(\mathbb{Q} \subseteq 2\mathbb{Q}\).

Let \(x \in 2\mathbb{Q}\). Then there exists \(y \in \mathbb{Q}\) such that \(x = 2y\). By (A6), since \(2,y\in\mathbb{Q}\), we have \(x=2y\in \mathbb{Q}\). As this is true for all \(x\in 2\mathbb{Q}\) we have \(2\mathbb{Q} \subseteq \mathbb{Q}\).

Let \(x \in \mathbb{Q}\). Let \(y = \frac{x}{2}\). Note that \(\frac{1}{2} \in \mathbb{Q}\) so by (A6), since \(\frac{1}{2},x \in \mathbb{Q}\), we have \(y = \frac{x}{2} \in \mathbb{Q}\). Hence, there exists \(y \in \mathbb{Q}\) such that \(x = 2y\), so \(x\in 2\mathbb{Q}\). As this is true for all \(x\in \mathbb{Q}\) we have \(\mathbb{Q} \subseteq 2\mathbb{Q}\).

We can combine the three basic symbols together to make more complicated statements, and use truth tables to find when their truth values based on the truth values of \(P\) and \(Q\).

Let \(P\) and \(Q\) be two statements. The corresponding truth table for \((\neg P) \vee Q\) is as follows.

| \(P\) | \(Q\) | \(\neg P\) | \((\neg P)\vee Q\) |

|---|---|---|---|

| T | T | F | T |

| T | F | F | F |

| F | T | T | T |

| F | F | T | T |

Let \(P\) and \(Q\) be two statements. The corresponding truth table for \((\neg Q) \implies (\neg P)\) is as follows.

| \(P\) | \(Q\) | \(\neg P\) | \(\neg Q\) | \((\neg Q) \implies (\neg P)\) |

|---|---|---|---|---|

| T | T | F | F | T |

| T | F | F | T | F |

| F | T | T | F | T |

| F | F | T | T | T |

2.3 Logical Equivalence

As well as combining statements together to make new statements, we also want to know whether two statements are equivalent, that is they are the same.

We use the symbol \(\iff\) to mean if and only if. For two statements \(P\) and \(Q\), “\(P \iff Q\)” means \(P\implies Q\) and \(Q \implies P\). In this case we say “\(P\) and \(Q\) are equivalent”. The corresponding truth table is as follows.

| \(P\) | \(Q\) | \(P\iff Q\) |

|---|---|---|

| T | T | T |

| T | F | F |

| F | T | F |

| F | F | T |

If we take two statements which are logically equivalent, say \(P\) is equivalent to \(Q\), then proving \(P\) to be true is equivalent to proving \(Q\) to be true. Similarly, proving \(Q\) to be true is equivalent to proving \(P\) to be true. We can use truth tables to prove if two (abstract) statements are equivalent.This will prove to be useful later on when we turn a statement we want to prove is true into another equivalent statement that may be easier to prove.

Let \(P\) and \(Q\) be two statements.

\(P\implies Q\) is equivalent to \((\neg Q)\implies(\neg P)\).

\(P\implies Q\) is equivalent to \((\neg P)\vee Q\).

Using the last two examples and the truth table for \(P\implies Q\), we have the following truth table.

| \(P\) | \(Q\) | \(P\implies Q\) | \((\neg Q)\implies(\neg P)\) | \((\neg P)\vee Q\) |

|---|---|---|---|---|

| T | T | T | T | T |

| T | F | F | F | F |

| F | T | T | T | T |

| F | F | T | T | T |

Hence, for all truth values of \(P\) and \(Q\), we have that \(P\implies Q\) and \((\neg Q)\implies (\neg P)\) have the same truth values. Therefore, \(P\implies Q\) is equivalent to \((\neg Q)\implies(\neg P)\).

Similarly, for all truth values of \(P\) and \(Q\), we have that \(P\implies Q\) and \((\neg P)\vee Q\) have the same truth values. Therefore, \(P\implies Q\) is equivalent to \((\neg P)\vee Q\).□

Notice that the above proof has several sentences to explain to the reader what is going on. It is made up of full sentences with a clear conclusion on what the calculation (in this case the truth table) shows. A proof should communicate clearly to the reader why the statement (be that a theorem, proposition or lemma) is true.

How much detail you put in a proof will be influenced by who your target audience is - this is a skill you will develop over your time as a student.□

Suppose \(P\) and \(Q\) are two statements. Then:

\(P\iff (\neg(\neg P))\).

\((P\vee Q)\iff((\neg P)\implies Q)\)

Exercise.

□

The next proposition shows that \(\wedge\) and \(\vee\) are associative, that is, \(P\wedge Q\wedge R\) and \(P\vee Q\vee R\) are statements that are clear without parentheses (and therefore do not require parentheses).

Suppose \(P,Q,R\) are statements.

\(((P\wedge Q)\wedge R)\iff (P\wedge(Q\wedge R)).\)

\(((P\vee Q)\vee R )\iff (P\vee(Q\vee R)).\)

We prove part a. and leave the proof of part b. as an exercise. We have the following truth table.

| \(P\) | \(Q\) | \(R\) | \(P\wedge Q\) | \((P\wedge Q)\wedge R\) | \(Q\wedge R\) | \(P\wedge (Q\wedge R)\) |

|---|---|---|---|---|---|---|

| T | T | T | T | T | T | T |

| T | T | F | T | F | F | F |

| T | F | T | F | F | F | F |

| T | F | F | F | F | F | F |

| F | T | T | F | F | T | F |

| F | T | F | F | F | F | F |

| F | F | T | F | F | F | F |

| F | F | F | F | F | F | F |

□

Let \(P, Q, R\) be statements. Then \(P \implies (Q \iff R)\) and \((P\implies Q) \iff R\) are not equivalent.

We have the following truth table

| \(P\) | \(Q\) | \(R\) | \(Q \iff R\) | \(P \implies (Q \iff R)\) | \((P\implies Q)\) | \((P\implies Q) \iff R\) |

|---|---|---|---|---|---|---|

| T | T | T | T | T | T | T |

| T | T | F | F | F | T | F |

| T | F | T | F | F | F | F |

| T | F | F | T | T | F | T |

| F | T | T | T | T | T | T |

| F | T | F | F | T | T | F |

| F | F | T | F | T | T | T |

| F | F | F | T | T | T | F |

□

The above proposition shows that the statement \(P \implies Q \iff R\) therefore has no clear meaning without parenthesis. Similarly, there is an exercise to show that \(P \iff (Q \implies R)\) and \((P\iff Q) \implies R\) are not equivalent, so \(P \iff Q \implies R\) is likewise not clear. Hence the meaning of assertions such as \(P\implies Q \iff R \implies S\) is undefined (unless one puts in parenthesis).

As an exercise, one may also prove the following sometimes useful equivalences.

Let \(P,Q,R\) be statements. Then:

\((P\wedge (Q\wedge R))\iff\left((P\wedge Q)\wedge(P\wedge R)\right)\);

\((P\vee (Q\vee R))\iff\left((P\vee Q)\vee(P\vee R)\right).\)

Exercise.

□

Let \(P,Q,R\) be statements. Then

(\(P\wedge(Q\vee R))\iff ((P\wedge Q)\vee(P\wedge R)).\)

(\(P\vee(Q\wedge R))\iff ((P\vee Q)\wedge(P\vee R)).\)

We will prove part a. and leave the proof of part b. as an exercise. We have the following truth table.

| \(P\) | \(Q\) | \(R\) | \(Q\vee R\) | \(P\wedge (Q \vee R)\) | \(P\wedge Q\) | \(P\wedge R\) | \((P\wedge Q) \vee (P\wedge R)\) |

|---|---|---|---|---|---|---|---|

| T | T | T | T | T | T | T | T |

| T | T | F | T | T | T | F | T |

| T | F | T | T | T | F | T | T |

| T | F | F | F | F | F | F | F |

| F | T | T | T | F | T | F | F |

| F | T | F | T | F | F | F | F |

| F | F | T | T | F | F | F | F |

| F | F | F | F | F | F | F | F |

□

Let us return to sets to see how logic may be applied to prove statements.

Suppose that \(A\) and \(B\) are subsets of some set \(X\).

\(A\cup B\) denotes the union of \(A\) and \(B\), that is \[A\cup B=\{x\in X:\ x\in A\text{ or }x\in B\ \}.\]

\(A\cap B\) denotes the intersection of \(A\) and \(B\), that is \[A\cap B=\{x\in X:\ x\in A \text{ and }x\in B\ \}.\]

When \(A\cap B=\emptyset\), we say that \(A\) and \(B\) are disjoint.

We have \(\mathbb{Z}_{\geq 0}\cup \mathbb{Z}_- = \mathbb{Z}\). We also see that \(\mathbb{Z}_{\geq 0} \cap \mathbb{Z}_- =\emptyset\), hence they are disjoint.

The word union comes from the Latin unio meaning “a one-ness”. The union of two sets is a set that lists every element in each set just once (even if the element appears in both sets). While the symbol \(\cup\) looks like a “U” (the first letter of union), this is a coincidence. While the symbol \(\cup\) was first used by Hermann Grassmann (Polish/German mathematician, 1809 - 1877) in 1844, Giuseppe Peano (Italian mathematician, 1858-1932) used it to represent the union of two sets in 1888 in his article Calcolo geometrico secondo Ausdehnungslehre di H. Grassmann. However, at the time the union was referred to as the disjunction of two sets.

The word intersect comes from the Latin inter meaning “within, in between” and sectus meaning “to cut”. The interesection of two curves is the place they cut each other, the intersection of two sets is the “place” where two sets overlaps. While the symbol \(\cap\) was first used by Gottfried Leibniz (German mathematician, 1646 - 1716), he also used it to represent regular multiplication (there are some links between the two ideas). Again, \(\cap\) was used by Giuseppe Peano in 1888 to refer to intersection only.

The word disjoint comes from the Latin dis meaning “away, in two parts” and the word joint. Two sets are disjoint if they are apart from each other without any joints between them. (Compare this to disjunction, which has the same roots but is used to mean joining two things that are apart).Let \(X\) be a set, and for \(x\in X\), let \(P(x)\) be the statement that \(x\) satisfies the criteria \(P\), and let \(Q(x)\) be the statement that \(x\) satisfies the criteria \(Q\). Set \[A=\{x\in X:P(x) \} \qquad\text{and}\qquad B=\{x\in X: Q(x) \}.\] Then \[\begin{align*} A\cap B&=\{x\in X: P(x) \wedge Q(x) \} ,\\ A\cup B&=\{x\in X: P(x) \vee Q(x) \}. \end{align*}\]

For \(x\in X\), we have that \(x\in A\) if and only if the statement \(P(x)\) holds. Similarly, we have that \(x\in B\) if and only if the statement \(Q(x)\) holds. Then \[\begin{align*} A\cap B&=\{x\in X:( x\in A) \wedge (x\in B) \}\\ &=\{x\in X: P(x)\wedge Q(x) \} \end{align*}\] and \[\begin{align*} A\cup B&=\{x\in X:( x\in A) \vee (x\in B) \}\\ &=\{x\in X: P(x)\vee Q(x) \}. \end{align*}\]

□

We can use our work on logical equivalence to show that that \(\cap\) and \(\cup\) are associative.

Suppose \(A,B,C\) are subsets of a set \(X\). Then

\(A\cap(B\cap C)=(A\cap B)\cap C\).

\(A\cup(B\cup C)=(A\cup B)\cup C\).

We will prove part a. and leave part b. as an exercise

Suppose \(x\in X\). Let \(P\) be the statement that \(x\in A\), let \(Q\) be the statement that \(x\in B\), and let \(R\) be the statement that \(x\in C\). Recall Proposition 2.10, \(P\wedge(Q\wedge R)\iff(P\wedge Q)\wedge R\). Then \[\begin{align*} x\in A\cap(B\cap C)&\iff (x\in A)\wedge (x\in B\cap C)\\ &\iff (x\in A)\wedge((x\in B)\wedge (x\in C))\\ &\iff P\wedge(Q\wedge R)\\ &\iff (P\wedge Q)\wedge R\\ &\iff ((x\in A)\wedge (x\in B))\wedge (x\in C)\\ &\iff (x\in A\cap B)\wedge (x\in C)\\ &\iff x\in(A\cap B)\cap C. \end{align*}\] Hence, we have that \(x\in A\cap(B\cap C)\) if and only if \(x\in (A\cap B)\cap C\). It follows that \(A\cap(B\cap C)=(A\cap B)\cap C.\)□

□

Similarly, we have that \(\cup\) and \(\cap\) are distributive.

Let \(A,B,C\) be subsets of a set \(X\). Then

\(A\cap(B\cup C)=(A\cap B)\cup(A\cap C).\)

\(A\cup(B\cap C)=(A\cup B)\cap(A\cup C).\)

We will prove part a. and leave part b. as an exercise

Suppose \(x\in X\). Let \(P\) be the statement that \(x\in A\), let \(Q\) be the statement that \(x\in B\), and let \(R\) be the statement that \(x\in C\). Recall Proposition 2.13 that \(P\wedge(Q\vee R)\iff (P\wedge Q)\vee(P\wedge R).\) Then \[\begin{align*} x\in A\cap(B\cup C) &\iff\ (x\in A)\wedge (x\in B\cup C)\\ &\iff\ (x\in A)\wedge ((x\in B)\vee (x\in C))\\ &\iff\ P\wedge(Q\vee R)\\ &\iff\ (P\wedge Q)\vee(P\wedge R)\\ &\iff\ ((x\in A)\wedge (x\in B))\vee((x\in A)\wedge (x\in C))\\ &\iff\ (x\in A\cap B)\vee(x\in A\cap C)\\ &\iff\ x\in (A\cap B)\cup(A\cap C). \end{align*}\] Hence, we have that \(x \in A\cap(B\cup C)\) if and only if \(x \in (A\cap B)\cup(A\cap C)\). It follows that \(A\cap(B\cup C)=(A\cap B)\cup(A\cap C)\).□

2.4 Negations

Being able to negate statements is important for two reasons:

Instead of proving \(P\) is true, it might be easier to prove that \(\neg P\) is false (proof by contradiction).

Instead of proving \(P \implies Q\) it might be easier (by Theorem 2.8 ) to prove \(\neg Q \implies \neg P\) (proof by contrapositive).

We will expand on these two points later. We already know how to negate most simple statements, for example:

the negation of \(x = 5\) is \(x\neq 5\).

the negation of \(x > 5\) is \(x\leq 5\) (notice the strict inequality became unstrict).

the negation of \(x \in X\) is \(x\notin X\).

To negate simple statements that have been strung together, we use the following theorem.

Suppose \(P,Q\) are two statements. Then

\(\neg(P\wedge Q) \iff ((\neg P) \vee (\neg Q)).\)

\(\neg(P\vee Q) \iff ((\neg P) \wedge (\neg Q)).\)

\(\neg(P\implies Q)\iff (P\wedge (\neg Q)).\)

We will prove part a. and leave part b. and c. as exercises. We have the following truth table.

| \(P\) | \(Q\) | \(\neg P\) | \(\neg Q\) | \(P\wedge Q\) | \(\neg(P\wedge Q)\) | \((\neg P)\vee (\neg Q)\) |

|---|---|---|---|---|---|---|

| T | T | F | F | T | F | F |

| T | F | F | T | F | T | T |

| F | T | T | F | F | T | T |

| F | F | T | T | F | T | T |

□

If \(x\) is not between \(5\) and \(10\), then \(x\) is either less than \(5\) or more than \(10\). Formally speaking \(\neg(5\leq x\leq 10)\) if and only if \(\neg(5\leq x)\) or \(\neg(x\leq 10)\) if and only if \(x<5\) or \(10<x\).

For statements that involves quantifiers (i.e., \(\forall, \exists\)), we use the following theorem.

Let \(X\) be a set, and suppose that \(P(x)\) is a statement involving \(x\in X\). Then

\[\neg(\forall\ x\in X,\ P(x) )\quad \iff\quad \exists \ x\in X \text{ such that } \neg P(x).\]

We also have

\[\neg(\exists\ x\in X \text{ such that } P(x) )\quad \iff \quad \forall\ x\in X,\ \neg P(x).\]

Suppose that \(\forall\ x\in X, P(x)\) is a false statement. Then there must be at least one \(x\in X\) such that \(P(x)\) does not hold. That is, \[\begin{equation} \neg(\forall\ x\in X,\ P(x)) \quad \implies\quad \exists\ x\in X \text{ such that } \neg P(x). \tag{2.1} \end{equation}\]

Conversely, suppose that \(\exists\ x\in X \text{ such that } \neg P(x)\) is a true statement. Then it is not the case that \(P(x)\) holds for all \(x\in X\), that is \[\begin{equation} \exists\ x\in X \text{ such that } \neg P(x) \quad \implies\quad \neg(\forall\ x\in X,\ P(x)). \tag{2.2} \end{equation}\]

By (2.1) and (2.2), it follows that \[\neg(\forall\ x\in X,\ P(x) \quad \iff\quad \exists \ x\in X \text{ such that } \neg P(x).\]

Equivalently, we have \[\begin{align*} \neg(\neg(\forall\ x\in X,\ P(x)))\quad &\iff \quad\neg (\exists\ x\in X \text{ such that } \neg P(x))\\ &\iff \quad\forall\ x\in X,\ P(x). \end{align*}\] Setting \(Q(x)=\neg P(x)\), we have \[ \neg(\exists\ x\in X \text{ such that } Q(x) )\quad \iff \quad \forall\ x\in X,\ \neg Q(x). \]□

Let \(X\) be a set and let us negate the statement “\(\forall x \in X, P(x)\implies Q(x)\)”, by writing equivalent statements using Theorem 2.18 and Theorem 2.19.

\[\begin{align*} \neg(\forall x \in X, P(x)\implies Q(x)) &\ \iff &\ \exists x \in X \text{such that} \neg(P(x) \implies Q(x)) \\ &\ \iff &\ \exists x \in X \text{such that } (P(x) \wedge \neg Q(x)). \end{align*}\]

To make this more concrete, let \(P(x)\) be the statement \(x \in 2\mathbb{Z}\) and \(Q(x)\) the statement \(x \in 4\mathbb{Z}\). We have already shown that \(2\mathbb{Z} \nsubseteq 4\mathbb{Z}\), i.e. \(\forall x \in \mathbb{Z}, P(x) \implies Q(x)\) is false. We did this by showing when \(x=10\), \(P(x)\) is false while \(Q(x)\) is true, i.e., \(\exists x \in \mathbb{Z}\) such that \(P(x)\) is true and \(Q(x)\) is false.Negating statements is also very useful to see when an object does not satisfy a definition. We will see more examples of this later in the course, but for the moment here is an example.

Using (O2) as an example, a set \(X\) satisfies transitivity (with respect to \(<\) ) if for all \(x,y,z\in X\) if \(x<y\) and \(y<z\) then \(x<z\). Let us see what it means for \(X\) not to satisfy (O2). First we turn the definition into symbolic language \(\forall x,y,z \in X, (x<y)\wedge(y<z)\implies (x<z)\). We then negate this

\[\begin{align*} &\neg(\forall x,y,z \in X, (x<y)\wedge(y<z)\implies (x<z)) \\ \iff & \exists x,y,z \in X \text{ such that } \neg((x<y)\wedge(y<z) \implies (x<z)) \\ \iff & \exists x,y,z \in X \text{ such that } ((x<y)\wedge(y<z))\wedge \neg(x<z)) \\ \iff & \exists x,y,z \in X \text{ such that } (x<y)\wedge(y<z)\wedge (x\geq z). \end{align*}\]

□

To negate a statement with the quantifier \(\exists!\), it is useful to first translate this notation to not include “!”.

Suppose \(X\) is a set, and \(P(x)\) is a proposition dependent on \(x\in X\). When we say there is a unique \(x\in X\) so that \(P(x)\) holds we mean first that:

there is some \(x\in X\) so that \(P(x)\) is true, and

if we have \(x_1,x_2\in X\) with \(P(x_1)\) and \(P(x_2)\) are true, then \(x_1=x_2\).

More symbolically, we have \[\left[\exists!x\in X, P(x)\right] \iff \left[(\exists x\in X,\ P(x))\wedge(\forall x_1,x_2\in X,\ (P(x_1)\wedge P(x_2))\implies x_1=x_2)\right].\] So negating, we get: \[\begin{align*} &\neg\left[\exists!x\in X, P(x)\right]\\ &\iff \neg\left[(\exists x\in X,\ P(x))\wedge(\forall x_1,x_2\in X,\ (P(x_1)\wedge P(x_2))\implies x_1=x_2)\right]\\ &\iff \left[\neg(\exists x\in X,\ P(x))\vee\neg[(\forall x_1,x_2\in X,\ (P(x_1)\wedge P(x_2)\implies x_1=x_2)]\right]\\ &\iff \left[(\forall x\in X,\ \neg P(x))\vee[\exists x_1,x_2\in X,\ \neg(P(x_1)\wedge P(x_2)\implies x_1=x_2)]\right]\\ &\iff \left[(\forall x\in X,\ \neg P(x))\vee[\exists x_1,x_2\in X,\ (P(x_1)\wedge P(x_2)\wedge x_1\not=x_2)]\right]. \end{align*}\]

Thus the negation of exactly one \(x\) such that \(P(x)\) holds is “either there exists no \(x\) such that \(P(x)\) holds or there exists more than one \(x\) such that \(P(x)\) holds”2.5 Contradiction and the contrapositive

As mentioned earlier, negating statements are useful when trying to prove statements using different methods. First we look at proof by contradiction.

□

While we will use this method more extensively later, let us see an easy example.

Statement: Let \(x,y \in \mathbb{Q}\). If \(x<y\) then \(-x>-y\).

Proof: For the sake of a contradiction let us assume that \(x<y\) and \(-x\leq-y\). (Recall the negation of \(P \implies Q\) is \(P \vee \neg Q\).) Since \(x<y\) we have \(x-x<y-x\) [by (O3)], i.e. \(0<y-x\). Since \(-x\leq -y\) then \(-x+y\leq -y+y\), i.e. \(y-x\leq 0\). So \(0<y-x\) and \(y-x\leq 0\), i.e. [by (O1)] \(0<0\), which is a contradiction. Hence \(x<y\) and \(-x\leq-y\) is false, so If \(x<y\) then \(-x>-y\). \(\square\)Another technique is a proof by contrapositive.

The contrapositive of (A1), “for all \(x,y\), if \(x,y \in \mathbb{Z}\) then \(x+y \in \mathbb{Z}\)” is “for all \(x,y\), if \(x+y \notin \mathbb{Z}\) then \(x\notin\mathbb{Z}\) or \(y\notin\mathbb{Z}\).”

The contrapositive of (O3), “for all \(x,y,z \in \mathbb{Q}\), if \(x<y\) then \(x+z<y+z\)” is “$for all \(x,y,z\in\mathbb{Q}\) if \(x+z\geq y+z\) then \(x\geq y\)”.By Theorem 2.8, we know that \(P \implies Q\) is equivalent to its contrapositive. Sometimes, proving the contrapositive is easier than proving \(P \implies Q\) (we will see more examples of this later).

Statement: Let \(x,y \in \mathbb{Q}\). If \(x<y\) then \(-x>-y\).

Proof: We prove the contrapositive. The contrapositive is “if \(-x\leq -y\) then \(x\geq y\)”. Suppose \(-x\leq -y\) then \(-x+(x+y) \leq -y+(x+y)\) [since \(x+y \in \mathbb{Q}\) by (A1) and using (O3)*]. In other words \(y\leq x\), i.e. \(x\geq y\). \(\square\)Note that we have proven “\(x<y \implies -x>-y\)” by contradiction and by contrapositive. It is also worth noting that we could have proven it directly. This is meant to show that often there are numerous way to prove the same thing.

Note that the contrapositive doesn’t just change the implication symbol, but it also negates \(P\) and \(Q\). The contrapositive can often be confused with the converse:

Note that \(P \implies Q\) is not equivalent to its converse. Be careful when using a theorem/lemma/proposition that you are not using the converse by accident (which may not be true).

If the converse of \(P\implies Q\) is true, then we can deduce that \(P \iff Q\).

The converse of “If \(x<y\) then \(-x>-y\)” is “\(-x>-y\) then \(x<y\)”. We can show this is true, suppose \(-x>-y\) then \(-x+(x+y)>-y+(x+y)\), i.e. \(y>x\), i.e. \(x<y\).

Therefore we conclude, for all \(x,y \in \mathbb{Q}\), \(x<y\) if and only if \(-x>-y\).

Contradiction comes from the Latin contra which means “against” and dict which is a conjugation of the verb “to say, tell”. A contradiction is a statement that speaks against another statement.

Contrapositive also comes from the Latin contra and the Latin positus which is a conjugation of the verb “to put”. The contrapositive of a statement is a statement “put against” the original statement, we have negated both parts and reversed the order. Furthermore it is “positive” as it has the same truth value as the original statement.

On the other hand, while sounding similar, converse comes from the Latin con which means “together with” and vergere which means “to turn”. The converse turns the order of \(P\) and \(Q\).2.6 Set complement

We finish this section by looking at the complement of sets.

Suppose that \(A\) and \(B\) are subsets of some set \(X\).

\(A\setminus B\) denotes the relative complement of \(A\) with respect to \(B\), that is \[A\setminus B=\{x\in X: \ x\in A \text{ and }x\not\in B\ \}.\]

\(A^c\) denotes the complement of \(A\), that is \[A^c=\{x\in X:\ x\not\in A\ \}.\]

Suppose \(A,B\) are subsets of a set \(X\). Then

- \(A\setminus B=A\cap B^c.\)

- \((A\setminus B)^c=A^c\cup B\).

We will prove part a. and leave part b. as an exercise.

Let \(x\in X\). Then \[\begin{align*} x\in A\setminus B &\iff (x\in A)\wedge (x\not\in B)\\ &\iff (x\in A)\wedge (x\in B^c)\\ &\iff x\in A\cap B^c. \end{align*}\] Hence, we have that \(x\in A\setminus B\) is equivalent to \(x\in A\cap B^c\). It follows that \(A\setminus B=A\cap B^c.\)□

Suppose that \(A,B,C\) are subsets of a set \(X\). Then

\(A\setminus (B\cup C)=(A\setminus B)\cap(A\setminus C).\)

\(A\setminus (B\cap C)=(A\setminus B)\cup(A\setminus C).\)

\((A\cap B)^c=A^c\cup B^c\).

\((A\cup B)^c=A^c\cap B^c\).

We will prove parts a. and d. and leave parts b. and c. as exercises.

Proving a.) Let \(x\in X\). Recall that for statements \(P,Q,R\), we have that \(P\wedge(Q\wedge R)\iff(P\wedge Q)\wedge(P\wedge R).\) Then \[\begin{align*} x\in A\setminus(B\cup C) &\iff (x\in A)\wedge(x\not\in B\cup C)\\ &\iff (x\in A)\wedge (\neg(x\in B\cup C))\\ &\iff (x\in A)\wedge (\neg((x\in B) \vee (x\in C)))\\ &\iff (x\in A)\wedge ((x\not\in B)\wedge (x\not\in C))\\ &\iff ((x\in A)\wedge (x\not\in B))\wedge((x\in A)\wedge (x\not\in C))\\ &\iff (x\in A\setminus B)\wedge(x\in A\setminus C)\\ &\iff x\in (A\setminus B)\cap(A\setminus C). \end{align*}\]

Hence, we have that \(x\in A\setminus(B\cup C)\) if and only if \(x\in(A\setminus B)\cap(A\setminus C)\). It follows that \(A\setminus(B\cup C)=(A\setminus B)\cap(A\setminus C).\)

Proving d.) Let \(x\in X\). Then \[\begin{align*} x\in (A\cup B)^c &\iff \neg(x\in A\cup B)\\ &\iff \neg((x\in A)\vee (x\in B))\\ &\iff \neg((x\in A)\wedge(\neg(x\in B)))\\ &\iff (x\in A^c)\wedge (x\in B^c)\\ &\iff x\in A^c \cap B^c. \end{align*}\]

Hence, we have that \(x\in (A\cup B)^c\) if and only if \(x\in A^c\cap B^c\). It follows that \((A\cup B)^c=A^c\cap B^c\).□

The above theorem is often known as De Morgan’s Laws, or De Morgan’s Theorem. Augustus De Morgan (English Mathematician, 1806 - 1871) was the first to write this theorem using formal logic (the one we are currently seeing). However this result was known and used by mathematicians and logicians since Aristotle (Greek philosopher, 384BC - 322BC), and can be found in the medieval texts by William of Ockham (English philosopher, 1287 - 1347) or Jean Buridan (French philosopher, 1301 - 1362).

It is often convenient to denote the elements of a set using indices. For example, suppose \(A\) is a set with \(5\) elements. Then we can denote these elements as \(a_1,a_2,a_3,a_4,a_5\). So we can write \[A=\{a_i:\ i\in I\ \}, \text{ where } I=\{1,2,3,4,5\}.\] The set \(I\) is called the indexing set.

Let \(\{A_i\}_{i\in I}\) be a collection of subsets of a set \(X\) where \(I\) is an indexing set. Then we write \(\bigcup\limits_{i\in I}A_i\) to denote the union of all the sets \(A_i\), for \(i\in I\). That is, \[\bigcup_{i\in I}A_i=\{x\in X: \exists\ i\in I \text{ such that } x\in A_i \}.\] Furthermore, we write \(\bigcap\limits_{i\in I}A_i\) to denote the intersection of all the sets \(A_i\), for \(i\in I\). That is, \[\bigcap_{i\in I}A_i=\{x\in X: \forall\ i\in I,\ x\in A_i \}.\]

Let \(X\) be a set, let \(A\) be a subset of \(X\), and let \(\{B_i\}_{i\in I}\) be an indexed collection of subsets, where \(I\) is an indexing set. Then we have

\(A\setminus \bigcap\limits_{i\in I}B_i = \bigcup\limits_{i\in I}(A\setminus B_i).\)

\(A\setminus \bigcup\limits_{i\in I}B_i = \bigcap\limits_{i\in I}(A\setminus B_i).\)

We will prove part a. and leave part b. as an exercise.

We know that \(x\in \bigcap\limits_{i\in I}B_i\) if and only if we have that \(x\in B_i\), for all \(i\in I\). Then \(x\not\in \bigcap\limits_{i\in I}B_i\) if and only if there exists an \(i\in I\) such that \(x\not\in B_i\). Now, suppose that \(x\in A\setminus \bigcap\limits_{i\in I}B_i\). Then \(x\in A\), and for some \(i\in I\), we have that \(x\not\in B_i\). Hence, for some \(i\in I\), we have that \(x\in A\setminus B_i\). Then \(x\in\bigcup\limits_{i\in I}(A\setminus B_i)\) which shows that \(A\setminus \bigcap\limits_{i\in I}B_i\,\subseteq\,\bigcup\limits_{i\in I}(A\setminus B_i).\)

Now, suppose that \(x\in \bigcup\limits_{i\in I}(A\setminus B_i).\) Hence, for some \(i\in I\), we have that \(x\in A\setminus B_i\). Then for some \(i\in I\), we have that \(x\in A\) and \(x\not\in B_i\). Since there exists some \(i\in I\) such that \(x\not\in B_i\), we have \(x\not\in \bigcap\limits_{i\in I}B_i\). Then \(x\in A\setminus \bigcap\limits_{i\in I}B_i\) which shows that \(\bigcup\limits_{i\in I}(A\setminus B_i)\,\subseteq\, A\setminus \bigcap\limits_{i\in I}B_i\). Summarising the above, we have that \(A\setminus \bigcap\limits_{i\in I}B_i\,=\,\bigcup\limits_{i\in I}(A\setminus B_i).\)□

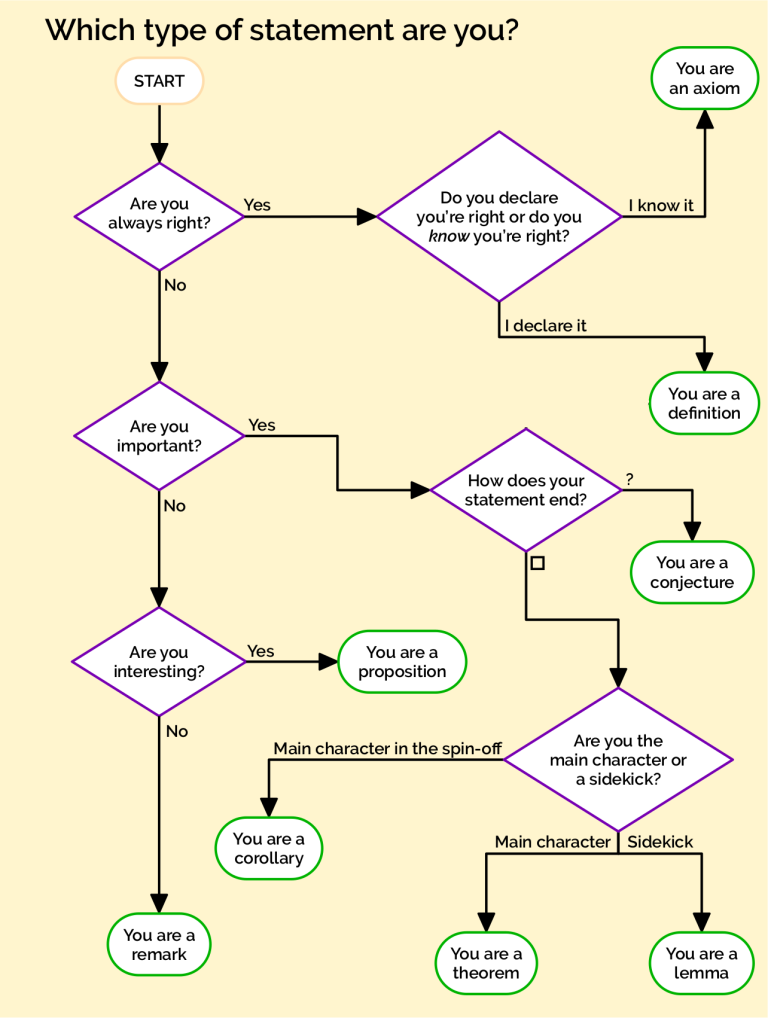

We finish this section with a brief side-note. How does one differentiate whether a statement is a definition, a theorem, a proposition, a lemma etc? The team at Chalkdust Magazine made the following flowchart which while is not meant to be serious, does reflect quite well how one can classify different statements. That is: a definition is a statement taken to be true; roughly speaking a proposition is an interesting but non-important result; while a theorem is an interesting, important main result; and a lemma is there to build up to a theorem. The original figure can be found at https://chalkdustmagazine.com/regulars/flowchart/which-type-of-statement-are-you/

Figure 2.1: What statement are you? Copyright Chalkdust Magazine, Issue 17.

3 The rationals are not enough

Now that we have a solid foundation of logic and abstract set notation, let us explore sets within \(\mathbb{Q}\). That is, let us look at subsets of the rationals. This will lead us to notice that irrational numbers exists, and hence exploring a new set called the reals, \(\mathbb{R}\).

3.1 The absolute value

Before we look at subsets of \(\mathbb{Q}\), we introduce the notion of absolute value.

For \(x\in \mathbb{Q}\), the absolute value or modulus of \(x\), denoted \(|x|\), is defined by \[|x| := \begin{cases}x &\text{ if } x\geq 0;\\ -x &\text{ if } x<0.\end{cases}\]

It is often helpful to think of the absolute value \(|x|\) as the distances between the point \(x\) and the origin \(0\). Likewise, \(|x-y|\) is the distance between the points \(x\) and \(y\).

For any \(x, y \in \mathbb{Q}\)

\(|x|\geq 0\) with \(|x| = 0\) if and only if \(x=0\);

\(|xy| = |x||y|\);

\(|x^2| = |x|^2 = x^2\).

Exercise

□

Statement Show that \(a^2+b^2\geq 2ab\) for any \(a,b \in \mathbb{Q}\).

Solution Let \(a,b\in\mathbb{Q}\). We have that \((a-b)^2\geq 0\), so [expanding the bracket] \(a^2-2ab+b^2\geq 0\). Rearranging, this gives \(a^2+b^2 \geq 2ab\).

For all \(x,y \in \mathbb{Q}\) we have \(|x+y|\leq |x|+|y|\).

We prove this by case by case analysis. First note that for all \(x\in\mathbb{Q}\) we have \(x\leq |x|\) and \(-x\leq |x|\). Let \(x,y \in \mathbb{Q}\).

Case 1: Suppose \(x\geq -y\) then \(x+y\geq 0\) and so \(|x+y| = x+y \leq |x|+|y|\).

Case 2: Suppose \(x< -y\) then \(x+y<0\) and so \(|x+y|=-(x+y)=-x+(-y)\leq |x|+|y|\).□

3.2 Bounds for sets

With this notion of absolute value, we can start asking whether a subset of \(\mathbb{Q}\) contains arbitrarily large or small elements.

Let \(A \subseteq \mathbb{Q}\) be non-empty. We that that \(A\) is:

bounded above (in \(\mathbb{Q}\)) by \(\alpha \in \mathbb{Q}\) if for all \(x\in A\), \(x\leq \alpha\);

bounded below (in \(\mathbb{Q}\)) by \(\alpha \in \mathbb{Q}\) if for all \(x\in A\), \(x\geq \alpha\);

bounded if it is bounded above and below;

If \(A\) is bounded above by \(\alpha\) and below by \(\beta\), then by setting \(\gamma = \max\{|\alpha|,|\beta|\}\) we have \(A\) is bounded by \(\gamma\), i.e., for all \(x\in A\), we have \(|x|\leq \gamma\).

Note that \(\alpha\) is far from unique. For example, take the set \(A = \left\{\frac{1}{n}:n\in \mathbb{Z}_+\right\}\). Then we can see that \(A\) is bounded above by \(1\), but it is also bounded above by \(2\) and by \(100\) etc.

Let \(A \subseteq \mathbb{Q}\) be non-empty. The least (or smallest) upper bound of \(A\) (in \(\mathbb{Q}\)) is \(\alpha \in \mathbb{Q}\) such that:

\(\alpha\) is an upper bound, i.e. for all \(x\in A\), \(x\leq \alpha\);

any rational number \(\beta\) less than \(\alpha\) is not an upper bound, i.e. for all \(\beta \in \mathbb{Q}\) with \(\beta < \alpha\), there exists \(x\in A\) with \(\beta<x\).

The greatest (or largest) lower bound of \(A\) (in \(\mathbb{Q}\)) is \(\alpha \in \mathbb{Q}\) such that:

\(\alpha\) is a lower bound, i.e. for all \(x\in A\), \(x\geq \alpha\);

any rational number \(\beta\) greater than \(\alpha\) is not a lower bound, i.e. for all \(\beta \in \mathbb{Q}\) with \(\beta > \alpha\), there exists \(x\in A\) with \(\beta>x\).

We use our work on negating statements to negate the above definition and say

\(\alpha \in \mathbb{Q}\) is not the least upper bound of \(A\) if:

there exists \(x\in A\) such that \(x>\alpha\) (\(\alpha\) is not an upper bound) or;

there exists \(\beta \in \mathbb{Q}\) with \(\beta < \alpha\) and for all \(x\in A\) we have \(x\leq \beta\) (there is an upper bound lower than \(\alpha\)).

Similarly, \(\alpha \in \mathbb{Q}\) is not the greatest lower bound of \(A\) if:

there exists \(x\in A\) such that \(x<\alpha\) (\(\alpha\) is not a lower bound) or;

there exists \(\beta \in \mathbb{Q}\) with \(\beta > \alpha\) and for all \(x\in A\) we have \(x\geq \beta\) (there is an lower bound greater than \(\alpha\)).

Let \(A = \left\{\frac{1}{n}:n\in \mathbb{Z}_+\right\}\). We show that \(1\) is the least upper bound of \(A\). As remarked before, we have \(1\) is an upper bound since if we take \(x \in A\) then \(x = \frac{1}{n}\) with \(n\in \mathbb{Z}_+\). In particular \(n\geq 1\), so \(x=\frac{1}{n}\leq \frac{1}{1}=1\).

We now show that \(1\) is the least upper bound by showing any number less than \(1\) is not an upper bound. Let \(\beta <1\). By taking \(n=1 \in \mathbb{Z}_+\), we see that \(1=\frac{1}{1} \in A\), hence \(\beta<1\) means \(\beta\) is not an upper bound. Hence \(1\) is the least upper bound.

We show that \(0\) is the greatest lower bound. First we show \(0\) is a lower bound. Let \(x \in A\) then \(x = \frac{1}{n}\) with \(n\in \mathbb{Z}_+\). In particular \(n > 0\), so \(x=\frac{1}{n}> 0\).

We now show that \(0\) is the greatest lower bound by showing any number greater than \(0\) is not a lower bound. Let \(\beta = \frac{a}{b} >0\) [so \(a,b \in \mathbb{Z}_+\)]. Set \(n=b+1 \in \mathbb{Z}\), so \(\frac{1}{n}\in A\). Then \[\frac{1}{n}=\frac{1}{b+1}<\frac{1}{b}\leq a\frac{1}{b} = \frac{a}{b} = \beta.\]

So we have found \(x \in A\) such that \(x<\beta\), so \(\beta\) is not a lower bound.

The argument for \(\beta\) not being a lower bound above seems to come from nowhere. Sometimes it is hard to see where to start a proof, so mathematician first do scratch work. This is the rough working we do as we explore different avenues and arguments, but that is not included in the final proof (so to keep the proof clean and easy to understand). The scratch work for the above proof of might have been along the lines:

We want to find \(n \in \mathbb{Z}_+\) such that \(\beta =\frac{a}{b}>\frac{1}{n}\). Rearranging, this gives \(an>b\) (as both \(n\) and \(b\) are positive), so \(n>\frac{b}{a}\). Since \(a\geq 1\), we have \(\frac{b}{a}\leq b\). Picking \(n=b+1\) would satisfy \(n=b+1>b\geq\frac{b}{a}\).□

Note that in the example above the greatest lower bound of \(A\) is not in \(A\) itself [if \(0\in A\), then there exists \(n\in \mathbb{Z}_+\) such that \(0=\frac{1}{n}\), i.e. \(0=1\), which is a contradiction.]. If a set is a bounded subset of \(\mathbb{Z}\), then we get a different story.

Any non-empty subset of \(\mathbb{Z}_{\geq 0}\) contains a minimal element.

Exercise to be done after we have introduced the completeness axiom.

□

Let \(A \subseteq \mathbb{Z}\) be non-empty. If \(A\) is bounded below, it contains a minimal element (i.e. its greatest lower bound is in \(A\)). If it is bounded above, it contains a maximal element (i.e., its least upper bound is in \(A\)).

As we saw above with the set \(A = \left\{\frac{1}{n}:n\in \mathbb{Z}_+\right\}\), this is not the case for general subsets of \(\mathbb{Q}\). However, it is even worst, because a general subset of \(\mathbb{Q}\) might be bounded and not have a greatest lower bound or least upper bound in \(\mathbb{Q}\), as we will see in the next section.

3.3 The irrationals and the reals

We first show that there exists irrational numbers (i.e., numbers that are not rational) and show that this means that there exists subsets of \(\mathbb{Q}\) whose lower upper bound is not rational (and hence we need a bigger number system).

There does not exists \(x\in \mathbb{Q}\) such that \(x^2=2\).

For the sake of a contradiction, suppose there exists \(x =\frac{a}{b} \in \mathbb{Q}\) such that \(x^2 =2\). Without loss of generality, since \(x^2=(-x)^2\) and \(0^2=0\), we can assume \(x>0\), i.e. \(a,b \in \mathbb{Z}_+\). Furthermore, if \(0<x\leq 1\), then \(x^2\leq 1<2\), and if \(x\geq 2\) then \(x^2\geq 4>2\). So we assume that \(1<x<2\).

Let \(A = \{r \in \mathbb{Z}_+:rx \in \mathbb{Z}\} \subseteq \mathbb{Z}\). Note that \(A\) is non-empty since, \(bx = a \in \mathbb{Z}_+\), so \(b \in A\). We have that \(A\) is bounded below by \(0\), so by the Well Ordering Principle, \(A\) contains a minimal element, call if \(m\). We will prove that \(m\) is not minimal by finding \(0<m_1<m\) with \(m\in A\). This will be a contradiction to the Well Ordering Principle.

Define \(m_1=m(x-1)= mx-m \in \mathbb{Z}\). Since \(1<x\), we have \(x-1>0\) so \(m_1=m(x-1)>0\). Similarly, since \(x<2\) we have \(x-1<1\) so \(m_1=m(x-1)<m\). Hence \(0<m_1<m\). Now \[m_1x=m(x-1)x=mx^2-mx = 2m-mx \in \mathbb{Z}.\] Hence \(m_1 \in A\) and \(0<m_1<m\) which is a contradiction.□

Since irrational numbers exists if we restrict ourselves to only using rational number then there are many unanswerable questions. From simple geometrical problems (what is the length of the diagonal of a square with length side 1), to the fact that there are some bounded set of rationals which do not have a rational least upper bower.

Consider the set \[A = \{x\in \mathbb{Q}:x^2<2\}.\] We have \(A\) is bounded above (for example, by \(2\) or \(10\)), but we show it does not have a least upper bound in the rational.

Let \(\alpha =\frac{a}{b} \in \mathbb{Q}\) be the least upper bound of \(A\) and note we can assume \(\alpha>0\). We either have \(\alpha^2<2\), \(\alpha^2=2\) or \(\alpha^2>2\). We will show that all three of these cases leads to a contradiction.

Case 1: \(\alpha^2<2\). We show that in this case \(\alpha\) is not an upper bound by finding \(x \in A\) such that \(\alpha<x\).

[Scratch work: We look for \(c\in \mathbb{Z}\) such that \(\left(\alpha+\frac{1}{bc}\right)^2=\left(\frac{ac+1}{bc}\right)^2<2\). Rearranging, we get \(a^2c^2+2ac+1<2b^2c^2\), then \(2ac+1<c^2(2b^2-a^2)\). Since \(\alpha^2<2\), we know \(a^2<2b^2\), so \(2b^2-a^2>0\). Since \(2b^2-a^2 \in \mathbb{Z}\), we have \(2b^2-a^2\geq 1\), so \(c^2(2b^2-a^2)\geq c^2\). So to find \(c\) such that \(2ac+1<c^2\), i.e. \(0<c^2-2ac-1\), i.e. by completing the square \(0<(c-a)^2-(a^2+1)\). To simplify our life, let us take \(c\) to be a multiple of \(a\), say \(ka\), then we are looking for \(0<(k-1)^2a^2-a^2-1 = ((k-1)^2-1)a^2-1\), i.e. \((k-1)^2-1)>2\), so \(k=3\) should work, i.e. \(c=3a\).]

Let \(x = \alpha+\frac{1}{3ab}>\alpha\). We prove that \(x\in A\) (and hence \(\alpha\) is not an upper bound) by showing \(x^2<2\). We have \[\begin{align*} x^2 &= \left(\frac{3a^2+1}{3ab}\right)^2 &\\ &= \frac{9a^4+6a^2+1}{9a^2b^2} &\\ &< \frac{9a^4+9a^2}{9b^2a^2} & \text{ (since $6a^2+1<9a^2$ )}\\ &=\frac{a^2+1}{b^2}& \\ &< \frac{a^2+(2b^2-a^2)}{b^2}& \text{ (since $a^2<2b^2$)}\\ &<2.& \end{align*}\]

Case 2: \(\alpha^2=2\). This is a contradiction to Theorem 3.8

Case 3: \(\alpha^2>2\). We leave this as an exercise. [Hint: Find appropriate \(c\) so that \(x = \alpha - \frac{1}{bc} \in \mathbb{Q}\) is such that \(x^2>2\). Argue that \(x\) is an upper bound for \(A\) and \(x<\alpha\) to conclude \(\alpha\) is not the least upper bound. ]

Notice that in the above we made sure to have \(x>\alpha\) by setting \(x = \alpha+\epsilon\) where \(\epsilon>0\). By doing so, we reduced the numbers of properties we needed \(x\) to have.

□

We use this as a motivation to introduce the real numbers.

The set of real numbers, denoted \(\mathbb{R}\), equipped with addition \(+\), multiplication \(\cdot\) and the order relation \(<\) satisfies axioms (A1) to (A11), (O1) to (O4) and the Completeness Axiom

Completeness Axiom: Every non-empty subset \(A\) of \(\mathbb{R}\) which is bounded above has a least upper bound.

It can be shown that there is exactly one quadruple \((\mathbb{R};+;\cdot;<)\) which satisfies these properties - up to isomorphism. We do not discuss the notion of isomorphism in this context here (although we will later look at it in the context of groups) save to remark that any two real number systems are in bijection (we will define this later) and preserves certain properties. This allows us to speak of the real numbers.

There are several ways of constructing the real number system from the rational numbers. One option is to use Dedekind cuts. Another is to define the real numbers as equivalence classes of Cauchy sequences of rational numbers (you will explore Cauchy sequences in Analysis).You may continue to imagine the real numbers as a number line as you did pre-university.

The definition of the absolute value is the same for real numbers as for the rational numbers, as is the notion of bounded sets (and therefore all the results in the previous section still holds for \(\mathbb{R}\)).

We can use the absolute value to define a distance or metric on \(\mathbb{R}\). To do so we define \[d(x,y) = |x-y|\] for any two points \(x,y\in \mathbb{R}\). This distance has the following properties, for any \(x,y,z \in \mathbb{R}\):

\(d(x,y)\geq 0\) and \(d(x,y)=0\) if and only if \(x=y\);

\(d(x,y)=d(y,x)\);

\(d(x,y)\leq d(x,z)+d(z,y)\).

We deduce two important result about \(\mathbb{R}\).

Every non-empty subset \(A\) of \(\mathbb{R}\) bounded below has a greatest lower bound.

Let \(A\subset \mathbb{R}\) be non-empty and bounded below. Let \(c\in \mathbb{R}\) be a lower bound. Define the set \[B=\{-x:x\in A\}.\] Let \(x\in B\), so \(-x\in A\). Since \(c\) is a lower bound, \(-x\geq c\), i.e. \(x\leq -c\). So \(-c\) is an upper bound for \(B\), and \(B\) is non-empty [since \(A\) is non-empty]. By the Completeness Axiom \(B\) has a least upper bound, \(u\in \mathbb{R}\). Let \(\ell = -u\). We prove that \(\ell\) is the greatest lower bound for \(A\).

We first show \(\ell\) is a lower bound. Let \(x\in A\), then \(-x\in B\) so \(-x\leq u\). Hence \(x\geq -u=\ell\).

We now show \(\ell\) is the greatest lower bound by showing any real number bigger than \(\ell\) is not a lower bound. Let \(y\in \mathbb{R}\) be such that \(y>\ell\), so \(-y<-\ell=u\). Now \(-y\) is not an upper bound for \(B\) since \(u\) is the least upper bound of \(B\). So by definition, there exists \(b\in B\) such that \(b>-y\), i.e. \(-b<y\). Since \(b\in B\), we have \(-b\in A\). Hence \(y\) is not a lower bound for \(a\).

So \(\ell\) is the greatest lower bound for \(A\).□

For any \(x\in \mathbb{R}\) there exists \(n\in \mathbb{Z}_+\) such that \(x\leq n\).

We prove this by contradiction.

\(\big[\neg(\forall x \in \mathbb{R}, \exists n\in \mathbb{Z}_+\) such that \(x\leq n\)) \(\iff \exists x \in \mathbb{R}\) such that \(\forall n \in \mathbb{Z}_+, x>n \big]\).

Suppose there exists \(x\in \mathbb{R}\) such that \(n<x\) for all \(n\in \mathbb{Z}_+\). In particular, this means \(\mathbb{Z}_+\subseteq \mathbb{R}\) is bounded above. So by the completeness axiom, \(\mathbb{Z}_+\) has a least upper bound \(\alpha\) [in \(\mathbb{R}\)]. Since \(\alpha\) is the least upper bound, \(\alpha-\frac{1}{2}\) is not an upper bound, i.e. there exists \(b \in \mathbb{Z}_+\) such that \(\alpha-\frac{1}{2}<b<\alpha\). But then, \(b+1\in \mathbb{Z}\) and \(\alpha+\frac{1}{2}<b+1<\alpha+1\) so \(\alpha<b\). This contradicts the fact \(\alpha\) is an upper bound for \(\mathbb{Z}_+\).□

The above property is named after Archimedes of Syracuse (Sicilian/Italian mathematician, 287BC - 212 BC) although when Archimedes wrote down this theorem, he credited Eudoxus of Cnidus (Turkish mathematician and astronomer, 408BC - 355BC). It was Otto Stolz (Austrian mathematician, 1842 - 1905) who coined this property - partly because he studied fields where this property is not true and therefore needed to coin a term to distinguish between what is now know as Archimedean fields and non-Archimedean fields.

We finish this section by introducing notation for some common subsets of \(\mathbb{R}\).

Let \(a,b \in \mathbb{R}\) are such that \(a\leq b\), we denote:

the open interval of \(a,b\) by \((a,b)=\{x\in \mathbb{R}:a<x<b\}\);

the closed interval of \(a,b\) by \([a,b] = \{x \in \mathbb{R}:a\leq x\leq b\}\).

\([a,b) = \{x \in \mathbb{R}: a\leq x<b\}\); \((a,b] = \{x\in \mathbb{R}:a<x \leq b\}\);

\((a,\infty) = \{x \in \mathbb{R}:a<x\}\); \([a,\infty) = \{x\in \mathbb{R}:a\leq x\}\);

\((-\infty,b) = \{x \in \mathbb{R}: x<b\}\); \((-\infty,b] = \{x \in \mathbb{R}: x\leq b\}\).

By convention we have \((a,a)=[a,a)=(a,a]=\emptyset\), while \([a,a]=\{a\}\).

3.4 The supremum and infimum of a set.

Since \(\mathbb{R}\) is complete, i.e., every bounded set has a least upper bound and greatest lower bound, we introduce the notion of supremum and infimum of a set.

Let \(A\subseteq \mathbb{R}\). We define the supremum of \(A\), denoted \(\sup(A)\) as follows:

If \(A=\emptyset\), then \(\sup(A) = -\infty\).

If \(A\) is non-empty and is bounded above [i.e., there exists \(\alpha \in \mathbb{R}\) such that for all \(x\in A\), \(x\leq \alpha\)] then \(\sup(A)\) is the least upper bound of \(A\) (which we know exists by the Completeness Axiom)

If \(A\) is non-empty and is not bounded above [i.e., for all \(\alpha \in \mathbb{R}\), there exists \(x\in A\) such that \(x>\alpha\)] then \(\sup(A)= +\infty\).

Let \(A\subseteq \mathbb{R}\). We define the infimum of \(A\), denoted \(\inf(A)\) as follows:

If \(A=\emptyset\), then \(\inf(A) = +\infty\).

If \(A\) is non-empty and is bounded below [i.e., there exists \(\alpha \in \mathbb{R}\) such that for all \(x\in A\), \(x\geq \alpha\)] then \(\inf(A)\) is the greatest lower bound of \(A\) (which we know exists by the Completeness Axiom)

If \(A\) is non-empty and is not bounded below [i.e., for all \(\alpha \in \mathbb{R}\), there exists \(x\in A\) such that \(x<\alpha\)] then \(\sup(A) = -\infty\).

Supremum comes from the Latin super meaning “over, above” while infimum comes from the Latin inferus meaning “below, underneath, lower” (these words gave rise to words like superior and inferior).

Let \(A = \left\{\frac{1}{n}:n \in \mathbb{Z}_+\right\}\). We have already seen that \(\sup(A) = 1\) (in \(A\)) and \(\inf(A)= 0\) (not in \(A\)).

Let \(a,b \in \mathbb{R}\) with \(a<b\). Then

\(\sup((a,b)) = \sup((a,b]) = \sup([a,b))= \sup([a,b]) = b\);

\(\inf((a,b)) = \inf((a,b]) = \inf([a,b)) = \inf([a,b]) = a\);

\(\sup((a,\infty)) = \sup([a,\infty)) = +\infty\);

\(\inf((-\infty,a)) = \inf((-\infty,a]) = -\infty\);

\(\sup((-\infty,a)) = \sup((-\infty,a]) = \inf([a,\infty)) = \inf((a,\infty)) = a\).

We will only prove \(\sup((a,b)) = b\) and \(\sup((a,\infty)) = +\infty\) and leave the rest as the arguments are very similar.

Let \(a,b \in \mathbb{R}\) with \(a<b\), we will show \(\sup((a,b)) = b\). [Note that \((a,b)\) is non-empty (as \(a\neq b\)) and it is bounded above.]

First we show that \(b\) is an upper bound. Indeed, let \(x\in (a,b)\) then by definition \(a<x<b\) so \(x\leq b\) as required.

Next we show that \(b\) is the least upper bound [by showing any real number less than \(b\) is not an upper bound]. Let \(y\in \mathbb{R}\) with \(y<b\).Suppose \(a<y\) (i.e. \(y\in (a,b)\)) and let \(x=\frac{b+y}{2}=y+\frac{b-y}{2}=b-\frac{b-y}{2}\). Note that \(x<b\) and \(x>y>a\), so \(x\in (a,b)\). Since \(x>y\), we have \(y\) is not an upper bound. Suppose \(y\leq a\) (i.e. \(y\notin(a,b)\)) and let \(x=\frac{a+b}{2}=a+\frac{b-a}{2}=b-\frac{b-a}{2}\). Then \(x<b\) and \(x>a\leq y\), so \(x\in(a,b)\). Since \(x>y\), we have \(y\) is not an upper bound. In either cases, \(y\) is not an upper bound, so \(b\) is the least upper bound.

Let \(a \in \mathbb{R}\), we show that \(\sup((a,\infty))=+\infty\). [Note that \((a,\infty)\) is non-empty, so we want to show it is not bounded above].

Suppose for contradiction that \((a,\infty)\) is bounded above [i.e., \(\sup((a,\infty))\neq +\infty\)]. Let \(u \in \mathbb{R}\) be an upper bound for \((a,\infty)\). Set \(x=|a|+|u|+1 \in \mathbb{R}\). Note that \(x>|a|\geq a\), so \(x\in (a,\infty)\). Hence \(u\) being an upper bound means \(x\leq u\), however \(x>|u|\geq u\). This is a contradiction.□

Problem: Let \[A=\left\{\frac{n^2+1}{|n+1/2|}:n\in\mathbb{Z}\right\}.\] Show that \(\sup(B) = +\infty\) and \(\inf(B)=4/3\).

Solution: Let us first look at the supremum. [The question is asking us to show \(B\) is not bounded above.] Let \(x\in \mathbb{R}\). By the Archimedean Principle, choose \(n\in\mathbb{Z}_+ \subseteq \mathbb{Z}\) such that \(n>2x\) [Scratch work missing to work out why we choose this particular \(n\)]. Define \(a = \frac{n^2+1}{n+1/2} \in A\). Then \[\begin{align*} a &= n\frac{n+\frac{1}{n}}{n+\frac{1}{2}} &\\ &\geq n\frac{n}{n+\frac{1}{2}} &\text{as $x+\frac{1}{n}\geq n$}\\ &\geq n\frac{n}{2n} & \text{as $n+\frac{1}{2}\leq 2n$} \\ &= \frac{n}{2} &\\ &> x&. \end{align*}\] So we have found \(a\in A\) such that \(a>x\), so \(A\) is not bounded above. Hence \(\sup(A) = +\infty\).

We now look at the infimum. We first show that \(4/3\) is a lower bound. Let \(a=\frac{n^2+1}{|n+1/2|}\) for some \(n \in \mathbb{Z}\), so \(a\in A\). Consider \[\begin{align*} n^2+1-(4/3)|n+1/2| &\geq n^2+1-(4/3)(|n|+1/2) &\text{ by the triangle inequality}\\ &=n^2-(4/3)|n|+1/3& \\ &=(|n|-2/3)^2 - 1/9 & \text{ by completing the square } \\ &\geq 0& \text{ since for all $n\in \mathbb{Z}$, $(|n|-2/3)^2\geq 1/9$}. \end{align*}\] Therefore \(n^2+1\geq (4/3)|n+1/2|\), i.e. \(a =\frac{n^2+1}{|n+1/2|}\geq 4/3\) as required.

We next show that \(4/3\) is the greatest lower bound for \(A\) [by showing any number greater than it is not a lower bound]. First note that by setting \(n=1\), we have \[\frac{n^2+1}{|n+\frac{1}{2}|} = \frac{2}{3/2} = \frac{4}{3}.\] So \(4/3\in A\). Thus, no value \(y>4/3\) can be a lower bound for \(B\). This shows that \(4/3\) is the greatest lower bound. Hence \(\inf(B) = 4/3\).

The next example is more theoretical.

Problem: Let \(A\) and \(B\) be bounded non-empty subsets of \(\mathbb{R}\). Define the sum set as \[A+B = \{a+b:a\in A, b\in B\}.\] Show that \(\sup(A+B) = \sup(A)+\sup(B)\).

Solution: Let \(\alpha =\sup(A)\) and \(\beta \sup(B)\). Note that \(\alpha, \beta \in \mathbb{R}\) as both \(A\) and \(B\) are bounded. We show \(\alpha+\beta = \sup(A+B)\).

We first show \(\alpha+\beta\) is an upper bound for \(A+B\). Let \(c\in A+B\), by definition, there exists \(a\in A\) and \(b\in B\) such that \(c=a+b\). Now \(a\leq \alpha\) and \(b\leq \beta\), so \(c=a+b\leq \alpha+\beta.\)

We now show \(\alpha+\beta\) is the least upper bound for \(A+B\). Let \(\epsilon>0\), we show that \(\alpha+\beta-\epsilon\) is not an upper bound. Since \(\alpha\) is the least upper bound of \(A\), then \(\alpha-\epsilon/2\) is not an upper bound, so there exists \(a\in A\) such that \(a>\alpha-\epsilon/2\). Similarly, there exists \(b\in B\) such that \(b>\beta-\epsilon/2\). Define \(c=a+b \in A+B\). Then \[c=a+b>(\alpha-\epsilon/2)+(\beta-\epsilon/2)=\alpha+\beta-\epsilon.\] This shows for any \(\epsilon>0\), \(\alpha+\beta-\epsilon\) is not an upper bound for \(A+B\). So \(\alpha+\beta\) is the least upper bound for \(A+B\), hence the supremum of \(A+B\).

Sometimes, instead of showing something is true for all \(y>x\), it is easier to show it is true for all \(x+\epsilon\) where \(\epsilon>0\). We can do this because if \(y>x\), then setting \(epsilon = y-x>0\), we see that \(y=x+\epsilon\).

□

Let \(A\subseteq \mathbb{R}\). We say that \(A\) has a maximum if \(\sup(A)\in A\). In this case we write \(\max (A)\) to stand for the element \(a\in A\) with \(a = \sup(A)\).

Similarly we say that \(A\) has a minimum if \(\inf(A)\in A\). In this case we write \(\min (A)\) to stand for the element \(a\in A\) with \(a = \inf(A)\).Note that a set may not have a minimum or a maximum.

Let \[A=\left\{\frac{n^2+1}{|n+1/2|}:n\in\mathbb{Z}\right\}.\] We have seen \(\inf(A)=4/3\in A\), so \(\min(A)=4/3\). However \(A\) does not have a maximum as \(\sup(A)=+\infty \not\in A\) (as \(\infty \notin \mathbb{R}\) and \(A\subseteq \mathbb{R}\)).

Let \(A = \left\{\frac{1}{n}: n \in \mathbb{Z}_+\right\}\). Then we have seen that \(\sup(A)=1\) and \(1\in A\), so \(\max(A)=1\). However it does not have a minimum as we have seen \(\inf(A)=0\) and \(0\notin A\).

4 Proof by induction

We have seen several type of proofs so far:

direct proof (sometimes known as deductive proof);

proof by case analysis;

proof by contradiction;

proof using the contrapositive.

In this chapter, we introduce a new type of proof, called proof by induction.

Let \(n_0 \in \mathbb{Z}_+\) and let \(P(n)\) be a statement for \(n\geq n_0\). A proof by induction is where one prove that:

\(P(n_0)\) is true, and

\(P(n) \implies P(n+1)\) for all \(n\geq n_0\).

□

The word induction comes from the Latin in and ductus meaning “to lead”. An inductive proof is one where a starting case leads into the next case and so on.

(In contrast, deduction has the prefix de meaning “down from”. When we do a proof by deduction, we start from certain rules and truths that “lead down” to specific things that must follow as a consequence.)Statement: Show that, for every \(n\in\mathbb{Z}_+\), we have \[1+2+3+\cdots+n=\sum_{i=1}^n=\frac{n(n+1)}{2}.\]

Proof: For \(n\in\mathbb{Z}_+\), let \(P(n)\) be the following statement. \[1+2+3+\cdots+n=\frac{n(n+1)}{2}.\] We will show that \(P(n)\) is a true statement, for all \(n\in\mathbb{Z}_+\) by giving a proof by induction.

First, let us consider \(P(1)\). We have \(1=\frac{1(1+1)}{2}\), hence, \(P(1)\) holds.

Now, suppose that \(P(k)\) is true for some positive integer \(k\), that is

\[1+2+3+\cdots+k=\frac{k(k+1)}{2}.\]

Then

\[\begin{align*}

1+2+3+\cdots+k+(k+1)&=\frac{k(k+1)}{2}+(k+1)\\

&=\frac{k(k+1)}{2}+\frac{2(k+1)}{2}\\

&=\frac{k^2+3k+2}{2}\\

&=\frac{(k+1)(k+2)}{2}.

\end{align*}\]

This shows that if \(P(k)\) is true then \(P(k+1)\) is true. By the Principle of Mathematical Induction, it follows that \(P(n)\) is true for all natural numbers \(n\).

It is not enough to show \(P(n)\implies P(n+1)\), we need to also show \(P(n_0)\) holds. As we saw in the above example, we often have that \(n_0=1\). However, this is not always the case. The following example highlights these two points.

Let \(P(n)\) be the statement \(n^2\leq 2^{n-1}\). To highlight the importance of the base case, let us first show that for \(n\geq 3\) we have \(P(n) \implies P(n+1)\).

Suppose that \(P(k)\) is a true statement for some natural number \(k\geq 3\), that is \(k^2\leq 2^{k-1}\).

ThenIf we set \(n_0=1\), we do have \(P(1)\) holds (since \(1\leq 1\)), but have not proven \(P(1) \implies P(2)\) (since \(1\not\geq 3\)). In fact, we can not prove \(P(1) \implies P(2)\) since \(P(2)\) is false (\(2^2\not\leq 2^1\)).

Since we have shown that for \(n\geq 3\) we have \(P(n) \implies P(n+1)\), we might want to set \(n_0=3\). However, \(P(3)\) is also false since \(3^2\not\leq 2^2\). In fact, we can check that \(P(3), P(4), P(5)\) and \(P(6)\) are all false. However, \(P(7)\) is true since we have that \(7^2=49\leq 64 = 2^6\).

Therefore, we have \(n_0=7\) and by the Principle of mathematical induction, we have shown that \(n^2\leq 2^{n-1}\) for all \(n\in\mathbb{Z}_+\) such that \(n\geq 7\).Sometimes, the principle of induction is not strong enough, either because we need more than one base case, or because to prove \(P(n)\) is true we need to know \(P(m)\) is true for some unknown \(m<n\). This is where we can use the strong principle of mathematical induction.

Let \(n_0 \in \mathbb{Z}_+\) and let \(P(n)\) be a statement for \(n\geq n_0\). A proof by strong induction is where one prove that:

\(P(n_0), P(n_0+1),\dots P(n_0+k)\) is true (for some \(k\geq 0\)), and

for all \(n\geq n_0+k\), show that \(P(i)\) is true for all \(i\leq n \implies P(n+1)\) is true.

□

Statement: Suppose that \(x_1 = 3\) and \(x_2 = 5\) and for \(n \geq 3\), define \(x_n = 3x_{n-1} -2x_{n-2}\). Show that \(x_n = 2^n + 1\), for all \(n\in\mathbb{Z}_+\).

Proof: Let \(P(n)\) be the statement “\(x_n= 2^n+1\)”. We will show that \(P(n)\) is a true statement, for all \(n\in\mathbb{Z}_+\), by giving a proof by induction. First, we consider our base cases \(n_0=1\) and \(n_{0+1}=2\). We have the given initial conditions \(x_1=3\) and \(x_2=5\). Using the formula \(x_n=2^n+1\), we indeed have \(x_1= 2^{1}+1=3\) and \(x_2= 2^2+1= 5\).Therefore, \(P(1)\) and \(P(2)\) hold.

Let \(n\in\mathbb{Z}_+\) and suppose for all \(i\in \mathbb{Z}_+\) such that \(i \leq n\) we have \(P(n)\) holds. Then by our assumption \(x_{n-1}= 2^{n-1}+1\) and \(x_n = 2^n +1\). We have: This shows that if \(P(i)\) is true, for \(1\leq i\leq n\), then \(P(n+1)\) is true. By the Strong Principle of Mathematical Induction, it follows that \(P(n)\) is true for all natural numbers \(n\). \(\square\)Statement: Show that every natural number can be written as the sum of distinct powers of \(2\). That is for all \(n\in\mathbb{Z}_+\), we can write \(n=2^{a_1}+2^{a_2}+\dots+2^{a_r}\) with \(a_i \in \mathbb{Z}\), \(a_i\geq 0\), and \(a_i\neq a_j\) if \(i\neq j\).

Proof: Let \(P(n)\) be the statements “there exists \(a_1,\dots a_r \in \mathbb{Z}\) such that \(a_i\geq0\), \(a_i\neq a_j\) if \(i\neq j\) and \(n=2^{a_1}+2^{a_2}+\dots+2^{a_r}\)”. We check that our base case \(n_0=1\) is true. Indeed \(1=2^0\) so \(P(1)\) holds.

Let \(n\in\mathbb{Z}_+\) and suppose for all \(i\in \mathbb{Z}_+\) such that \(i \leq n\) we have \(P(n)\) holds. Consider \(A = \{n+1-2^\ell: \ell\in\mathbb{Z}_{>0} \wedge n+1-2^\ell\geq 0\}\). We note that \(A \subseteq \mathbb{Z}_{\geq 0}\) by definition. Taking \(\ell = 1\), we see that \(n-1\in A\), so \(A \neq \emptyset\). So, by the Well Ordering Principle, \(A\) has a minimal element, call it \(m\). If \(m=0\) then \(n+1 = 2^\ell\) and \(P(n+1)\) holds. If \(m\neq 0\), then since \(P(m)\) holds, we have there exists \(a_1,\dots a_r \in \mathbb{Z}\) such that \(a_i\geq0\), \(a_i\neq a_j\) if \(i\neq j\) and \(m=2^{a_1}+2^{a_2}+\dots+2^{a_r}\). Then \(n+1 = 2^\ell+2^{a_1}+2^{a_2}+\dots+2^{a_r}\), so we just need to show \(\ell \neq a_i\) for all \(i\). For a contradiction, suppose \(\ell = a_i\) for some \(i\). Then \(n+1 \geq 2^\ell+2^\ell = 2^{\ell+1}\), so \(0\leq n+1-2^{\ell+1}<m\) which contradicts the definition of \(m\). Therefore the powers are distinct and \(P(n+1)\) holds.

Therefore, by the principle of strong induction, we have showed that every natural number can be written as the sum of distinct powers of \(2\).

A proof by induction is a special case of a proof by strong induction (taking \(k=0\))! We present these two ideas separately as it is easier to understand induction before understanding strong induction. However, most mathematician will say “induction” to mean both induction and strong induction and do not distinguish between the two (so feel free to also not distinguish between the two).

5 Studying the integers

The integers, \(\mathbb{Z}\), is an interesting set as unlike \(\mathbb{Q}\) or \(\mathbb{R}\), we can not divide. This restriction bring a lot of interesting properties that we will now study in more details. (The Well Ordering Principle also sets \(\mathbb{Z}\) apart from \(\mathbb{Q}\) and \(\mathbb{R}\) and we’ll also make use of that principle.)

5.1 Greatest common divisor

While we can not do division in general, there are cases when we can divide \(b \in \mathbb{Z}\) by \(a \in \mathbb{Z}\).

Notice that if we tried to extend this definition into \(\mathbb{Q}\), we will find that for all \(a\in\mathbb{Q}\setminus\{0\}\), \(b\in \mathbb{Q}\) we have \(a\mid b\).

Note that if \(b\neq 0\) then \(a\mid b\) implies \(|a|\leq |b|\).

Let \(a,b \in \mathbb{Z}\) with \(a\neq 0\). Then there exists a unique \(q,r\in \mathbb{Z}\) such that \(b=aq+r\) and \(0\le r<|a|\).

We call \(q\) the quotient and \(r\) the remainder

Let \(A = \{b-ak: k \in \mathbb{Z} \wedge b-ak \in \mathbb{Z}_{\geq 0}\}\). By definition \(A\subseteq \mathbb{Z}_{\geq 0}\). If \(b\geq0\), taking \(k=0\) gives \(b\in A\). If \(b<0\), taking \(k=a\cdot b\) gives \(b-a^2b = b(1-a^2) \in A\), since \(b<0\) and \((1-a^2)\leq 0\). So \(A\) is non-empty, and contains a least element. Let this element be \(r\) and the corresponding \(k\) be \(q\). Since \(r\in A\), we know \(r\geq 0\). We show \(r<|a|\) by contradiction. Suppose \(r\geq |a|\) and consider \(r>r-|a|\geq 0\). Note that \(r-|a| = (b-ak)-|a| =b-(k\pm 1)a \in A\), which contradicts the minimality of \(r\). Hence \(r<a\) as required.

It remains to show that \(r\) and \(q\) are unique. For the sake of a contradiction, suppose there exists \(q_1,q_2,r_1,r_2 \in \mathbb{Z}\) with \(0\leq r_1 < r_2 <|a|\) (without loss of generality, we assume \(r_1<r_2\) as they are not equal) and \(b = q_ia+r_i\) for \(i=1,2\). Then \(q_1a+r_1 = q_2a+r_2\) means \(r_2-r_1 = q_1a-q_2a = a(q_1-q_2)\). Since \(q_1,q_2\in \mathbb{Z}\), we have \(q_1-q_2 \in \mathbb{Z}\), so \(a|(r_2-r_1)\), i.e. \((r_2-r_1) \in a\mathbb{Z}\). But \(0<r_2-r_1 < |a|\) and there are no integers between \(0\) and \(|a|\) which is divisible by \(a\). This is a contradiction to our assumption. Hence \(r_1=r_2\), and we deduce that \(q_1=q_2\).□

Note that the division theorem shows that \(a|b\) if and only if the remainder is equal to \(0\).

Because we have a notion of division, the following definition is natural.

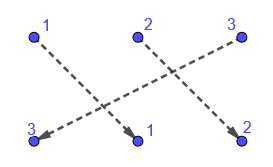

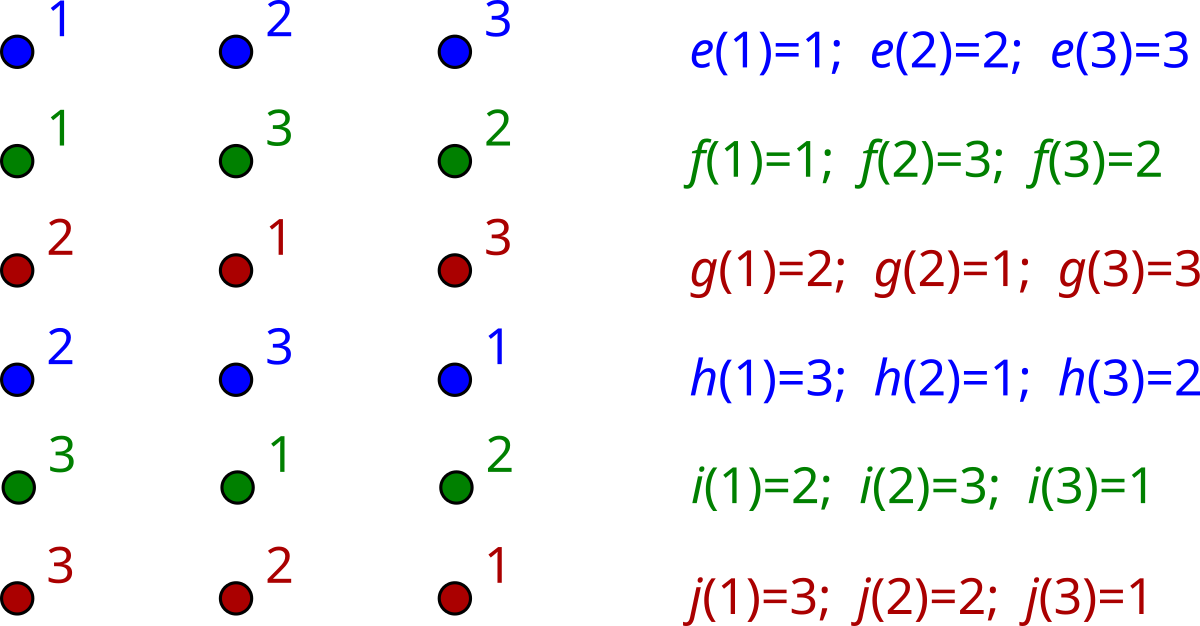

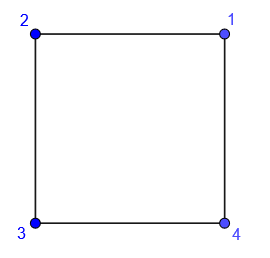

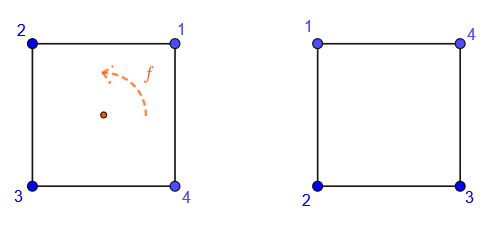

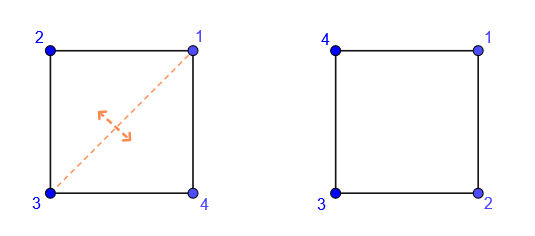

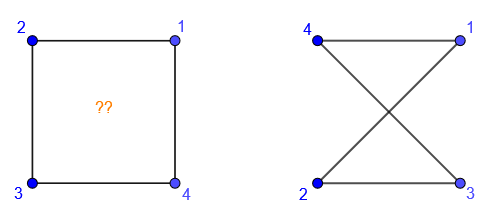

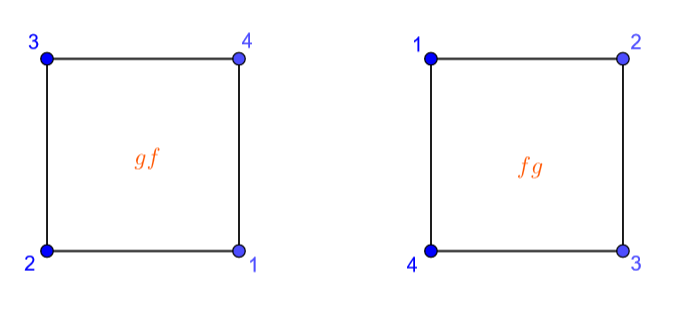

Note that \(1\) is always a common divisor of \(a\) and \(b\), and if \(a\not=0\), no integer larger than \(|a|\) can be a common divisor of \(a\) and \(b\).