3 Linear Probability Models (R)

3.1 Lab Overview

Today you will be learning the first regression method you can use to predict a binary outcome. This web page includes a more detailed explanation of Linear Probability Models in r and a script file you will execute to learn the basics of running this new model. The last section of the script will ask you to apply the code you’ve learned with a simple example.

Research Question: What characteristics of passengers are associated with survival on the Titanic?

Lab Files

Data:

Download titanic_df.csv

Script file:

Download lab1_LPM.r

Script with answers to application question:

Download lab1_LPM_w.answers.r

Packages for this lab:

You may need to install packages that you have never used before with install.packages("packagename")

# Basic data cleaning and visualization packages

library(tidyverse)

library(ggplot2)

# To plot predicted values (aka margins)

library(ggeffects)

# To calcuate robust standard errors

library(lmtest)

library(sandwich)3.2 What is a Linear Probability Model (LPM)?

First, let’s review some of the basic characteristics of a Linear Probability Model (LPM):

LPM uses a normal OLS linear regression (ordinary least squares), but with a binary outcome rather than a continuous outcome.

A binary outcome is coded as 0 = not present, 1 = present. Sometimes binary outcomes are thought of as “success” or “failure.” Sometimes a binary outcome could be a characteristic. Some examples of binary outcomes are: graduating from high school, getting arrested, agreeing with a statement, getting a disease, and many more!

When you run a LPM, your interpretation of the regression results changes. Your coefficients now refers to the probability that an outcome occurs.

Probability of an event is always between 0 and 1, but a LPM can sometimes give us probabilities greater than 1.

The LPM is an alternative to logistic regression or probit regression. There are rarely big differences in the results between the three models.

This is the basic equation set up for a linear probability model:

\[P(Yi = 1|Xi) = \beta_0 \ + \ \beta_1X_{i,1} \ + \ \beta_2X_{i,2} + ... \beta_kX_{i,k} + \epsilon\]

- \(P(Yi = 1|Xi)\): The probability that the outcome is present (1 = present / 0 = not present)

- That probability is predicted as a linear function (meaning the effects of each coefficient are just added together to get the outcome probability).

- \(\beta_0\) - the intercept (or probability of all coefficients are 0).

- \(\beta_k\) - the probability associated with any covariate

- \(X_k\) - the value of the covariate

- \(\epsilon\) - the error (aka the difference between the predicted probability and the actual outcome [0/1])

- \(\beta_0\) - the intercept (or probability of all coefficients are 0).

Why should you care about this equation?

For each model we learn this quarter, I will break down how to understand the basic model equation. I do this for several reasons:

- Very practically, you will need to write out the equation for your model in the methods sections of your quantitative papers.

- It will help with your quantitative literacy when reading journal articles! I know I sometimes check out when I see an equation in an article that goes way over my head. This is a way to make yourself feel like a smarty pants.

- It can help you wrap your mind around what a model is actually doing. Any model that we run is predicting an outcome as an equation, like a recipe. One part this variable plus one part that variable should equal this outcome. As we switch from model to model, the equation that we are using to predict outcomes will change.

3.2.1 Assumptions of the model

The assumptions of a LPM are pretty much the same as a normal linear regression. This means you need to consider each of these assumptions when you run a linear probability model on a binary outcome in your data.

1) Violation of the linearity assumption

LPM knowingly violates the assumption that there is a linear relationship between

the outcome and the covariates. If you run a lowess line, it often looks s-shaped.

2) Violation of the homoskedasticity assumption

Errors in a linear probability model will by nature have systematic heteroskedasticity. You can account for this by automatically using robust standard errors.

3) Normally distributed errors

Errors follow a normal distribution. This assumption only matters if you have a very small sample size.

4) Errors are independent

There is no potential clustering within your data. Examples would be data collected from withing a set of schools, within a set of cities, from different years, and so on.

5) X is not invariant

The covariates that you choose can’t all be the same value! You want variation so you can test to see how the outcome changes when the x value changes.

6) X is independent of the error term

There are no glaring instances of confounding in your model. This assumption is about unmeasured or unobserved variables that you might reasonably expect would be associated with your covariates and outcome. You can never fully rule this violation out, but you should familiarize yourself with other studies in your area to know what major covariates you should include.

7) Errors have a mean of 0 (at every level of X)

This is an assumption we did not cover last quarter. It essentially looks at whether your model is consistently over-estimating or under-estimating the outcomes. If the mean is positive, your model is UNDER-estimating the outcome (more of the errors are positive). If the mean is negative, your model is OVER-estimating the outcome.

Why didn’t we cover this assumption last quarter? Good Question! By including an intercept or constant in our regression (\(\beta_0\)), we force the errors to have a mean of 0, so we don’t have to worry about it. You will almost never run a regression without an intercept.

3.2.2 Pros and cons of the model

Pros:

- The LPM is simple to run if you already know how to run a linear regression.

- It is easy to interpret as a change in the probability of the outcome ocurring.

Cons:

- It violates two of the main assumptions of normal linear regression.

- You’ll get predict probabilities that can’t exist (i.e., they are above 1 or below 0).

3.3 Running a LPM in R

Now we will walk through running and interpreting a linear regression in R from start to finish. This will be relatively straightforward if you know how to run a linear regression in R, because we will be following basically the same steps. These steps assume that you have already:

- Cleaned your data.

- Run descriptive statistics to get to know your data in and out.

- Transformed variables that need to be transformed (logged, squared, etc.)

We will be running a LPM to see what personal characteristics are associated with surviving the Titanic shipwreck.

Step 1: Plot your outcome and key independent variable

Before you run your regression. Take a look at your outcome and key independent variables in a scatter plot with a lowess line to see if there may be a relationship between your variables. A flat line would indicate no relationship.

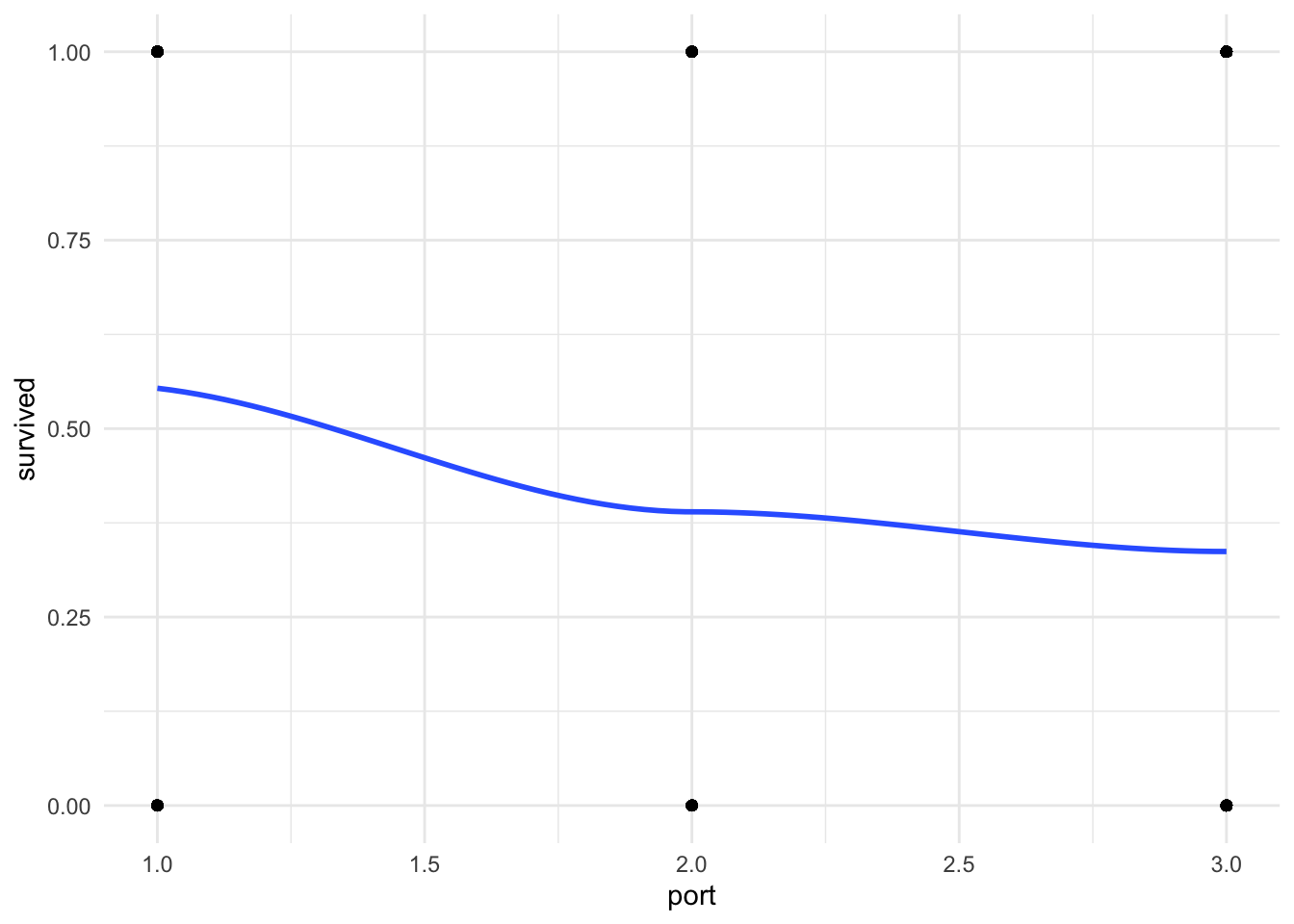

ggplot(df_titanic %>% drop_na(port), aes(x = port, y = survived)) +

geom_point() + # create a scatterplot layer

geom_smooth(method = "loess", se = FALSE) + # add a smoothing line layer

theme_minimal()

You can see that there is a classic curve to this relationship we will be seeing more as we learn other binary regression models this quarter.

Step 2: Run your model

First, in R we have to make sure that all the categorical variables we want are formatted as factors. Here I am just applying labels to the raw values in the dataset.

df_titanic <- df_titanic %>%

mutate(port = factor(port, labels = c("Cherbourg", "Queenstown", "Southhampton")),

female = factor(female, labels = c("Male", "Female")),

pclass = factor(pclass, labels = c("1st (Upper)", "2nd (Middle)", "3rd (Lower)")))Second, you want to run a basic model with your outcome and key independent variable.

NOTE: We will be using robust standard errors because we know we will be violating the heteroskedasticity assumption.

# Run the basic model

basic_fit <- lm(survived ~ port, data = df_titanic)

# Show results with robust standard errors

basic_fit_robust <- coeftest(basic_fit, vcov = vcovHC(basic_fit, type="HC1"))

basic_fit_robust

t test of coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.553571 0.038419 14.4089 < 2.2e-16 ***

portQueenstown -0.163961 0.067638 -2.4241 0.01555 *

portSouthhampton -0.216615 0.042709 -5.0718 4.799e-07 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Then you want to run your model with additional covariates.

# Run the full model

full_fit <- lm(survived ~ port + female + log_fare, data = df_titanic)

# Show results with robust standard errors

full_fit_robust <- coeftest(full_fit, vcov = vcovHC(full_fit, type="HC1"))

full_fit_robust

t test of coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.022424 0.058042 0.3863 0.69934

portQueenstown -0.086960 0.053972 -1.6112 0.10749

portSouthhampton -0.102385 0.036718 -2.7884 0.00541 **

femaleFemale 0.496048 0.032209 15.4008 < 2.2e-16 ***

log_fare 0.090448 0.014649 6.1744 1.011e-09 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Step 3: Interpret your model

There are three ways we can interpret and communicate the results of our model:

- Interpretation of the coefficients

- Plot the regression line

- Plot our predicted probabilities (aka margins)

Interpret coefficients

The reason people like the LPM is that the coefficients are so straightforward to interpret. A one unit change in X is associated with a change in the probability that Y occurs. Remember our outcome is binary: yes the outcome occurs or no it does not. It often makes the most sense to interpret that probability change as a change in the percent probability of our outcome occurring, so we multiply the coefficient by 100.

To do basic math with our coefficients in helps to know how r labels them. You can find this out by pulling up your coefficient legend. Each coefficient can be referred to by it’s order in this list.

full_fit$coefficients (Intercept) portQueenstown portSouthhampton femaleFemale

0.02242372 -0.08696024 -0.10238453 0.49604775

log_fare

0.09044800 Let’s interpret each coefficient…

Port - Queenstown: A person boarding in Queenstown had a _____ % descreased probability of surviving compared to someone boarding in Cherbourg. (not statistically significant)

full_fit$coefficients[2] * 100portQueenstown

-8.696024 Port - Southhampton: A person boarding in Southhampton had a _____ % decreased probability of surviving compared to someone boarding in Cherbourg.

full_fit$coefficients[3] * 100portSouthhampton

-10.23845 Female: A female passenger had a ______ % increased probability of surviving compared to a male passenger.

full_fit$coefficients[4] * 100femaleFemale

49.60477 Log_fare: A 1% increase in ticket fare is associated with a ______ % increased probability of surviving. NOTE - the variable for the cost of the ticket (aka ticket fare) was logged. When we log an x variable we can interpret it as a 1% change in X rather than a 1 unit change in X.

full_fit$coefficients[5] * 100log_fare

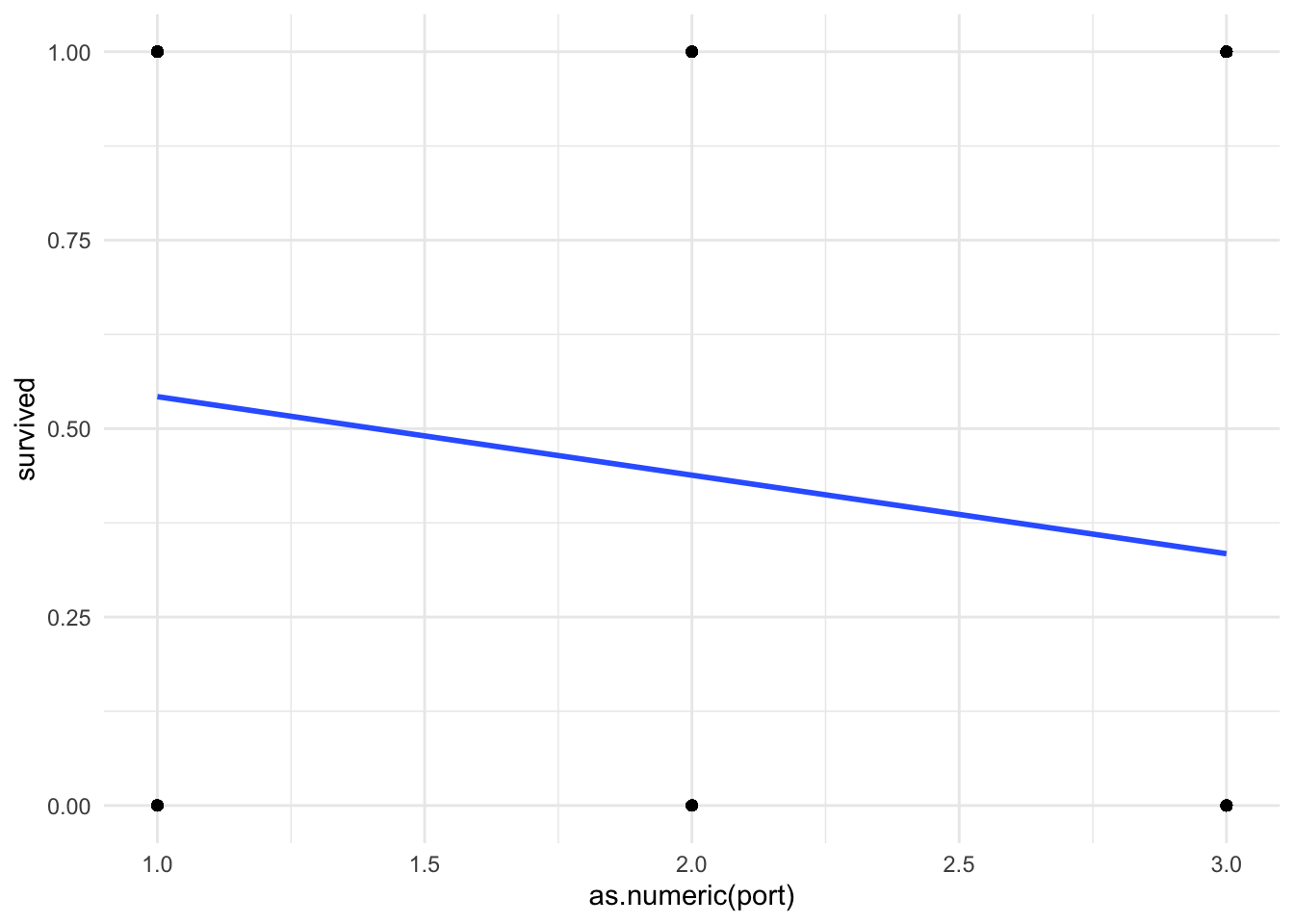

9.0448 Plot your regression line

Because we are forcing a linear regression line onto a nonlinear relationship, I think it useful to do a quick visual of your line of best fit.

# Note that I need to force "port" back to numeric to get this plot to work

ggplot(df_titanic %>% drop_na(port), aes(x = as.numeric(port), y = survived)) +

geom_point() + # create a scatterplot layer

geom_smooth(method = "lm", se = FALSE) + # add a lfit layer

theme_minimal()

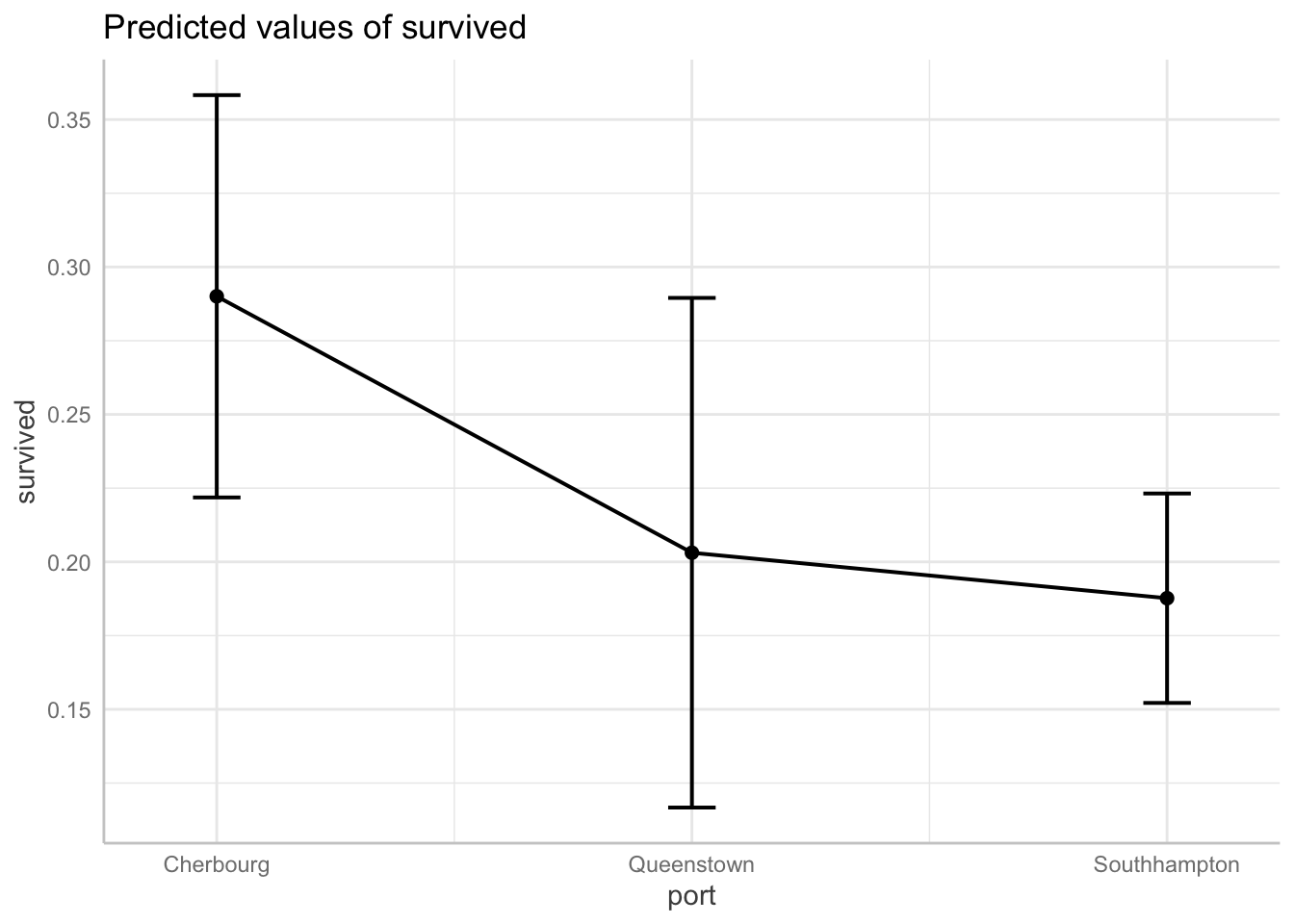

Plot your predicted probabilities in a margins plot

We will have a lab dedicated to reviewing margins plots, but for now here are a couple simple margins plots for this regression. With a normal linear regression, these plots display the predicted values of our outcome across different values of a covariate (or covariates). With a LPM the plots display the predicted probability of our outcome occurring across different values of a covariate (or covariates) we specify.

Predicted probability of surviving by boarding port

# Note you have to specify the robust standard errors

ggpredict(full_fit, terms = "port",

vcov.fun = "vcovHC", vcov.type = "HC0") %>%

plot(connect.lines = TRUE)

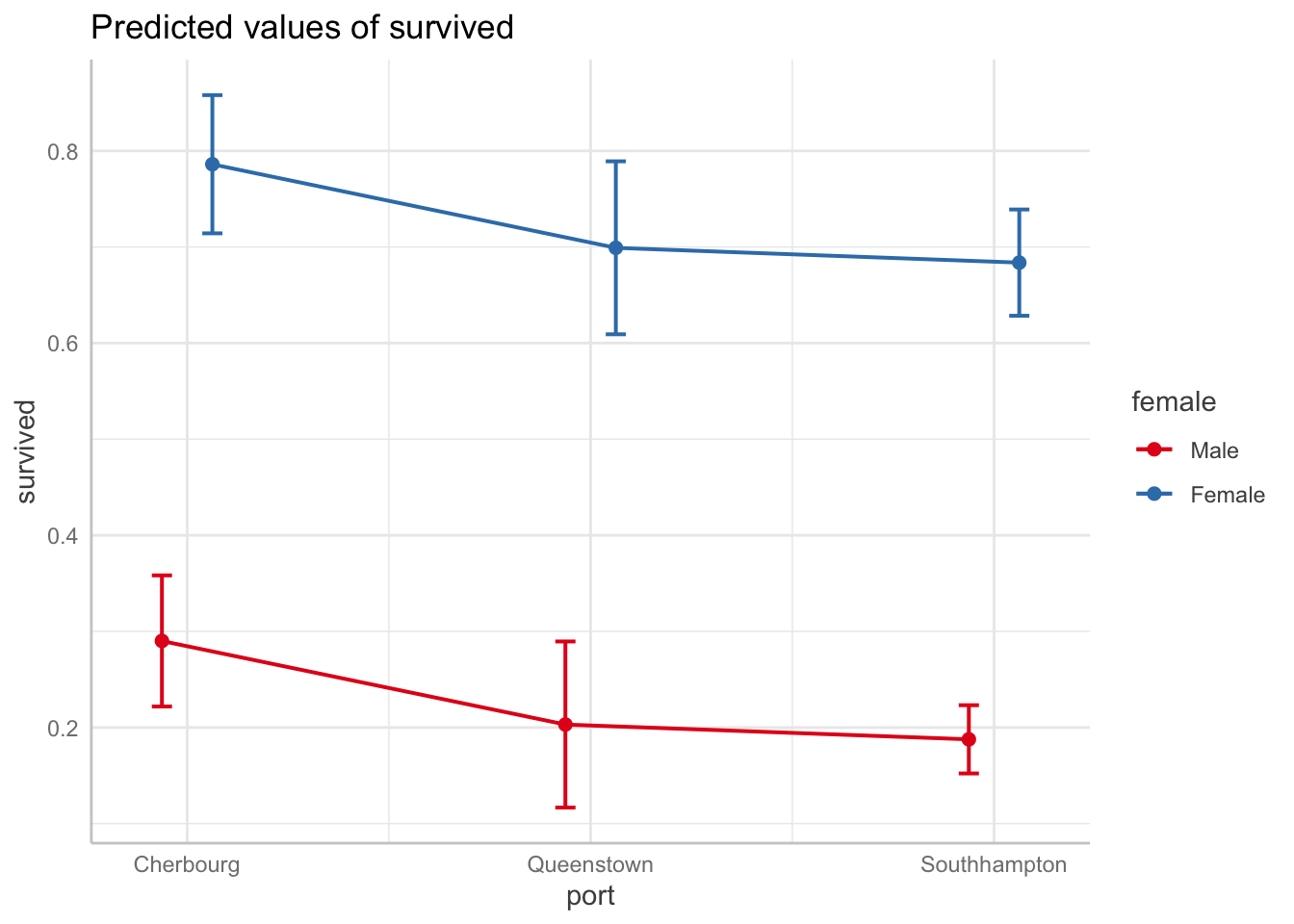

Predicted probability of surviving by boarding port by gender

# Note you have to specify the robust standard errors

ggpredict(full_fit, terms = c("port", "female"),

vcov.fun = "vcovHC", vcov.type = "HC0") %>%

plot(connect.lines = TRUE)

Step 4: Check your assumptions

1) Linearity assumption – violated!

But we don’t care so let’s move on.

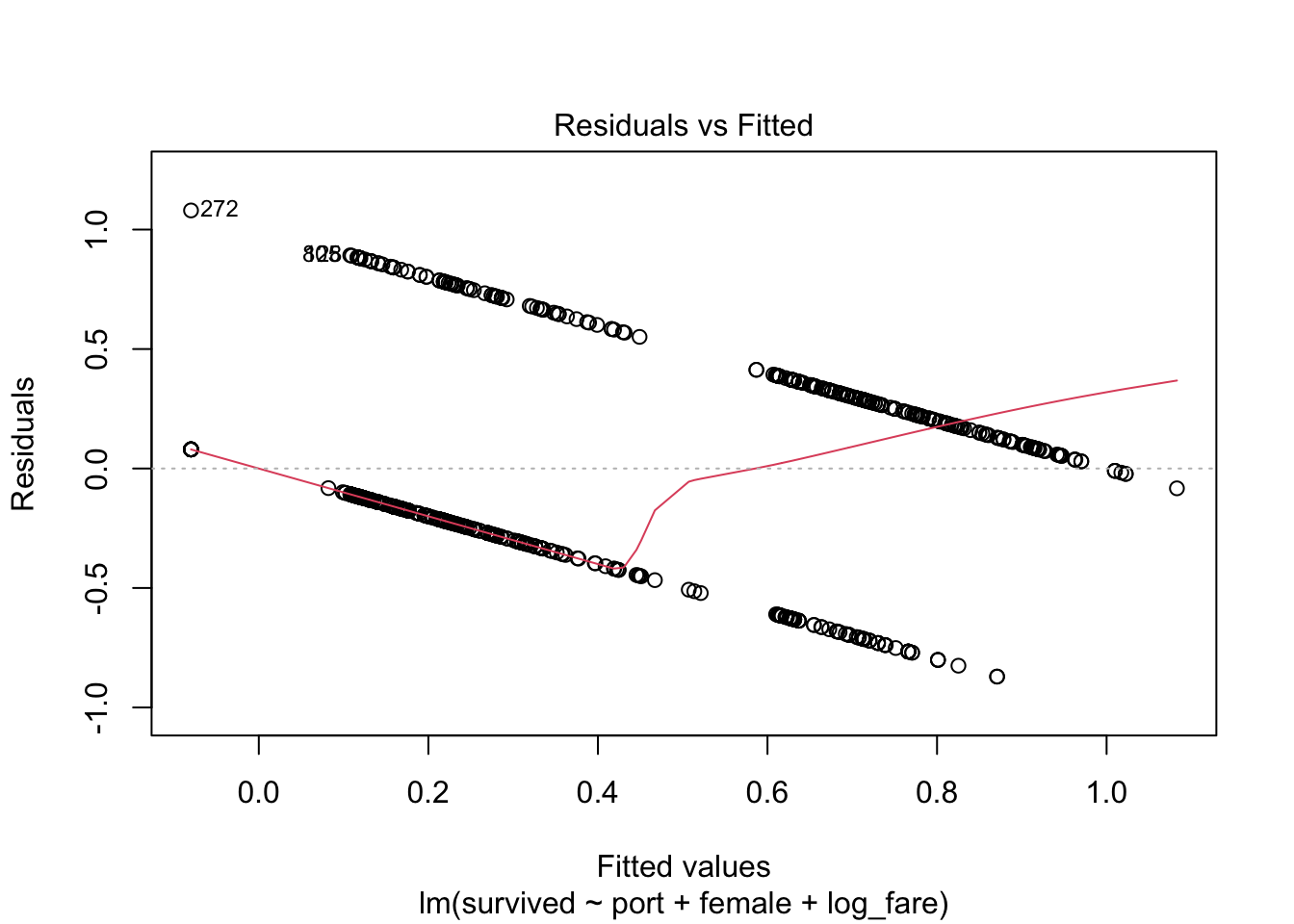

2) Check out the violation of the heteroskedasticity assumption

We know we’re violating this assumption, but because you’ll be using this plot moving forward, let’s check out that violation with a…

Residuals vs Fitted Values Plot

plot(full_fit, which = 1)

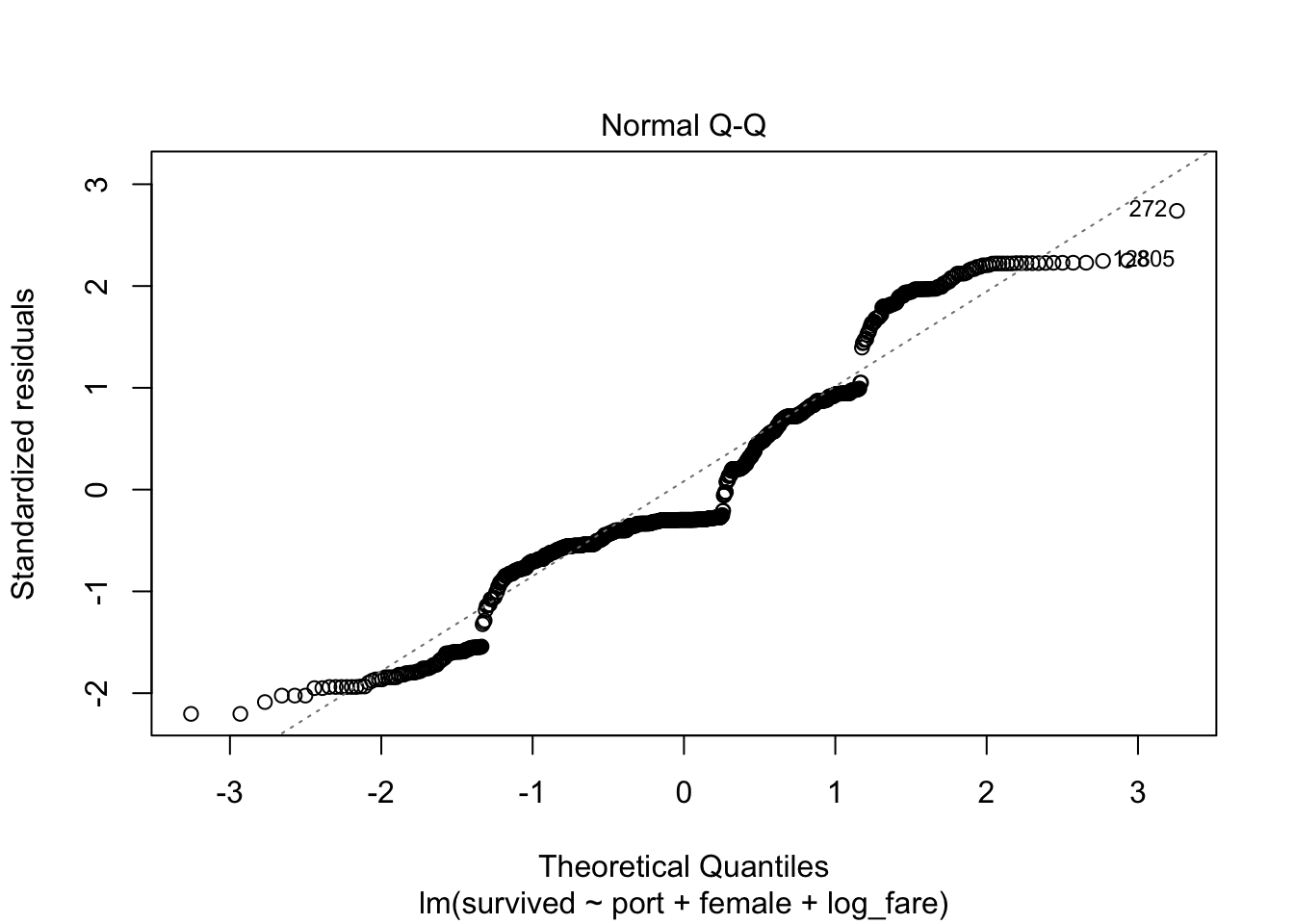

3) Normally distributed errors

In Stata I have the class produce a histogram, but it is so much easier in R to rely on the Q-Q plot for this assumption because there are built-in plots. Remember, if the errors are normal they will stick close to the plotted line.

plot(full_fit, which=2)

Also not normal!

We violate this assumption, but our sample size is high enough that we are okay.

5) X is not invariant

Logic check - yup, our x variables take on more than one value!

6) X is independent of the error term

Are there any confounding variables? For sure. Do we think they are a big enough detail that they would change our results.

We did not include passenger class, which if you know the story of the titanic had a huge effect on who lived and who died. This leads you to our application question…

3.4 Apply this model on your own

At the end of the .do file for this lab, run a LPM adding in passenger class (pclass) as an additional covariate.

- Interpret the

pclasscoefficient. - How does including this variable affect our other coefficients?

- (If time) Try to produce a margins plot for this variable using

ggpredict().

Answers to these questions can be found in the “w.answers” version of the script file.