20 Basic simulations

The Sims is a successful series of a real-life simulation games. In this game, players can create a virtual character (called a “Sim”), equip it with personality traits and stuff like clothes and furniture, meet friends and foes, and then spend days or weeks by observing and nurturing their artificial alter egos to lead their lives in a simulated environment (Figure 20.1).

Figure 20.1: The Sims Social is a real-life simulation game in which people customize and nurture artificial alter egos and observe and influence their behavior. (Image from EA: The Sims.)

This chapter on Basic simulations is the first chapter in the more general part on Modeling. As our goal is to learn how R can be used for representing and solving problems, we will first concentrate on basic and straightforward simulations that either enumerate cases (in Section 20.2) or sample random values from some distribution (in Section 20.3). While the problems considered here are simple enough that they could still be solved analytically, implementing them familiarizes us with many interesting aspects of computer simulations and provides us with an excellent excuse to solve some entertaining puzzles.

The next Chapter 21 on Dynamic simulations will go beyond these elementary cases to include more complex simulations in which future states and outcomes are harder to anticipate.

Preparation

Recommended background readings for this chapter include:

- Chapters 22: Introduction and 23: Model basics of the r4ds book (Wickham & Grolemund, 2017).

Preflections

Remember that the chapters on simulations are enclosed in a book part that is entitled Modeling. This raises some interesting questions:

What is a simulation?

What are the goals of creating a simulation?

-

What are the differences of simulation to a model? Specifically,

- Are all simulations models?

- Are all models simulations?

20.1 Introduction

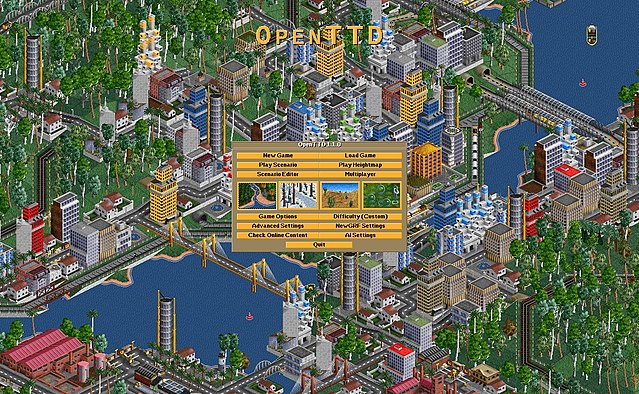

Economic simulation games (see Wikipedia) promise to practice skills in a playful setting. Importantly, the conditions and actions of players are processed by rules to yield plausible consequences and outcomes. To be both believable and instructive, such games need to contain a modicum of truth (Figure 20.2).

Figure 20.2: OpenTTD is an open source simulation game. (Image from OpenTTD.org.)

Clarify some key terminology. Some concepts that are relevant in the present contexts are:

- the distinction between simulations and modeling,

- situations under risk vs. under uncertainty,

- solving problems by description vs. by experience.

20.1.1 What are simulations?

According to Wikipedia, a computer simulation is the process of running a model. Thus, it does not make sense to “build a simulation”. Instead, we can “build a model”, and then either “run the model” or “run a simulation”.

Despite this agreement, people also call a collection of coded assumptions and rules a simulation, rendering the boundaries between models and simulations blurry again. For our purposes, simulations simply are particular types of models of a situation. Thus, all simulations are models, but there are models (e.g., a mathematical formula) that we would not want to call a simulation.

A further difference between models and simulations is their relation to data. When starting with data, we use models to analyze it. When doing statistics, for instance, we are actually modeling our data (or comparing data to theoretical assumptions that can be described as models). By contrast, simulations often generate data.

Conceptually, simulations create concrete observations (i.e., data/cases) from a more abstract description of a problem, task, or situation. Note that, in data analysis or statistical modeling, we typically do the reverse: We start with data (concrete observations) and create a more abstract description of the data.

Creating a simulation typically requires that we represent both a situation and the actions or rules specified in a problem description. Conceptually, a representation involves (a) mapping the essential elements of a problem to corresponding elements in a model, and (b) specifying the processes that can operate upon both sets of elements. Once these representational constructs are in place, we can run the simulation repeatedly to observe what happens.

If the description contains probabilistic parameters, the simulation can include random elements. In many contexts, the term “simulation” is used when models contain random elements. For instance, in statistical simulations, we often start by drawing random values from some well-defined distribution and then compute the result of some algorithm based on these values.

Goals of simulations

Creating simulations typically serves two inter-related purposes:

Explicate premises and processes: If we cannot solve a problem analytically (e.g., mathematically), we can simulate it to inspect its solution. By implementing both the premises and a mechanism, they explicate the assumptions and consequences of a theory. Often we may notice details or hidden premises that remained implicit or unspecified in the problem formulation.

Generate possible results (outputs): This method can be used to explore the consequences of a set of rules or regularities. Such simulations are instantiations that show what happens if certain starting conditions are met and some mechanism is executed. Changing premises or parts of the process shows how this affects our results.

Mental vs. silicon simulations

The idea of using a model or simulation for predicting outcomes is not new — and has often been viewed as an analogy for human thinking, reasoning, and problem solving (see e.g., Craik, 1943; Gentner & Stevens, 1983; Johnson-Laird, 1983). However, rather than thinking things through in our minds, we now also have the computing capacities and tools for quickly building quite powerful computer simulations (e.g., in simulating entire ecosystems or climate phenomena). But out-sourcing simulations from our brains to computing machines does not free us from thinking. The bigger and more complex our simulations get, the more important are solid programming skills and a sound reflection of their pitfalls and limitations.

Crutches vs. useful tools

Note that simulations are often portrayed as a poor alternative to analytic solutions. When we lack the (formal, e.g., mathematical) means to solve something in an analytic fashion, we can use simulations as a crutch that generates results without gaining a clear conceptual and theoretical understanding of the process. However, simulations can also be used in a sensible and responsible fashion (e.g., by systematically varying inputs) and explore systems or theories that are (currently?) too complex to be tackled by analytic approaches (e.g., interactions between neurons in a brain, or sets of physical laws jointly governing weather or climate phenomena). As the merits of any method generally depends on its uses and the availability of alternative approaches, we should generally welcome simulations as one possible way for solving problems.

20.1.2 Static simulations

This chapter on basic simulations focuses on static situations: The environment and the range of moves or options is fixed by the problem description. All relevant information (e.g., the rules of the game or scope of actions) is known a priori.

Such situations can be well-defined, but still contain probabilisitc elements. The psychology of judgment and decision making refers to such situations as risky choice or decision making under risk. A typical situation under risk is repeatedly throwing a random device (e.g., a coin or dice). Here, the set of all possible outcomes and their probabilities can be defined (e.g., by description) or measured (e.g., by experiencing a series of events). Although any particular outcome cannot be know in advance, we can still make observations and predictions regarding the long-term development of outcomes. The mathematics of such problems are governed by the axioms and calculus of probability theory.

By contrast, situations are considered to be under uncertainty when either the set of possible outcomes or their probabilities cannot be known in advance. Most real-life situations are actually under uncertainty, rather than under risk [see Gigerenzer (2014); or Neth & Gigerenzer (2015); for examples]. The boundaries between situations under risk and those under uncertainty are often blurred, as they partly depend on our ability of capturing, expressing, and defining the relevant elements. When considering more complex phenomena (e.g., systems that contain interactions between one or more agents and a reactive and responsive environment), all aspects of a situation may technically still be under risk, yet their dynamic interplay can get too messy and intransparent to provide an analytic solution to the problem. Thus, while analytic solutions may always be desirable, creating simulations may be our only realistic options for keeping track of such environments, their agent(s), or both. (We will examine such situations in Chapter 21 on Dynamic simulations.)

However, even static situations in which the range of optios and probabilities is relatively small and well-defined can often get complex and complicated. Experts in probability may be able to solve them analytically, but the rest of us can tackle them by running a simulation.

For simulating risky situations, we must address the following general issues:

- Creating data structures for representing environments and events

- Keeping track of environmental states, actions, and outcomes

- Managing sampling processes (i.e., randomness and iterations)

20.1.3 Overview

Conceptually, simulations create concrete observations (i.e., cases or data) from a more abstract description. If this description contains probabilistic parameters, the simulation can include random elements.

In the following, we introduce two basic types of simulations:

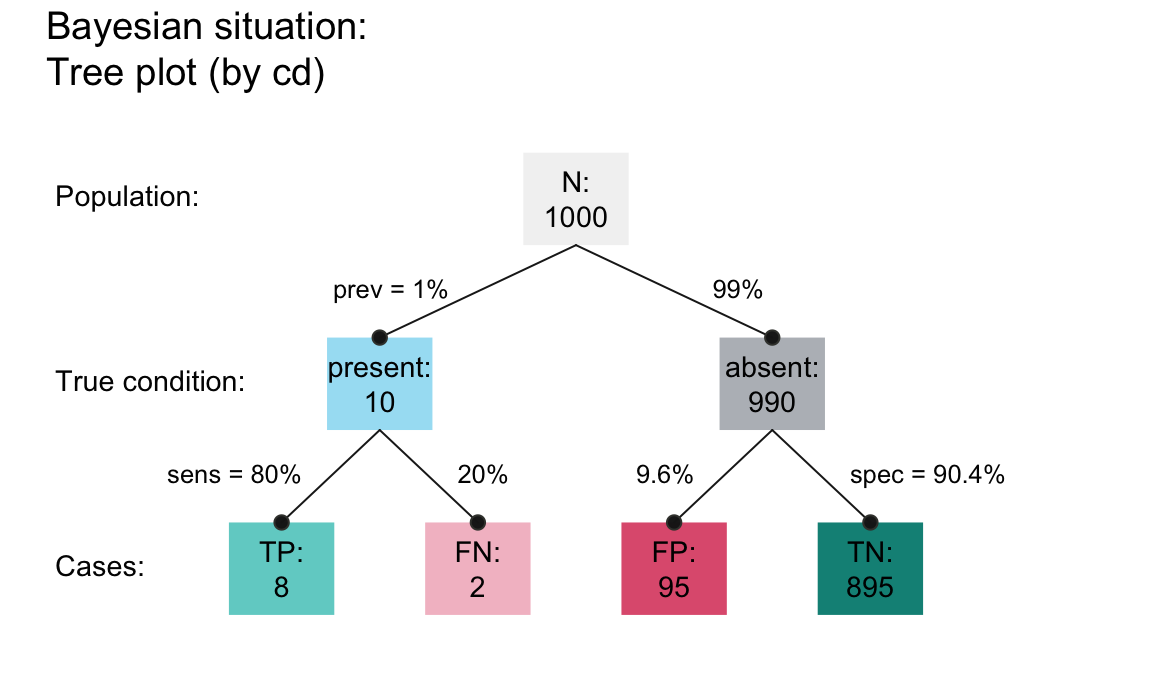

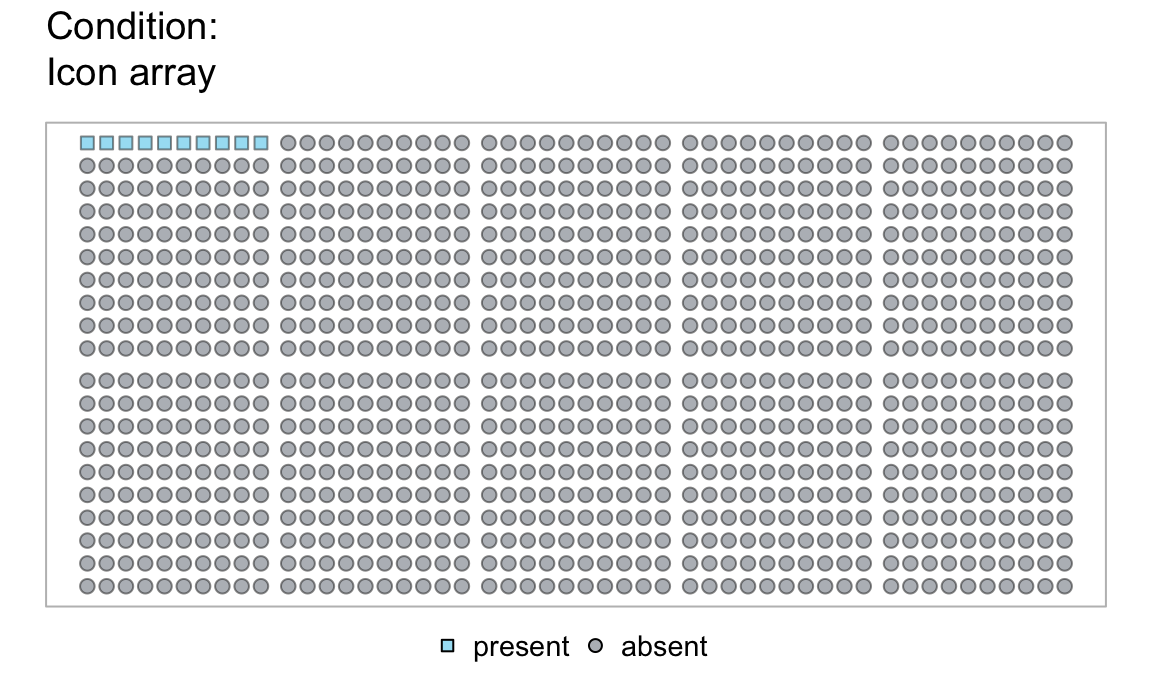

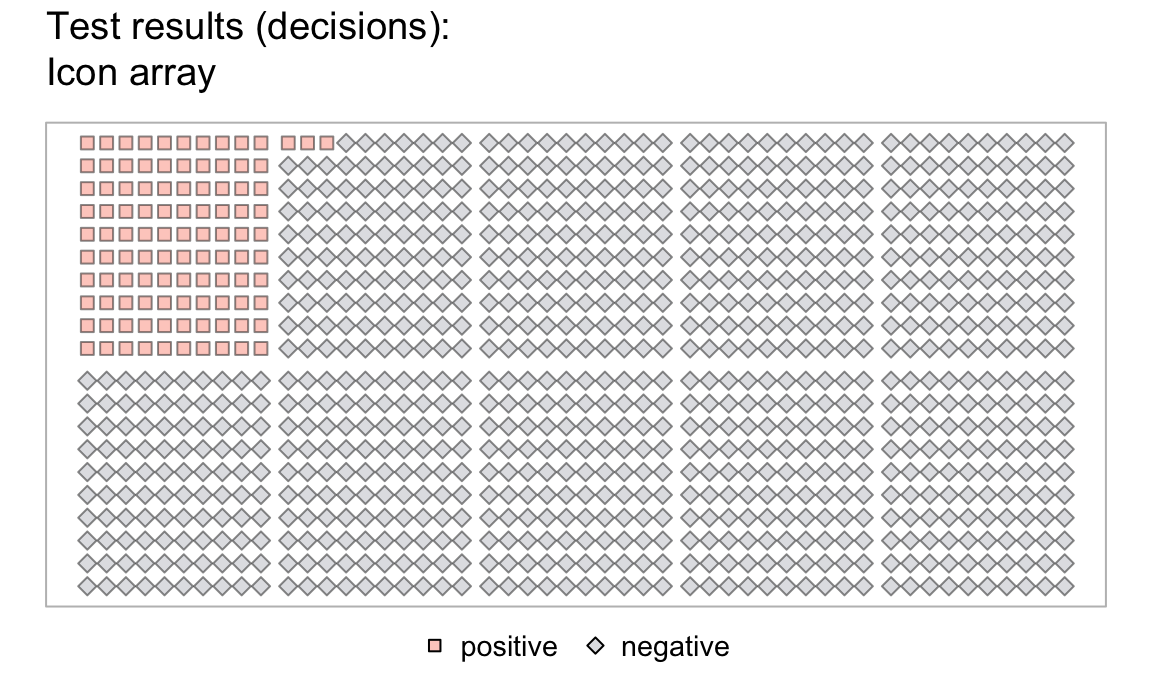

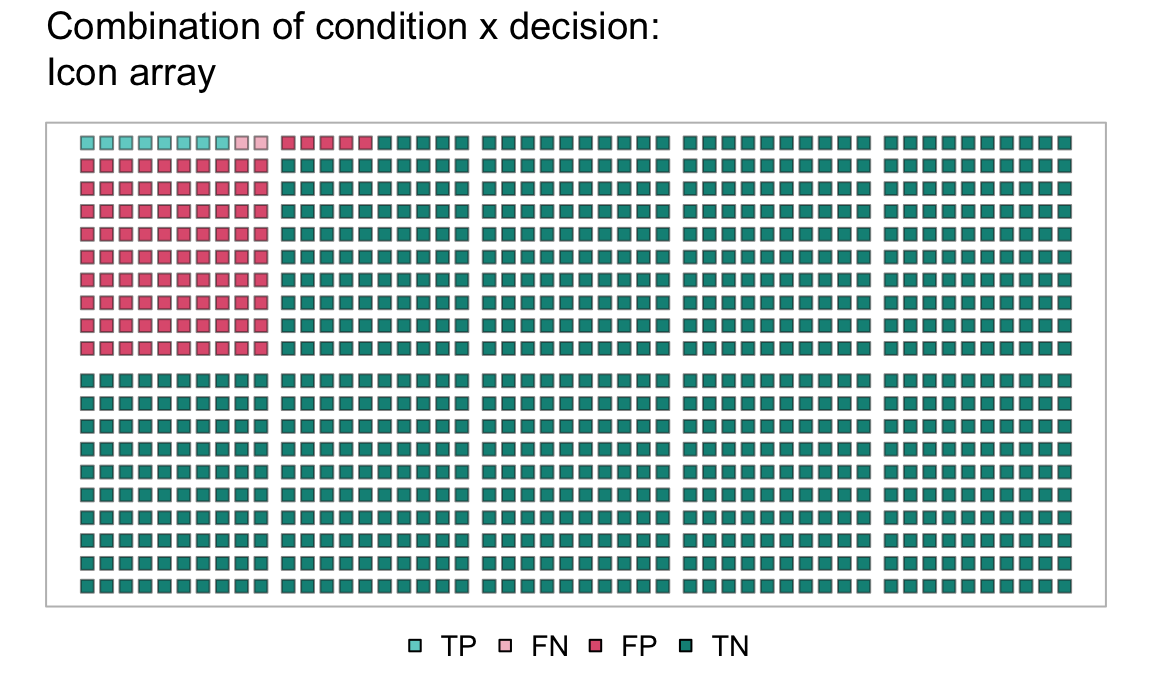

Enumerating cases essentially explicates information that is contained in a problem description. This most basic type of simulation will be used to illustrate so-called “Bayesian situations”, like the notorious mammography problem (Eddy, 1982, see Section 20.2).

Sampling cases employs controlled randomness for solving probabilistic problems by repeated observations. This slightly more sophisticated type of simulation is use to illustrate the Monty Hall problem (Savant, 1990, see Section 20.3).

Conceptually, both types of simulations exploit the continuum between a probabilistic description and its frequentist interpretation as the cumulative outcomes of many repeated random events. As our examples and the exercises (in Section 20.5) will show, the details of the assumed premises and processes matter for the outcomes that we will find — and simulations are particularly helpful for explicating implicit premises and processes.

Problems considered

Examples of problems that can be “solved” by basic simulations include:

- Bayesian situations, like the mammography problem (Eddy, 1982) or cab problem (Kahneman & Tversky, 1972a)

- so-called cognitive illusions, like the engineer/lawyer problem or Linda problem; (e.g., Tversky & Kahneman, 1974)

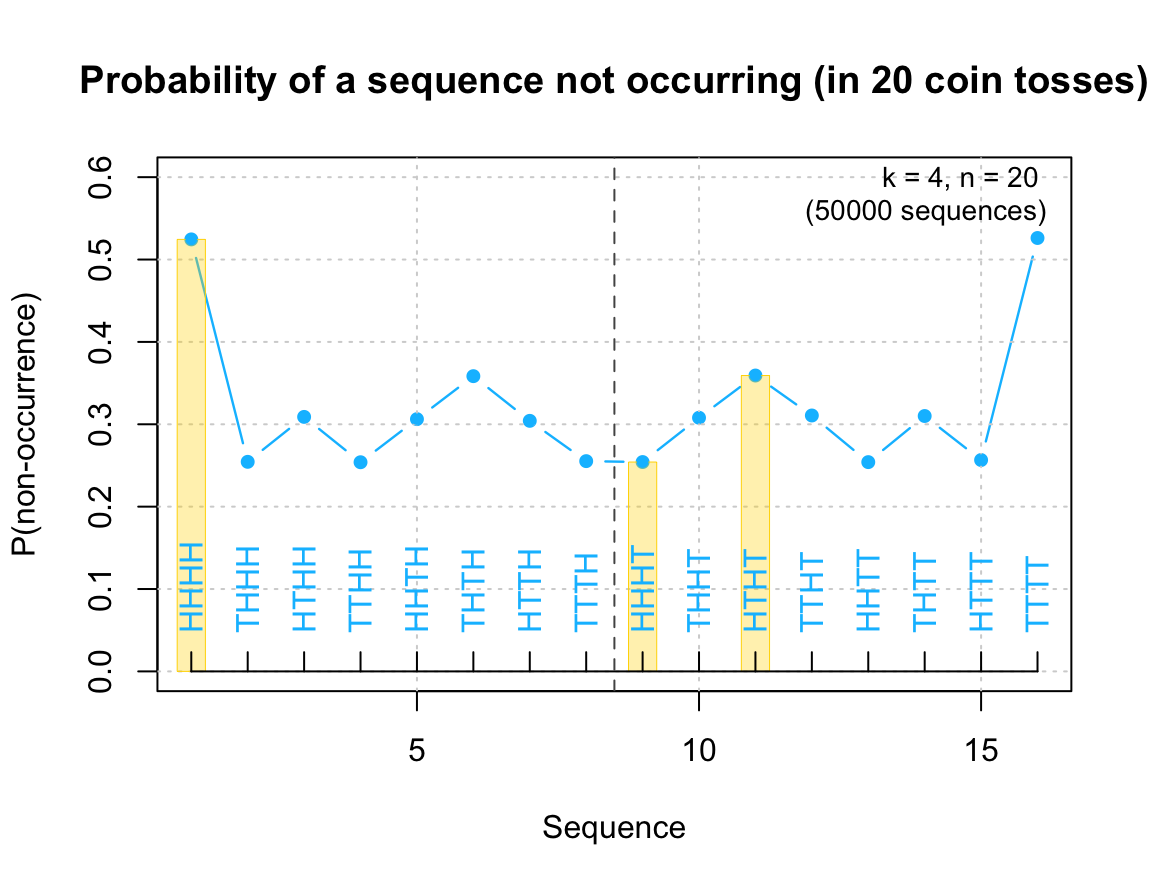

- notorious brain teasers, like the Monty Hall dilemma (Savant, 1990; Selvin, 1975) or the three prisoners problem (e.g., Bar-Hillel, 1980)

- other mathematical (Dudeney, 1917) or probability puzzles (Gardner, 1988; Mosteller, 1965), and

- alleged biases in the human perception of randomness (Hahn & Warren, 2009; Kahneman & Tversky, 1972b)

The prominence of this list shows that simulations can address a substantial proportion of the problems discussed in the psychology of human judgment and decision making.

General goals

By implementing these problems, we pursue some general goals that reach beyond the details of any particular problem. These goals include:

- Explicating a problem’s premises and structure (by implementing it)

- Solving the problem (by repeatedly simulating its assumed process)

- Evaluating the solution’s uncertainty or robustness (e.g., its variability)

Additional motivations for running simulations are that they can provide new insights into a problem and be a lot of fun — but that partly depends on your idea of fun, of course.

20.2 Enumerating cases

The simplest type of simulation merely explicates information that is fully contained in a problem description. This promotes insight by showing the consequences of premises.

20.2.1 Bayesian situations

For several decades, the psychology of reasoning and problem solving has been haunted by a class of problems. The drosophila task of this field is the so-called mammography problem (based on Eddy, 1982).

The mammography problem

The following version of the mammography problem stems from Gigerenzer & Hoffrage (1995) (using the standard probability format):

The probability of breast cancer is 1% for a woman at age forty who participates in routine screening.

If a woman has breast cancer, the probability is 80% that she will get a positive mammography.

If a woman does not have breast cancer, the probability is 9.6% that she will also get a positive mammography.

A woman in this age group had a positive mammography in a routine screening.

What is the probability that she actually has breast cancer?

Do not despair when you find this problem difficult. Only about 4% of naive people successfully solve this problem (McDowell & Jacobs, 2017).

20.2.2 Analysis

We should first identify precisely what is given and asked in this problem:

What is given?

Identifying the information provided by the problem:

Prevalence of the condition (cancer) \(C\): \(p(C)=.01\)

Sensitivity of the diagnostic test \(T\): \(p(T|C)=.80\)

False positive rate of the diagnostic test \(T\): \(p(T|¬C)=.096\)

What is asked?

Identifying the question: What would solve the problem?

- The conditional probability of the condition, given a positive test result. This is known as the test’s positive predictive value (PPV): \(p(C|T)=?\).

Bayesian solution

The problem provides an unconditional (\(p(C)\)) and two conditional probabilities (\(p(T|C)\) and \(p(T|¬C)\)) and asks for the inverse of one conditional probability (i.e., \(p(C|T)\)). Problems of this type are often framed as requiring ``Bayesian reasoning’’, as their mathematical solution can be derived by a formula known as Bayes’ theorem:

\[ p(C|T) = \frac{p(C) \cdot p(T|C) } {p(C) \cdot p(T|C)~+~p(\neg C) \cdot p(T|\neg C) } = \frac{.01 \cdot .80 } { .01 \cdot .80 ~+~ (1 - .01) \cdot .096 } \approx\ 7.8\%. \]

This looks (and is) pretty complicated — and it’s not surprising that hardly anyone solves the problem correctly.

Fortunately, there are much simpler ways of solving this and related problems. Perhaps the most well-known account of so-called “facilitation effects” is provided by Gigerenzer & Hoffrage (1995). The following simulation essentially implements a version of the problem that expresses the probabilities provided by the problem in terms of natural frequencies for a population of \(N\) individuals. This will allow us to replace complex probabilistic calculations by simpler enumerations of cases.

20.2.3 Solution by enumeration

A first approach assumes a population of N individuals and applies the given probabilities to them.

We can then inspect the population to derive the desired result.

All fixed, no sampling:

# Parameters:

N <- 1000 # population size

prev <- .01 # condition prevalence

sens <- .80 # sensitivity of the test

fart <- .096 # false alarm rate of the test (i.e., 1 - specificity)Prepare data structures: Two vectors of length N:

Using the information given to compute the expected values (i.e., the number of corresponding individuals if those probabilities are perfectly accurate):

# Compute expected values:

n_cond <- round(prev * N, 0)

n_cond_test <- round(sens * n_cond, 0)

n_false_pos <- round(fart * (N - n_cond), 0)Note that we needed to make two non-trivial decisions in computing the expected values:

- Level of accuracy: Rounding to nearest integers

- Priority: Using value of

n_condin other computations ensures consistency (but also dependencies)

Using these numbers to categorize a corresponding number of elements of the cond and test vectors:

# Applying numbers to population (by subsetting):

# (a) condition:

cond[1:N] <- "healthy"

if (n_cond >= 1){ cond[1:n_cond] <- "cancer" }

cond <- factor(cond, levels = c("cancer", "healthy"))

# (b) test by condition == "cancer":

test[cond == "cancer"] <- "negative"

if (n_cond_test >= 1) {test[cond == "cancer"][1:n_cond_test] <- "positive"}

# (c) test by condition == "healthy":

test[cond == "healthy"] <- "negative"

if (n_false_pos >= 1) {test[cond == "healthy"][1:n_false_pos] <- "positive"}

test <- factor(test, levels = c("positive", "negative"))Analogies

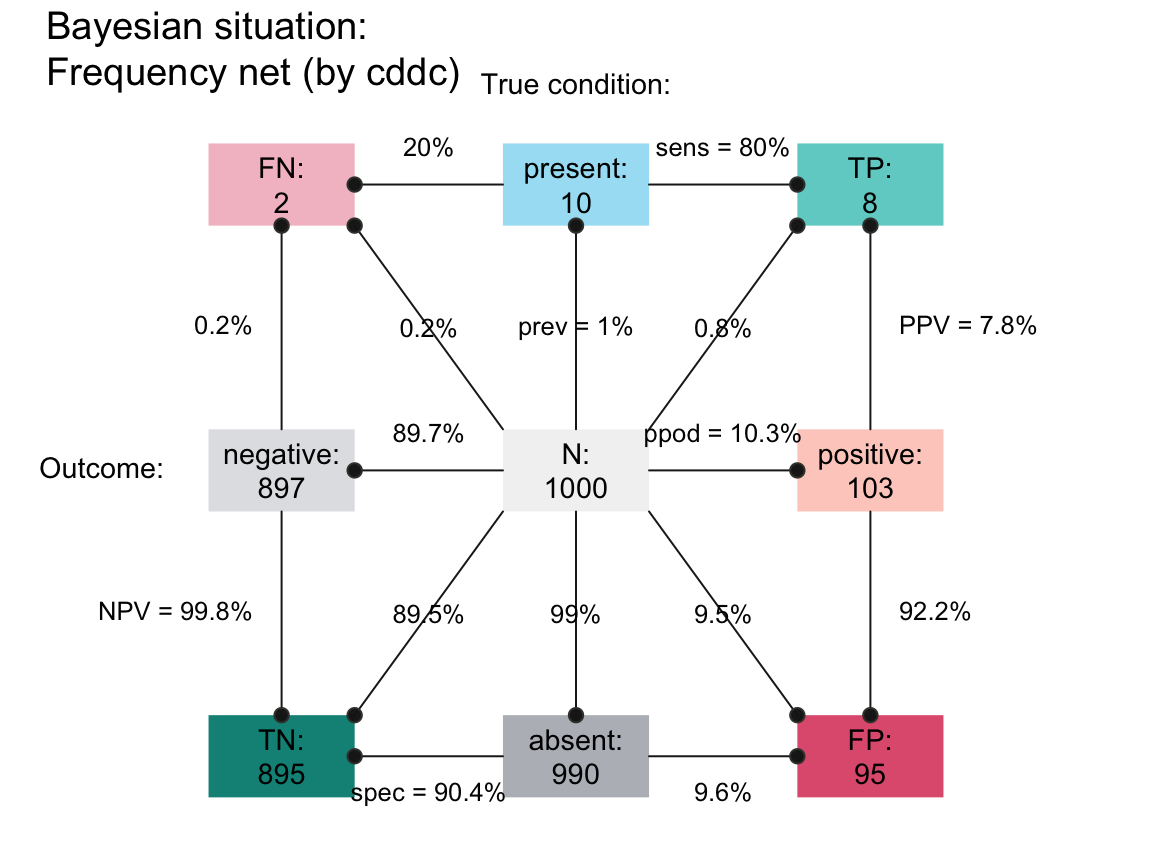

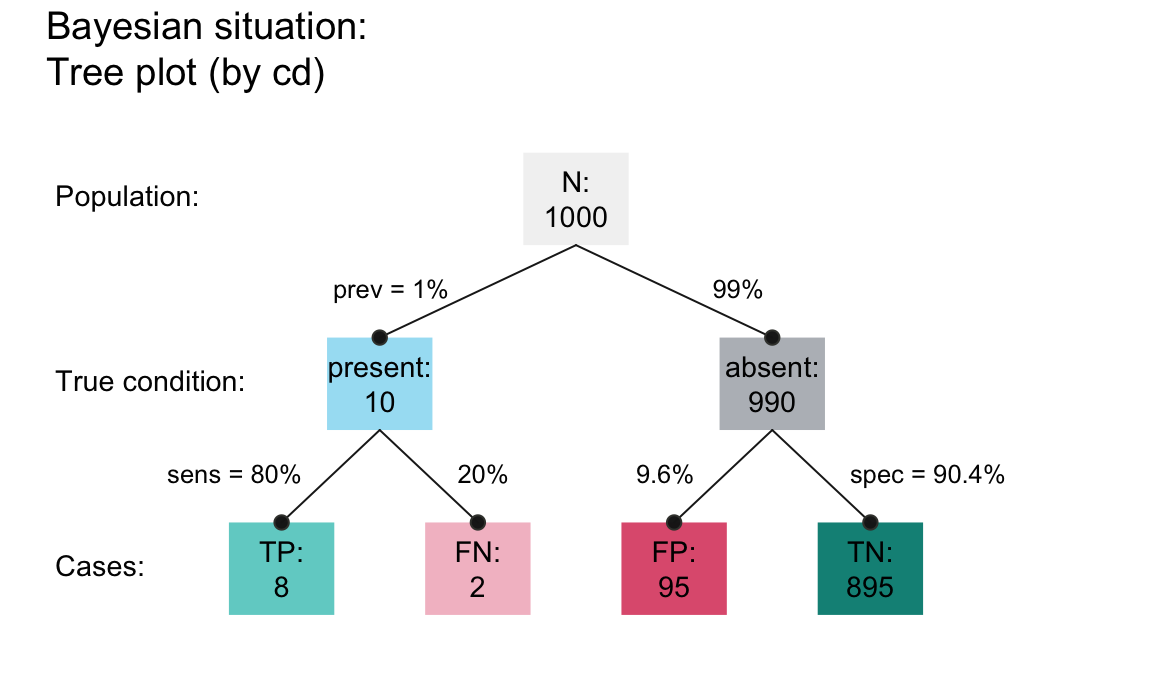

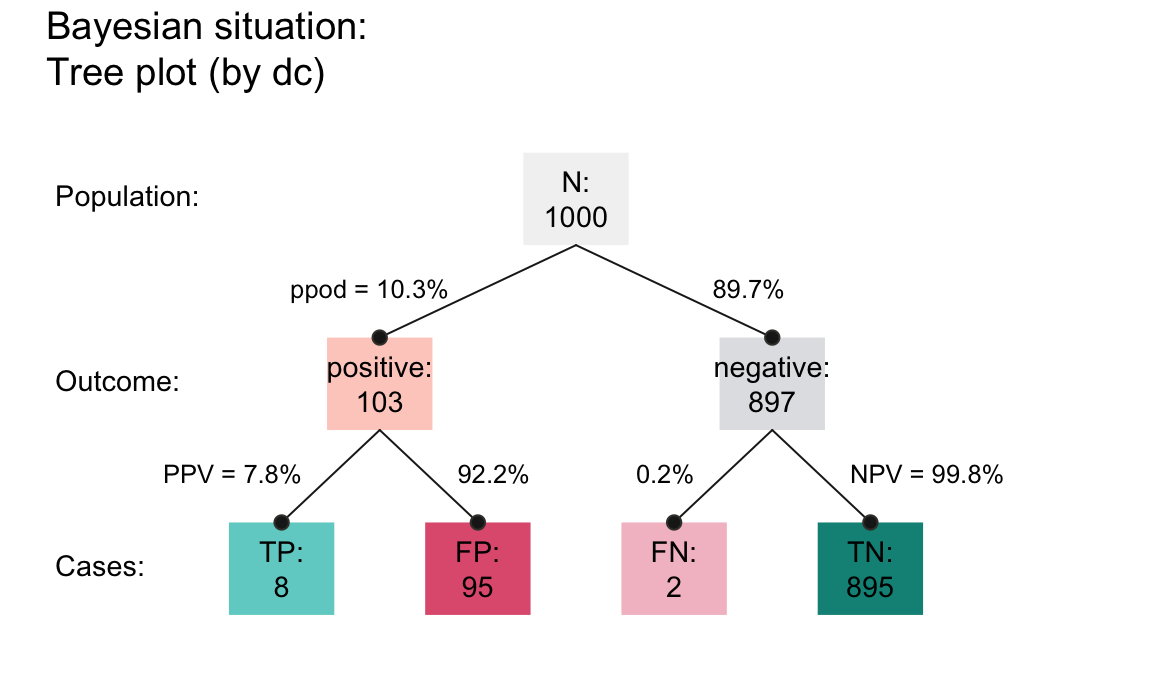

The following visualizations use the riskyr package (Neth et al., 2022):

- Drawing a tree of natural frequencies:

Visualizing the individual items of the population:

- Coloring the

Nsquares of an icon array in three steps:

Our result are two vectors (cond and test) with binary categories, which now can be inspected.

Conduct some checks:

# Checks: Are the categories exhaustive?

sum(cond == "cancer") + sum(cond == "healthy") == N

#> [1] TRUE

sum(test == "positive") + sum(test == "negative") == N

#> [1] TRUE

# Crosstabulation:

table(test, cond)

#> cond

#> test cancer healthy

#> positive 8 95

#> negative 2 895Answer by inspecting table (or the combination of vectors):

sum(test == "positive" & cond == "cancer") # frequency of target individuals

#> [1] 8

sum(test == "positive" & cond == "cancer") / sum(test == "positive") # desired conditional probability

#> [1] 0.0776699Note that this solution used the probabilistic information, but required no random or statistical processes.

Instead, we assumed a large population of N individuals and used the probabilities to enumerate the corresponding number (or proportions) of individuals in subgroups of the population.

The resulting proportion of individuals who both test positive and suffer from cancer out of those with a positive test result matches our analytic solution of \(p(C|T) = 7.8\%\) from above.

An alternative way of solving this problem could take probability more seriously by adding random sampling. We will leave this to Exercise 20.5.6, but use a different problem to illustrate sampling-based simulations (in Section 20.3).

20.2.4 Visualizing Bayesian situations

The following visualizations further illustrate the solutions for the Bayesian situation of the mammography problem that was derived in this section:

- A frequency net (Binder et al., 2020) provides an overview of the subsets of frequencies (as nodes) and the probabilities (as edges between nodes):

- The same information can be expressed in two distinct frequency tree diagrams:

The visualizations shown here use the riskyr package (Neth et al., 2022). See Neth et al. (2021) for the theoretical background of such problems.

20.3 Sampling cases

Goal: Taking probabilities more seriously by actually using the probabilities provided in a process of random sampling. This adds complexity (e.g., different runs yield different outcomes) but may also increase the realism our our simulation (as it’s unlikely to obtain perfectly stable results in reality).

Rather than calculate the expected values, we use the probabilities stated in the problem to sample from the appropriate population.

Two important aspects of managing randomness:

- Use

set.seed()for reproducible randomness. - Assess the robustness of results based on repetitions.

20.3.1 The Monty Hall problem

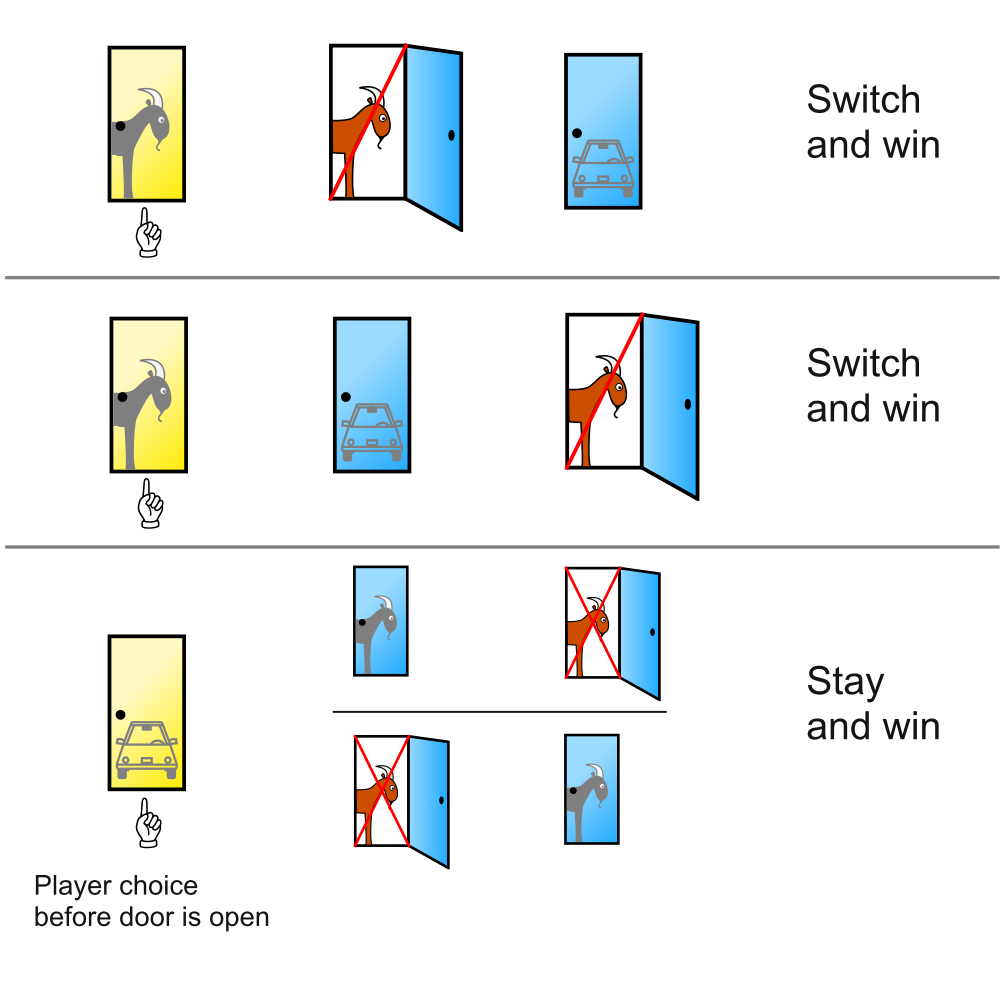

A notorious brain teaser problem is based on the US-American TV show Let’s make a deal (see Selvin, 1975). The show involves that a lucky player faces a choice between three doors. It is known that one door hides the grand prize of a car (i.e., win), whereas the other two hide goats (i.e., losses). After choosing a closed door (e.g., Door 1), the show’s host (named Monty Hall) intervenes by opening one of the unchosen doors to reveal a goat. He then offers the player the option to switch to the other closed door. Should the player switch to the other door? The player’s situation is illustrated by Figure 20.3.

Figure 20.3: The Monty Hall problem from the player’s perspective. (Source: Illustration from Wikipedia: Monty Hall problem.)

The problem

The following version of the dilemma was famously discussed by Savant (1990):

Suppose you’re on a game show, and you’re given the choice of three doors:

Behind one door is a car; behind the others, goats.

You pick a door, say No. 1, and the host, who knows what’s behind the doors,

opens another door, say No. 3, which has a goat.

He then says to you, “Do you want to pick door No. 2?”

Is it to your advantage to switch your choice?

Note that the correct answer to the question asked is either “yes” or “no”. Answering “yes” implies that switching doors is generally better than staying with the initial choice. By contrast, answering “no” implies that staying is generally at least not worse than switching doors.

20.3.2 Analysis

This problem and its variants have been widely debated — and even revealing its solution is often met with disbelief and likely to spark controversy.

Most people intuitively assume that — given Monty Hall’s intervention — the player faces a 50:50 chance of winning and it therefore makes no difference whether she sticks with her initial choice or switches to the other door. (Note that this does not yet justify why the majority of people prefer to stick with their initial door, but we could postulate a variety of so called “biases” or psychological mechanisms for this preference.)

However, the correct answer to the question is “yes”: The player is more likely to win the car if she always switches to the alternative door. There are many possible ways to explain this:

Perhaps the simplest explanation asks: What is the probability of winning the car with the player’s initial choice (i.e., without any other interactions)? Most people would agree that \(p(win\ with\ d_i) = \frac{1}{3}\) for any arbitrary door \(d_i\). Accepting that Monty Hall’s actions cannot possibly change this (as he cannot transfer the car to a different location), we should conclude that the winning chance for sticking with the initial choice also is \(p(stay) = \frac{1}{3}\) and \(p(switch) = 1 - p(stay) = \frac{2}{3}\). (The subtle reason for the benefit for switching is that Monty Hall must take the car’s location into account to reliably open a door that reveals a goat. Thus, Monty Hall curates the choices in a way that adds information.)

A model-based approach could visualize the three possible options for the car’s location (which are known to be equiprobable, given the car’s random allocation). By explicating the consequences of an initial choice (e.g., of Door 1), we see that always sticking with the initial choice has a theoretical chance of winning in \(p(stay) = \frac{1}{3}\) of cases. By contrast, always switching to the alternative door provides a higher change of winning of \(p(switch) = \frac{2}{3}\) (see Figure 20.4).

Figure 20.4: An explanation for the superiority of switching in the Monty Hall problem. (Source: Illustration from Wikipedia: Monty Hall problem.)

Many people find the correct solution so counterintuitive that they are unwilling to accept these explanations. If someone refuses to accept the theoretical arguments, an alternative way of convicing them is by simulating a large number of games and then compare the success of either staying or switching doors.34

20.3.3 Representing the environment

Our task is to use simulations to decide and justify whether the the contestant should stay or switch. More precisely, is the probability of winning the game by switching larger than by staying with the initial door? What are the probabilities for winning the car in both cases?

To solve this task, we create a simulation with the following features: 3 doors, random location of the car, Monty knows the car’s location and always opens a door that reveals a goat. (Exercise 20.5.7 will extend this solution to some variants of the problem.)

Preparations

The most important element for any simulation is to create a valid model of the game scenario.

We will first create a data structure that can represent the setups for N games:

# Generate a random setup:

setup <- sample(x = c("car", "goat", "goat"), size = 3, replace = FALSE)

setup

#> [1] "goat" "car" "goat"

# Create N games (with a column for each door):

N <- 100

games <- NA

# Prepare data structure:

games <- tibble::tibble(d1 = rep("NA", N),

d2 = rep("NA", N),

d3 = rep("NA", N))Sampling setups

We now create N random setups and store them in our prepared table of games:

# Fill table with random games:

set.seed(2468) # for reproducible randomness

for (i in 1:N){

setup <- sample(x = c("car", "goat", "goat"), size = 3, replace = FALSE)

games$d1[i] <- setup[1]

games$d2[i] <- setup[2]

games$d3[i] <- setup[3]

}

head(games)

#> # A tibble: 6 × 3

#> d1 d2 d3

#> <chr> <chr> <chr>

#> 1 goat car goat

#> 2 goat goat car

#> 3 car goat goat

#> 4 goat goat car

#> 5 car goat goat

#> 6 goat goat carNote that we use set.seed(2468) to ensure reproducible randomness.

Any value is fine, in principle, but avoid always using the same values.

Importantly, when first creating a simulation, using unconstrained randomness can often be a virtue, as it can yield different results!

20.3.4 Abstract solution

Our first simulation of the problem is rather abstract insofar as it ignores all details of Monty’s actions. When realizing that the game show host (named Monty) can always open a door with a goat (since there are two of them), we can simulate the outcome of the game without taking his actions into account.

We first create an auxiliary function that allows us to determine whether a game has been won. This depends on the contents of the three doors (i.e., the car’s location) and the player’s final choice (i.e., of Door 1, 2, or 3).

A game is won whenever the chosen door contains the car:

# Given the specific setup of a game, would the chosen door win the car?

win_car <- function(d1, d2, d3, choice){

out <- FALSE

setup <- c(d1, d2, d3)

if (setup[choice] == "car"){

out <- TRUE

}

return(out)

}

# Check:

win_car("car", "goat", "goat", 1)

#> [1] TRUE

win_car("car", "goat", "goat", 2)

#> [1] FALSE

win_car("car", "car", "goat", 2)

#> [1] TRUE

win_car("car", "car", "goat", 3)

#> [1] FALSENext, we simulate the outcomes of N games in three steps:

- Generate

Ninitial door choices:

As the player’s initial choices are independent of the game’s setup, we can either always pick the same door (e.g., Door 1) or select a random door (i.e., 1, 2, or 3) in each game.

As picking a random door in each game appears more plausible, we use sample() to draw N initial choices and add those as a new variable to games:

# 1. Generate and add N initial door choices:

sim_1 <- games %>%

mutate(init_door = sample(x = 1:3, size = N, replace = TRUE))Several details of this step are noteworthy:

Our

mutate()function to computeinit_door(in Step 1.) contained a second call tosample(), but always picking Door 1 should make no difference for the result, as long as each row ofgamesreally was created randomly above.35In this call to

sample(), we specifiedreplace = TRUEto ensure that repeatedly drawing the same door is possible and that sampling \(N>3\) times fromx = 1:3is possible.As we set

set.seed(2468)above, the first call this instance ofsample()will always yield the same sequence of results. However, as we did not fix a new value ofset.seed()here, repeating this step multiple times would create different values every time.

- Determine all wins by staying:

Given a specific setup of doors and the player’s initial choice, we can determine whether staying with the initial choice would win the car:

# 2. Determine wins by staying:

sim_1 <- sim_1 %>%

mutate(win_stay = purrr::pmap_lgl(list(d1, d2, d3, init_door), win_car))Note: An example for different map() functions of the purrr package:

# Functions:

square <- function(x){ x^2 }

expone <- function(x, y){ x^y }

# Data:

tb <- tibble(n_1 = sample(1:9, 100, replace = TRUE),

n_2 = sample(1:3, 100, replace = TRUE))

# map functions to every row of tb:

tb %>%

mutate(sqr = purrr::map_dbl(.x = tb$n_1, .f = square), # 1 argument

exp = purrr::map2_dbl(n_1, n_2, expone), # 2 arguments

sum = purrr::pmap_dbl(list(n_1, n_2, sqr), sum) # 3+ arguments

)

#> # A tibble: 100 × 5

#> n_1 n_2 sqr exp sum

#> <int> <int> <dbl> <dbl> <dbl>

#> 1 1 2 1 1 4

#> 2 9 1 81 9 91

#> 3 3 3 9 27 15

#> 4 2 3 4 8 9

#> 5 4 1 16 4 21

#> 6 9 3 81 729 93

#> # … with 94 more rows

#> # ℹ Use `print(n = ...)` to see more rows- Determine all wins by switching:

The third and final step may require some explanation: Assuming that Monty knows the car’s location, but always opens a door with a goat, we can conclude that switching doors wins the game in exactly those cases in which the player’s initial choice did not win the game. A simpler way of expressing this is: Switching doors wins the game whenever the initial choice does not succeed.

Note that we could combine the three steps above in a single mutate() command:

sim_1 <- games %>%

mutate(init_door = sample(1:3, N, replace = TRUE),

win_stay = purrr::pmap_lgl(list(d1, d2, d3, init_door), win_car),

win_switch = !win_stay)- Evaluate results:

At this point sim_1 contains all the information that we need.

For instance, to compare the result of consistently staying or switching, we simply inspect the final two variables:

head(sim_1)

#> # A tibble: 6 × 6

#> d1 d2 d3 init_door win_stay win_switch

#> <chr> <chr> <chr> <int> <lgl> <lgl>

#> 1 goat car goat 1 FALSE TRUE

#> 2 goat goat car 3 TRUE FALSE

#> 3 car goat goat 3 FALSE TRUE

#> 4 goat goat car 1 FALSE TRUE

#> 5 car goat goat 3 FALSE TRUE

#> 6 goat goat car 2 FALSE TRUE

# Results for staying vs. switching:

mean(sim_1$win_stay)

#> [1] 0.32

mean(sim_1$win_switch)

#> [1] 0.68As our theoretical analysis has shown, always switching turns out to be about twice as good as always sticking to the initial choice.

20.3.5 Detailed solution

A more concrete simulation would also incorporate the details of Monty’s actions:

Note that we can select game setups and determine the locations of the car and goats as follows:

setup <- games[1, ] # select a specific setup (row in games)

setup

# Determining locations of interest:

which(setup == "car") # car's location/index

which(setup == "goat") # goat locationsTo flesh out the details of a particular game, we first need to create two additional auxiliary functions:

- Simulate Monty’s actions (i.e., which door is being opened, based on the setup and the player’s initial choice):

# 1. Monty acts as a function of current setup and player_choice:

host_act <- function(d1, d2, d3, player_choice){

door_open <- NA

setup <- c(d1, d2, d3)

ix_goats <- which(setup == "goat") # indices of goats

# Distinguish 2 cases:

if (setup[player_choice] == "car"){ # player's initial choice would win the car:

door_open <- sample(ix_goats, 1) # show a random goat (without preference)

} else { # player's initial choice is a goat:

door_open <- ix_goats[ix_goats != player_choice] # show the other/unchosen goat

}

return(door_open)

}

# Check:

host_act("car", "goat", "goat", 2) # Monty must open d3

#> [1] 3

host_act("car", "goat", "goat", 3) # Monty must open d2

#> [1] 2

host_act("car", "goat", "goat", 1) # Monty can open d2 or d3

#> [1] 2

host_act("car", "goat", "goat", 1) # Monty can open d2 or d3

#> [1] 3Note that we enter the contents of the three doors as three distinct arguments (i.e., d1, d2, and d3), rather than as one argument that uses the vector setup. The reason for this is that we later want to use entire rows of games as inputs to the map() family of functions of the purrr package.

- Identify the door to switch to (based on an initial choice and Monty’s actions):

# 2. To which door would the player switch (based on initial choice and Monty's action):

switch_door <- function(door_init, door_open){

door_switch <- NA

doors <- 1:3

door_switch <- doors[-c(door_init, door_open)]

return(door_switch)

}

# Check:

switch_door(1, 2)

#> [1] 3

switch_door(1, 3)

#> [1] 2

switch_door(2, 1)

#> [1] 3

switch_door(2, 3)

#> [1] 1

switch_door(3, 1)

#> [1] 2

switch_door(3, 2)

#> [1] 1- Simulate

Ngames:

Equipped with these functions, we can now generate all details of N games as a single dplyr pipe:

sim_2 <- games %>%

mutate(door_init = sample(1:3, N, replace = TRUE), # sample initial choices

# door_init = rep(1, N), # (always pick Door 1 as initial choice)

# door_init = sim_1$init_door, # (use the same choices as above)

door_host = purrr::pmap_int(list(d1, d2, d3, door_init), host_act),

door_switch = purrr::pmap_int(list(door_init, door_host), switch_door),

win_stay = purrr::pmap_lgl(list(d1, d2, d3, door_init), win_car),

win_switch = purrr::pmap_lgl(list(d1, d2, d3, door_switch), win_car)

)

head(sim_2)

#> # A tibble: 6 × 8

#> d1 d2 d3 door_init door_host door_switch win_stay win_switch

#> <chr> <chr> <chr> <int> <int> <int> <lgl> <lgl>

#> 1 goat car goat 3 1 2 FALSE TRUE

#> 2 goat goat car 2 1 3 FALSE TRUE

#> 3 car goat goat 2 3 1 FALSE TRUE

#> 4 goat goat car 3 2 1 TRUE FALSE

#> 5 car goat goat 3 2 1 FALSE TRUE

#> 6 goat goat car 2 1 3 FALSE TRUE- Evaluate results:

# Results for staying vs. switching:

mean(sim_2$win_stay)

#> [1] 0.37

mean(sim_2$win_switch)

#> [1] 0.63As expected, always switching is still about twice as good as always sticking to the initial choice.

20.3.6 Visualizing simulation results

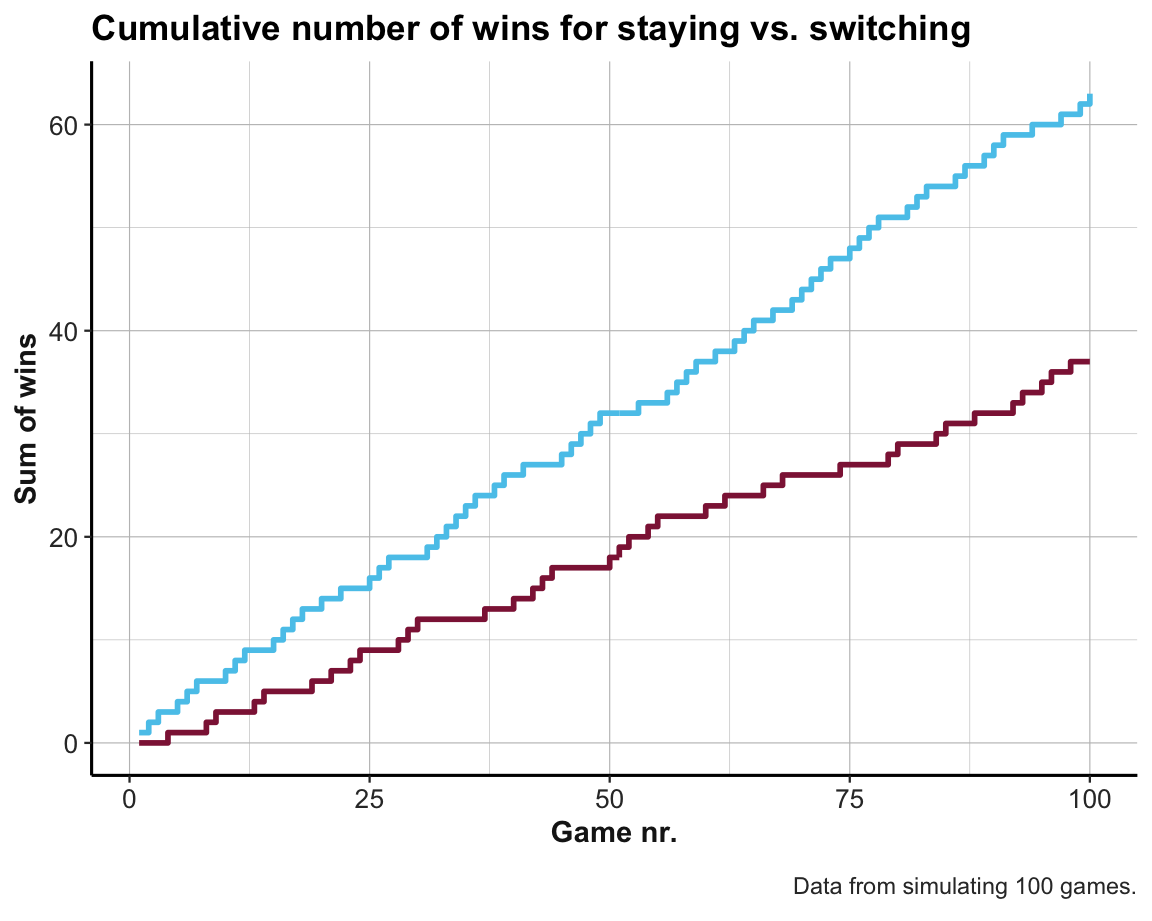

Whenever running a simulation, it is a good idea to visualize its results. To visualize the number of cumulative wins for consistently using either strategy, we first add some auxiliary variables:

# Add game nr. and cumulative sums to sim:

sim_2 <- sim_2 %>%

mutate(nr = 1:N,

cum_win_stay = cumsum(win_stay),

cum_win_switch = cumsum(win_switch))

dim(sim_2)

#> [1] 100 11Plot the number of wins per strategy (as a step function):

ggplot(sim_2) +

geom_step(aes(x = nr, y = cum_win_switch), color = Seeblau, size = 1) +

geom_step(aes(x = nr, y = cum_win_stay), color = Bordeaux, size = 1) +

labs(title = "Cumulative number of wins for staying vs. switching",

x = "Game nr.", y = "Sum of wins",

caption = paste0("Data from simulating ", N, " games.")) +

theme_ds4psy()

Note that the graph shows that both strategies are indistinguishable at first, but increasingly separate as we play more games. Also, there can be quite long stretches of games for which either strategy fails to win.

Also, the results of our abstract and detailed simulations differ although we used the same setup of games for both. This is because we used sample() to determine the player’s initial choice door_init twice. If we wanted to obtain the same results in both simulations, we could sample the player’s initial choices only once for both simulations or use set.seed() to reproduce the same random sequence twice.

However, the variation in results is actually informative. Increasing the number of games N will allow us to approximate the theoretically expected values (of \(p(stay) = \frac{1}{3}\) and \(p(switch) = \frac{2}{3}\)).

Practice

Explain in your own words why the results for our abstract and detailed solutions slightly differ from each other.

We decided to let the player choose a random initial door in each game. Confirm that simulating the case in which the player always picks Door 1 would yield the same (qualitative) result.

Adjust the abstract and detailed simulations so that both allow for random elements (e.g., random game setups and random picks of the player’s initial door), but nevertheless yield exactly the same result.

20.4 Conclusion

20.4.1 Caveat

Models and simulations can be fun, but should serve to answer questions, rather than becoming an end in itself. Beware of creating new problems by looking for particular solutions:

Only when you look at an ant through a magnifying glass on

a sunny day you realise how often they burst into flames.(Harry Hill)

In reality, most models and simulations generate at least as many questions as they answer.

20.4.2 Summary

Simulations are a programming technique that force us to explicate premises and processes that are easily overlooked when merely relying on verbal problem descriptions.

In this chapter, we introduced and illustrated two basic types of simulations:

by enumeration (explicating the information contained in a problem description)

by sampling (involving actual randomness and the need for managing repetitions)

Both types of simulations involve probabilistic information, but deal with it in different ways.

When simulating non-trivial problems with many assumptions, even basic simulations often yield unexpected and surprising results. In addition, simulations often allow to evaluate the robustness of phenomena (e.g., the variability in results due to random sampling).

20.4.3 Resources

Pointers to related sources of inspirations and ideas:

On simulations

- Chapter 20 Simulation (by Peng, 2016) provides a basic introduction into generating random numbers and simple simulations.

On the Monty Hall problem

Wikipedia: Monty Hall problem summarizes the history and controversy surrounding the problem, and provides pointers to several variants and similar puzzles.

See Statistics How To: Monty Hall problem for additional links and resources.

The psychology of the Monty Hall problem is investigated in Krauss & Wang (2003). The problem’s Bayesian structure and reasons for the most common error are explicated in Section 5. Applications of Neth et al. (2021).

-

Math 187: Introduction to Cryptography lets you play interactive simulations:

20.5 Exercises

The following exercises further explore the interplay between simulations and analytic solutions by examining different types of problems:

- simple mathematical puzzles

- more Bayesian situations

- other versions of the Monty Hall problem

- related probability puzzles

Note that some of these problems look like simple brain teasers or toy puzzles. This is ok — for it should be fun to solve them — but they still often hint at serious implications.

20.5.1 Fun factorials

How many solutions exist for the equation

- \(m! = m^3 – m\)

with \(m\) in \(\mathbb{N}\)?

We can easily conduct a small simulation that solves this problem.

- This puzzle appeared in the “Weekly riddle” column of SPON (Holger Dambeck, 2024-03-31, URL) and the ‘Mr Math11’ YouTube channel.

20.5.2 The forgetful waiter

A group of six people visits a restaurant. Each guest orders a different desert. The waiter lost track of who ordered what and serves the deserts randomly.

Use a simulation to answer the following questions:

- What is the probability that exactly four guests receive the dish they ordered?

- What is the probability that exactly one guest receives the ordered dish?

- What is the probability that nobody receives the dish they ordered?

20.5.3 Soccer simulations

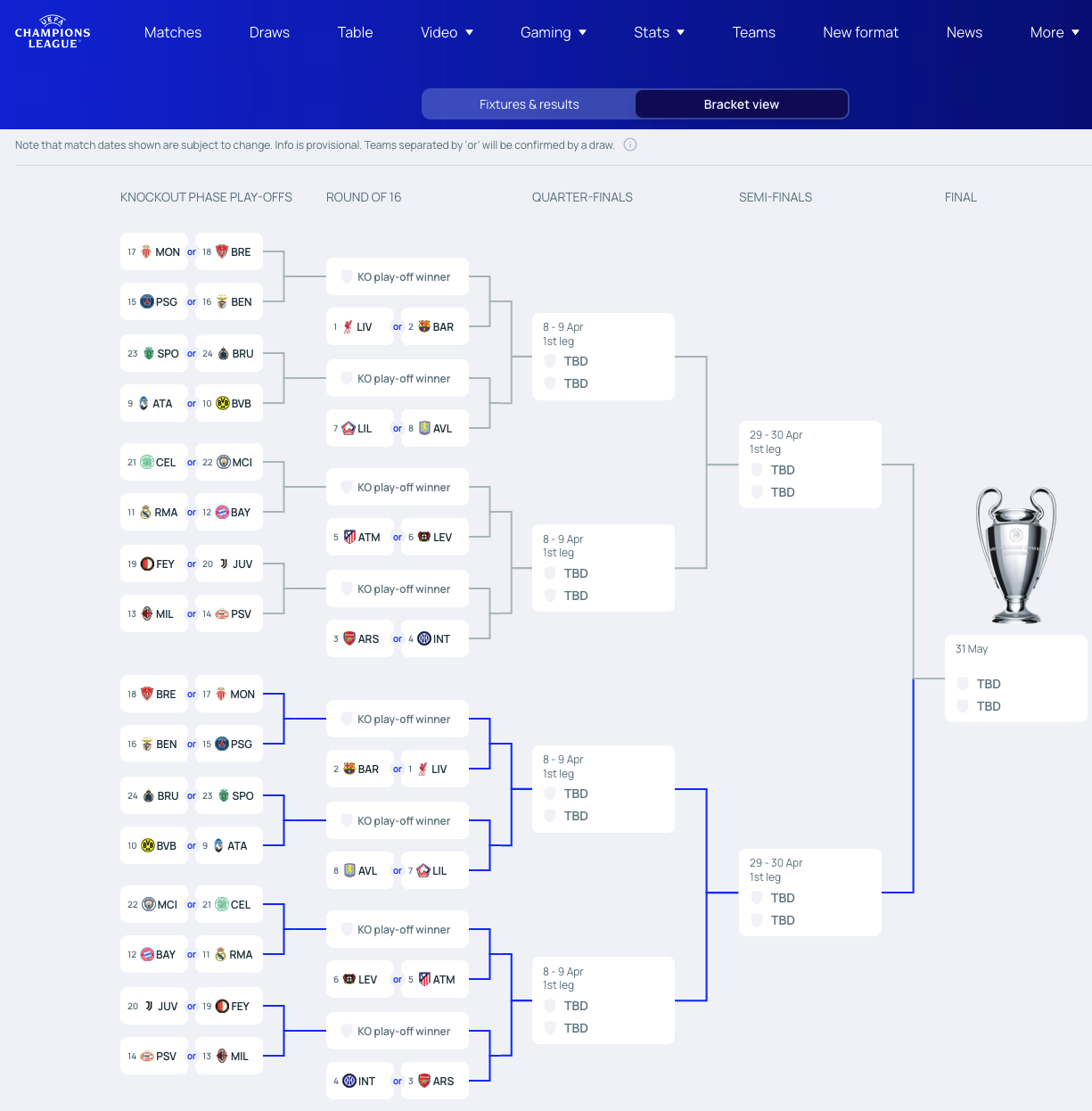

After the initial league phase of the UEFA Champions League 2024/2025, the only German team directly qualified for the next round is Bayer Leverkusen (at position 6 of 36 teams). By contrast, the teams of Real Madrid, FC Bayern München, Celtic Glasgow and Manchester City have to win a knock-out playoff match in order to advance to the next level (i.e., the round of 16). Here’s the current situation (as of 2025-01-30):

- Playoffs 3 and 4: Real Madrid or FC Bayern München vs. Celtic Glasgow or Manchester City

- Round of 16: Winner of playoffs 3 and 4 (Real, Bayern, Celtic or City) vs. Atlético or Leverkusen

Figure 20.5: Possible paths to the final of the UEFA champions league in 2024/2025 (as of 2025-01-30; image from UEFA champions league).

Prior to the random draw of playoff pairs and their victories, the press is speculating about the following scenarios:

- How likely is it that both British teams (Celtic and City) advance to the round of 16?

- How likely is a German match-up (i.e., Bayern vs. Leverkusen) in the round of 16?

- How likely is both a German and a Spanish match-up (i.e., Real vs. Atlético) in the round of 16?

- How likely is a German or a Spanish match-up in the round of 16?

Please illuminate the press by either a mathematical or a simulation model.

Hint: First assume that the outcome of each match-up is purely random is a good start. How do these probabilities change when assuming that the chance of winning for Bayern and Real is twice as high as that of their opponents?

20.5.4 How many shells in each hand?

A child collects 30 small clam shells on a beach. She holds most of them in her left hand, the others in her right hand. Asked about their number, she responds:

“When I add their number to the square and cube of itself, and do this for both hands, the total amounts to 8058.”

- How many shells does she carry in each hand?

This mathematical puzzle can be solved in a brute force simulation (using loops).

Let’s also keep track of the number of trials we consider:

- How many combinations does our simulation compute?

Notes

- An algebraic solution would require using the binomial formulas

\((a + b)^{2} = a^2 + 2ab + b^2\) and

\((a + b)^3 = a^3 + 3a^2b + 3ab^2 + b^3\).

- A version of this puzzle appears in the book Famous puzzles of great mathematicians (2009) by Miodrag S. Petkovic.

20.5.5 More Bayesian situations

The mammography problem shown in Section 20.2 is a special case of a more general class of Bayesian situations, which require the computation of an inverse conditional probability \(p(C|T)\) when given a prior probability \(p(C)\) and two conditional probabilities \(p(T|C)\) and \(p(T|\neg C)\).

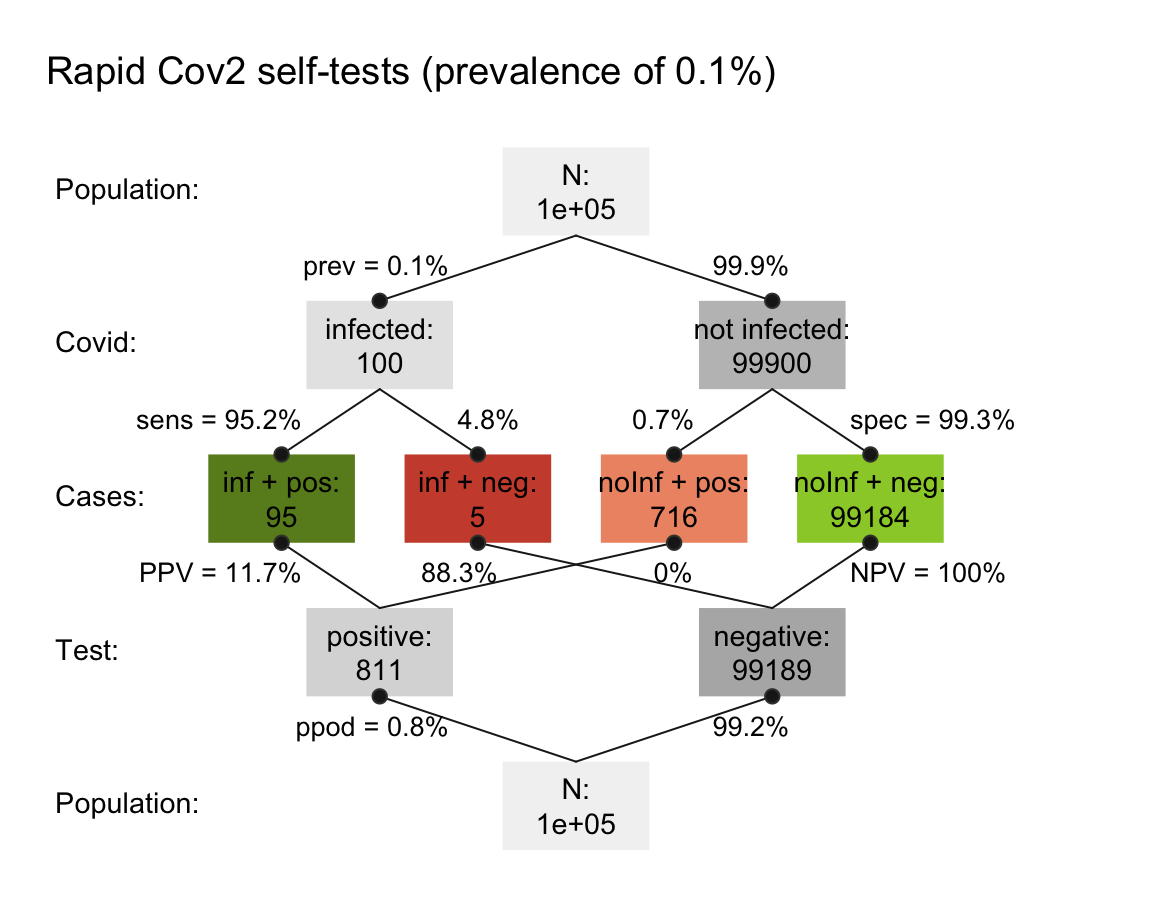

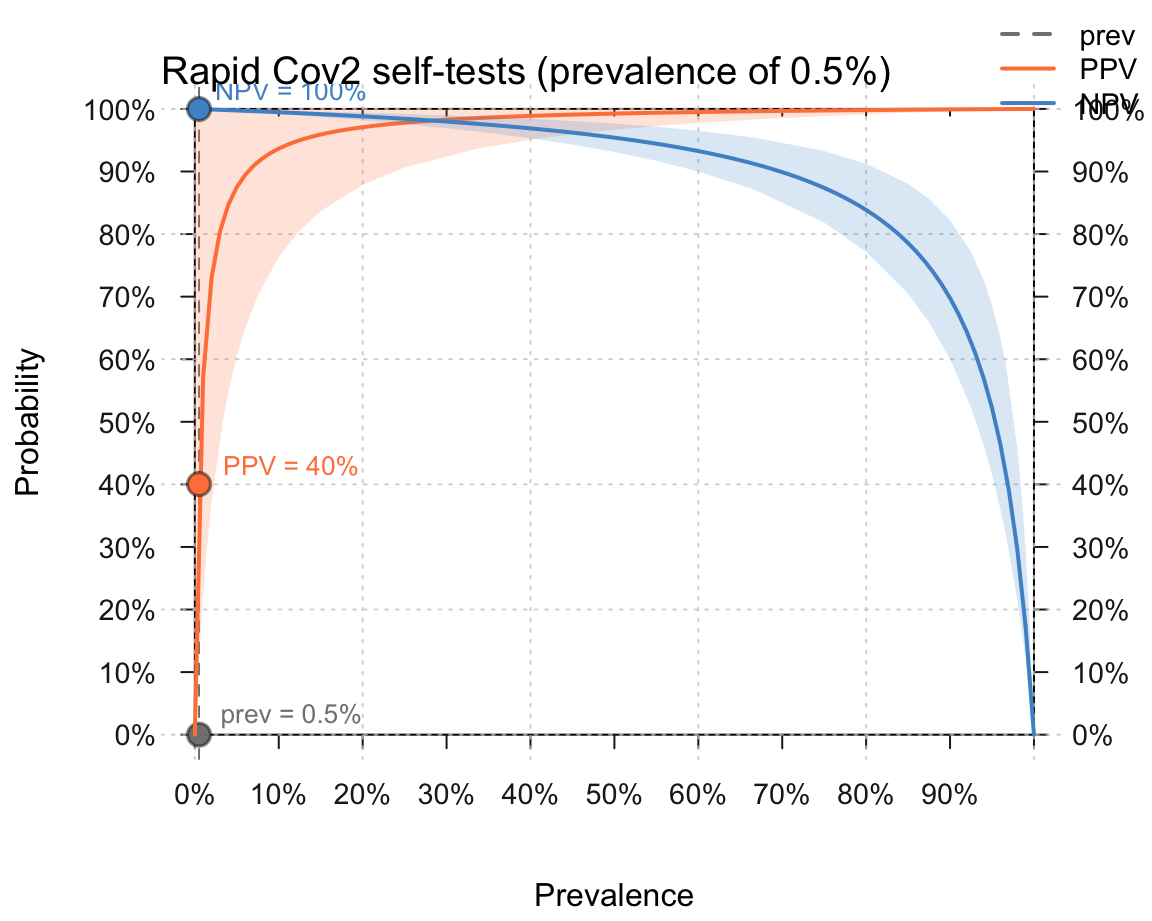

In 2021, an intriguing version of this problem is the following: The list of rapid SARS-CoV-2 self tests of the BfArM contains over 450 tests. Each test is characterized by its sensitivity \(p({T+}|infected)\) and specificity \(p({T-}|not\ infected)\). The current local prevalence of SARS-CoV-2 infections (as of 2021-05-20) is 100 out of 100,000 people.

Download the table of all tests and compute their mean sensitivity and specificity values.

-

Compute two conditional probabilities:

- What is the average probability of being infected upon a positive test result (i.e., \(PPV = p(infected|{T+})\)?

- What is the average probability of not being infected upon a negative test result (i.e., \(NPV = p(not\ infected|{T-})\)?

How would the values of 2. change, if the local prevalence of SARS-CoV-2 infections rose to 500 out of 100,000 people?

Conduct a simulation by enumeration to compute both values for a population of 10,000 people.

Bonus: Try visualizing your results (in any way, i.e., not necessarily by using the riskyr package).

Solution

The following table summarizes the mean sensitivity and specificity values of all tests:

| n | sens_mean | sens_min | sens_max | sens_sd | spec_mean | spec_min | spec_max | spec_sd |

|---|---|---|---|---|---|---|---|---|

| 455 | 95.2191 | 80.2 | 100 | 3.111103 | 99.28365 | 96.7 | 100 | 0.6847694 |

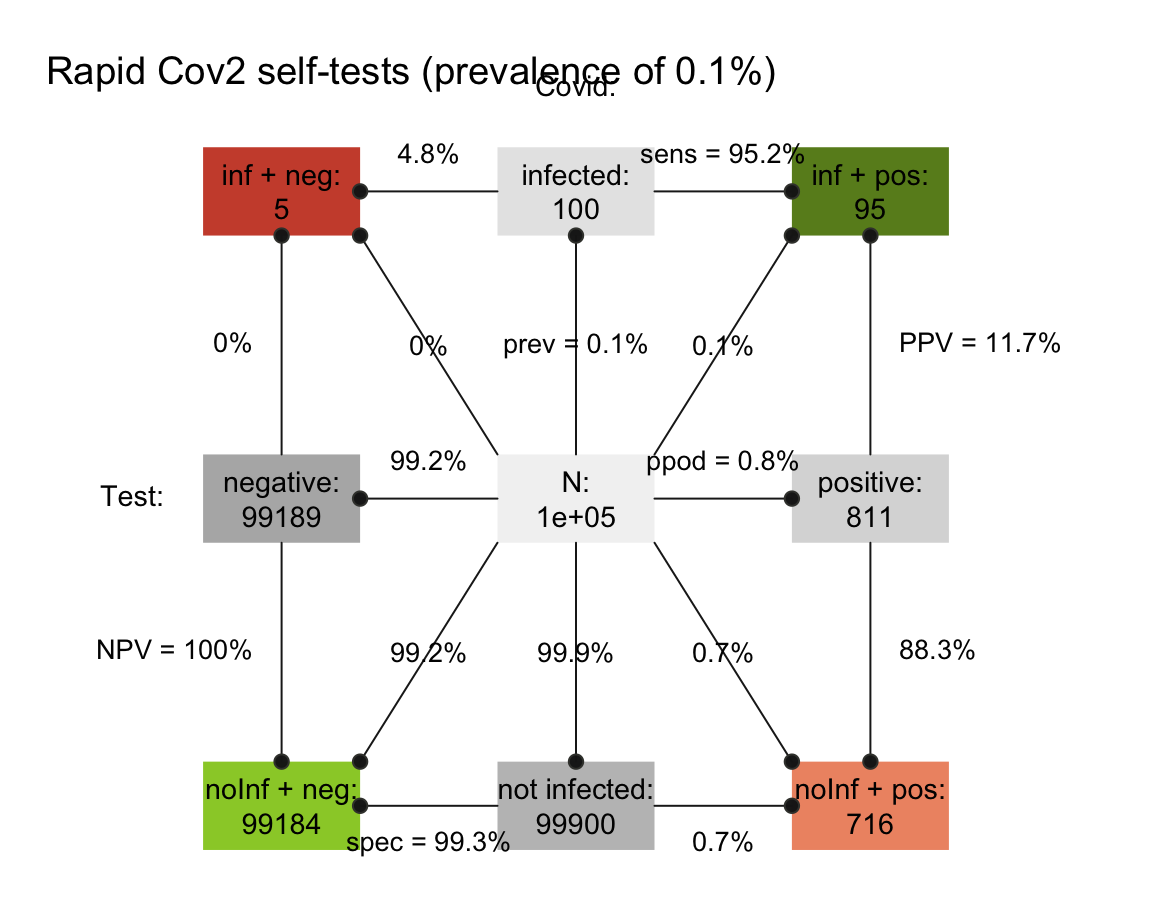

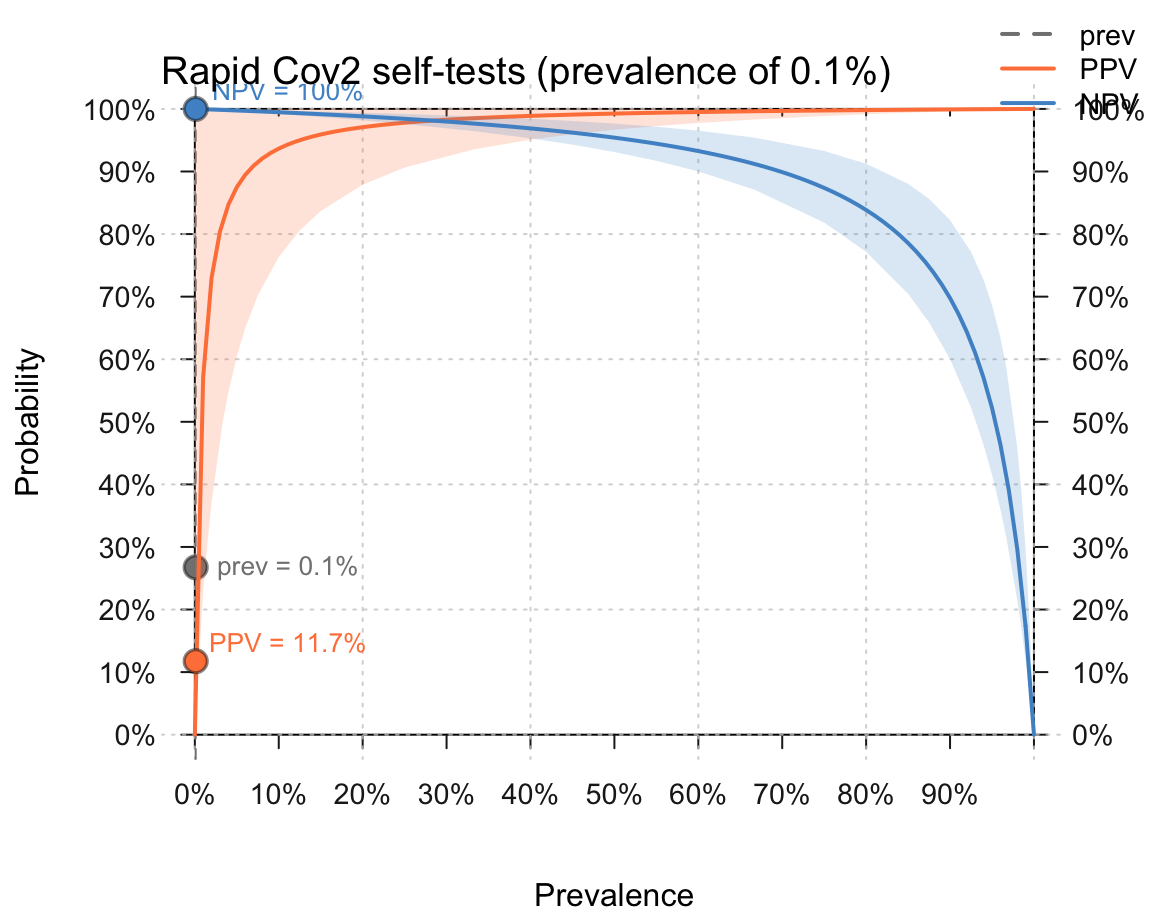

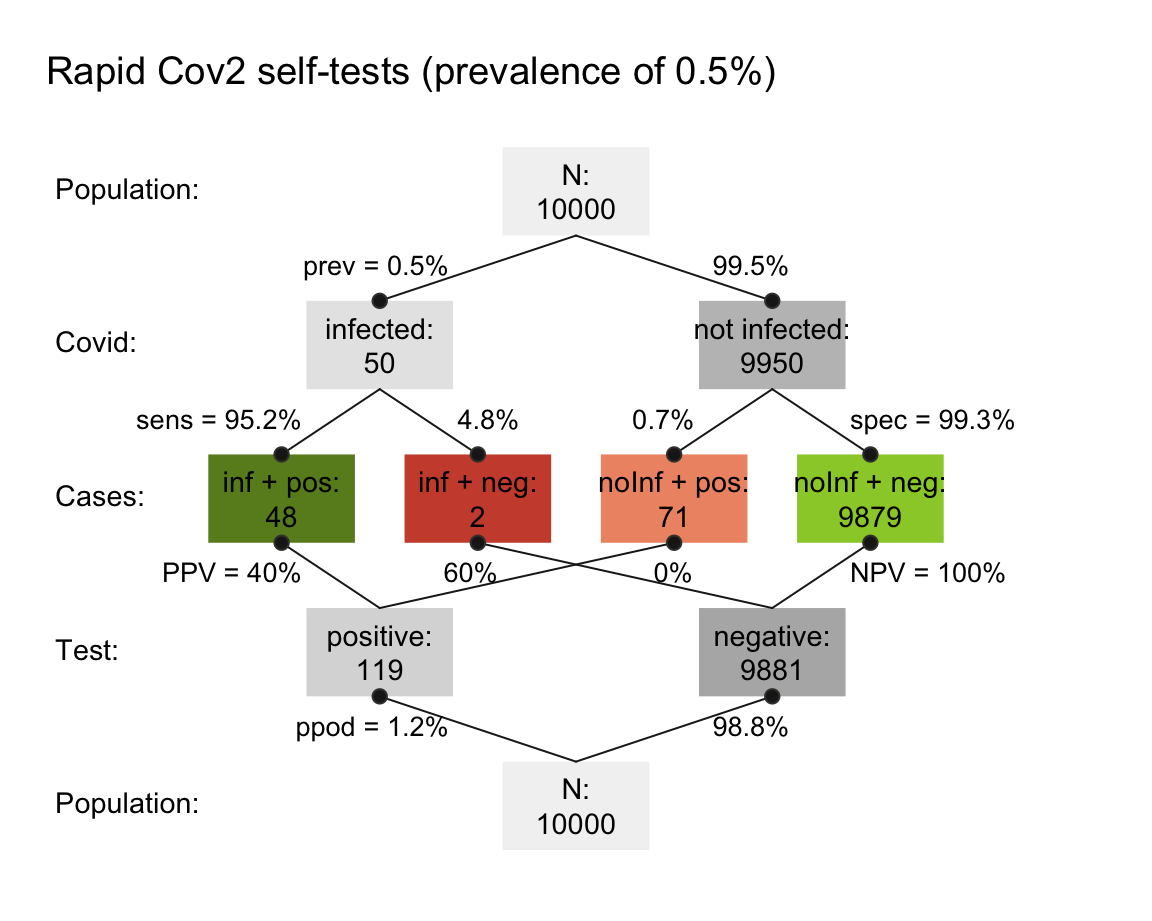

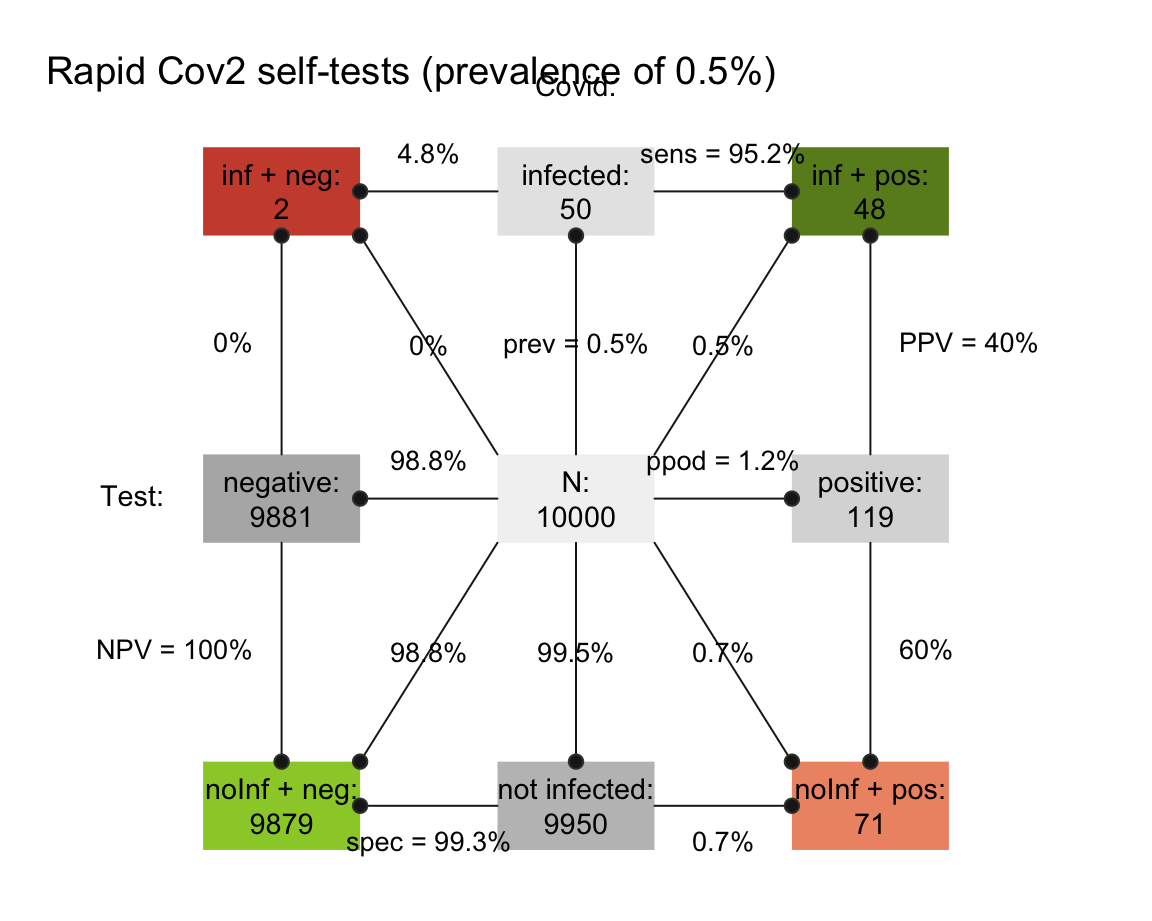

The results of 2. and 3. could be computed by the Bayesian formula (see in Section 20.2). The following visualizations result from creating riskyr scenarios.

- Solutions for a prevalence of 100 out of 100,000 people:

- Solutions for a prevalence of 500 out of 100,000 people:

20.5.6 Solving Bayesian situations by sampling

In Section 20.2, we solved so-called Bayesian situations (and the mammography problem) by enumerating cases. However, we can also use a sampling approach to address and solve such problems.

Use a sampling approach for solving the mammography problem (discussed in Section 20.2).

Adopt either approach (i.e., either enumeration and sampling) for solving the cab problem (originally introduced by Kahneman & Tversky, 1972a; and discussed by, e.g., Bar-Hillel, 1980):

A cab was involved in a hit-and-run accident at night.

Two cab companies, the Green and the Blue, operate in the city.

You are given the following data:

1. 85% of the cabs in the city are Green and 15% are Blue.

2. A witness identified the cab as a Blue cab.

The court tested his ability to identify cabs under the appropriate visibility conditions. When presented with a sample of cabs (half of which were Blue and half of which were Green) the witness correctly identified each color in 80% of the cases and erred in 20% of the cases.What is the probability that the cab involved in the accident was Blue rather than Green?

Hint: Despite their superficial differences, the structure of the cab problem is almost identical to the mammography problem (e.g., compare Figures 4 and 9 of Neth et al., 2021).

- Discuss the ways in which the enumeration and sampling approaches differ from each other and from deriving an analytic solution. What are the demands of each method and the key trade-offs between them?

20.5.7 Monty Hall reloaded

In Section 20.3.1, we introduced the notorious brain teaser known as the Monty Hall dilemma (Savant, 1990; MHD, popularized by Selvin, 1975) and showed how it can be solved by a simulation that randomly samples environmental states (i.e., the setup of games) and actions (e.g., the host’s and player’s choices).

An erroneous MHD simulation

A feature of simulations is that they are only as good as the thinking that created them. And just like statements or scientific theories, they are not immune to criticism, and can be wrong and misleading. In the following, we illustrate this potential pitfall of simulations by creating an enumeration that yields an erroneous result.

So stay alert — and try to spot any errors we make! Importantly, the error is not in the method or particular type of simulation, but in our way of thinking about the problem.

- Setup

Without considering any specific constraints, the original problem description of the MHD describes a 3x3x3 grid of cases:

- 3 car locations

- 3 initial player picks

- 3 doors that Monty could open

The following tibble grid_MH creates all 27 cases that are theoretically possible:

# Setup of options:

car <- 1:3

player <- 1:3

open <- 1:3

# Table/space of all 27 options:

grid_MH <- expand_grid(car, player, open)

# names(grid_MH) <- c("car", "player", "open")

grid_MH <- tibble::as_tibble(grid_MH) %>%

arrange(car, player)

grid_MH

#> # A tibble: 27 × 3

#> car player open

#> <int> <int> <int>

#> 1 1 1 1

#> 2 1 1 2

#> 3 1 1 3

#> 4 1 2 1

#> 5 1 2 2

#> 6 1 2 3

#> # … with 21 more rows

#> # ℹ Use `print(n = ...)` to see more rows- Adding constraints

However, not all these cases are possible, as Monty cannot open just any of the three doors. More specifically, the problem description imposes two key constraints on Monty’s action:

Condition 1: Monty cannot open the door actually containing the

car.Condition 2: Monty cannot open the door initially chosen by the

player.

This allows us to eliminate some cases, or describe which of the 27~theoretical cases are actually possible in this problem:

grid_MH$possible <- TRUE # initialize

grid_MH$possible[grid_MH$open == grid_MH$car] <- FALSE # condition 1

grid_MH$possible[grid_MH$open == grid_MH$player] <- FALSE # condition 2

grid_MH

#> # A tibble: 27 × 4

#> car player open possible

#> <int> <int> <int> <lgl>

#> 1 1 1 1 FALSE

#> 2 1 1 2 TRUE

#> 3 1 1 3 TRUE

#> 4 1 2 1 FALSE

#> 5 1 2 2 FALSE

#> 6 1 2 3 TRUE

#> # … with 21 more rows

#> # ℹ Use `print(n = ...)` to see more rows- Eliminating impossible cases

Next, we remove the impossible cases and consider only possible ones (i.e., possible == TRUE):

grid_MH_1 <- grid_MH[grid_MH$possible == TRUE, ]

grid_MH_1

#> # A tibble: 12 × 4

#> car player open possible

#> <int> <int> <int> <lgl>

#> 1 1 1 2 TRUE

#> 2 1 1 3 TRUE

#> 3 1 2 3 TRUE

#> 4 1 3 2 TRUE

#> 5 2 1 3 TRUE

#> 6 2 2 1 TRUE

#> # … with 6 more rows

#> # ℹ Use `print(n = ...)` to see more rowsNote that we used base R functions to add a new variable (possible), determine its values (by logical indexing) and then filter its rows (by logical indexing) to leave only possible cases in grid_MH_1.

The following tidyverse solution replaces the last two steps by a simple dplyr pipe (and would have worked without the additional possible variable):

grid_MH_1 <- grid_MH %>%

filter(open != car, open != player) # condition 1 and 2

grid_MH_1

#> # A tibble: 12 × 4

#> car player open possible

#> <int> <int> <int> <lgl>

#> 1 1 1 2 TRUE

#> 2 1 1 3 TRUE

#> 3 1 2 3 TRUE

#> 4 1 3 2 TRUE

#> 5 2 1 3 TRUE

#> 6 2 2 1 TRUE

#> # … with 6 more rows

#> # ℹ Use `print(n = ...)` to see more rowsToDo: Visualize the 12 possible cases in a 3x3x3 cube.

- Determine wins by strategy

Finally, we can determine the number of wins, based on the player’s strategy (i.e., staying with the original choice, or switching to the other remaining door):

Win by staying: Staying wins whenever the location of the

carmatches the initial choice of theplayer.Win by switching: As we are only left with

possiblecases, switching wins whenever staying fails to win.

grid_MH_1$win_stay <- FALSE # initialize

grid_MH_1$win_switch <- FALSE

grid_MH_1$win_stay[grid_MH_1$car == grid_MH_1$player] <- TRUE # win by staying

grid_MH_1$win_switch[grid_MH_1$win_stay == FALSE] <- TRUE # win by switching

grid_MH_1

#> # A tibble: 12 × 6

#> car player open possible win_stay win_switch

#> <int> <int> <int> <lgl> <lgl> <lgl>

#> 1 1 1 2 TRUE TRUE FALSE

#> 2 1 1 3 TRUE TRUE FALSE

#> 3 1 2 3 TRUE FALSE TRUE

#> 4 1 3 2 TRUE FALSE TRUE

#> 5 2 1 3 TRUE FALSE TRUE

#> 6 2 2 1 TRUE TRUE FALSE

#> # … with 6 more rows

#> # ℹ Use `print(n = ...)` to see more rowsOf course we could have used dplyr mutate() commands, rather than base R indexing to initialize and define these win_stay() and win_switch variables. (We will show a tidyverse solution below.)

- Count wins by strategy

To compare both strategies, we can now count how often each strategy wins:

# Result*(erroneous):

(n_win_stay <- sum(grid_MH_1$win_stay)) # win by staying

#> [1] 6

(n_win_switch <- sum(grid_MH_1$win_switch)) # win by switching

#> [1] 6- Interpret solution

This analysis suggests that the chances are 50:50 (or rather 6:6) — which is wrong!

But where is the error? And can we fix this simulation and reach the right solution by enumerating cases?

Analysis: What went wrong?

The previous simulation illustrates a crucial problem with enumerating cases:

The cases noted in the reduced grid_MH_1 are not equiprobable (i.e., expected to appear with an equal probability).

To see this, let’s take another look at our reduced grid_MH_1:

# Re-inspect grid_MH_1:

grid_MH_1 %>% filter(car == 1) # car behind door 1:

#> # A tibble: 4 × 6

#> car player open possible win_stay win_switch

#> <int> <int> <int> <lgl> <lgl> <lgl>

#> 1 1 1 2 TRUE TRUE FALSE

#> 2 1 1 3 TRUE TRUE FALSE

#> 3 1 2 3 TRUE FALSE TRUE

#> 4 1 3 2 TRUE FALSE TRUE

grid_MH_1 %>% filter(player == 1) # player picks door 1:

#> # A tibble: 4 × 6

#> car player open possible win_stay win_switch

#> <int> <int> <int> <lgl> <lgl> <lgl>

#> 1 1 1 2 TRUE TRUE FALSE

#> 2 1 1 3 TRUE TRUE FALSE

#> 3 2 1 3 TRUE FALSE TRUE

#> 4 3 1 2 TRUE FALSE TRUEAlthough the car is located equally often overall behind each door (i.e, in 4 out of 12 cases) and the player initially chooses each door with an equal chance (i.e, in 4 out of 12 cases), the combinations of car and player show an odd regularity that is not motivated by the original problem. Specifically, the three possible cases in which car == player appear twice in the table, but really describe the same original configuration of the game.

Given the problem description, each case for which car != player should be as likely as each case for which car == player.

However, our analysis doubled those cases in which Monty had two possible options for opening a door (i.e., the cases of car == player). Put another way, the set of cases in grid_MH_1 assumes that the player — by some prescient miracle — is twice as likely to choose the door with the car than to choose a different door. As staying is the successful strategy in this case, this analysis overestimates the overall success rate of staying with the initial choice.

By selecting cases that were possible, we implicitly conditionalized the number of cases on the number of Monty’s options for opening a door. This is essentially the same error that people intuitively make when assuming there is a 50:50 chance after Monty has opened a door. Somewhat ironically, this error was obscured further by disguising it as a seemingly sophisticated simulation!

The lesson to learn here is not that simulations are bad, but that they must not be trusted blindly.

As simulations explicate assumptions and the consequences of mechanisms that we cannot solve analytically, the results from a simulation are only valid if its assumptions are sound and its mechanism is implemented in a faithful manner.

Crucially, the error here was not caused by our method (i.e, using a particular type of simulation), but in an inappropriate conceptualization of the problem (assuming that all possible cases were equiprobable).

So how could we create a correct simulation by enumeration?

Correction 1

Actually, a correct solution is even simpler than our initial one.

In the following alternative, we only consider possible cases prior to Monty’s actions:

# space of only 9 options:

grid_MH_2 <- expand_grid(car, player)

# names(grid_MH_2) <- c("car", "player")

# grid_MH_2 <- tibble::as_tibble(grid_MH_2) %>% arrange(car)

grid_MH_2

#> # A tibble: 9 × 2

#> car player

#> <int> <int>

#> 1 1 1

#> 2 1 2

#> 3 1 3

#> 4 2 1

#> 5 2 2

#> 6 2 3

#> # … with 3 more rows

#> # ℹ Use `print(n = ...)` to see more rowsImportantly, given the problem description, these 9 cases actually are equi-probable! And since Monty can always open some door (as at least one of the non-chosen doors does not contain the car), we do not even need to consider his action to determine our chances of winning by staying or switching. In fact, we can count all cases of wins, based on strategy, exactly as before — independently of Monty’s actions:

grid_MH_2$win_stay <- FALSE # initialize

grid_MH_2$win_switch <- FALSE # initialize

grid_MH_2$win_stay[grid_MH_2$car == grid_MH_2$player] <- TRUE # from 1

grid_MH_2$win_switch[grid_MH_2$win_stay == FALSE] <- TRUE # from 2

grid_MH_2

#> # A tibble: 9 × 4

#> car player win_stay win_switch

#> <int> <int> <lgl> <lgl>

#> 1 1 1 TRUE FALSE

#> 2 1 2 FALSE TRUE

#> 3 1 3 FALSE TRUE

#> 4 2 1 FALSE TRUE

#> 5 2 2 TRUE FALSE

#> 6 2 3 FALSE TRUE

#> # … with 3 more rows

#> # ℹ Use `print(n = ...)` to see more rows

# Result:

(n_win_stay <- sum(grid_MH_2$win_stay)) # win by staying

#> [1] 3

(n_win_switch <- sum(grid_MH_2$win_switch)) # win by switching

#> [1] 6This yields the correct solution: \(p(win\ by\ staying) = 3/9 = 1/3\), but \(p(win\ by\ switching) = 6/9 = 2/3\).

In case this result seems odd or counter-intuitive: Contrast the original problem with an alternative one in which Monty opens a door prior to the player’s initial choice (see Monty first below). In the Monty first case, the player really faces a 50:50 chance (and will often prefer to stick with his or her initial choice, which requires an additional psychological explanation). What is different in the original version, in which the player chooses first? The difference is that — when opening a door — Monty Hall always needs to avoid the car. But in the original problem, the host also needs to conditionalize his action on the player’s initial choice. Thus, Monty Hall adds information to the problem. Whereas the chance of winning by sticking with the initial choice remains fixed at \(p(win\ by\ staying) = 3/9 = 1/3\), the chance of winning by switching to the other door is twice as high: \(p(win\ by\ switching) = 6/9 = 2/3\).

A tidyverse variant

The expand.grid() functions above (and its tidyr variant expand_grid()) create all combinations of its constituent vectors with repetitions.

This generated many impossible cases, which we eliminated by imposing the constraints of the problem.

However, by only taking into account the location of the car, our setup above ignored that there are two goats (e.g., g_1 and g_2) and two distinct arrangements of them, given a specific car location:

car <- 1:3

g_1 <- 1:3

g_2 <- 1:3

# All 27 combinations:

(t_all <- expand_grid(car, g_1, g_2))

#> # A tibble: 27 × 3

#> car g_1 g_2

#> <int> <int> <int>

#> 1 1 1 1

#> 2 1 1 2

#> 3 1 1 3

#> 4 1 2 1

#> 5 1 2 2

#> 6 1 2 3

#> # … with 21 more rows

#> # ℹ Use `print(n = ...)` to see more rows

# Eliminate impossible cases:

t_all %>%

filter((car != g_1), (car != g_2), (g_1 != g_2))

#> # A tibble: 6 × 3

#> car g_1 g_2

#> <int> <int> <int>

#> 1 1 2 3

#> 2 1 3 2

#> 3 2 1 3

#> 4 2 3 1

#> 5 3 1 2

#> 6 3 2 1Importatnly, this shows that there exist 6 equiprobable setups, rather than just 3 possible car locations: Each car location corresponds to two possible arrangements of the goats.

This raises the question:

- Can we solve the problem by taking into account the goat locations?

Let’s repeating the above simulation with a more complicated starting grid:

# Table/space of all 27 x 9 options:

grid_MH_3 <- expand_grid(car, g_1, g_2, player, open)

# Eliminate impossible cases:

grid_MH_3 <- grid_MH_3 %>%

filter((car != g_1), (car != g_2), (g_1 != g_2)) %>% # impossible setups

filter(open != car, open != player) # condition 1 and 2Note that this leaves 24 cases, rather than the 12 cases of grid_MH_1 above.

This time, we determine the wins by each strategy by using dplyr mutate() commands:

grid_MH_3 <- grid_MH_3 %>%

# mutate(win_stay = FALSE, # initialize

# win_switch = FALSE) %>%

mutate(win_stay = (car == player), # determine wins

win_switch = (win_stay == FALSE))This yields the following results:

(n_win_stay <- sum(grid_MH_3$win_stay)) # win by staying

#> [1] 12

(n_win_switch <- sum(grid_MH_3$win_switch)) # win by switching

#> [1] 12which — as staying and switching appear to be equally successful — is wrong again!

Why? Because we made exactly the same error as above: By conditionalized our (larger) number of cases based on Monty’s options for opening doors, we ended up with cases that are no longer equiprobable.

Before we correct the error, note that we could have combined all steps of this simulation in a single pipe:

# S1: Simulation in 1 pipe:

expand_grid(car, g_1, g_2, player, open) %>%

filter((car != g_1), (car != g_2), (g_1 != g_2)) %>% # eliminate impossible cases

filter(open != car, open != player) %>% # impose conditions 1 and 2

mutate(win_stay = (car == player), # determine wins

win_switch = (win_stay == FALSE)) %>%

summarise(stay_wins = sum(win_stay), # summarise results

switch_wins = sum(win_switch))

#> # A tibble: 1 × 2

#> stay_wins switch_wins

#> <int> <int>

#> 1 12 12Chunking all into one pipe allows us to easily change our simulation. Here, we can easily correct our error by simply ignoring (or commenting out) all of Monty’s actions of opening doors:

# S2: Simulation ignoring all of Monty's actions:

expand_grid(car, g_1, g_2, player, open) %>%

filter((car != g_1), (car != g_2), (g_1 != g_2)) %>% # eliminate impossible cases

# filter(open != car, open != player) %>% # DO NOT impose conditions 1 and 2

mutate(win_stay = car == player, # determine wins

win_switch = win_stay == FALSE) %>%

summarise(stay_wins = sum(win_stay), # summarise results

switch_wins = sum(win_switch))

#> # A tibble: 1 × 2

#> stay_wins switch_wins

#> <int> <int>

#> 1 18 36The simplicity of constructing a simulation pipe also allows us to test other variants. For instance, we could ask ourselves:

Would only using the condition that Monty cannot open the door with the

car(but ignoring that Monty cannot open the door initially chosen by theplayer) yield the correct solution?Would only using the condition that Monty cannot open the door chosen by the

player(but ignoring that Monty cannot open the door with thecar) yield the correct solution?

We can easily check this by separating condition 1 and 2 (and only using one of them):

# S3a: Impose only condition 1:

expand_grid(car, g_1, g_2, player, open) %>%

filter((car != g_1), (car != g_2), (g_1 != g_2)) %>% # eliminate impossible cases

filter(open != car) %>% # DO impose condition 1

# filter(open != player) %>% # BUT NOT condition 2

mutate(win_stay = car == player, # determine wins

win_switch = win_stay == FALSE) %>%

summarise(stay_wins = sum(win_stay), # summarise results

switch_wins = sum(win_switch))

#> # A tibble: 1 × 2

#> stay_wins switch_wins

#> <int> <int>

#> 1 12 24

# S3b: Impose only condition 2:

expand_grid(car, g_1, g_2, player, open) %>%

filter((car != g_1), (car != g_2), (g_1 != g_2)) %>% # eliminate impossible cases

# filter(open != car) %>% # DO NOT impose condition 1

filter(open != player) %>% # BUT condition 2

mutate(win_stay = car == player, # determine wins

win_switch = win_stay == FALSE) %>%

summarise(stay_wins = sum(win_stay), # summarise results

switch_wins = sum(win_switch))

#> # A tibble: 1 × 2

#> stay_wins switch_wins

#> <int> <int>

#> 1 12 24The answer in both cases is yes — we obtain a correct solution.

However, both of these last simulations contain cases that could not occur in any actual game:

Monty Hall would either open doors chosen by the player (in S3a)

or open doors containing the car (in S3b).

Thus, these simulations would yield correct solutions, but still not provide a faithful representation of the problem:

- In the MHD, simulations that ignore all of Monty’s actions or only impose one of two conditions on his actions for opening a door yield the correct result (i.e., always switching is twice as likely to win the car as staying). However, using both of these conditions to eliminate possible cases (i.e., taking into account what he would actually do on any particular game) yields an intuitively plausible, but incorrect solution.

Overall, these explorations enable an important insight:

- There are always multiple simulations possible. Not only are there many different ways of getting a wrong solution, but also different ways of obtaining a correct solution. Obtaining a correct result is no guarantee that a simulation (i.e., the process of using a particular method or strategy) was correct as well.

This is a fundamental point, that may seem quite trivial when illustrating it with a simpler problem: Stating that the sum of \(1+1\) is 2 does not guarantee that we actually know how to add numbers. What if we had memorized the answer? Does this mean that memorized answers are always correct? What if we actually multiplied both addends (i.e., \(1\cdot1\)) and then doubled the result (i.e., \(2\cdot 1\)) to obtain their sum? This seems absurd, but would yield the correct answer in this particular case (but wrong answers for \(1+2\)). Simulations often suffer from similar complications: They typically recruit complex machinery to answer quite basic questions. If we do not understand how they work, they can easily lead to erroneous results or to correct results for the wrong reasons. Thus, to productively use and enable trust in simulations, it is essential that they are transparent (i.e., we also understand how they work).

Combinations vs. permutations

An alternative conceptualization of the problem setup could start with the three objects, but rather than expanding all possible combinations and then eliminating cases, we could only enumerate actually possible cases by arranging them (i.e., all their permutations without repetitions).

Start with the three objects:

objects <- c("car", "goat 1", "goat 2")

# Function for all permutations:

# See <https://blog.ephorie.de/learning-r-permutations-and-combinations-with-base-r>

# for explanation of recursion!

perm <- function(v) {

n <- length(v)

if (n == 1) v

else {

X <- NULL

for (i in 1:n) X <- rbind(X, cbind(v[i], perm(v[-i])))

X

}

}

# 6 possible arrangements:

perm(objects)

#> [,1] [,2] [,3]

#> [1,] "car" "goat 1" "goat 2"

#> [2,] "car" "goat 2" "goat 1"

#> [3,] "goat 1" "car" "goat 2"

#> [4,] "goat 1" "goat 2" "car"

#> [5,] "goat 2" "car" "goat 1"

#> [6,] "goat 2" "goat 1" "car"Note: See the blog post on Learning R Permutations and Combinations at Learning Machines for the distinction between combinations and permutations.

This shows that there are only 6 initial setups of the objects.

Variations of the MHD

Several variants of the MHD can help to render its premises and solution more clear. Thus, use what you have learned above to adapt the simulation in the following ways:

- More doors: Extend your simulation to a version of the problem with 100 doors. After the player picks an initial door, Monty opens 98 of the other doors (all revealing goats) before you need to decide whether to stay or switch. How does this affect the winning probabilities for staying vs. switching?

Hint: As it is impractical to represent a game’s setup with 100 columns, this version requires a more abstract representation of the environment and/or Monty Hall’s actions. (For instance, the setup could be expressed by merely noting the car’s location…)

-

Monty’s mind: The classic version of the problem crucially assumes that Monty knows the door with the prize and curates the choices of the available doors correspondingly. Determine the player’s winning probabilities for staying vs. switching for the following alternative versions of the game:

Monty first: The host first opens a door to reveal a goat, before the player selects one of the closed doors as her initial choice. The player then is offered an option to switch. Should she do so?

Ignorant Monty: The host does not know the car’s location and opens a random door to reveal either a goat or the car. Should the player switch whenever a goat is revealed?

Angelic Monty: The host offers the option to switch only when the player has chosen incorrectly. What and how successful is the player’s best strategy?

Monty from Hell: The host offers the option to switch only when the player’s initial choice is the winning door. What and how successful is the player’s best strategy?

Additional variations

Many additional versions of the MHD exist. For instance, the three prisoners problem (see below), Bertrand’s box paradox, and the boy or girl paradox) (see Bar-Hillel & Falk, 1982 for psychological examinations.)

20.5.8 Ham or cheese sandwiches

There are two lunch boxes, each containing two sandwiches:

- Box A contains one ham sandwich and one cheese sandwich;

- Box B contains two cheese sandwiches.

You randomly choose a box and randomly draw one sandwich, which happens to be a cheese sandwich.

- What is the probability of the other sandwich in the same box also being a cheese sandwich?

- What is the probability of the first sandwich being a cheese sandwich?

Hint: We can try solving this problem in two ways:

Pseudo-sampling: Translate its premises into frequencies of a population of events and enumerate its possible cases; or

Actual sampling: Sample instances from some distribution (e.g., actually drawing boxes and sandwiches with a \(p = 50\%\)).

In either case, we collect all corresponding cases (e.g., as the elements of vectors) and then can index (or filter) some output data to determine our result.

Note

As we represented cases by vectors, our simulation needed no loops.

The variability of our results depends on the size of \(N\). Larger values take longer, but yield more robust results.

This problem differs from the Monty-Hall dilemma (above) insofar as you actually gain new information by learning that you have initially drawn a cheese sandwich. By contrast, you do not gain new information by Monty revealing a goat (assuming that he knows the location of the prize). (See also The prisoner’s dilemma puzzle below.)

20.5.9 The sock drawer puzzle

The puzzles from the classic book Fifty challenging problems in probability (by Mosteller, 1965) can be solved analytically (Figure 20.6 shows the book’s cover). However, if our mastery of the probability calculus is a bit rusty, we can also find solutions by simulation.

Figure 20.6: Fifty challenging problems in probability (by Mosteller, 1965) is a slim book, but can provide many hours of frustration or delight.

The problem

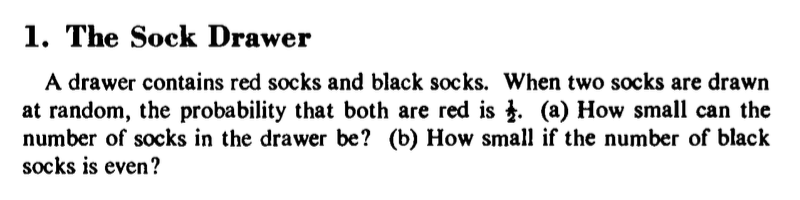

- Use a simulation for solving The sock drawer puzzle (by Mosteller, 1965, p. 1):

Figure 20.7: The sock drawer puzzle (i.e., Puzzle 1 by Mosteller, 1965).

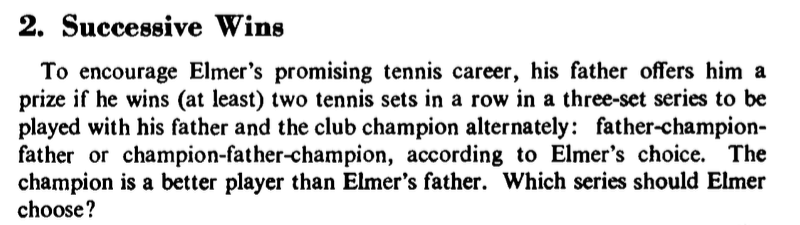

- Use an adapted simulation for solving the following variation of the puzzle:

A drawer contains red socks and black socks.

When two socks are drawn at random, the probability that both are of the same color is \(\frac{1}{2}\).

(c) How small can the number of socks in the drawer be?

(d) What are the first solutions with an odd number of red socks and an even number of black socks?

Note that the question in (c) is identical to (a), but the second sentence of the introduction has slightly changed: Rather than asking for a pair of red socks, we now require that the probability for both socks of a pair having the same color is \(\frac{1}{2}\).

1. Solution to (a)

The following preliminary thoughts help to explicate the problem and discover some constraints:

The puzzle demands that a pair of socks is drawn. Thus, socks in the drawer are drawn at random (e.g., in the dark) and without replacement (i.e., we cannot draw the same sock twice).

Given that there are only two colors of socks (red

r, vs. black,b), drawing a pair of socks can generally yield four possible outcomes:bb,br,rb, andrr. The eventsbbandrrcan only occur if there are at least two socks of the corresponding color in the drawer.For the probability of the target event

rrto be \(\frac{1}{2} = 50\%\), there must be more red socks than black socks in the drawer. Otherwise, the number of events with one or two black socks would outnumber our target eventbb.For the target event of

rrto occur, there need to be at least two red socks (i.e., \(|r| \geq 2\)).The simplest conceivable composition of the drawer is

rrb.

A sampling solution:

- Drawing without replacement in R: Use the

sample()function

# For a given drawer:

drawer <- c("r", "r", "b")

# drawing once:

sample(x = drawer, size = 2, replace = FALSE)

#> [1] "r" "b"

# drawing N times:

N <- 10000

tr <- 0 # count target events "two red"

# loop:

for (i in 1:N){

s <- sample(x = drawer, size = 2, replace = FALSE)

if (sum(s == "r") == 2){ # criterion: 2 red socks

tr <- tr + 1

}

}

# probability of tr:

tr/N

#> [1] 0.3346Target event needs to count the number of red socks (i.e., number of times of drawing the element "r"):

Test in R: sum(s == "r") must equal 2.

Create a function that abstracts (or encapsulates) some steps:

Notes

In this function, the size of each draw (

size = 2), our event of interest (sum(s == "r")), and the frequency of this event (2) were hard-encoded into the function. If we ever needed more flexibility, they could be provided as additional arguments.The function returns a probability (i.e., a number between 0 and 1). The accuracy of its value depends on the number of samples

N. Higher samples will yield more stable estimates of this value.

Some initial plausibility checks:

# Check:

draw_socks(c("r", "g", "b"))

#> [1] 0

draw_socks(c("b", "b", "r"))

#> [1] 0

draw_socks(c("b", "r"))

#> [1] 0

draw_socks(c("r", "r"))

#> [1] 1

## Note errors for:

# draw_socks(c("r"))

# draw_socks(c("b"))Note that varying the value of N varies the variability of our estimate:

drawer <- c("r", "r", "b")

draw_socks(drawer, 1) # 10^0

draw_socks(drawer, 10) # 10^1

draw_socks(drawer, 100) # 10^2

draw_socks(drawer, 1000) # 10^3

draw_socks(drawer, 10^6) # beware of computation timesNote that the range of values theoretically possible can vary between 0 and 1.

However, whereas it actually varies between those extremes for N = 1, the chance of repeatedly obtaining such an extreme outcome decreases with increasing values of N.

Thus, our estimates get more stable as N increases.

Note also that there is a considerable trade-off between accuracy and speed (due to using a loop for sampling events). Using values beyond N = 10^6 takes considerably longer, without changing our estimates much.

Thus, we face a classic dilemma between being “quick and noisy” vs. being “slow and accurate”.

What is the lowest value of N that yields a reasonable accuracy?

This raises the question: What is “reasonable”? Actually, this is one of the most important questions in many contexts of data analysis. In the present context, our target probability is specified as the fraction \(\frac{1}{2}\). Does this mean that we would only consider a probability value of exactly \(0.5\) as accurate, but any nearby values (e.g., \(0.495\) or \(.503\)) as incaccurate?

Realistically, we want some reasonable stability of the 2nd decimal digit (i.e., the percentage value):

round(draw_socks(drawer, 10^4), 2) # still varies on the 2nd digit

round(draw_socks(drawer, 10^5), 2) # hardly varies any moreOur tests show that a default value of \(N = 10^5\) is good enough for (or “satisfies”) our present purposes.

Now we can “solve” the problem for specific drawer combinations. We will initially use a pretty coarse estimate (quick and noisy) and increase accuracy if we reach a promising range:

draw_socks(c("r", "r", "b"), 10^3)

#> [1] 0.335

draw_socks(c("r", "r", "b", "b"), 10^3)

#> [1] 0.171

draw_socks(c("r", "r", "r", "b"), 10^3)

#> [1] 0.475The last one seems pretty close. Let’s zoom in by increasing our accuracy:

draw_socks(c("r", "r", "r", "b"), 10^5) # varies around target value of 0.501. Solution to (b)

Part (b) imposes an additional constraint on the drawer composition: The number of black socks must be even (which excludes our first solution).

Automating the drawer composition by making it a function of two parameters:

# Two numeric parameters:

n_r <- 2 # number of red socks

n_b <- 1 # number of black socks

# Function to make drawer (in random order):

make_drawer <- function(n_r, n_b){

drawer <- c(rep("r", n_r), rep("b", n_b)) # ordered drawer

sample(drawer, size = n_r + n_b) # a random permutation

}

# Checks:

make_drawer(4, 0)

#> [1] "r" "r" "r" "r"

make_drawer(3, 1)

#> [1] "r" "r" "r" "b"

make_drawer(2, 2)

#> [1] "r" "b" "r" "b"Note that the sample() function in make_drawer() only creates a random permutation of the drawer elements.

If we trust that the sample() function in draw_socks() draws randomly, we could omit permuting the drawer contents.

Combine both function to create a drawer and estimate the probability for N draws from it in one function:

estimate_p <- function(n_r, n_b, N){

drawer <- make_drawer(n_r, n_b)

draw_socks(drawer = drawer, N = N)

}

# Checks:

estimate_p(1, 1, 10)

#> [1] 0