Some Diagnostics for MCMC

- Consider a Metropolis algorithm where given current state

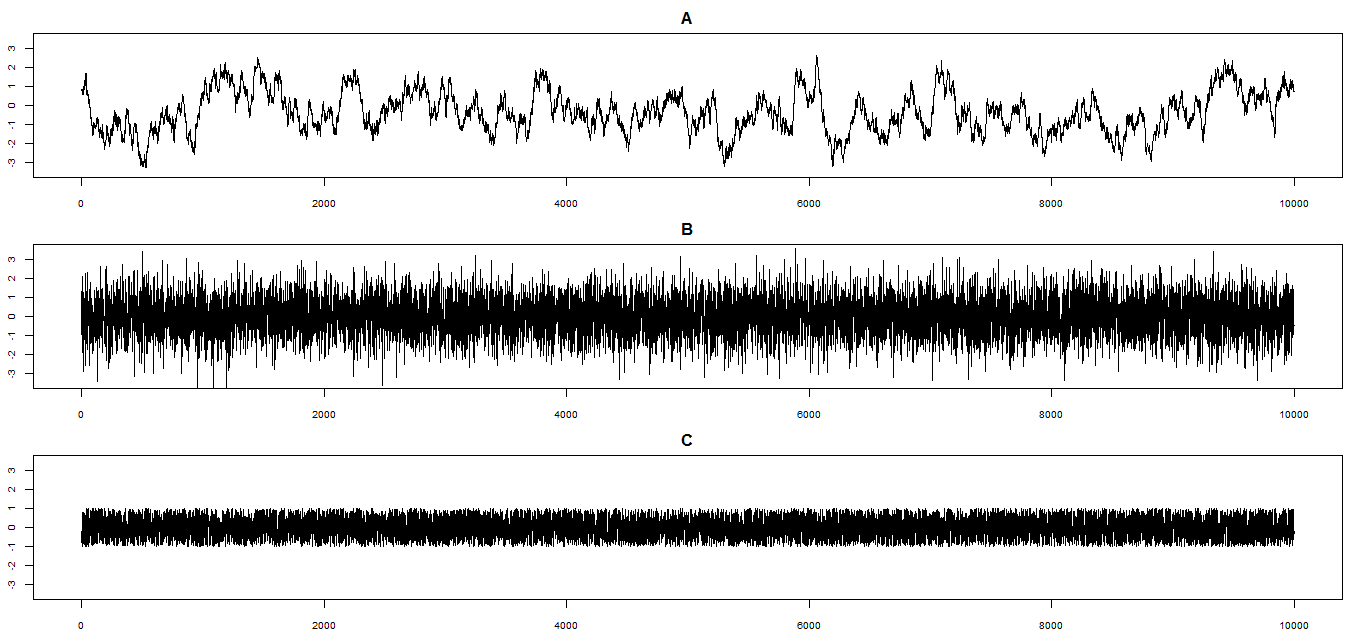

- The following are trace plots from three different MCMC algorithms for simulating values from a N(0, 1) posterior distribution; that is, the target distribution is N(0, 1). Each algorithm was run for 10,000 steps after a warm-up (burn-in) period of 1,000 steps. Note: all plots are on the same scale, so pay attention to the scale. Use the plots to answer the following questions. For each question, choose a plot and explain your reasoning.

- Which plot corresponds to the smallest effective sample size?

- Which plot corresponds to the most UNrepresentative sample?

- Which plot corresponds to the algorithm that best achieves its goal?
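To build intuition for the questions above, here is a minimal sketch of how effective sample size can depend on the proposal. It assumes a random-walk Metropolis sampler with a Normal proposal targeting N(0, 1), and a crude ESS estimate of the form N / (1 + 2 × sum of autocorrelations). The function names `metropolis_normal` and `effective_sample_size` and the three proposal standard deviations are hypothetical choices for illustration. They are not the three algorithms behind the trace plots.

```python
import numpy as np


def metropolis_normal(n_steps, proposal_sd, warmup=1000, start=0.0, seed=0):
    """Random-walk Metropolis targeting N(0, 1); returns draws after warm-up."""
    rng = np.random.default_rng(seed)
    x = start
    draws = np.empty(n_steps + warmup)
    for t in range(n_steps + warmup):
        proposal = x + rng.normal(0.0, proposal_sd)
        # Log acceptance ratio for a N(0, 1) target with a symmetric proposal.
        log_ratio = 0.5 * (x**2 - proposal**2)
        if np.log(rng.uniform()) < log_ratio:
            x = proposal
        draws[t] = x  # a rejected proposal repeats the current state
    return draws[warmup:]


def effective_sample_size(draws, max_lag=1000):
    """Crude ESS estimate: N / (1 + 2 * sum of positive-lag autocorrelations).

    The sum is truncated at the first non-positive sample autocorrelation
    (or at max_lag), which is a simplifying assumption of this sketch.
    """
    x = draws - draws.mean()
    n = len(x)
    var = np.dot(x, x) / n
    rho_sum = 0.0
    for k in range(1, min(max_lag, n - 1) + 1):
        rho = np.dot(x[:-k], x[k:]) / (n * var)
        if rho <= 0:
            break
        rho_sum += rho
    return n / (1.0 + 2.0 * rho_sum)


# Hypothetical proposal scales: very small, moderate, very large.
for sd in (0.05, 2.5, 50.0):
    chain = metropolis_normal(10_000, sd)
    print(f"proposal sd = {sd:5.2f}   estimated ESS = {effective_sample_size(chain):8.1f}")
```

Plotting each `chain` against its step index gives trace plots that can be compared with the ones above. A chain that moves in tiny steps, or one that rarely accepts a proposal, tends to show strong autocorrelation and therefore a small estimated ESS.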

- You use a Metropolis-Hastings algorithm to simulate from the probability distribution

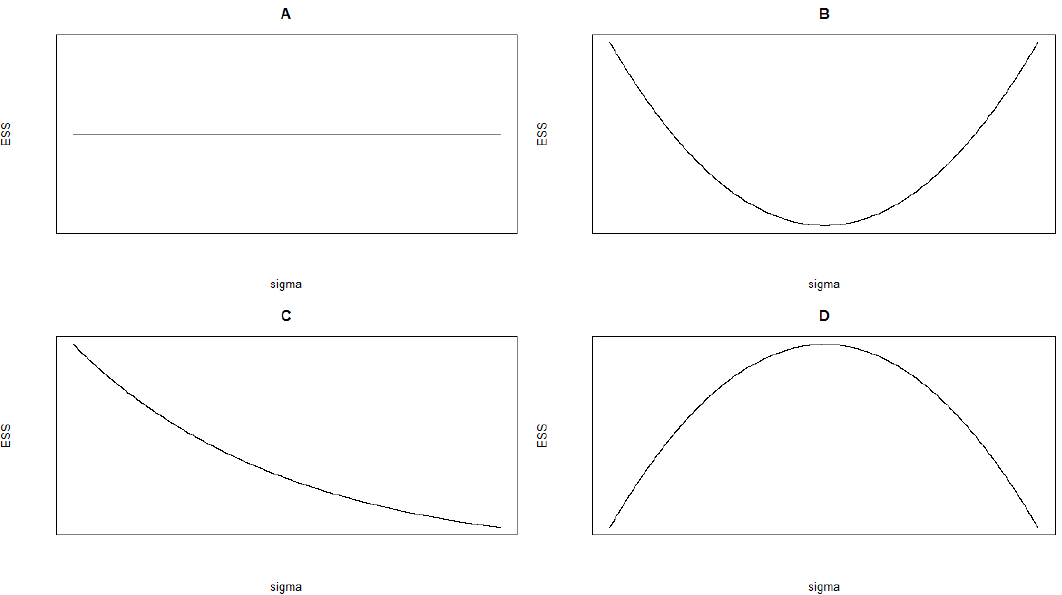

Suppose that for each of the following three proposal chains, a Metropolis-Hastings algorithm is used to run a Markov chain.

All other things being equal, for which of the three cases would the effective sample size (ESS) be smallest? Explain your reasoning.

All other things being equal, for which of the three cases would the shortest “warm-up” (“burn-in”) period be required? Explain your reasoning.