33 Correlation and regression

So far, you have learnt about the research process, including analysing data using confidence intervals and conducting hypothesis tests. In this chapter, you will learn to:

- produce confidence intervals for correlation coefficients.

- conduct hypothesis tests for correlation coefficients.

- produce and interpret linear regression equations.

- conduct hypothesis tests for the slope of a regression line.

- produce confidence intervals for the slope of a regression line.

- determine whether the conditions for using these methods apply in a given situation.

33.1 Introduction: lifespan of dog breeds

So far, RQs about single variables (descriptive RQs) and RQs for comparisons (relational and repeated-measures RQs) have been studied. In this chapter, the relationship between two quantitative variables is studied (correlational RQs) when that relationship is approximately linear. The strength of the relationship (correlation) and the nature of that relationship (regression) are discussed.

For this chapter, consider this RQ:

In dog breeds, is there a relationship between breed-weight and lifespan, in general?

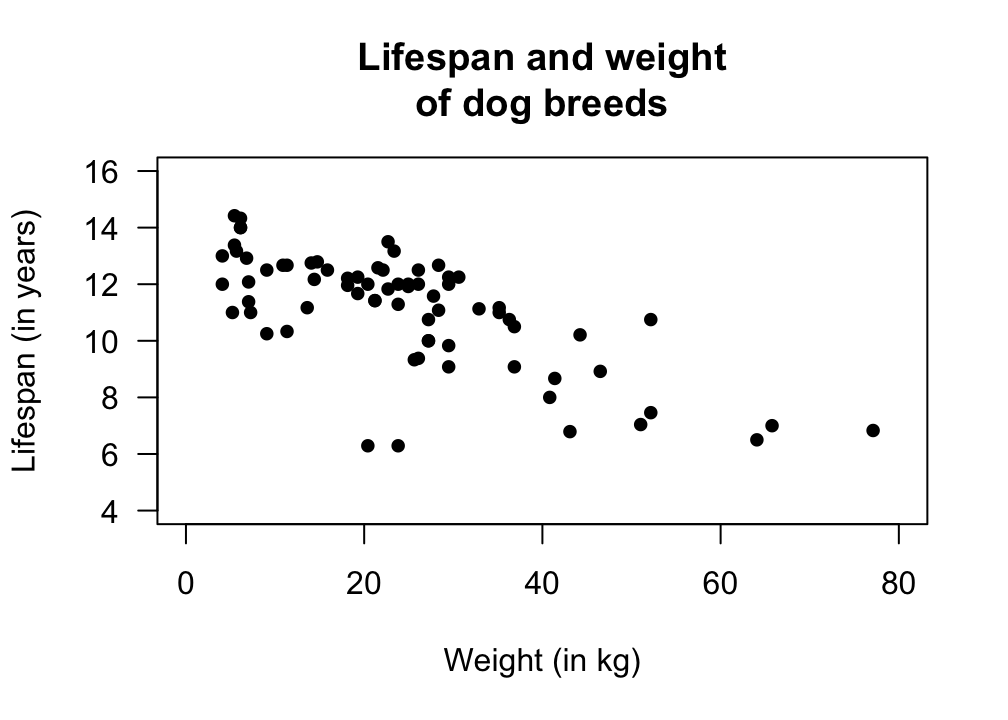

da Silva and Cross (2023) recorded the lifespan and weight of dog breeds, for at least \(50\) dogs from each breed (da Silva and Cross 2022); see Fig. 33.1. The dog breed is the unit of analysis; the individual dog is the unit of observation. The data comprises two quantitative variables (Fig. 33.2, left panel).

FIGURE 33.1: The dog-breed data.

FIGURE 33.2: Lifespan of dog breeds against breed-weight. Left: scatterplot. Right: correlation output.

Knowing the breed-weight provides some information about the lifespan: a moderate relationship between the variables seems evident. The relationship also seems somewhat linear. The Pearson correlation coefficient (Fig. 33.2, right panel) is \(r = -0.712\), so \(R^2 = (-0.712) ^2 = 50.7\)%. This means that knowing the breed-weight reduces the unexplained variation in lifespan by about \(50.7\)%.

Recall that the sample correlation coefficient is denoted by \(r\), and the population correlation coefficient is denoted by \(\rho\).

33.2 Correlation: CIs and tests for \(\rho\)

33.2.1 Correlation: CIs for \(\rho\)

In the dog-breed study, only one of the countless possible samples of dogs has been studied, and the value of \(r\) (an estimate of \(\rho\), the parameter) will vary from sample to sample. That is, the value of \(r\) has a sampling distribution, and sampling variation exists. The sampling distribution of \(r\), however, does not have a normal distribution, so CIs for \(\rho\) will be taken directly from software output (Fig. 33.2, right panel). For the dog-breed data, the \(95\)% CI for \(\rho\) is from \(-0.576\) to \(-0.809\). Note that this CI is not symmetrical: the value of \(r\) is not halfway between these limits. We write:

For dog breeds, the correlation coefficient between breed lifespan and breed-weight is \(-0.712\), with a \(95\)% CI from \(-0.576\) to \(-0.809\) (\(n = 73\)).

In other words, a population with a correlation coefficient \(\rho\) between \(-0.576\) and \(-0.809\) could reasonably have produced a sample of size \(n = 73\) with a sample correlation coefficient of \(r = -0.712\).
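The chapter takes the CI for \(\rho\) from software. A common way software computes this interval is the Fisher \(z\)-transformation; whether the software used for Fig. 33.2 does exactly this is an assumption. The sketch below transforms \(r\), builds a symmetric interval on the transformed scale, and then transforms the limits back, which is why the final CI is not symmetric about \(r\).

```python
import math
from statistics import NormalDist


def fisher_z_ci(r, n, level=0.95):
    """Approximate CI for the population correlation rho (Fisher z method).

    atanh(r) has an approximate normal sampling distribution with standard
    error 1/sqrt(n - 3). The limits are back-transformed with tanh, so the
    resulting interval is not symmetric about r.
    """
    z = math.atanh(r)
    se = 1.0 / math.sqrt(n - 3)
    multiplier = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for 95%
    lower = math.tanh(z - multiplier * se)
    upper = math.tanh(z + multiplier * se)
    return lower, upper


lo, hi = fisher_z_ci(-0.712, 73)          # dog-breed data
print(f"95% CI for rho: {lo:.3f} to {hi:.3f}")  # 95% CI for rho: -0.809 to -0.576
```

With \(r = -0.712\) and \(n = 73\), this reproduces the limits quoted from the software output.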

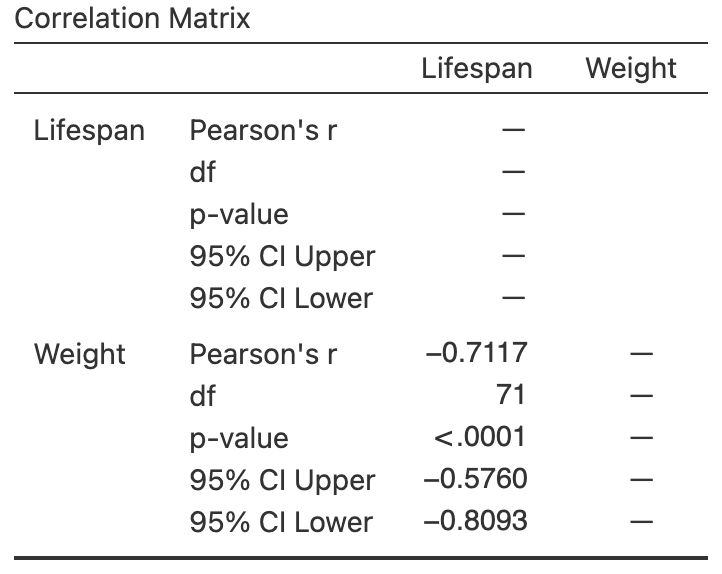

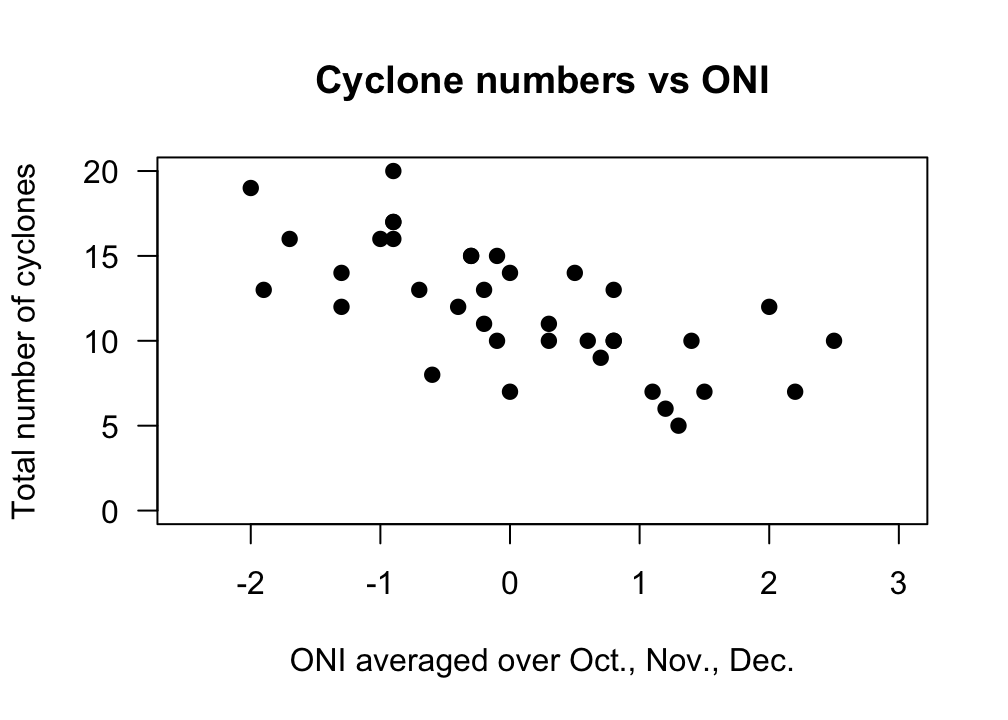

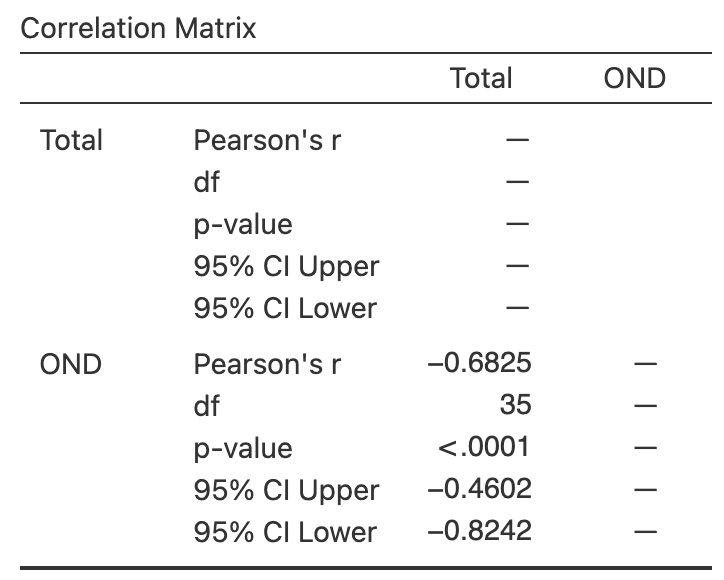

Example 33.1 (Correlation) The relationship between the number of cyclones \(y\) in the Australian region each year from 1969 to 2005, and a unitless climatological index called the Ocean Niño Index (ONI, \(x\)), averaged over October, November and December, is shown in Fig. 33.3 (left panel) (P. K. Dunn and Smyth 2018).

The relationship has a negative direction, so the value of \(r\) is negative. From the software output, \(r = -0.683\) with a \(95\)% CI from \(-0.460\) to \(-0.824\).

FIGURE 33.3: The number of cyclones in the Australian region each year from 1969 to 2005, and the ONI averaged over October, November, December. Left: scatterplot. Right: software output.

33.2.2 Correlation: hypothesis test for \(\rho\)

A hypothesis test can also be conducted regarding \(\rho\), the Pearson correlation coefficient in the population. The null hypothesis is, as always, the 'no difference, no change, no relationship' position, which in this context is:

- \(H_0\): \(\rho = 0\).

Clearly, the sample correlation coefficient \(r\) for the data is not zero, and the RQ is effectively asking if sampling variation is the reason for this discrepancy between \(r\) and the parameter \(\rho\).

The RQ (as given in Sect. 33.1) is two-tailed, so the alternative hypothesis is:

- \(H_1\): \(\rho \ne 0\) (two-tailed test, based on the RQ).

As usual, initially assume that \(\rho = 0\) (from \(H_0\)), then describe what values of \(r\) could be expected using the sampling distribution, under that assumption, across all possible samples (sampling variation). Then the observed value of \(r\) is compared to the values expected through sampling variation to determine if the value of \(r\) supports or contradicts the assumption.

For a correlation coefficient, the sampling distribution of \(r\) does not have a normal distribution. However, the output (Fig. 33.2, right panel) contains the relevant \(P\)-value for the test: less than \(0.0001\). Very strong evidence exists to support \(H_1\) (that the correlation in the population is not zero).

We write:

The sample presents very strong evidence (two-tailed \(P < 0.0001\)) that the average lifespan is associated with the breed-weight (\(r = -0.712\) with \(95\)% CI from \(-0.576\) to \(-0.809\); \(n = 73\)) in the population.

Notice the three features of writing conclusions again: an answer to the RQ, evidence to support the conclusion, and some sample summary information.

If the evidence suggests that the correlation coefficient is not zero (in the population), this does not necessarily mean a strong correlation exists. The correlation may be weak in the population (as estimated by the value of \(r\)), but evidence exists that the correlation is not zero in the population.

That is, the test is about statistical significance, not practical importance.
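The \(P\)-value above is read from software. The sketch below shows one standard way of obtaining it, assuming the software uses the usual statistic \(t = r\sqrt{n-2}/\sqrt{1-r^2}\), which has a \(t\)-distribution with \(n-2\) degrees of freedom when \(\rho = 0\) (and the conditions in Sect. 33.5 hold). To stay within Python's standard library, the two-tailed tail area is computed through the regularised incomplete beta function, using the continued-fraction method described in Numerical Recipes; in practice, a statistics package would be used instead. The second call uses a hypothetical weak correlation (\(r = 0.1\)) with a hypothetical large sample (\(n = 1000\)) to illustrate that a small \(P\)-value need not mean a strong correlation.

```python
import math


def _betacf(a, b, x, max_iter=300, eps=1e-14):
    """Continued fraction for the regularised incomplete beta function,
    evaluated by the modified Lentz method (as described in Numerical Recipes)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        even = m * (b - m) * x / ((qam + m2) * (a + m2))
        odd = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        for aa in (even, odd):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            delta = d * c
            h *= delta
        if abs(delta - 1.0) < eps:  # odd-step factor is close to 1: converged
            break
    return h


def reg_inc_beta(a, b, x):
    """Regularised incomplete beta function I_x(a, b) for 0 <= x <= 1."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    # Symmetry I_x(a, b) = 1 - I_{1-x}(b, a) gives faster convergence here
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def t_two_tailed_p(t, df):
    """Two-tailed P-value P(|T| >= |t|) for a t-distribution with df degrees of freedom."""
    return reg_inc_beta(df / 2.0, 0.5, df / (df + t * t))


def correlation_t_test(r, n):
    """t-statistic and two-tailed P-value for H0: rho = 0."""
    df = n - 2
    t = r * math.sqrt(df) / math.sqrt(1.0 - r * r)
    return t, t_two_tailed_p(t, df)


t_dog, p_dog = correlation_t_test(-0.712, 73)    # dog-breed data
t_weak, p_weak = correlation_t_test(0.1, 1000)   # hypothetical weak correlation
print(f"dog breeds:       t = {t_dog:.2f}, P = {p_dog:.1e}")
print(f"weak r, n = 1000: t = {t_weak:.2f}, P = {p_weak:.4f}")
```

For the dog-breed data, \(t \approx -8.54\) and \(P\) is far below \(0.0001\). For the hypothetical weak correlation, \(P\) is also below \(0.01\), even though \(R^2 = 0.1^2 = 1\)%: statistically significant, but hardly important in practice.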

Example 33.2 (Correlation) The relationship between the number of cyclones \(y\) in the Australian region each year from 1969 to 2005, and the ONI \(x\) is shown in Fig. 33.3 (left panel). To test \[ \text{$H_0$: $\rho = 0$} \qquad\text{ against}\qquad \text{$H_1$: $\rho \ne 0$}, \] software reports that \(P < 0.0001\) (Fig. 33.3, right panel). There is very strong evidence of a relationship between the number of cyclones in the Australian region and the ONI (averaged over October, November and December).

33.3 Regression

33.3.1 Introducing regression

Correlation measures the strength and direction of the linear relationship between two quantitative variables \(x\) (an explanatory variable) and \(y\) (a response variable). Sometimes, however, describing the form of that relationship with an equation is also useful. This is called regression.

The regression relationship is described mathematically using an equation, and allows us to:

- Predict the mean value of \(y\) from a given value of \(x\) (Sect. 33.3.4); and

- Understand the relationship between \(x\) and \(y\) (Sect. 33.3.5).

An example of a linear regression equation, describing the linear relationship between the observed values of an explanatory variable \(x\) and the observed values of a response variable \(y\), is \[\begin{equation} \hat{y} = -4 + (2\times x), \qquad\text{usually written}\qquad \hat{y} = -4 + 2x. \tag{33.1} \end{equation}\] The notation \(\hat{y}\) refers to the mean of all the \(y\)-values that could be observed for some given value of \(x\). That is, for some value of \(x\), many values of \(y\) could be observed, and \(\hat{y}\) is the value that the regression equation predicts as the mean of all those possible values. This equation describes the connection between the values of \(x\) and the corresponding average values of \(y\).

\(y\) refers to the values of the response variable observed from individuals. \(\hat{y}\) refers to the predicted mean value of \(y\) for given values of \(x\).

\(\hat{y}\) is pronounced as 'why-hat'; the 'caret' above the \(y\) is called a 'hat'.

More generally, the equation of a straight line is \[ \hat{y} = b_0 + (b_1 \times x), \qquad\text{usually written}\qquad \hat{y} = b_0 + b_1 x, \] where \(b_0\) and \(b_1\) are (unknown) numbers estimated from sample data. Notice that \(b_1\) is the number multiplied by \(x\). In Eq. (33.1), \(b_0 = -4\) and \(b_1 = 2\).

Example 33.3 (Regression equations) A report on the growth of Australian children (Pfizer Australia 2008) found an approximately linear relationship between the age \(x\) (in years) of girls aged between four and seven and their height \(y\) (in cm). The regression equation was approximately \[ \hat{y} = 73 + 7x. \] The regression equation is the same if written as \[ \hat{y} = 7x + 73. \] In both cases, \(b_0 = 73\) and \(b_1 = 7\). This regression equation describes the connection between ages \(x\) and heights \(y\) for girls between four and seven years of age.

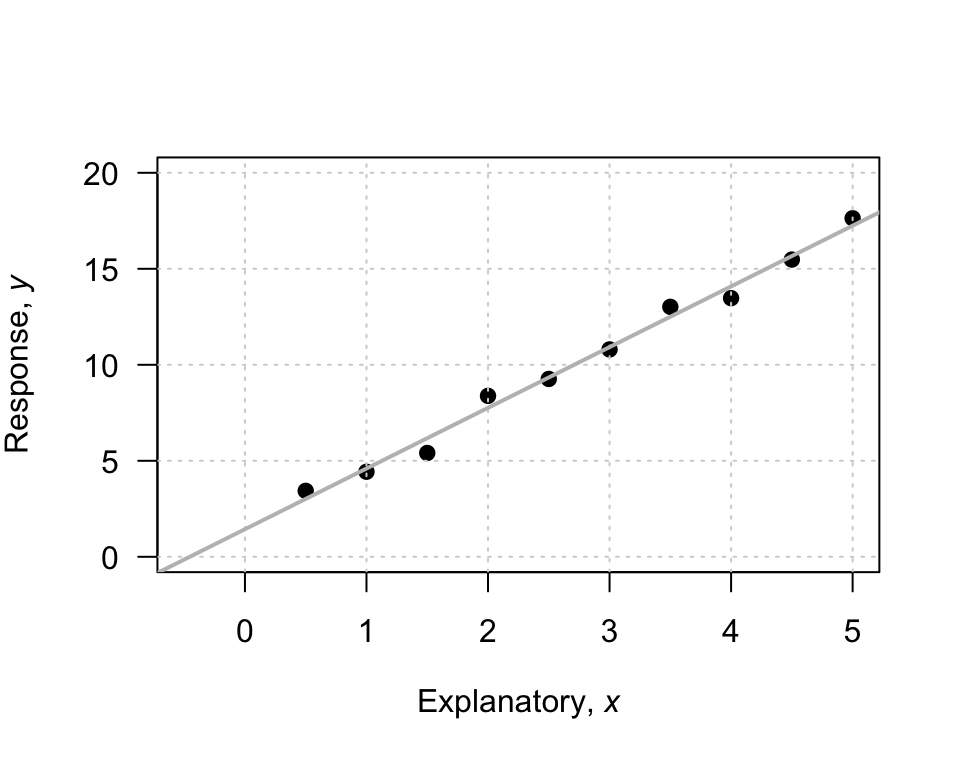

33.3.2 Reviewing linear equations

To introduce, or revise, the idea of a linear equation, consider the (artificial) data in Fig. 33.4, with an explanatory variable \(x\) and a response variable \(y\). A sensible line that seems to capture the relationship between \(x\) and \(y\) has been drawn on the graph. (You may have drawn a slightly different, but similar, line.) The line describes the predicted mean values of \(y\) for various values of \(x\). The relationship is positive and linear.

A regression equation specifies the line. In the regression equation \(\hat{y} = b_0 + b_1 x\), the numbers \(b_0\) and \(b_1\) are called regression coefficients, where

- \(b_0\) is the intercept (or the \(y\)-intercept), whose value corresponds to the predicted mean value of \(y\) when \(x = 0\).

- \(b_1\) is the slope, whose value measures how much the value of \(y\) changes, on average, when the value of \(x\) increases by \(1\).

We will use software to find the values of \(b_0\) and \(b_1\) (as the formulas are tedious to use). However, a rough approximation of the values of \(b_0\) and \(b_1\) can be obtained using the rough straight line drawn on the scatterplot (Fig. 33.4).

A rough approximation of the value of the intercept \(b_0\) is the value of \(\hat{y}\) when \(x = 0\), from the drawn line. When \(x = 0\), the regression line suggests the value of \(\hat{y}\) is about \(2\); that is, the value of \(b_0\) is approximately \(2\).

A rough approximation of the slope \(b_1\) is found using

\[\begin{equation} \frac{\text{Change in $y$}}{\text{Corresponding INCREASE in $x$}} = \frac{\text{rise}}{\text{run}} \tag{33.2} \end{equation}\]

from the drawn line. This approximation of the slope is the change in the value of \(y\) (the 'rise') divided by the corresponding increase in the value of \(x\) (the 'run').

FIGURE 33.4: An example scatterplot.

Consider what happens on the drawn line in Fig. 33.4 when the value of \(x\) increases from \(1\) to \(5\) (an increase of \(5 - 1 = 4\)). The corresponding value of \(y\) changes from about \(5\) to about \(17\), a change (specifically, an increase) of \(17 - 5 = 12\). So, using Eq. (33.2), \[ \frac{\text{rise}}{\text{run}} = \frac{17 - 5}{5 - 1} = \frac{12}{4} = 3. \] The value of \(b_1\) is about \(3\). When using rise-over-run, better guesses for \(b_1\) are found when using one value of \(x\) near the left side of the scatterplot, and another value of \(x\) near the right side of the scatterplot, but any two values can be used.

Combining the rough guesses for \(b_0\) and \(b_1\), the regression line is approximately \(\hat{y} = 2 + (3\times x)\), usually written \[ \hat{y} = 2 + 3x. \]
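The rise-over-run calculation of Eq. (33.2) can be sketched as a small Python function. The points \((1, 5)\) and \((5, 17)\) are the rough readings from the drawn line used above; extending the line through them back to \(x = 0\) gives a rough intercept, which here agrees with the value of about \(2\) read off the graph. The function names are illustrative only.

```python
def rise_over_run(p1, p2):
    """Rough slope b1 from two points (x, y) read off a hand-drawn line, Eq. (33.2)."""
    (x1, y1), (x2, y2) = p1, p2
    return (y2 - y1) / (x2 - x1)


def rough_line(p1, p2):
    """Rough (b0, b1) for the line through two points; b0 is where the line meets x = 0."""
    b1 = rise_over_run(p1, p2)
    x1, y1 = p1
    b0 = y1 - b1 * x1
    return b0, b1


# Points read (roughly) off the drawn line in Fig. 33.4
b0, b1 = rough_line((1, 5), (5, 17))
print(f"y-hat = {b0:g} + {b1:g}x")  # y-hat = 2 + 3x
```

Reading the points off a different, but similar, line would give slightly different values, just as with the pencil-and-paper method.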

The regression equation has \(\hat{y}\) (not \(y\)) on the left-hand side. That is, the equation predicts the mean values of \(y\), which may not be equal to any of the observed values (which are denoted by \(y\)).

A 'good' regression equation would produce predicted values \(\hat{y}\) close to the observed values \(y\); that is, the line passes close to each point on the scatterplot.

The intercept \(b_0\) has the same measurement units as the response variable. The measurement units for the slope \(b_1\) are the 'measurement units of the response variable', per 'measurement units of the explanatory variable'.

Example 33.4 (Measurement units of regression parameters) In Example 33.3, the regression line for the relationship between the age of Australian girls in years \(x\) and their height (in cm) \(y\) was \(\hat{y} = 73 + 7x\) (for girls aged between four and seven years).

In the equation, the intercept is \(b_0 = 73\,\text{cm}\) and the slope is \(b_1 = 7\,\text{cm}\)/y (the growth rate).

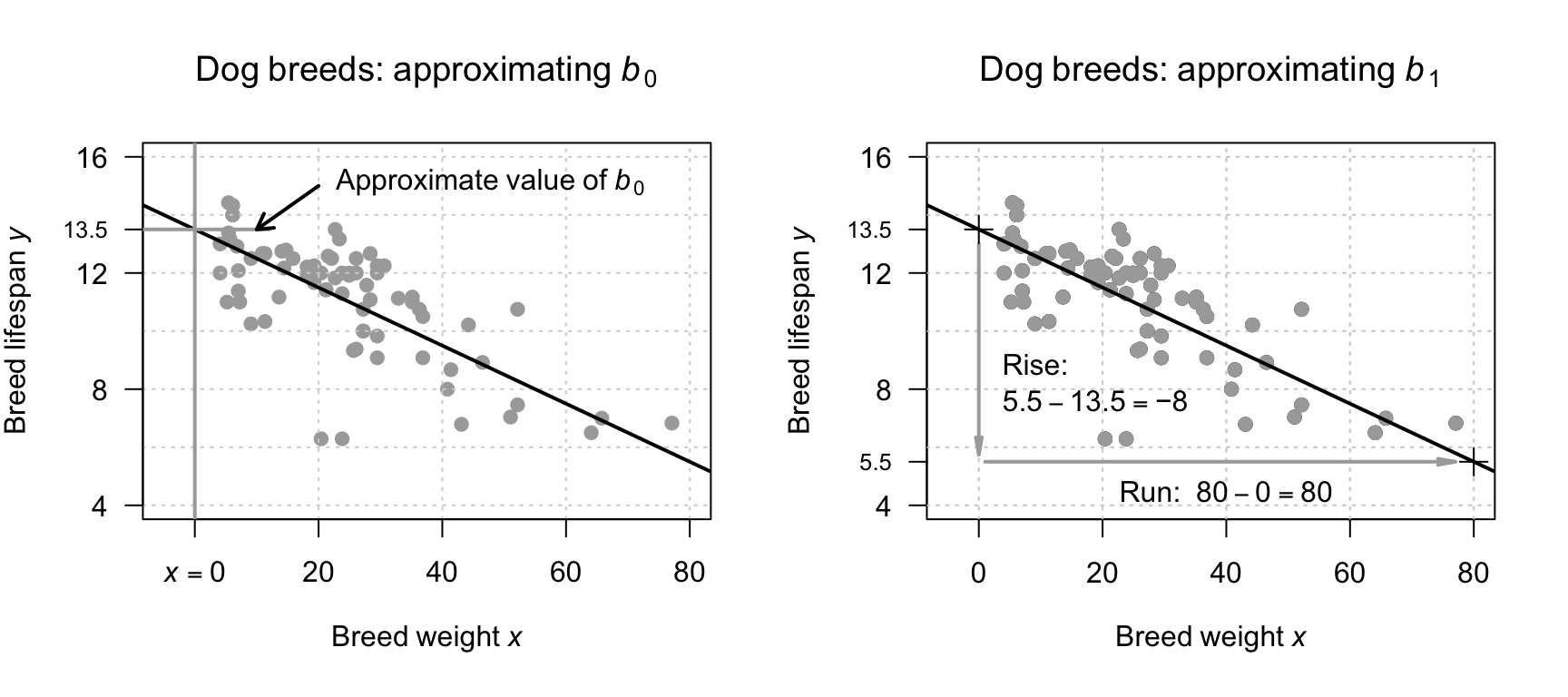

Example 33.5 (A rough approximation of the regression equation) For the dog-breed data (Fig. 33.2, left panel), a rough estimate of the regression line can be drawn on a scatterplot to estimate \(b_0\) and \(b_1\) (Fig. 33.5). The estimate of \(b_0\) (the value of \(\hat{y}\) when \(x = 0\)) is roughly \(13.5\,\text{y}\).

The estimate of \(b_1\) can be found using the rise-over-run idea. When \(x = 0\), the value of \(\hat{y}\) (according to the drawn line) is about \(13.5\). At the other extreme of the plot, where \(x = 80\), the value of \(\hat{y}\) is about \(5.5\). (Any two points on the line can be used, but using one point at each end of the line gives better guesses of the slope.) So, as \(x\) increases from \(0\) to about \(80\), the value of \(\hat{y}\) reduces from about \(13.5\) to about \(5.5\), a change of about \(-8\). That is, for a 'run' of \(80 - 0 = 80\), the 'rise' is \(5.5 - 13.5 = -8\) (i.e., a drop of \(8\)), and so a rough estimate of the slope is \(-8/80 = -0.1\,\text{y}\)/kg. (The relationship is negative, so the slope is negative.)

The rough guess of the regression line is therefore \[ \hat{y} = 13.5 - 0.1x, \] where \(x\) is the breed-weight (in kg), and \(y\) is lifespan (in years). The rough guess of the intercept \(b_0\) is \(13.5\,\text{y}\), while the rough guess of the slope \(b_1\) is \(-0.1\,\text{y}\)/kg.

FIGURE 33.5: Obtaining rough guesses for the regression equation for the dog-breed data. Left: approximating \(b_0\). Right: approximating \(b_1\) using rise-over-run. The plus signs on the right plot indicate the points used to estimate the slope.


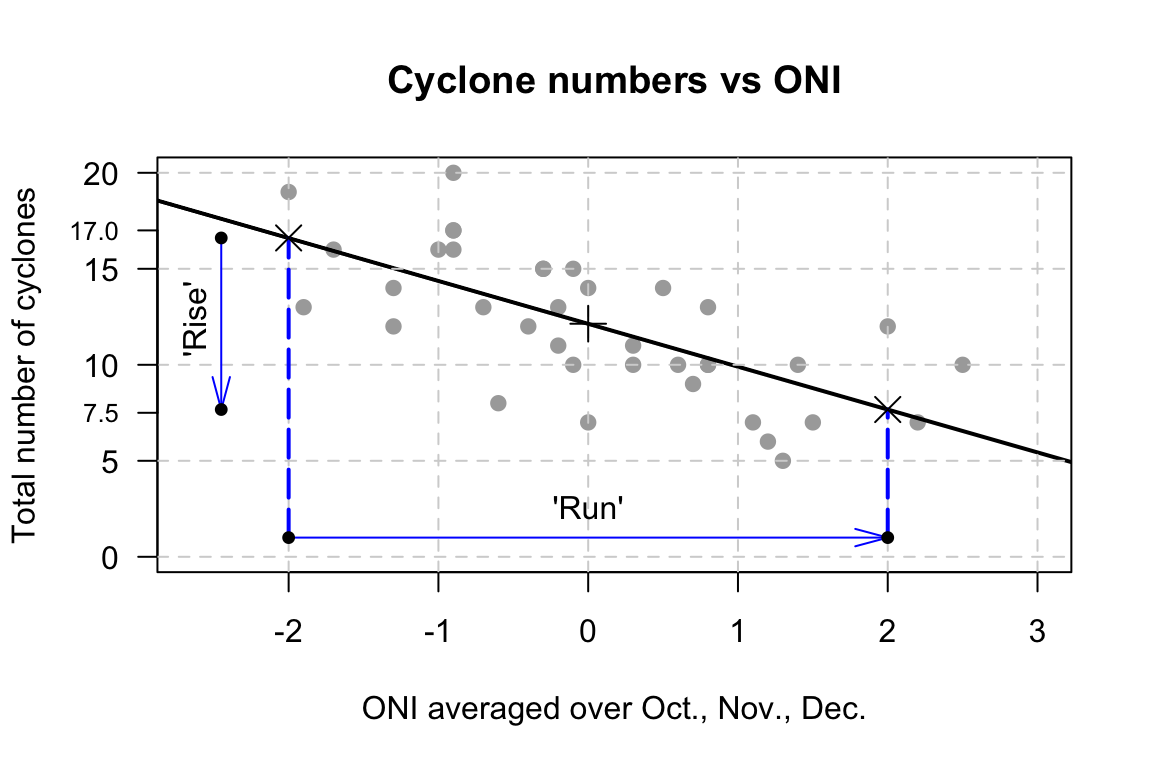

Example 33.6 (Estimating regression parameters) The relationship between the number of cyclones \(y\) in the Australian region each year from 1969 to 2005, and the ONI \(x\) is shown in Fig. 33.3 (left panel).

To make a guess of the regression coefficients, a sensible line can be drawn through the data (Fig. 33.6). When the value of \(x\) is zero, the predicted value of \(y\) is about \(12\), so \(b_0\) is about \(12\) cyclones. Recall: the intercept is the predicted value of \(y\) when \(x = 0\), which is not at the left of the graph in Fig. 33.6.

To approximate the value of \(b_1\), use the rise-over-run idea. When \(x = -2\), the predicted mean value of \(y\) is about \(17\); when \(x = 2\), the predicted mean value of \(y\) is about \(7.5\). The value of \(x\) increases by \(2 - (-2) = 4\), while the value of \(y\) changes by \(7.5 - 17 = -9.5\) (a decrease of about \(9.5\)). Hence, \(b_1\) is approximately \(-9.5/4 = -2.375\) cyclones per unit change in ONI. (You may get a slightly different value from a slightly different line.)

The relationship has a negative direction, so the slope must be negative. The regression line is approximately \(\hat{y} = 12 - 2.375x\).

FIGURE 33.6: The number of cyclones in the Australian region each year from 1969 to 2005, and the ONI averaged over October, November, December. The plus sign \({}+{}\) is located on the line where \(x = 0\). The crosses \({}\times{}\) are located to find rise-over-run.

The above method gives a crude approximation to the values of the intercept \(b_0\) and the slope \(b_1\). In practice, many reasonable lines could be drawn through a scatterplot of data, each giving slightly different rough guesses for \(b_0\) and \(b_1\). However, one of those lines is the 'best' line in some sense, sometimes called the 'line of best fit'.

33.3.3 Regression: finding equations using software

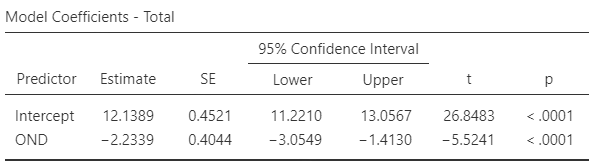

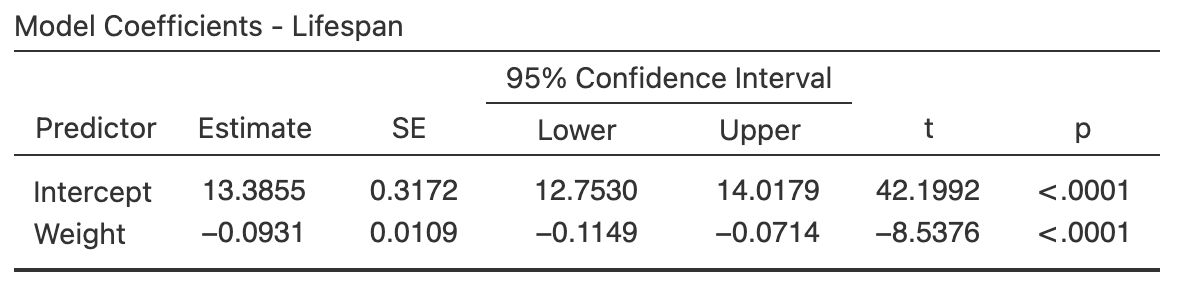

Software is almost always used to find the 'best' estimates for the intercept \(b_0\) and slope \(b_1\), as the formulas are complicated and tedious to use. For the dog-breed data (Fig. 33.2), the relevant software output is shown in Fig. 33.7.

In the output, the values of \(b_0\) and \(b_1\) are in the column labelled Estimate; the value of the sample \(y\)-intercept is \(b_0 = 13.39\,\text{y}\), and the value of the sample slope is \(b_1 = -0.093\,\text{y}\)/kg.

These are the values of the two regression coefficients.

The regression equation is, after rounding:

\[\begin{equation} \hat{y} = 13.39 + (-0.093\times x), \qquad\text{usually written as}\qquad \hat{y} = 13.39 - 0.093 x. \tag{33.3} \end{equation}\]

These are close to the values obtained using the rough method in Sect. 33.3.2 (which gave \(b_0 = 13.5\) and \(b_1 = -0.1\) approximately).

The sign of the slope \(b_1\) and the sign of the correlation coefficient \(r\) are always the same. For example, if the slope is negative, the correlation coefficient will be negative. However, the value of the slope cannot be deduced solely from the value of the correlation coefficient, nor can the value of the correlation coefficient be deduced solely from the value of the slope.
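Software usually finds the 'best' line by least squares; that the software used here uses ordinary least squares is an assumption, though it is the standard method. A minimal sketch of the formulas, using a small hypothetical dataset (not the dog-breed data): \(b_1 = S_{xy}/S_{xx}\) and \(b_0 = \bar{y} - b_1\bar{x}\). The same sums give \(r\), and the identity \(b_1 = r \times s_y/s_x\) shows why the slope and the correlation coefficient always share the same sign, and why neither can be found from the other alone (the ratio of standard deviations \(s_y/s_x\) is also needed).

```python
import math


def least_squares(xs, ys):
    """Least-squares intercept b0, slope b1, and Pearson r, plus the sums Sxx and Syy."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    s_yy = sum((y - y_bar) ** 2 for y in ys)
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    b1 = s_xy / s_xx
    b0 = y_bar - b1 * x_bar          # the line passes through (x_bar, y_bar)
    r = s_xy / math.sqrt(s_xx * s_yy)
    return b0, b1, r, s_xx, s_yy


xs = [1, 2, 3, 4, 5]        # hypothetical explanatory values
ys = [5, 9, 10, 15, 17]     # hypothetical responses
b0, b1, r, s_xx, s_yy = least_squares(xs, ys)
print(f"b0 = {b0:.2f}, b1 = {b1:.2f}, r = {r:.3f}")  # b0 = 2.20, b1 = 3.00, r = 0.985

# b1 = r * (s_y / s_x); the (n - 1) divisors cancel, so sqrt(Syy / Sxx) = s_y / s_x
print(f"r * s_y / s_x = {r * math.sqrt(s_yy / s_xx):.2f}")
```

On Python 3.10 or later, `statistics.linear_regression` and `statistics.correlation` in the standard library return the same slope, intercept and \(r\).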

FIGURE 33.7: The regression output for the dog-breed data.

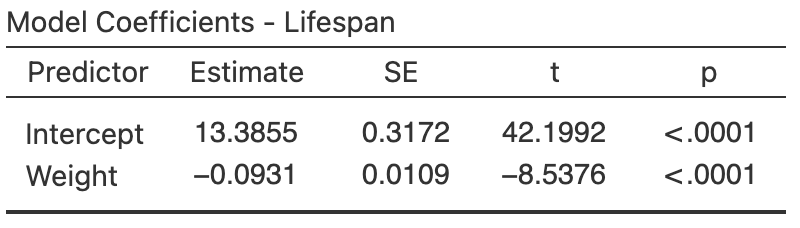

Example 33.7 (Regression coefficients) The regression equation for the cyclone data (Fig. 33.6) is found from the software output (Fig. 33.8): \[ \hat{y} = 12.1 - 2.23x, \] where \(x\) is the ONI (averaged over October, November, December) and \(y\) is the number of cyclones; that is, \(b_0 = 12.1\) cyclones and \(b_1 = -2.23\) cyclones per unit change in ONI. These values are close to the approximations made in Example 33.6 (\(b_0 = 12\) and \(b_1 = -2.375\) respectively).

FIGURE 33.8: The software output for the cyclone data.


33.3.4 Regression: making predictions

Regression equations can be used to predict the mean value of \(y\) for a given value of \(x\). For example, the regression equation for the dog-breed data in Eq. (33.3) can be used to predict the mean lifespan for a given breed-weight, such as a breed-weight of \(50\,\text{kg}\). Since \(x\) represents the breed-weight, use \(x = 50\) in the regression equation: \[\begin{align*} \hat{y} &= 13.39 - (0.093\times 50)\\ &= 13.39 - 4.65 = 8.74. \end{align*}\] Dog breeds with an average weight of \(50\,\text{kg}\) are predicted to have a mean lifespan of \(8.74\,\text{y}\). Some individual breeds with a breed-weight of \(50\,\text{kg}\) will have shorter or longer lifespans, and some individual dogs weighing \(50\,\text{kg}\) will have shorter or longer lifespans; the model predicts only that the mean lifespan for breeds with a breed-weight of \(50\,\text{kg}\) will be about \(8.74\,\text{y}\).

The value of \(\hat{y}\) is computed using the estimates \(b_0\) and \(b_1\), which are computed from sample data. Hence, the value of \(\hat{y}\) also depends on which one of the countless possible samples is used. This means that \(\hat{y}\) also has a sampling distribution and a standard error.

Suppose we were interested in dog breeds with a breed-weight of \(100\,\text{kg}\); the mean predicted lifespan is \[ \hat{y} = 13.39 - (0.093\times 100) = 4.09, \] or about \(4.09\,\text{y}\). This prediction may be useful, but it may also be rubbish. In the data, the heaviest breed is about \(80\,\text{kg}\), so the regression line may not even apply for breeds with a breed-weight exceeding \(80\,\text{kg}\); for example, the relationship may be non-linear beyond \(80\,\text{kg}\). We cannot tell whether the prediction is sensible, because we have no data for breed-weights over \(80\,\text{kg}\) to inform us.

Making predictions outside the range of the available data is called extrapolation, and extrapolation beyond the data may lead to nonsense predictions. As an extreme example, a breed-weight of \(x = 150\) would be predicted to have a mean lifespan of \(-0.56\,\text{y}\), which is clearly nonsense.

Definition 33.1 (Extrapolation) Extrapolation refers to making predictions outside the range of the available data. Extrapolation beyond the data may lead to nonsense.
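A sketch of making predictions from Eq. (33.3), with a flag for extrapolation. The observed range of breed-weights (from \(4.08\,\text{kg}\) to about \(80\,\text{kg}\)) is taken from the text; the upper limit is approximate, and the function and constant names are hypothetical.

```python
B0 = 13.39   # intercept b0 (years), from Eq. (33.3)
B1 = -0.093  # slope b1 (years per kg), from Eq. (33.3)

# Range of breed-weights in the sample, as stated in the text.
# The upper limit ("about 80 kg") is approximate.
MIN_WEIGHT_KG, MAX_WEIGHT_KG = 4.08, 80.0


def predict_mean_lifespan(weight_kg):
    """Return (predicted mean lifespan in years, True if the prediction extrapolates)."""
    y_hat = B0 + B1 * weight_kg
    extrapolating = not (MIN_WEIGHT_KG <= weight_kg <= MAX_WEIGHT_KG)
    return y_hat, extrapolating


for weight in (50, 100, 150):
    y_hat, extrapolating = predict_mean_lifespan(weight)
    flag = "  (extrapolation: treat with caution)" if extrapolating else ""
    print(f"{weight:>4} kg: {y_hat:6.2f} y{flag}")

# The slope is the change in prediction for a 1 kg increase, at any weight:
# predict_mean_lifespan(11)[0] - predict_mean_lifespan(10)[0] equals B1 (up to rounding).
```

The three predictions match those computed above (\(8.74\), \(4.09\) and \(-0.56\,\text{y}\)); only the first is within the range of the data.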

33.3.5 Regression: understanding relationships

The regression equation can be used to understand the relationship between the two variables. Consider again the dog-breed regression equation: \[\begin{equation} \hat{y} = 13.39 - 0.093 x. \tag{33.4} \end{equation}\] What does this equation reveal about the relationship between \(x\) and \(y\)?

\(b_0\) is the predicted value of \(y\) when \(x = 0\) (Sect. 33.3.2). Using \(x = 0\) in Eq. (33.4) predicts a mean lifespan of \[ \hat{y} = 13.39 - (0.093\times 0) = 13.39 \] for breeds with a weight of zero. Using \(x = 0\) is extrapolating (the smallest breed-weight in the sample is \(4.08\,\text{kg}\)), and a breed-weight of zero is nonsense anyway.

The value of the intercept \(b_0\) is sometimes (but not always) meaningless. The value of the slope \(b_1\) is usually of greater interest, as it explains the relationship between the two variables.

The slope \(b_1\) is how much the value of \(y\) changes (on average) when the value of \(x\) increases by one (Sect. 33.3.2). For the dog-breed data, \(b_1\) is the average change in mean lifespan for each \(1\,\text{kg}\) increase in breed-weight.

Specifically, each extra kilogram of breed-weight is associated with a mean change of \(-0.093\,\text{y}\) in lifespan (from Eq. (33.4)); that is, a decrease in lifespan by a mean of \(0.093\,\text{y}\) for each extra kilogram of breed-weight. The lifespan of some breeds will be shorter and some longer, and the lifespan of some individual dogs will be shorter and some longer; the value is a mean change in breed lifespan.

To demonstrate, consider the case where \(x = 10\), and the regression equation predicts \(\hat{y}= 12.46\,\text{y}\). For breeds one kilogram heavier than this (i.e., \(x = 11\)), the value of the prediction \(\hat{y}\) will increase by an average of \(-0.093\,\text{y}\) (or, equivalently, decrease by an average of \(0.093\,\text{y}\)). That is, we would predict \(\hat{y} = 12.46 - 0.093 = 12.367\,\text{y}\). This is the same prediction made by using \(x = 11\) in Eq. (33.4).

If the value of \(b_1\) is positive, then the predicted values of \(y\) increase as the values of \(x\) increase. If the value of \(b_1\) is negative, then the predicted values of \(y\) decrease as the values of \(x\) increase.

This interpretation of \(b_1\) explains the relationship: the lifespan is, on average, about \(0.093\,\text{y}\) shorter for each extra kilogram of breed-weight.

In general, we say that a change in the value of \(x\) is associated with a change in the value of \(y\). Unless the study is experimental (Sect. 4.4), we cannot say that the change in the value of \(x\) causes the change in the value of \(y\).

If the value of the slope is zero, there is no linear relationship between \(x\) and \(y\). A slope of zero means that a change in the value of \(x\) is associated with a change of zero in the value of \(y\). In this case, the correlation coefficient is also zero.

33.4 Regression: CIs and \(t\)-test for regression parameters

33.4.1 Introduction

A regression equation exists in the population that connects the values of \(x\) and \(y\). This regression line is estimated from one of the countless possible samples, and is an estimate of the regression line in the population.

In the population, the intercept is denoted by \(\beta_0\) and the slope by \(\beta_1\). Hence, the regression line in the population is \[ \hat{y} = \beta_0 + \beta_1 x. \] The values of the parameters \(\beta_0\) and \(\beta_1\) are unknown, and are estimated by the statistics \(b_0\) and \(b_1\) respectively.

The symbol \(\beta\) is the Greek letter 'beta', pronounced 'beater' (as in 'egg beater'). So \(\beta_0\) is pronounced as 'beater-zero', and \(\beta_1\) as 'beater-one'.

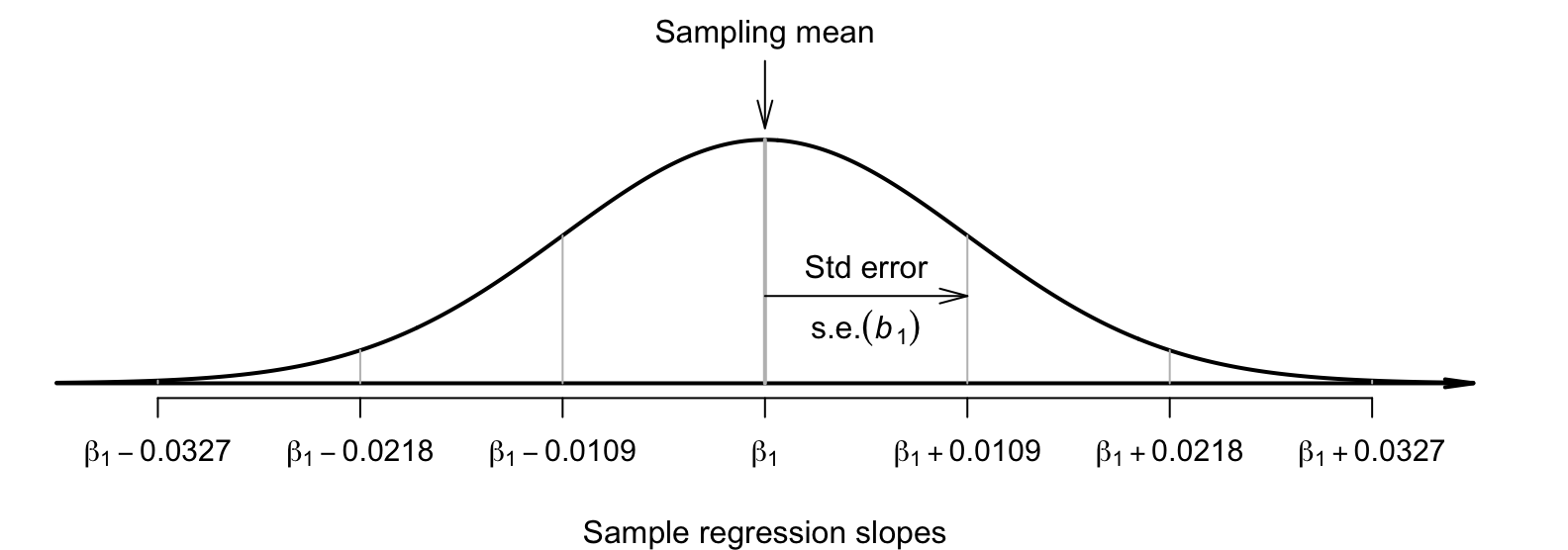

Every sample is likely to produce slightly different values for both \(b_0\) and \(b_1\) (sampling variation), so both \(b_0\) and \(b_1\) have a sampling distribution and a standard error. The formulas for computing the values of \(b_0\) and \(b_1\) (and their standard errors) are intimidating, so we will use software to perform the calculations. The sampling distributions for \(b_0\) and \(b_1\) also have approximate normal distributions under certain conditions (Sect. 33.5).

Usually the slope is of greater interest than the intercept, because the slope explains the relationship between the two variables (Sect. 33.3.5). For this reason, the sampling distribution for the slope only is given below, but the sampling distribution for the intercept is analogous.

Definition 33.2 (Sampling distribution of a sample slope) The sampling distribution of the sample regression slope is (when certain conditions are met; Sect. 33.5) described by

- an approximate normal distribution,

- with a mean of \(\beta_1\), and

- a standard deviation, called the standard error of the slope and denoted \(\text{s.e.}(b_1)\).

A formula exists for finding \(\text{s.e.}(b_1)\), but it is tedious to use, and we will not give it.
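For curious readers only: a sketch of the standard least-squares formula that software typically uses, \(\text{s.e.}(b_1) = s/\sqrt{S_{xx}}\), where \(s^2\) is the sum of the squared residuals \(y - \hat{y}\) divided by \(n - 2\). The five data points are hypothetical, far too few to satisfy the conditions in Sect. 33.5, and are used only to show the arithmetic.

```python
import math


def slope_and_se(xs, ys):
    """Least-squares slope b1 and its standard error s.e.(b1) = s / sqrt(Sxx)."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    b1 = s_xy / s_xx
    b0 = y_bar - b1 * x_bar
    # Residuals: observed y minus the predicted mean y-hat
    residuals = [y - (b0 + b1 * x) for x, y in zip(xs, ys)]
    # s measures the variation of y around the line; divide by n - 2
    # because two regression coefficients (b0 and b1) were estimated
    s = math.sqrt(sum(e ** 2 for e in residuals) / (n - 2))
    return b1, s / math.sqrt(s_xx)


xs = [1, 2, 3, 4, 5]        # hypothetical explanatory values
ys = [5, 9, 10, 15, 17]     # hypothetical responses
b1, se_b1 = slope_and_se(xs, ys)
print(f"b1 = {b1:.3f}, s.e.(b1) = {se_b1:.3f}")
```

Larger samples, and x-values spread more widely (larger \(S_{xx}\)), both make \(\text{s.e.}(b_1)\) smaller.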

33.4.2 CIs for the regression parameters

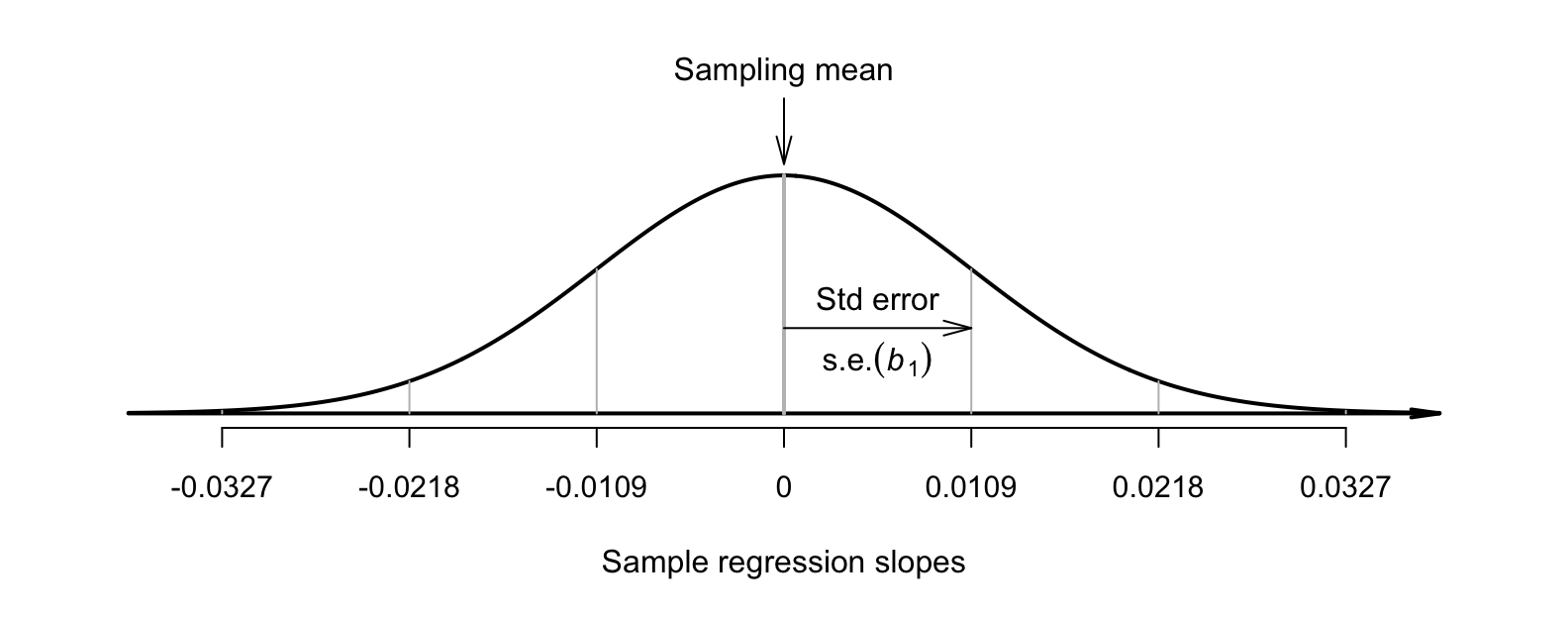

The sampling distribution describes all possible values of the sample slope from all possible samples, through sampling variation. For the dog-breed data, then, the values of the sample slope across all possible samples are described (Fig. 33.9), using Def. 33.2, by:

- an approximate normal distribution;

- with a sampling mean whose value is \(\beta_1\); and

- a standard deviation of \(\text{s.e.}(b_1) = 0.0109\) (from software; Fig. 33.7).

FIGURE 33.9: The distribution of sample slope for the dog-breed data, around the population slope \(\beta_1\).

As seen earlier, when the sampling distribution is an approximate normal distribution, CIs have the form \[ \text{statistic} \pm ( \text{multiplier} \times \text{standard error}), \] where the multiplier is \(2\) for an approximate \(95\)% CI (from the \(68\)--\(95\)--\(99.7\) rule). In this context, a CI for the slope is \[ b_1 \pm \big( \text{multiplier} \times \text{s.e.}(b_1)\big). \] Thus, an approximate \(95\)% CI for the slope is \[ -0.093 \pm (2\times 0.0109)\qquad\text{or}\qquad -0.093 \pm 0.0218, \] which is from \(-0.115\) to \(-0.071\,\text{y}\)/kg (after rounding).

Software can be used to produce exact CIs too; the exact \(95\)% CI is from \(-0.115\) to \(-0.071\,\text{y}\)/kg (Fig. 33.10). The approximate and exact \(95\)% CIs are very similar. We write:

For each increase of one kilogram in the breed-weight of dogs, the mean lifespan increases by \(-0.093\) years per kg (\(95\)% CI: \(-0.115\) to \(-0.071\); \(n = 73\)).

Alternatively (and equivalently, but probably easier to understand):

For each increase of one kilogram in the breed-weight of dogs, the mean lifespan decreases by \(0.093\) years per kg (\(95\)% CI: \(0.071\) to \(0.115\); \(n = 73\)).

FIGURE 33.10: Output for the dog-breed data, including the CIs for the regression parameters.

Example 33.8 (Cyclones) Using the software output (Fig. 33.8) for the cyclone data, \(\text{s.e.}(b_1) = 0.404\), so the approximate \(95\)% CI for the regression slope \(\beta_1\) is \[ -2.23 \pm (2 \times 0.404)\qquad\text{or}\qquad -2.23 \pm 0.808. \] The interval from \(-3.04\) to \(-1.42\) is likely to straddle the population slope. This approximate CI is very similar to the exact CI shown in Fig. 33.8.
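The approximate CI calculation (statistic plus or minus \(2\) standard errors) is simple enough to sketch directly, using the values of \(b_1\) and \(\text{s.e.}(b_1)\) quoted above for the dog-breed and cyclone data. Exact CIs from software use a multiplier from a \(t\)-distribution instead of \(2\), which is why they can differ slightly.

```python
def approx_ci_slope(b1, se_b1, multiplier=2.0):
    """Approximate CI for the population slope: b1 +/- multiplier * s.e.(b1).

    A multiplier of 2 gives an approximate 95% CI (68-95-99.7 rule).
    """
    margin = multiplier * se_b1
    return b1 - margin, b1 + margin


for label, b1, se in (("dog breeds", -0.093, 0.0109), ("cyclones", -2.23, 0.404)):
    lo, hi = approx_ci_slope(b1, se)
    print(f"{label}: {lo:.3f} to {hi:.3f}")
```

After rounding, these give \(-0.115\) to \(-0.071\,\text{y}\)/kg and \(-3.04\) to \(-1.42\) cyclones per unit change in ONI, as above.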

33.4.3 Regression: \(t\)-tests for regression parameters

Since the regression line describing the relationship between \(x\) and \(y\) is computed from one of countless possible samples, any relationship between \(x\) and \(y\) observed in the sample may be due to sampling variation; possibly, no relationship actually exists in the population (i.e., \(\beta_1 = 0\)). In other words, a hypothesis test can be conducted for the slope to determine if sampling variation can explain the discrepancy between \(\beta_1\) and \(b_1\). (Similar hypothesis tests can be conducted for testing if the intercept is zero, but are usually of less interest.)

The null hypothesis for tests about the slope is the usual 'no relationship' hypothesis. In this context, 'no relationship' means that the slope is zero (Sect. 33.3.5), so the null hypothesis (about the population) is \(H_0\): \(\beta_1 = 0\). A slope of zero is equivalent to no relationship between the variables. (We would also find \(\rho = 0\).) The RQ implies these hypotheses about the slope: \[ \text{$H_0$: } \beta_1 = 0\quad\text{and}\quad\text{$H_1$: } \beta_1 \ne 0. \] The parameter is \(\beta_1\), the population slope for the regression equation predicting lifespan from breed-weight. The alternative hypothesis is two-tailed, based on the RQ.

Assuming the null hypothesis is true (i.e., that \(\beta_1 = 0\)), the possible values of the sample slope \(b_1\) can be described (Def. 33.2). The variation in the sample slope across all possible samples when \(\beta_1 = 0\) is described (Fig. 33.11) using:

- an approximate normal distribution;

- with a sampling mean whose value is \(\beta_1 = 0\) (from \(H_0\)); and

- a standard deviation of \(\text{s.e.}(b_1) = 0.0109\) (from software; Fig. 33.10).

FIGURE 33.11: The distribution of sample slopes for the dog-breed data, if the population slope is \(\beta_1 = 0\).

The observed sample slope for the dog-breed data is \(b_1 = -0.0931\). Locating this value on Fig. 33.11 shows that it is very unlikely that any of the many possible samples would produce such a slope, just through sampling variation, if the population slope really were \(\beta_1 = 0\). The test statistic is found using the usual approach when the sampling distribution has an approximate normal distribution: \[\begin{align*} t &= \frac{\text{observed value} - \text{mean of the distribution of the statistic}}{\text{std deviation of the distribution of the statistic}}\\ &= \frac{ b_1 - \beta_1}{\text{s.e.}(b_1)} = \frac{-0.0931 - 0}{0.0109} = -8.54, \end{align*}\] where the values of \(b_1\) and \(\text{s.e.}(b_1)\) are taken from the software output (Fig. 33.10). This \(t\)-score is the same value reported by the software.

To determine if the statistic is consistent with the null hypothesis, the \(P\)-value can be approximated using the \(68\)--\(95\)--\(99.7\) rule, approximated using tables, or taken from software output (Fig. 33.10). Since \(t = -8.54\), the \(P\)-value will be very small; software shows the two-tailed \(P\)-value is \(P < 0.0001\).

We write:

The sample presents very strong evidence (\(t = -8.54\); two-tailed \(P < 0.0001\)) that, in the population, the mean lifespan of dog breeds is related to the breed-weight (slope: \(-0.093\); \(95\)% CI from \(-0.115\) to \(-0.071\); \(n = 73\)).

Notice the three features of writing conclusions again: an answer to the RQ; evidence to support the conclusion (\(t = -8.54\); two-tailed \(P < 0.0001\)); and some sample summary information (slope: \(-0.0931\); \(95\)% CI from \(-0.115\) to \(-0.071\); \(n = 73\)).

The \(P\)-value for a test of \(H_0\): \(\rho = 0\) will be the same as the \(P\)-value from a test of \(H_0\): \(\beta_1 = 0\). The tests are effectively equivalent, both testing if the relationship observed in the sample can be explained by sampling variation.
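A quick numerical check of this equivalence for the dog-breed data: the slope \(t\)-score computed from Fig. 33.10 and the \(t\)-statistic for testing \(\rho = 0\) (using the standard formula \(t = r\sqrt{n-2}/\sqrt{1-r^2}\), which this chapter does not give) agree up to rounding of the reported values.

```python
import math

# Values from the dog-breed output (Fig. 33.10) and correlation output (Fig. 33.2)
b1, se_b1 = -0.0931, 0.0109
r, n = -0.712, 73

# t-score for H0: beta_1 = 0
t_slope = (b1 - 0.0) / se_b1

# t-statistic for H0: rho = 0 (standard formula, n - 2 degrees of freedom)
t_rho = r * math.sqrt(n - 2) / math.sqrt(1.0 - r ** 2)

print(f"t from slope: {t_slope:.2f}")  # t from slope: -8.54
print(f"t from r:     {t_rho:.2f}")    # t from r:     -8.54
```

Since both statistics are compared to the same \(t\)-distribution, they give the same \(P\)-value.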

Example 33.9 (Hypothesis testing) For the cyclone data (Example 33.7), the RQ is

In the Australian region, is there a relationship between ONI and the number of cyclones?

This RQ implies these hypotheses: \[ \text{$H_0$: } \beta_1 = 0\quad\text{and}\quad\text{$H_1$: } \beta_1 \ne 0. \] From the output (Fig. 33.8), \(t = -5.52\) and the \(P\)-value is small: \(P < 0.001\). We write:

The sample presents very strong evidence (\(t = -5.52\); two-tailed \(P < 0.001\)) that, in the population, the number of cyclones is related to the ONI (slope: \(-2.23\); \(95\)% CI from \(-3.04\) to \(-1.42\); \(n = 37\)).

33.5 Statistical validity conditions

As usual, these results hold under certain conditions. The conditions for which the CIs and tests are statistically valid are:

- The relationship is approximately linear (necessary for the (Pearson) correlation coefficient and regression line to be appropriate).

- The variation in the response variable is approximately constant for all values of the explanatory variable.

- The sample size is at least \(25\).

The sample size of \(25\) is a rough figure; some books give other values. The units of analysis are also assumed to be independent (e.g., from a simple random sample).

If the relationship is non-linear but is increasing-only or decreasing-only, alternatives to the Pearson correlation coefficient include the Spearman or Kendall correlation coefficients (Conover 2003) or using resampling methods (Efron and Hastie 2021). Depending on which statistical validity conditions are not met, other regression-like options may be available. For example, generalized linear models (P. K. Dunn and Smyth 2018) may be appropriate for some non-linear relationships and/or relationships with non-constant variation in \(y\).
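The Spearman correlation coefficient is the Pearson correlation coefficient computed on the ranks of the data, which is why it suits relationships that are increasing-only or decreasing-only but not linear. A minimal sketch in plain Python; the helper names and the data (\(y = x^3\)) are illustrative only, and tied values are given their average rank. (The Kendall coefficient is computed differently and is not shown.)

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient r."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def ranks(values):
    """Ranks starting at 1; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def spearman(x, y):
    """Spearman correlation: the Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [v**3 for v in x]  # increasing, but clearly not linear
print(f"Pearson  r = {pearson(x, y):.3f}")   # 0.932
print(f"Spearman r = {spearman(x, y):.3f}")  # 1.000: perfectly increasing
```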

Example 33.10 (Statistical validity) For the dog-breed data, the scatterplot (Fig. 33.2, left panel) shows that the relationship is approximately linear, so using a (Pearson) correlation coefficient and a regression line is appropriate. For the hypothesis test, the variation in lifespans doesn't seem to be obviously getting consistently larger or smaller for heavier breeds (though two breeds with a lifespan near \(6\) years and a breed-weight near \(20\,\text{kg}\) seem unusual), and the sample size is also greater than \(25\). The CIs and tests are statistically valid.

Example 33.11 (Cyclones) The scatterplot for the cyclone data (Fig. 33.6) shows the relationship is approximately linear, that the variation in the number of cyclones seems reasonably constant for different values of the ONI, and the sample size is larger than \(25\) (\(n = 37\)). The CIs (Examples 33.1 and 33.8) and the tests (Examples 33.2 and 33.9) are statistically valid.

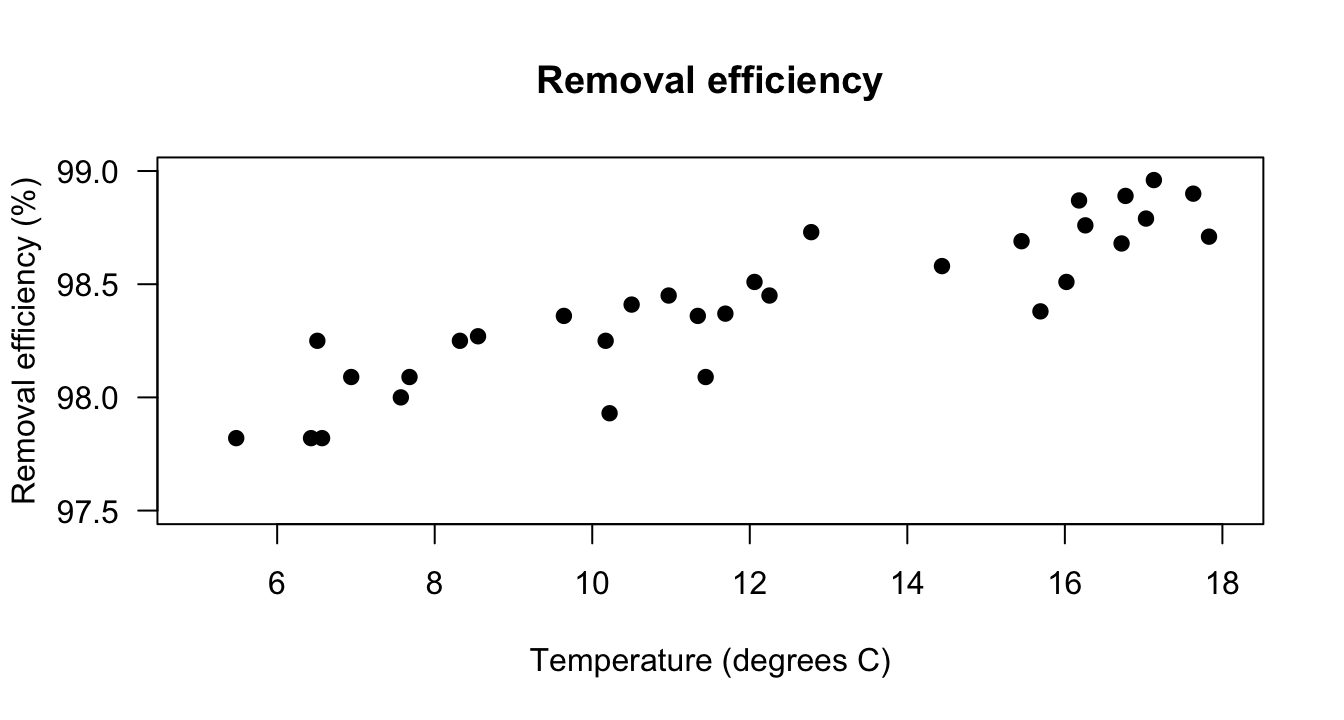

33.6 Example: removal efficiency

In wastewater treatment facilities, air from biofiltration is passed through a membrane and dissolved in water, and is transformed into harmless by-products. The removal efficiency \(y\) (in %) may depend on the inlet temperature (in \(^\circ\)C; \(x\)). Chitwood and Devinny (2001) asked:

In treating biofiltration wastewater, is the removal efficiency linearly associated with the inlet temperature?

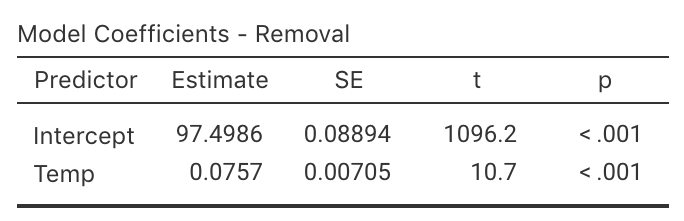

The scatterplot of the \(n = 32\) observations was shown (and described) in Sect. 16.6, and repeated here (Fig. 33.12); the relationship is positive and approximately linear.

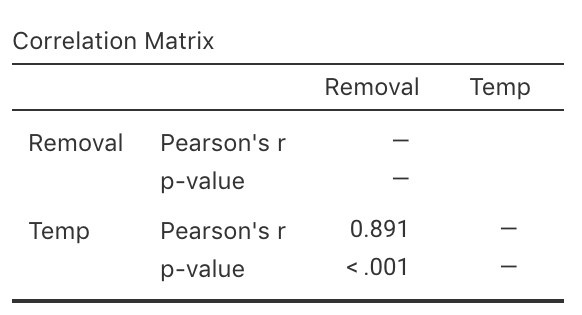

The output (Fig. 33.13) shows that the sample correlation coefficient is \(r = 0.891\) (with a \(95\)% CI from \(0.79\) to \(0.95\)), and so \(R^2 = (0.891)^2 = 79.4\)%. This means that the unexplained variation in removal efficiency reduces by about \(79.4\)% by knowing the inlet temperature.
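This chapter takes the CI for \(\rho\) from software output. One common way such an interval is computed (an assumption here, since the output does not state its method) uses Fisher's \(z\)-transformation: \(z = \operatorname{atanh}(r)\) has an approximately normal sampling distribution with standard error \(1/\sqrt{n - 3}\). A minimal sketch that reproduces the interval quoted above from \(r = 0.891\) and \(n = 32\), to two decimal places; the function name `correlation_ci` is illustrative only.

```python
import math
from statistics import NormalDist


def correlation_ci(r, n, level=0.95):
    """Approximate CI for rho using Fisher's z-transformation."""
    z = math.atanh(r)                              # transform r to the z-scale
    se = 1 / math.sqrt(n - 3)                      # standard error on the z-scale
    mult = NormalDist().inv_cdf(0.5 + level / 2)   # about 1.96 for 95%
    lo, hi = z - mult * se, z + mult * se
    return math.tanh(lo), math.tanh(hi)            # back-transform to the r-scale


r, n = 0.891, 32
lo, hi = correlation_ci(r, n)
print(f"R^2 = {r**2:.1%}")               # R^2 = 79.4%
print(f"95% CI: {lo:.2f} to {hi:.2f}")   # 95% CI: 0.79 to 0.95
```

The interval is not symmetric about \(r\), because values of \(r\) are bounded by \(-1\) and \(1\).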

FIGURE 33.12: The scatterplot showing the relationship between removal efficiency and inlet temperature.

FIGURE 33.13: The software output exploring the relationship between removal efficiency and inlet temperature.

As always, the RQ is about the parameter: the population correlation \(\rho\) between removal efficiency and inlet temperature. To test if a linear relationship exists in the population, write: \[ \text{$H_0$: } \rho = 0\quad\text{and}\quad \text{$H_1$: } \rho \ne 0. \] The alternative hypothesis is two-tailed (as implied by the RQ). The software output (Fig. 33.13) shows that \(P < 0.001\).

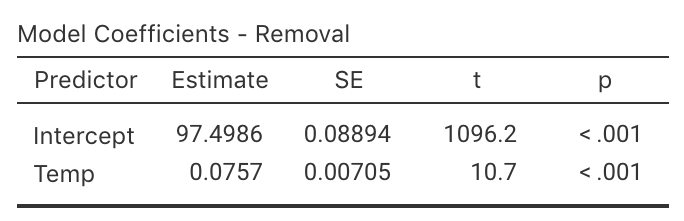

Since the scatterplot of the data (Fig. 33.12) shows the relationship is approximately linear, a regression line is appropriate. From the software output (Fig. 33.14), \(b_0 = 97.5\) and \(b_1 = 0.0757\); hence \[ \hat{y} = 97.5 + 0.0757x \] for \(x\) and \(y\) defined above. The slope quantifies the relationship, so we can test \[ \text{$H_0$: } \beta_1 = 0 \qquad\text{and}\qquad \text{$H_1$: } \beta_1 \ne 0. \] From the output, \(t = 10.7\) which is huge; the \(P\)-value is small as expected: \(P < 0.001\). The output does not include the CI for the slope, but since \(\text{s.e.}(b_1) = 0.00705\), the approximate \(95\)% CI is \[ 0.0757 \pm (2 \times 0.00705), \quad\text{ or }\quad 0.0757 \pm 0.0141. \] We write:

Very strong evidence exists (\(t = 10.7\); two-tailed \(P < 0.001\)) that inlet temperature is linearly related to removal efficiency (slope: \(0.0757\); approximate \(95\)% CI: \(0.0616\) to \(0.0898\); \(n = 32\)).
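The approximate CI arithmetic above can be sketched as follows, using a multiplier of \(2\) as in the \(68\)--\(95\)--\(99.7\) rule. The function name `approx_ci_slope` is illustrative only; the input values are those read from Fig. 33.14.

```python
def approx_ci_slope(b1, se_b1, multiplier=2):
    """Approximate CI for the population slope: b1 +/- multiplier * s.e.(b1).
    A multiplier of 2 gives an approximate 95% CI (68-95-99.7 rule)."""
    margin = multiplier * se_b1
    return b1 - margin, b1 + margin


# Removal-efficiency data: values from the regression output (Fig. 33.14)
b1, se_b1 = 0.0757, 0.00705
lo, hi = approx_ci_slope(b1, se_b1)
print(f"margin of error = {2 * se_b1:.4f}")     # margin of error = 0.0141
print(f"approx. 95% CI: {lo:.4f} to {hi:.4f}")  # approx. 95% CI: 0.0616 to 0.0898
print(f"t = {b1 / se_b1:.1f}")                  # t = 10.7
```

Applied to the dog-breed values (\(b_1 = -0.0931\), \(\text{s.e.}(b_1) = 0.0109\)), the same function gives \(-0.115\) to \(-0.071\) after rounding, as reported earlier.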

FIGURE 33.14: Regression output for the removal-efficiency data.

The CI and test are statistically valid: the relationship is approximately linear, the variation in \(y\) is approximately constant for all values of \(x\), and \(n = 32\).

33.7 Chapter summary

The CI for the correlation coefficient is found from software output. To test a hypothesis about a correlation between two variables, \(\rho\):

- Write the null hypothesis (\(H_0\): \(\rho = 0\)) and the alternative hypothesis (\(H_1\)).

- Initially assume the value of \(\rho\) in the null hypothesis to be true (usually zero).

- The \(P\)-value for the test is found from software.

- Make a decision, and write a conclusion.

- Check the statistical validity conditions.


Regression mathematically describes the relationship between two quantitative variables: the response variable \(y\), and the explanatory variable \(x\). The linear relationship between \(x\) and \(y\) (the regression equation), in the sample, is \[ \hat{y} = b_0 + b_1 x, \] where \(b_0\) is a number (the intercept), \(b_1\) is a number (the slope), and the 'hat' above the \(y\) indicates that the equation gives a predicted mean value of \(y\) for a given \(x\)-value. Software provides the values of \(b_0\) and \(b_1\).
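Software usually finds \(b_0\) and \(b_1\) by least squares. As a sketch of what happens behind the scenes, the standard least-squares formulas are \(b_1 = \sum (x - \bar{x})(y - \bar{y}) / \sum (x - \bar{x})^2\) and \(b_0 = \bar{y} - b_1 \bar{x}\). The small dataset below is hypothetical, and the function name `least_squares` is illustrative only.

```python
def least_squares(x, y):
    """Return (b0, b1) for the least-squares line y-hat = b0 + b1 * x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sxy / sxx            # slope
    b0 = y_bar - b1 * x_bar   # intercept: the line passes through (x_bar, y_bar)
    return b0, b1


# Hypothetical data, scattered around the line y = 5 + 2x
x = [0, 2, 4, 6, 8, 10]
y = [6, 8, 14, 16, 22, 24]
b0, b1 = least_squares(x, y)
print(f"y-hat = {b0:.2f} + {b1:.2f}x")  # y-hat = 5.43 + 1.91x
```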

The intercept is the predicted mean value of \(y\) when the value of \(x\) is zero. The slope is how much the predicted mean value of \(y\) changes, on average, when the value of \(x\) increases by \(1\).

The regression equation can be used to make predictions or to understand the relationship between the two variables. Predictions made with values of \(x\) outside the values of \(x\) used to create the regression equation (called extrapolation) may not be reliable.
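A minimal sketch of using a regression equation for prediction while flagging extrapolation. It uses the line \(\hat{y} = 5 + 2x\) from Exercise 33.7 and treats \(x\)-values from \(0\) to \(10\) as the range used to create the equation (a hypothetical set-up); the function name `predict` is illustrative only.

```python
def predict(b0, b1, x, x_min, x_max):
    """Predicted mean of y at x, plus a flag for extrapolation
    (x outside the range [x_min, x_max] used to create the equation)."""
    y_hat = b0 + b1 * x
    extrapolated = not (x_min <= x <= x_max)
    return y_hat, extrapolated


for x in (4, 12):
    y_hat, extrap = predict(b0=5, b1=2, x=x, x_min=0, x_max=10)
    note = "extrapolation: may be unreliable" if extrap else "within observed range"
    print(f"x = {x}: y-hat = {y_hat} ({note})")
# x = 4: y-hat = 13 (within observed range)
# x = 12: y-hat = 29 (extrapolation: may be unreliable)
```

The flag only checks the range of \(x\); it cannot tell whether the relationship really stays linear beyond the data, which is exactly why extrapolated predictions may not be reliable.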

The regression equation in the population is \(\hat{y} = \beta_0 + \beta_1 x\). To compute a confidence interval (CI) for the slope of a regression equation, software provides the standard error of \(b_1\); then, the CI is \[ {b_1} \pm \big( \text{multiplier}\times\text{s.e.}(b_1) \big). \] The margin of error is (multiplier\({}\times{}\)standard error), where the multiplier is \(2\) for an approximate \(95\)% CI (using the \(68\)--\(95\)--\(99.7\) rule).

To test a hypothesis about a population slope \(\beta_1\):

- Write the null hypothesis (\(H_0\): \(\beta_1 = 0\)) and the alternative hypothesis (\(H_1\)).

- Initially assume the value of \(\beta_1\) in the null hypothesis to be true.

- Then, describe the sampling distribution, which describes what to expect from the sample slope on this assumption: under certain statistical validity conditions, the sample slope varies with:

  - an approximate normal distribution,

  - a sampling mean equal to the value \(\beta_1 = 0\) (from \(H_0\)), and

  - a standard deviation of \(\displaystyle \text{s.e.}(b_1)\).

- Compute the value of the test statistic: \[ t = \frac{b_1 - \beta_1}{\text{s.e.}(b_1)}, \] where \(b_1\) is sample slope.

- The \(t\)-value is like a \(z\)-score, so the \(P\)-value can be approximated using the \(68\)--\(95\)--\(99.7\) rule, or found using software.

- Make a decision, and write a conclusion.

- Check the statistical validity conditions.

33.8 Quick review questions

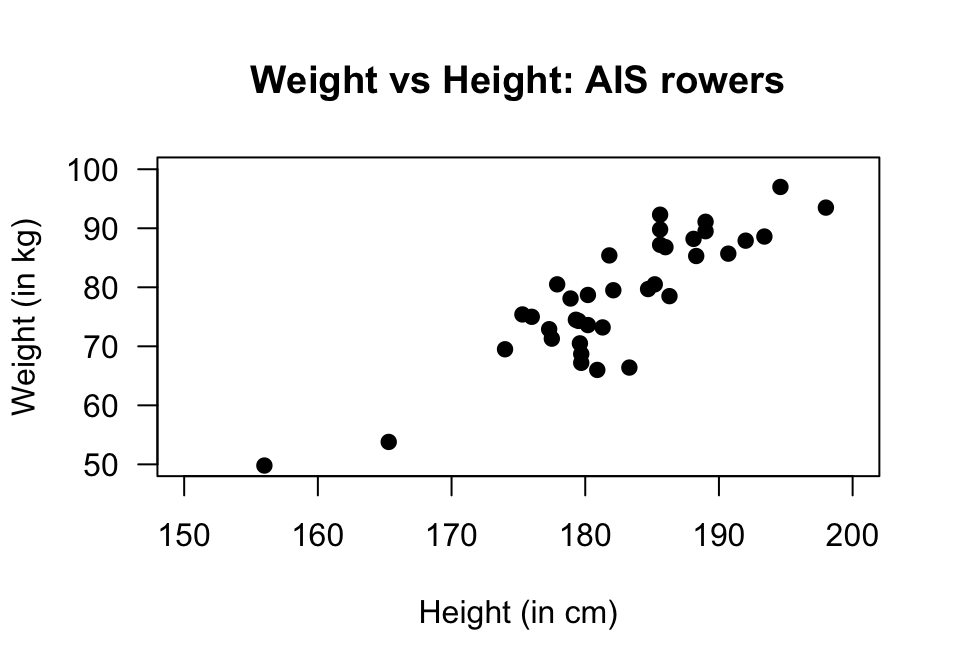

Telford and Cunningham (1991) examined the relationship between the height and weight of \(n = 37\) rowers at the Australian Institute of Sport (AIS; Fig. 33.15). The regression equation is \(\hat{y} = -138 + 1.2 x\), and the two-tailed \(P\)-value for a test of the correlation is \(P < 0.0001\).

FIGURE 33.15: Scatterplot of weight against height for rowers at the AIS.

Are the following statements true or false?

- The \(x\)-variable is the height of the rower.

- Since the \(P\)-value is small, the correlation is quite strong.

- The relationship is a positive relationship.

- Based on the scatterplot, 'weight of the rower' is considered the \(y\)-variable.

- Using the rise-over-run idea, a very rough estimate of the value of the slope is \(1.2\).

- The measurement units for the slope are kg.

- The measurement units for the intercept are kg.

- The standard error of the slope is \(0.112\), so the value of the test statistic to test if the population slope is zero is \(t = 10.7\).

- The \(P\)-value for this test will be very small.

- Predicting the mean weight of a \(220\,\text{cm}\)-tall rower would be extrapolation.

Select the correct answer:

- What does the 'hat' above the \(y\) mean?

- What mean weight is predicted for a rower who is \(180\,\text{cm}\) tall?

33.9 Exercises

Answers to odd-numbered exercises are given at the end of the book.

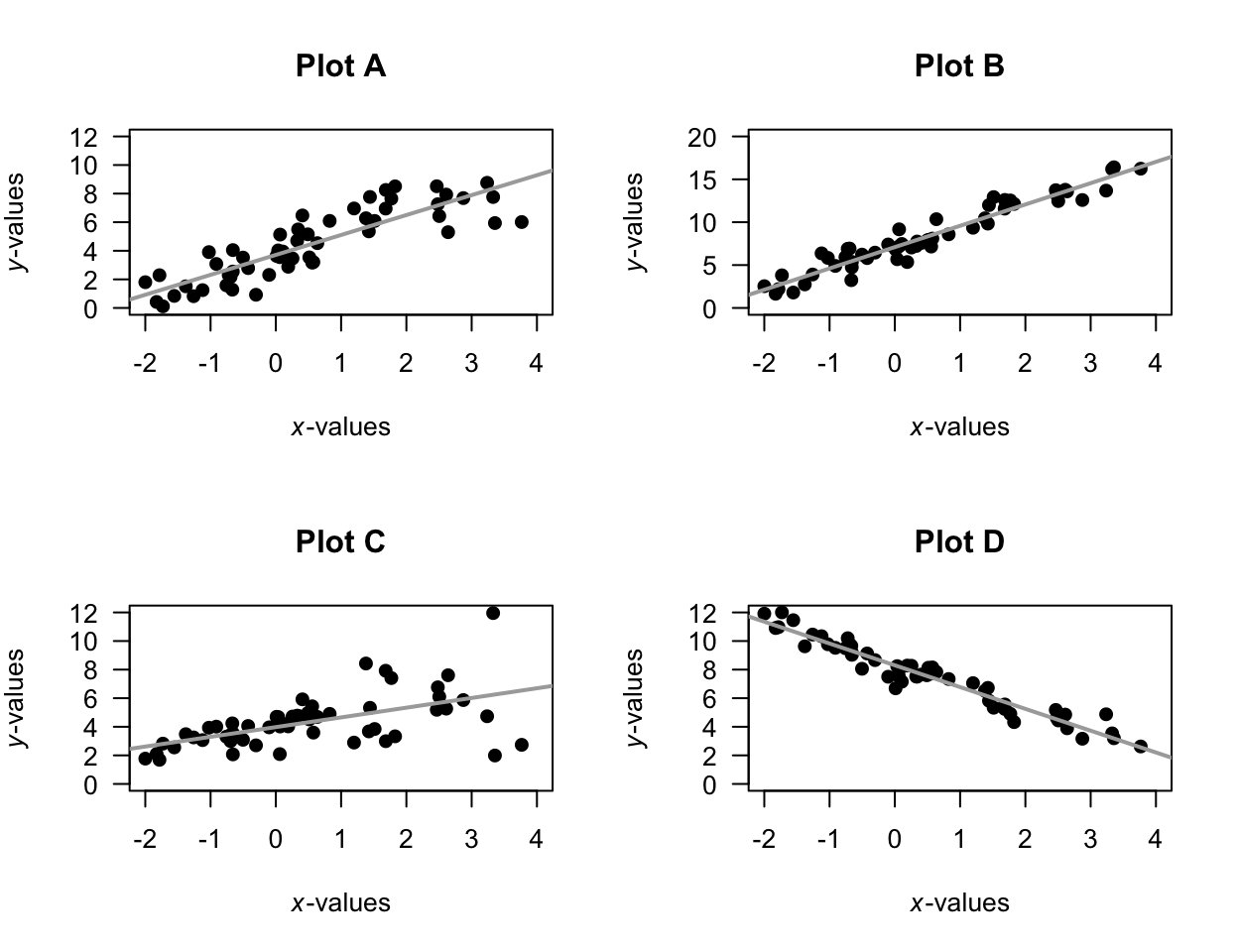

Exercise 33.1 For each of the plots in Fig. 33.16, where appropriate:

- estimate the value of \(r\) (this is hard!).

- estimate the intercept of the regression line.

- estimate the slope of the regression line, using the rise-over-run idea.

- write down the estimated regression equation.

FIGURE 33.16: Four scatterplots.

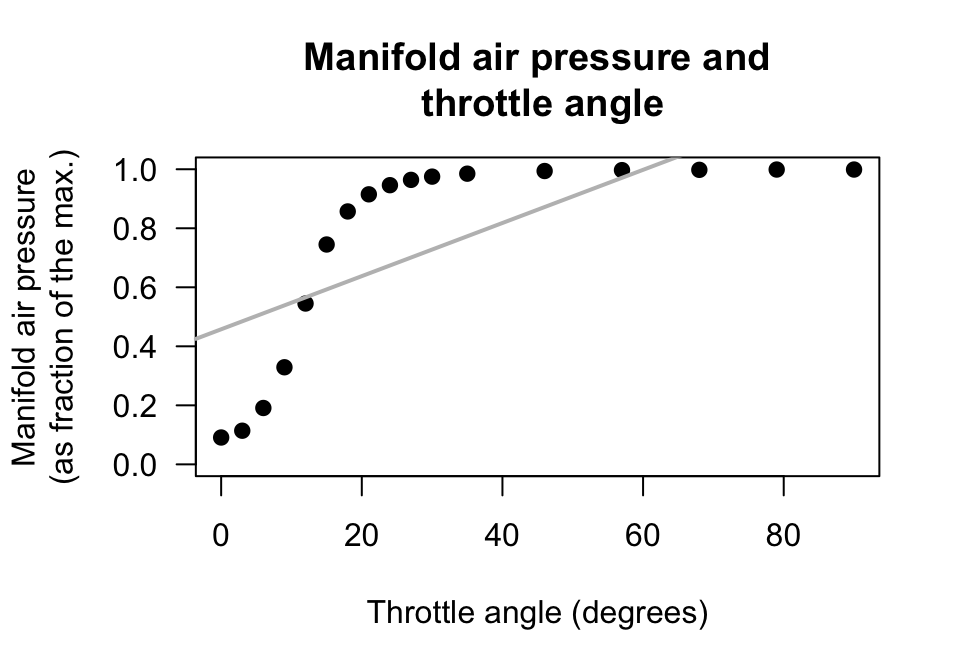

Exercise 33.2 [Dataset: Throttle]

Amin and Mahmood-ul-Hasan (2019) measured the throttle angle (\(x\)) and the manifold air pressure (\(y\)), as a fraction of the maximum value, in gas engines.

- The value of \(r\) is given in the article as \(0.972986604\). Comment on this, and what it means.

- Comment on the use of a regression model, based on the scatterplot (Fig. 33.17).

- The authors fitted the following regression model: \(y = 0.009 + 0.458x\). Identify errors that the researchers have made when giving this regression equation.

- Critique the researchers' approach.

FIGURE 33.17: Manifold air pressure plotted against throttle angle for an internal-combustion gas engine.

Exercise 33.3 In a correlation analysis, the researchers find that \(P = 0.0002\). Which (if any) of these statements are consistent with this \(P\)-value?

- \(r = 0.89\).

- \(r = -0.891\).

- \(r = 0.04\).

- \(r = -0.06\).

Exercise 33.4 In a correlation analysis, the researchers find that \(r = 0.36\). Which (if any) of these statements are consistent with this value of the correlation coefficient?

- The \(P\)-value is very small.

- The \(P\)-value is very large.

- The \(P\)-value is \(0.36\).

- The \(P\)-value is \(0.36^2\), or \(13\)%.

Exercise 33.5 For each regression equation below, identify the values of \(b_0\) and \(b_1\).

- \(\hat{y} = 3.5 - 0.14x\).

- \(\hat{y} = -0.0047x + 2.1\).

- \(\hat{y} = -25.2 - 0.95x\).

- \(\hat{y} = -0.22x + 0.15\).

Exercise 33.6 For each regression equation below, identify the values of \(b_0\) and \(b_1\).

- \(\hat{y} = -1.03 + 7.2x\).

- \(\hat{y} = -1.88x - 0.46\).

- \(\hat{y} = 201x + 16\).

- \(\hat{y} = 3.04x - 0.032\).

Exercise 33.7 Draw the regression line \(\hat{y} = 5 + 2x\) for values of \(x\) between \(0\) and \(10\).

- Add some points to the scatterplot such that the correlation is approximately \(r = 0.9\).

- Add some more points to the scatterplot such that the correlation is approximately \(r = 0.3\).

Exercise 33.8 Draw the regression line \(\hat{y} = 20 - 3x\) for values of \(x\) between \(0\) and \(5\).

- Add some points to the scatterplot such that the correlation is approximately \(r = -0.95\).

- Add some more points to the scatterplot such that the correlation is approximately \(r = -0.2\).

Exercise 33.9 LeBlanc et al. (2005) studied \(n = 30\) paramedicine students, using correlations to study the relationship between the amount of stress experienced while performing drug-dose calculations (measured using the State–Trait Anxiety Inventory, STAI), and length of work experience.

- Write the hypotheses for testing if a relationship exists between the STAI score and the length of work experience.

- The article gives the correlation coefficient as \(r = 0.346\) and \(P = 0.18\). What do you conclude?

- What must be assumed for the test to be statistically valid?

Exercise 33.10 Einsiedel et al. (2024) used correlations to study the relationship between amount of pesticide residue reported on a variety of fresh fruits and vegetables, and various weather measurements. One pesticide studied was perchlorate.

- Write the hypotheses for testing if a relationship exists between the perchlorate residue and maximum temperature at the growing location.

- The article gives the correlation coefficient as \(r = -0.059\) and \(P = 0.035\). What do you conclude?

- Write the hypotheses for testing if a relationship exists between the perchlorate residue and minimum temperature at the growing location.

- The article gives the correlation coefficient as \(r = -0.025\) and \(P = 0.365\). What do you conclude?

- What must be assumed for the tests to be statistically valid?

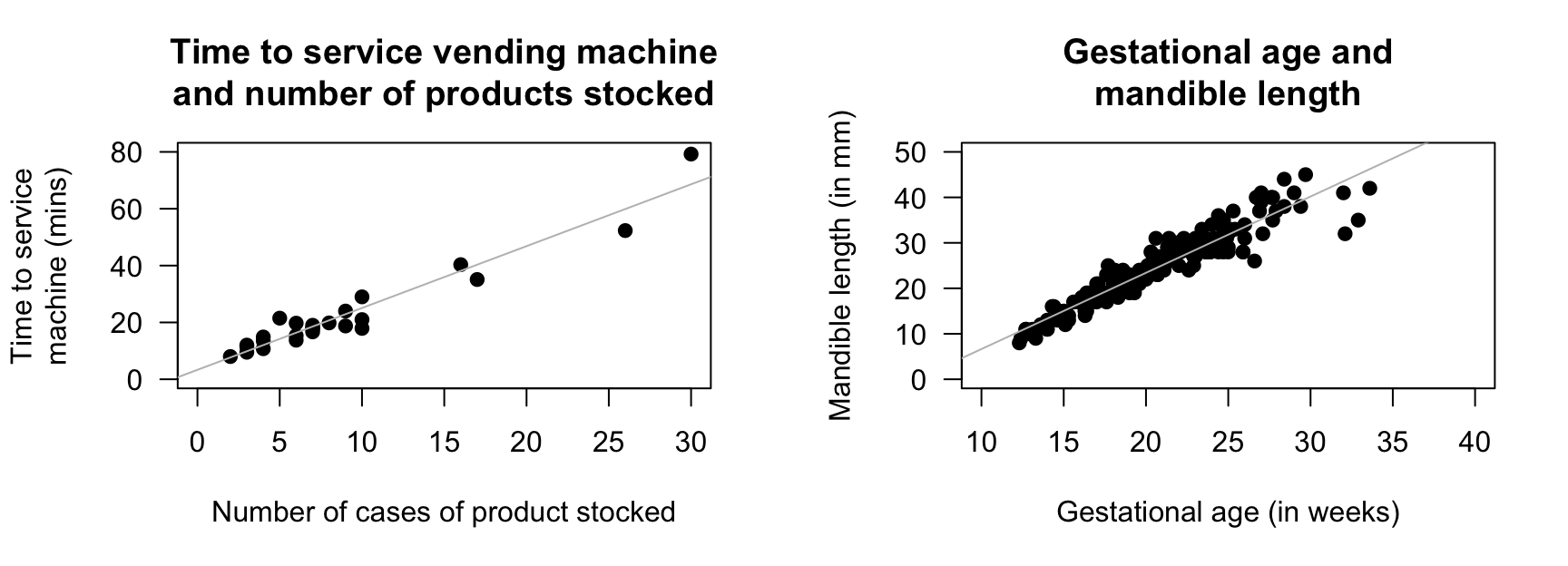

Exercise 33.11 [Dataset: SDrink]

A study examined the time taken to deliver soft drinks to vending machines (Montgomery and Peck 1992) using a sample of size \(n = 25\) (Fig. 33.18, left panel).

To test if a linear relationship exists, are the statistical validity conditions met?

Exercise 33.12 [Dataset: Mandible]

Royston and Altman (1994) examined the mandible length and gestational age for \(n = 167\) foetuses from the \(12\)th week of gestation onward (Fig. 33.18, right panel).

To test if a linear relationship exists, are the statistical validity conditions met?

FIGURE 33.18: Two scatterplots. Left: the time taken to deliver soft drinks to vending machines. Right: the relationship between gestational age and mandible length. In both plots, the solid line displays the linear relationship.

Exercise 33.13 Heerfordt et al. (2018) studied the relationship between the time (in minutes) spent on sunscreen application \(x\), and the amount (in grams) of sunscreen applied \(y\), using \(n = 31\) people. The fitted regression equation was \(\hat{y} = 0.27 + 2.21x\).

- Interpret the meaning of \(b_0\) and \(b_1\). Do they seem sensible?

- What are the units of measurement for the slope and intercept?

- According to the article, a hypothesis test for testing \(H_0\): \(\beta_0 = 0\) produced a \(P\)-value much larger than \(0.05\). What does this mean?

- For people who spend \(8\,\text{mins}\) applying sunscreen, how much sunscreen would they use, on average?

- The article reports that \(R^2 = 0.64\). Interpret this value.

- What is the value of the correlation coefficient?

- What would a test of \(H_0\): \(\beta_1 = 0\) mean?

Exercise 33.14 Bhargava et al. (1985) stated (p. \(1\,617\)):

In developing countries [...] logistic problems prevent the weighing of every newborn child. A study was performed to see whether other simpler measurements could be substituted for weight to identify neonates of low birth weight and those at risk.

One relationship they studied was between infant chest circumference (in cm) \(x\) and birth weight (in grams) \(y\). The regression equation was given as: \[ \hat{y} = -3440.2403 + 199.2987x. \] The correlation coefficient was \(r = 0.8696\) with \(P < 0.001\).

- Critique the way in which the regression equation and correlation coefficient are reported.

- Based on the correlation information, could chest circumference be used as a useful predictor of birth weight? Explain.

- Interpret the intercept and the slope of the regression equation.

- What are the units of measurement for the intercept and slope?

- Predict the mean birth weight of an infant with a chest circumference of \(30\,\text{cm}\).

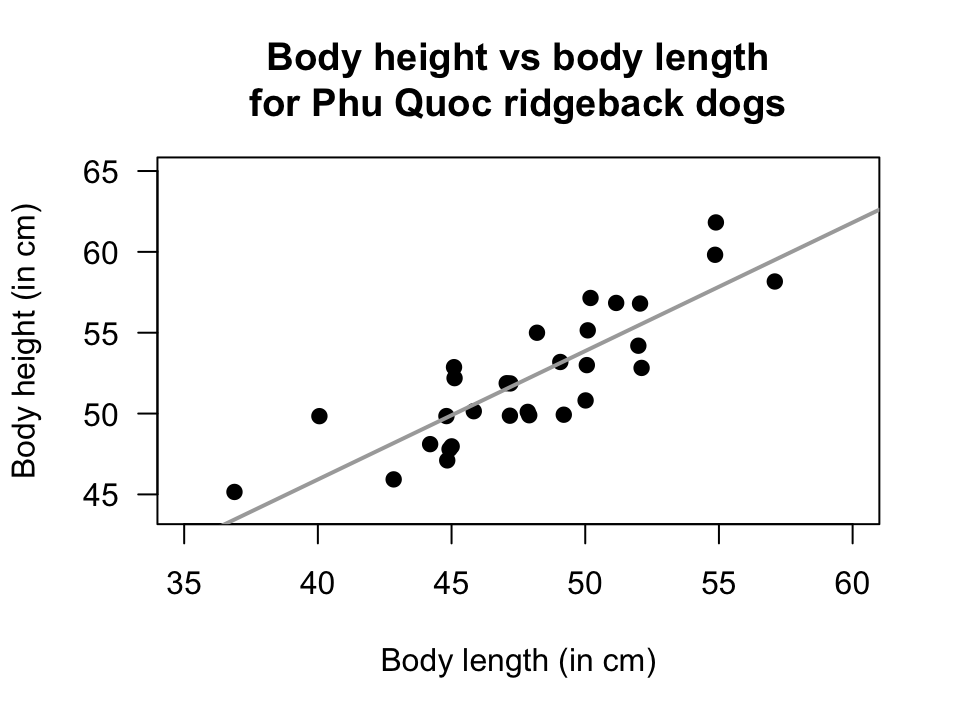

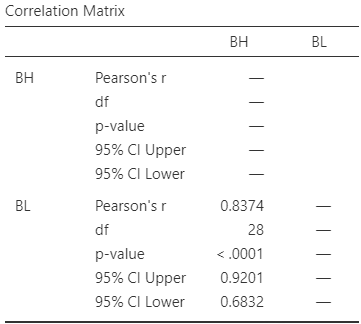

Exercise 33.15 [Dataset: Dogs]

Quan, Tran, and Chung (2017) studied Phu Quoc Ridgeback dogs (Canis familiaris), and recorded many measurements of the dogs, including body length and body height.

The scatterplot displaying this relationship and the software output are shown in Fig. 33.19.

In this example, it does not matter which variable is used as \(x\) or \(y\).

- Describe the relationship.

- Taller dogs might be expected to be longer. To test this, write the hypotheses in terms of correlations.

- Perform the test, using the output. Write a conclusion.

- Is the test statistically valid?

FIGURE 33.19: Phu Quoc ridgeback dogs. Left: a scatterplot of the body height vs length. Right: software output.

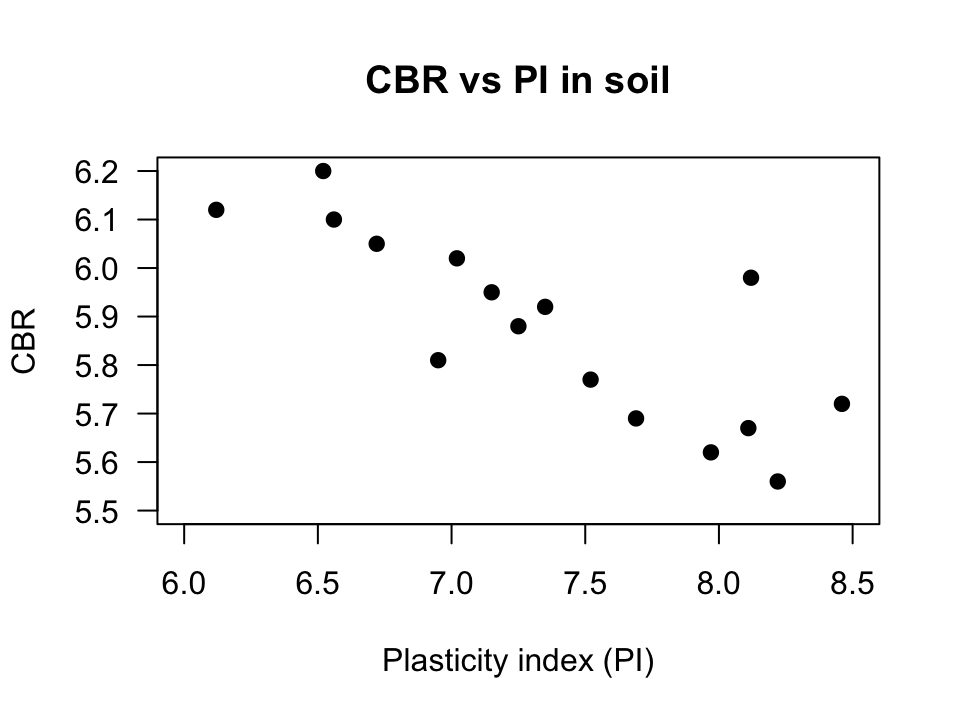

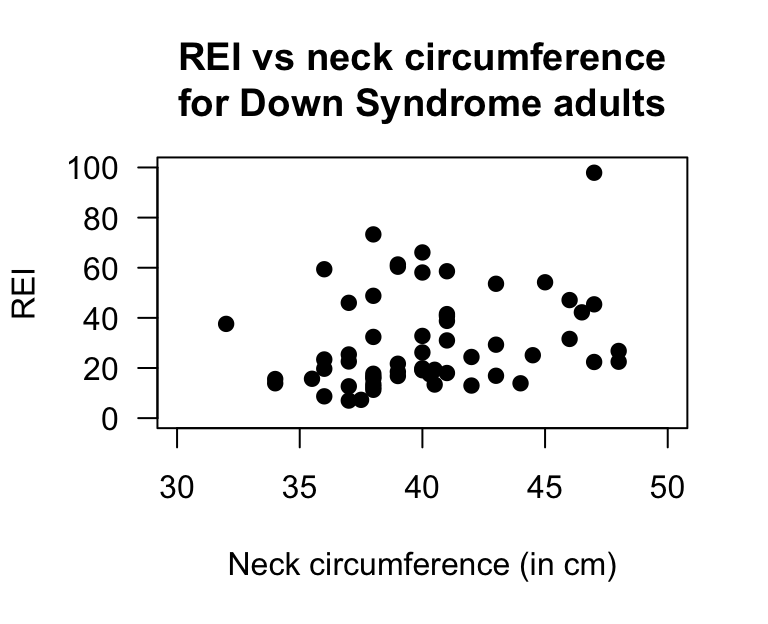

Exercise 33.16 [Dataset: Soils]

The California Bearing Ratio (CBR) value is used to describe soil sub-grade for flexible pavements (such as in the design of airfield runways).

Talukdar (2014) examined the relationship between CBR and other properties of soil, including the plasticity index (PI, a measure of the plasticity of the soil).

The scatterplot and software output from \(16\) different soil samples from Assam, India, are shown in Fig. 33.20.

- Describe the plot in words.

- Find and interpret the value of \(R^2\).

- Write down the CI for the correlation coefficient.

- Conduct a hypothesis test for \(\rho\).

- Would the test be statistically valid?

FIGURE 33.20: The relationship between CBR and PI in sixteen soil samples. Left: scatterplot. Right: software output.

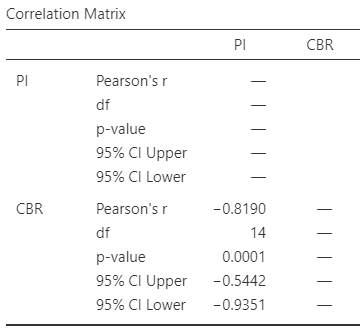

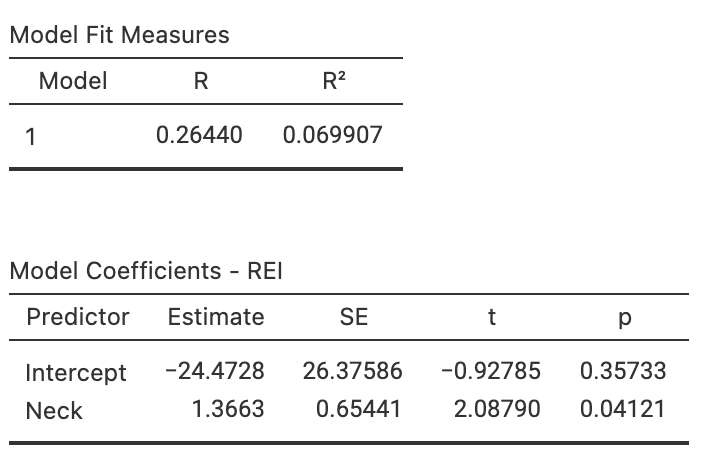

Exercise 33.17 [Dataset: OSA]

de Carvalho et al. (2020) studied obstructive sleep apnoea (OSA) in adults with Down Syndrome.

Sixty adults participated in a sleep study and had information recorded.

The response variable is OSA severity: the average number of episodes of sleep disruption (according to specific criteria) per hour of sleep, called the Respiratory Event Index (REI).

One research question is:

Among Down Syndrome adults, is there a linear relationship between the REI and neck size?

Here, \(x\) is the neck size (in cm), and \(y\) is the REI value. The data are plotted in Fig. 33.21 (left panel).

- Using the software output (Fig. 33.21), determine the value of \(r\).

- Interpret the value of \(R^2\).

- Write down the values of the intercept and the slope, and hence the regression equation.

- Explain what the slope in the regression equation means.

- Find an approximate \(95\)% CI for the slope.

- Perform a hypothesis test to determine if a relationship exists between the variables.

- Are the test and CI statistically valid?

FIGURE 33.21: Left: Scatterplot of the neck circumference vs REI for Down Syndrome adults. Right: software output.

FIGURE 33.22: The obstructive sleep apnoea dataset.

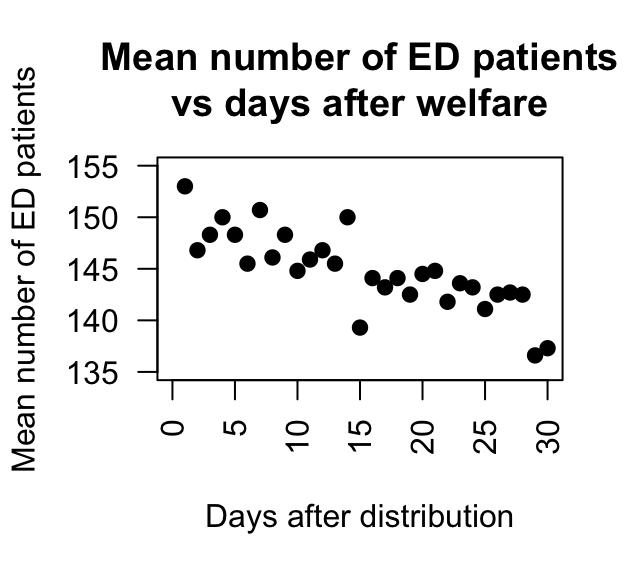

Exercise 33.18 [Dataset: EDpatients]

Brunette, Kominsky, and Ruiz (1991) studied the relationship between the number of emergency department (ED) patients and the number of days following the distribution of monthly welfare monies, from 1986 to 1988 in Minneapolis, MN.

The data (extracted from Brunette, Kominsky, and Ruiz (1991)) and the software output are displayed in Fig. 33.23.

- Write down the estimated regression equation.

- Interpret the slope in the regression equation.

- Find an approximate \(95\)% CI for the slope.

- Conduct a hypothesis test for the slope, and explain what the result means.

- What is the value of the correlation coefficient?

FIGURE 33.23: The number of emergency department patients, and the number of days since distribution of welfare. Left: scatterplot. Right: software output.

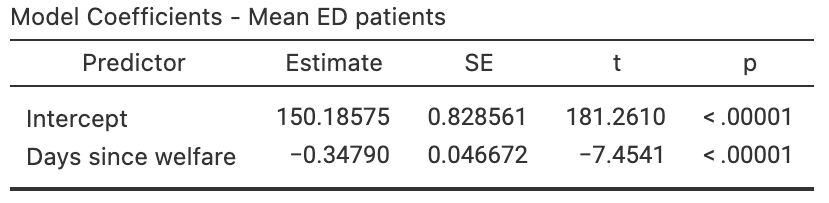

Exercise 33.19 [Dataset: Bitumen]

Panda, Das, and Sahoo (2018) made \(n = 42\) observations of hot mix asphalt, and measured the volume of air voids and the bitumen content by weight (Fig. 33.24, left panel).

The software output is shown in Fig. 33.24 (right panel).

- Describe the plot in words.

- For the data, \(R^2 = 99.29\)%. Determine, and interpret, the value of \(r\).

- Write down the regression equation using the software output.

- Interpret what the regression equation means.

- Perform a test to determine if there is a relationship between the variables.

- What is the \(P\)-value for testing \(H_0\): \(\rho = 0\)?

- Predict the mean percentage of air voids by volume when the percentage bitumen is \(5.0\)%. Do you expect this to be a good prediction? Why or why not?

- Predict the mean percentage of air voids by volume when the percentage bitumen is \(6.0\)%. Do you expect this to be a good prediction? Why or why not?

- Would the test be statistically valid?

FIGURE 33.24: Air voids in bitumen. Left: scatterplot. Right: software output.

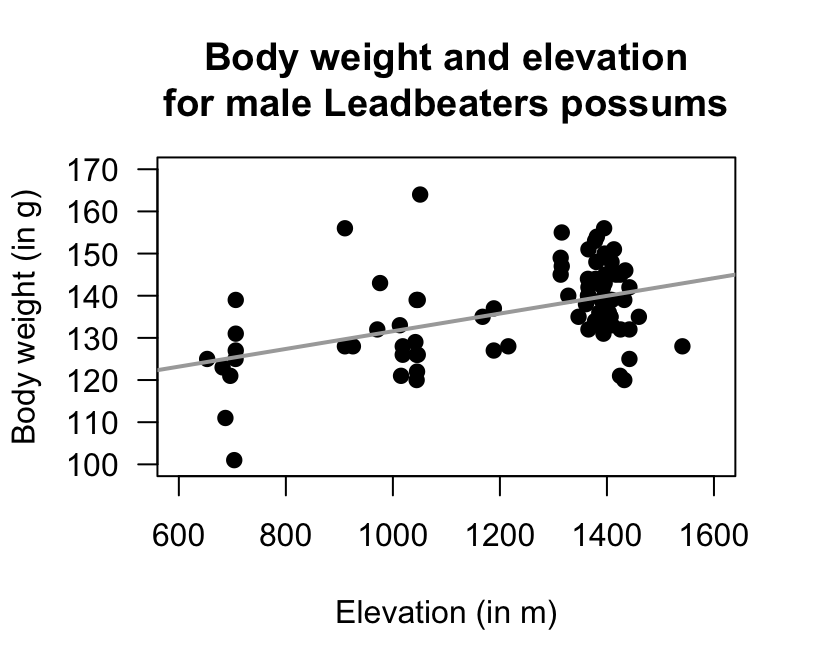

Exercise 33.20 [Dataset: Possums]

J. L. Williams et al. (2022) studied Leadbeater's possums in the Victorian Central Highlands.

They recorded, among other information, the body weight of the possums (in g) and their location, including the elevation of the location (in m; DEM), as shown in Fig. 33.25 (left panel).

The software output is shown in Fig. 33.25 (right panel).

- The value of \(R^2\) is \(23.0\)%. Determine, and interpret, the value of \(r\).

- Write down the regression equation.

- Determine if there is a relationship between the possum weight and the elevation, using a test of the slope.

- What is the \(P\)-value for a test of \(H_0\): \(\rho = 0\)?

- Interpret the meaning of the slope.

- Predict the mean weight of male possums at an elevation of \(1\,000\,\text{m}\). Do you expect this to be a good prediction? Why or why not?

- Predict the mean weight of male possums at an elevation of \(200\,\text{m}\). Do you expect this to be a good prediction? Why or why not?

FIGURE 33.25: The relationship between weight of possums and the elevation of their location. Left: scatterplot. Right: software output.

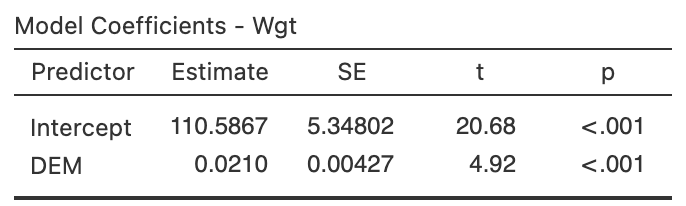

Exercise 33.21 [Dataset: Gorillas]

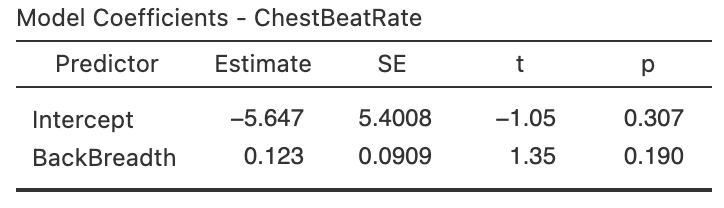

Wright et al. (2021) examined \(25\) gorillas and recorded information about their chest-beating rates and their size (measured by the breadth of the gorillas' backs).

The relationship is shown in Fig. 33.26.

Use the software output (Fig. 33.27) to study the relationship.

- Determine the value of \(r\) and \(R^2\).

- Perform a hypothesis test for the slope, and write a conclusion.

- Find the regression equation.

FIGURE 33.26: The scatterplot for the chest-beating data.

FIGURE 33.27: Software regression output for the gorilla data.

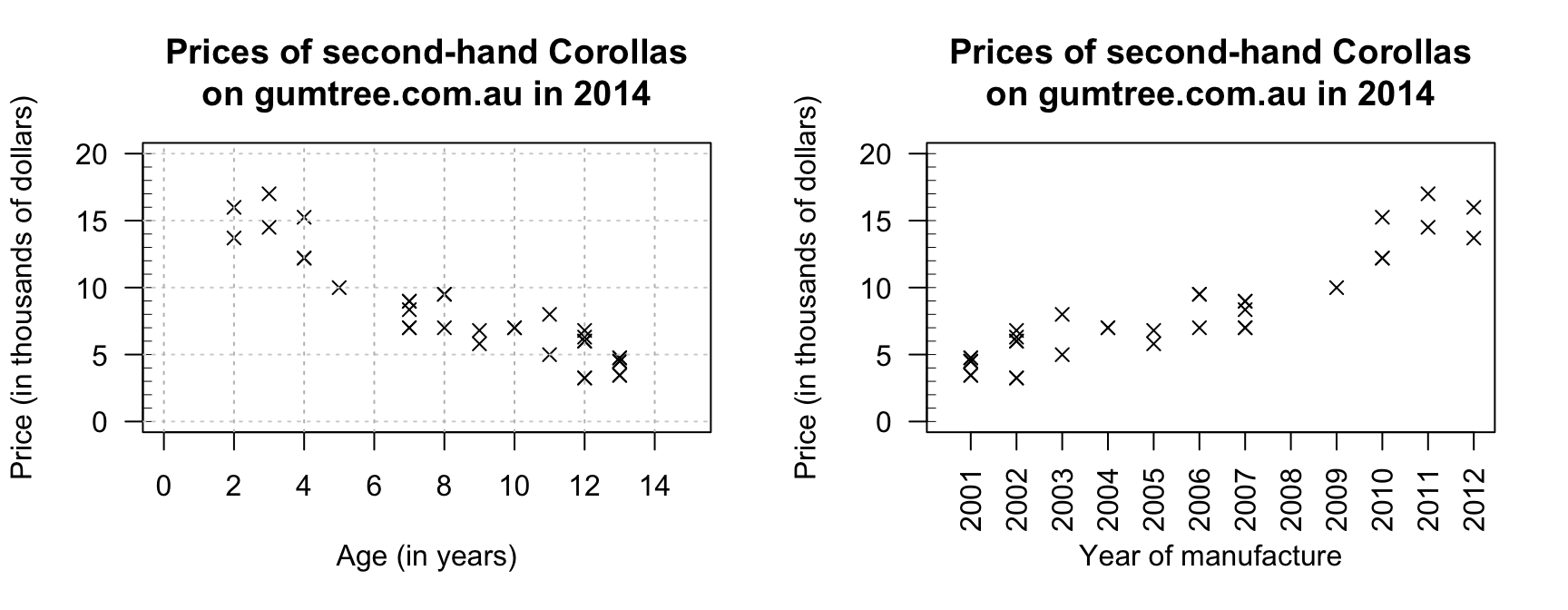

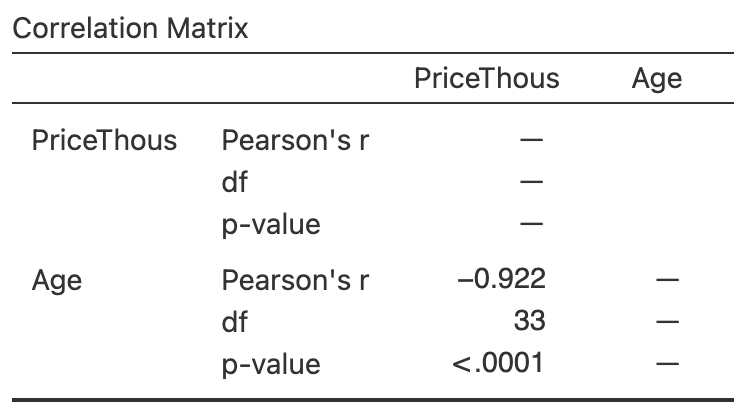

Exercise 33.22 [Dataset: Corollas]

I was wondering about how the age of second-hand cars impacts their price.

On 25 June 2014, I searched Gum Tree (an Australian online marketplace) for Toyota Corolla in the 'Cars, Vans & Utes' category.

I recorded the age and the price of each (second-hand) car from the first two pages of results that were returned.

I restricted the data to cars less than \(14\) years old at the time, removed one \(13\)-year-old Corolla advertised for sale for $\(390\,000\) (assuming this was an error), then produced the scatterplot in Fig. 33.28 (left panel).

FIGURE 33.28: The price of second-hand Toyota Corollas (\(n = 38\)) as advertised on Gum Tree on 25 June 2014, plotted against age (left) and year of manufacture (right).

- Describe the relationship displayed in the graph, in words.

- What else could influence the price of a second-hand Corolla besides the age?

- Consider a seven-year-old Corolla selling for $\(15\,000\). Would this be cheap or expensive? Explain.

- As stated, I removed one observation: a \(13\)-year-old Corolla for sale at $\(390\,000\). What do you think the price was meant to be listed as, by looking at the scatterplot? Explain.

- From the scatterplot, draw (if you can), or estimate by eye, an approximation of the regression line. Then, estimate the value of \(b_0\) (the intercept) from the line you drew. What does this mean? Do you think this value is meaningful?

- Estimate the value of \(b_1\) (the slope) from the line you drew. What does this mean? Do you think this value is meaningful?

- From the line you drew above, write down an estimate of the regression equation.

- What are the units of the intercept and the slope?

- Use the software output (Fig. 33.29) relating the price (in thousands of dollars) to age to write down the regression equation.

- Using the software output, write down the value of \(r\). Using this value of \(r\), compute the value of \(R^2\). What does this mean?

FIGURE 33.29: The jamovi output, analysing the Corolla data.

- Use the regression equation from the software output to estimate the sale price of a Corolla that is \(20\) years old, and explain your answer.

- Would a \(6\)-year-old Corolla advertised for sale at $\(15\,000\) appear to be good value? Estimate the sale price and explain your answer.

- Using the software output, perform a suitable hypothesis test to determine if there is evidence that lower prices are associated with older Corollas.

- Compute an approximate \(95\)% CI for the population slope (use the software output).

- I could have drawn a scatterplot with Price on the vertical axis and Year of manufacture on the horizontal axis (Fig. 33.28, right panel). For this graph:

  - What is the value of the correlation coefficient?

  - How would the value of \(R^2\) change?

  - How would the value of the slope change?

  - How would the value of the intercept change?

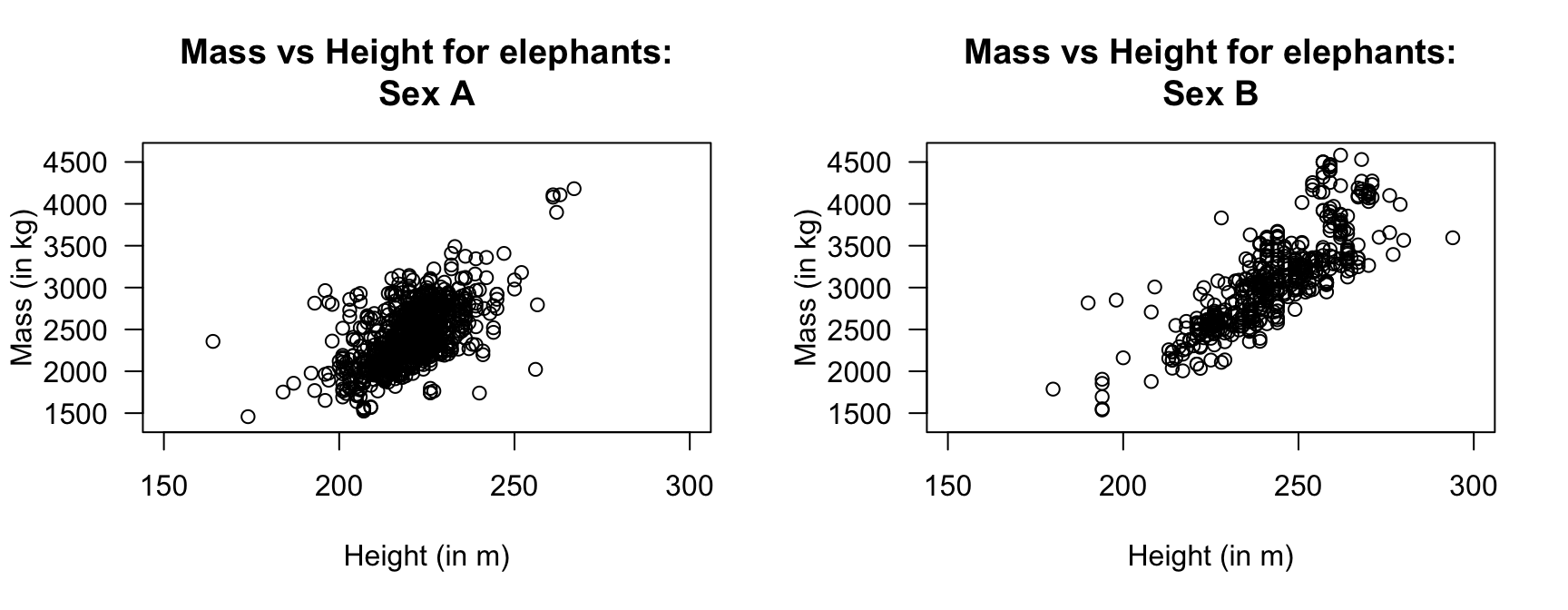

Exercise 33.23 [Dataset: Elephants]

Weighing elephants is not easy due to their size.

Height (to the shoulder), which is easier to measure, may be a useful proxy for the mass of the elephant (Lalande et al. 2022a).

Two scatterplots of some relevant data (Lalande et al. 2022b) are shown in Fig. 33.30.

- Which graph do you think is for males and which for female elephants? Explain.

- Which plot has a correlation coefficient closest to one? Explain.

- Use software to compute the correlation coefficients for each sex.

- For which sex is the height likely to be better for estimating mass? Explain.

- Use software to compute the regression equation for predicting mass from height, one line for each sex.

- Test to confirm the relationship between mass and height, for each sex.

- Use the regression lines to predict the mass of an elephant with a height of \(225\,\text{cm}\), for each sex.

- Discuss the statistical validity conditions.

FIGURE 33.30: Mass and height of elephants.

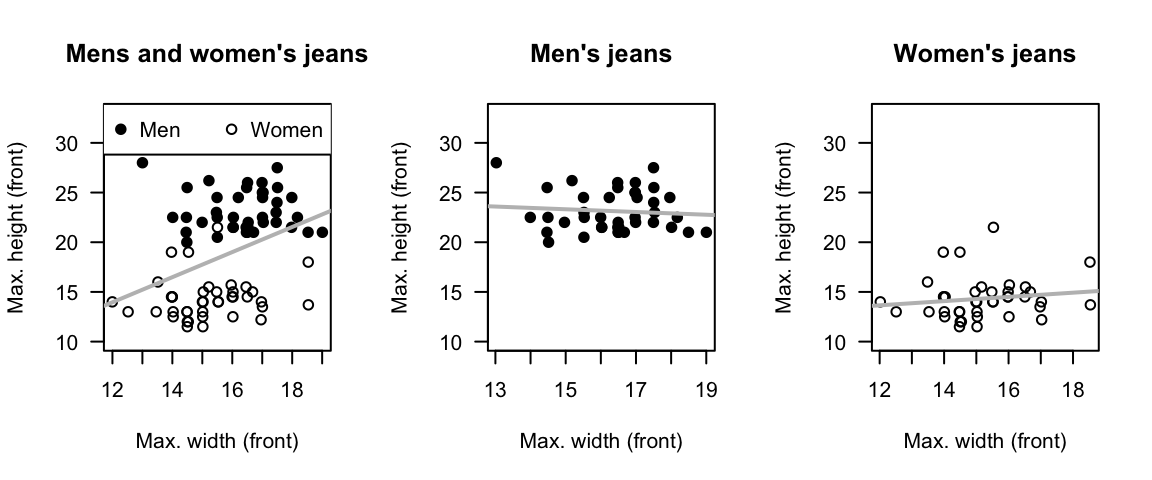

Exercise 33.24 [Dataset: Jeans]

Diehm and Thomas (2018) recorded data on the size of pockets in men's and women's jeans.

This exercise considers the correlation between the maximum widths and maximum heights of front pockets (Fig. 33.31).

- The correlation for all jeans is \(r = 0.38\), with \(P = 0.00051\). What does this mean?

- For men's jeans only, the correlation is \(r = -0.09\), with \(P = 0.59\). What does this mean?

- For women's jeans only, the correlation is \(r = 0.14\), with \(P = 0.38\). What does this mean?

- From the last three questions, how would you describe the relationship between the maximum widths and maximum heights of the front pockets of jeans?

FIGURE 33.31: The relationships between maximum widths and maximum heights of front pockets for all jeans (left), men's jeans only (centre) and women's jeans only (right).

Exercise 33.25 [Dataset: Typing]

The Typing dataset contains four variables: typing speed (mTS), typing accuracy (mAcc), age (Age), and sex (Sex) for \(1\,301\) students (Pinet et al. 2022).

Is there evidence of a linear relationship between a person's mean typing speed and mean accuracy?

Explain.